ABSTRACT DESIGN AND EVALUATION OF DECISION MAKING ALGORITHMS FOR INFORMATION SECURITY

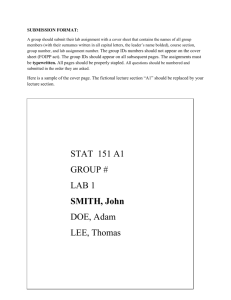

advertisement

ABSTRACT

Title of dissertation:

DESIGN AND EVALUATION OF DECISION MAKING

ALGORITHMS FOR INFORMATION SECURITY

Alvaro A. Cárdenas, Doctor of Philosophy, 2006

Dissertation directed by: Professor John S. Baras

Department of Electrical and Computer Engineering

The evaluation and learning of classifiers is of particular importance in several computer

security applications such as intrusion detection systems (IDSs), spam filters, and watermarking

of documents for fingerprinting or traitor tracing. There are however relevant considerations

that are sometimes ignored by researchers that apply machine learning techniques for security

related problems. In this work we identify and work on two problems that seem prevalent in

security-related applications. The first problem is the usually large class imbalance between

normal events and attack events. We address this problem with a unifying view of different

proposed metrics, and with the introduction of Bayesian Receiver Operating Characteristic (BROC) curves. The second problem to consider is the fact that the classifier or learning rule will

be deployed in an adversarial environment. This implies that good performance on average

might not be a good performance measure, but rather we look for good performance under the

worst type of adversarial attacks. We work on a general methodology that we apply for the

design and evaluation of IDSs and Watermarking applications.

DESIGN AND EVALUATION OF DECISION MAKING ALGORITHMS

FOR INFORMATION SECURITY

by

Alvaro A. Cárdenas

Dissertation submitted to the Faculty of the Graduate School of the

University of Maryland, College Park in partial fulfillment

of the requirements for the degree of

Doctor of Philosophy

2006

Advisory Committee:

Professor John S. Baras, Chair/Advisor

Professor Virgil D. Gligor

Professor Bruce Jacob

Professor Carlos Berenstein

Professor Nuno Martins

c Copyright by

Alvaro A. Cárdenas

2006

TABLE OF CONTENTS

List of Figures

iv

1

Introduction

1

2

Performance Evaluation Under the Class Imbalance Problem

3

I

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3

II

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3

III

Notation and Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4

IV

Evaluation Metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5

V

Graphical Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

9

VI

Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

18

3

4

5

Secure Decision Making: Defining the Evaluation Metrics and the Adversary

20

I

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

20

II

A Set of Design and Evaluation Guidelines . . . . . . . . . . . . . . . . . . .

20

III

A Black Box Adversary Model . . . . . . . . . . . . . . . . . . . . . . . . . .

22

IV

A White Box Adversary Model . . . . . . . . . . . . . . . . . . . . . . . . . .

32

V

Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

Performance Comparison of MAC layer Misbehavior Schemes

35

I

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

35

II

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

35

III

Problem Description and Assumptions . . . . . . . . . . . . . . . . . . . . . .

37

IV

Sequential Probability Ratio Test (SPRT) . . . . . . . . . . . . . . . . . . . .

38

V

Performance analysis of DOMINO . . . . . . . . . . . . . . . . . . . . . . . .

42

VI

Theoretical Comparison . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

46

VII Nonparametric CUSUM statistic . . . . . . . . . . . . . . . . . . . . . . . . .

46

VIII Experimental Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

48

IX

49

Conclusions and future work . . . . . . . . . . . . . . . . . . . . . . . . . . .

Secure Data Hiding Algorithms

52

I

52

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

ii

II

General Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

52

III

Additive Watermarking and Gaussian Attacks . . . . . . . . . . . . . . . . . .

54

IV

B.1

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . .

57

C.1

Minimization with respect to Re . . . . . . . . . . . . . . . .

58

C.2

Minimization with respect to Γ . . . . . . . . . . . . . . . .

58

Towards a Universal Adversary Model . . . . . . . . . . . . . . . . . . . . . .

62

Bibliography

70

iii

LIST OF FIGURES

2.1

Isoline projections of CID onto the ROC curve. The optimal CID value is

CID = 0.4565. The associated costs are C(0, 0) = 3 × 10−5 , C(0, 1) = 0.2156,

C(1, 0) = 15.5255 and C(1, 1) = 2.8487. The optimal operating point is PFA =

2.76 × 10−4 and PD = 0.6749. . . . . . . . . . . . . . . . . . . . . . . . . . .

10

2.2

As the cost ratio C increases, the slope of the optimal isoline decreases . . . . .

11

2.3

As the base-rate p decreases, the slope of the optimal isoline increases . . . . .

12

2.4

The PPV isolines in the ROC space are straight lines that depend only on θ.

The PPV values of interest range from 1 to p . . . . . . . . . . . . . . . . . .

13

The NPV isolines in the ROC space are straight lines that depend only on φ.

The NPV values of interest range from 1 to 1 − p . . . . . . . . . . . . . . . .

14

2.6

PPV and NPV isolines for the ROC of an IDS with p = 6.52 × 10−5 . . . . . .

15

2.7

B-ROC for the ROC of Figure 2.6. . . . . . . . . . . . . . . . . . . . . . . . .

15

2.8

Mapping of ROC to B-ROC . . . . . . . . . . . . . . . . . . . . . . . . . . .

16

2.9

An empirical ROC (ROC2 ) and its convex hull (ROC1 ) . . . . . . . . . . . . .

17

2.10 The B-ROC of the concave ROC is easier to interpret . . . . . . . . . . . . . .

17

2.11 Comparison of two classifiers . . . . . . . . . . . . . . . . . . . . . . . . . . .

18

2.12 B-ROCs comparison for the p of interest . . . . . . . . . . . . . . . . . . . . .

19

3.1

Probability of error for hi vs. p . . . . . . . . . . . . . . . . . . . . . . . . . .

28

3.2

The optimal operating point . . . . . . . . . . . . . . . . . . . . . . . . . . . .

29

3.3

Robust expected cost evaluation . . . . . . . . . . . . . . . . . . . . . . . . .

30

3.4

Robust B-ROC evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

32

4.1

Form of the least favorable pmf p∗1 for two different values of g. When g

approaches 1, p∗1 approaches p0 . As g decreases, more mass of p∗1 concentrated

towards the smaller backoff values. . . . . . . . . . . . . . . . . . . . . . . . .

41

Tradeoff curve between the expected number of samples for a false alarm

E[TFA ] and the expected number of samples for detection E[TD ]. For fixed a

and b, as g increases (low intensity of the attack) the time to detection or to

false alarms increases exponentially. . . . . . . . . . . . . . . . . . . . . . . .

42

2.5

4.2

iv

4.3

For K=3, the state of the variable cheat count can be represented as a Markov

chain with five states. When cheat count reaches the final state (4 in this case)

DOMINO raises an alarm. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

43

4.4

Exact and approximate values of p as a function of m. . . . . . . . . . . . . . .

45

4.5

DOMINO performance for K = 3, m ranges from 1 to 60. γ is shown explicitly.

As γ tends to either 0 or 1, the performance of DOMINO decreases. The SPRT

outperforms DOMINO regardless of γ and m. . . . . . . . . . . . . . . . . . .

46

DOMINO performance for various thresholds K, γ = 0.7 and m in the range

from 1 to 60. The performance of DOMINO decreases with increase of m. For

fixed γ, the SPRT outperforms DOMINO for all values of parameters K and m.

47

The best possible performance of DOMINO is when m = 1 and K changes in

order to accommodate for the desired level of false alarms. The best γ must be

chosen independently. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

47

Tradeoff curves for each of the proposed algorithms. DOMINO has parameters γ = 0.9 and m = 1 while K is the variable parameter. The nonparametric

CUSUM algorithm has as variable parameter c and the SPRT has b = 0.1 and

a is the variable parameter. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

50

Tradeoff curves for default DOMINO configuration with γ = 0.9, best performing DOMINO configuration with γ = 0.7 and SPRT. . . . . . . . . . . . . . . .

50

4.10 Tradeoff curves for best performing DOMINO configuration with γ = 0.7, best

performing CUSUM configuration with γ = 0.7 and SPRT. . . . . . . . . . . .

50

4.11 Comparison between theoretical and experimental results: theoretical analysis

with linear x-axis closely resembles the experimental results. . . . . . . . . . .

51

Let us define a = y − x1 for the detector. We can now see the Lagrangian

function over a, where the adversary tries to distribute the density h such that

L(λ, h) is maximized while satisfying the constraints (i.e., minimizing L(λ, h)

over λ. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

65

5.2

Piecewise linear decision function, where ρ(0) = ρ0 (0) = ρ1 (0) =

. . . . . .

66

5.3

The discontinuity problem in the Lagrangian is solved by using piecewise linear

continuous decision functions. It is now easy to shape the Lagrangian such that

the maxima created form a saddle point equilibrium. . . . . . . . . . . . . . .

66

5.4

New decision function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

67

5.5

With ρ defined in Figure 5.4 the Lagrangian is able to exhibit three local maxima, one of them at the point a = 0, which implies that the adversary will use

this point whenever the distortion constraints are too severe . . . . . . . . . . .

68

4.6

4.7

4.8

4.9

5.1

v

1

2

5.6

ρ¬ represents a decision stating that we do not possess enough information in

order to make a reliable selection between the two hypotheses. . . . . . . . . .

vi

69

Chapter 1

Introduction

Look if I can raise the money fast, can I set up my own lab?

-The Smithsonian Institution. Gore Vidal

The algorithms used to ensure several information security goals, such as authentication,

integrity and secrecy, have often been designed and analyzed with the help of formal mathematical models. One of the most successful examples is the use of theoretical cryptography for

encryption, integrity and authentication. By assuming that some basic primitives hold, (such

as the existence of one-way functions), some cryptographic algorithms can formally be proven

secure.

Information security models have however theoretical limits, since it cannot always be

proven that an algorithm satisfies (or not) certain security conditions. For example, as formalized by Harrison, Ruzzo, and Ullman, the access matrix model is undecidable and Rices

theorem implies that static analysis problems are also undecidable. Because of similar results

such as the undecidability of detecting computer viruses, there is reason to believe that several

intrusion detection problems are also undecidable.

Undecidability is not the only problem for being able to formally characterize the security

properties of an algorithm. Not only are some problems intractable, or undecidable, but also

there are certain inherent uncertainties in several security related problems such as biometrics,

data hiding (watermarking), fraud detection and spam filters that are impossible to factor out.

All algorithms trying to solve these problems will make a non-negligible amount of decision errors. These errors occur due to approximations and can consequently be formulated

as a tradeoff between the costs of operation (e.g., the necessary resources for their operation,

such as the number of false alarms) and the correctness of their output (e.g., the security level

achieved, or the probability of detection). It is however very difficult in practice to assess both:

the real costs of these security solutions and their actual security guarantees. Most of the research therefore relies on ad hoc solutions and heuristics that cannot be shared between security

fields trying to address these hard problems.

In this work we try to address the problem of providing a necessary framework in order to

reason about the security and the costs of an algorithm for solving problems where the decision

between two hypotheses cannot be made without errors.

Our framework is composed of two main parts.

Evaluation Metrics: We introduce in a unified framework several metrics that have been previously proposed in the literature. We give intuitive interpretations of them and provide

new metrics that address two of the main problems for decision algorithms. First, the

large class imbalance between the two hypotheses, and second, the uncertainty of several

parameters, including costs and the class imbalance severity.

Security Model: In order to reason formally about the security level of a decision algorithm,

we need to introduce a formal model for an adversary and the system being evaluated.

We therefore clearly define the feasible design space, and the properties our algorithm

1

need to satisfy by an evaluation metric. Then we model the considered adversary class

by clearly defining the information available to the adversary, the capabilities of the adversary and its goal. Finally, since the easiest way to break the security of a system is

to step outside the box, i.e., to break the rules under which the algorithm was evaluated,

we clearly identify the assumptions made in our model. These assumptions then play

an important part in the evaluation of the algorithm. Our tools to solve and analyze this

models are based on robust detection theory and game theory, and the aim is always the

same, minimize the advantage of the adversary (the advantage of the adversary is defined

according to the metric used), for any possible adversary in a given adversary class.

We apply our model to different problems in intrusion detection, selfish packet forwarding in ad hoc networks, selfish MAC layer misbehavior in wireless access points and watermarking algorithms. Our results show that formally modeling these problems and obtaining

the least favorable attack distribution can lead in the best cases to show how there is merit in

our framework, by outperforming previously proposed heuristic solutions (analytically and by

simulations), and in the worst cases they can be seen as pessimistic decisions based on risk

aversion.

The following is the layout of this dissertation. In chapter 2 we view in a unified framework traditional metrics used to evaluate the performance of intrusion detection systems and

introduce a new evaluation curve called the B-ROC curve. In chapter 3 we introduce the notion

of security of a decision algorithm and define the adversarial models that are going to be used

in the next chapters in order to design and evaluate decision making algorithms. In chapter

4 we compare the performance of two classification algorithms used for detecting MAC layer

misbehavior in wireless networks. This chapter is a validation of our approach, since it shows

how the design of classification algorithms using robust detection theory and formal adversarial

modeling can outperform theoretically and empirically previously proposed algorithms based

on heuristics. Finally in chapter 5 we apply our framework for the design and evaluation of

data hiding algorithms.

2

Chapter 2

Performance Evaluation Under the Class Imbalance Problem

For there seem to be many empty alarms in war

-Nicomachean Ethics, Aristotle

I

Overview

Classification accuracy in intrusion detection systems (IDSs) deals with such fundamental problems as how to compare two or more IDSs, how to evaluate the performance of an IDS,

and how to determine the best configuration of the IDS. In an effort to analyze and solve these

related problems, evaluation metrics such as the Bayesian detection rate, the expected cost, the

sensitivity and the intrusion detection capability have been introduced. In this chapter, we study

the advantages and disadvantages of each of these performance metrics and analyze them in a

unified framework. Additionally, we introduce the Bayesian Receiver Operating Characteristic

(B-ROC) curves as a new IDS performance tradeoff which combines in an intuitive way the

variables that are more relevant to the intrusion detection evaluation problem.

II

Introduction

Consider a company that, in an effort to improve its information technology security

infrastructure, wants to purchase either intrusion detector 1 (I DS 1 ) or intrusion detector 2

(I DS 2 ). Furthermore, suppose that the algorithms used by each IDS are kept private and therefore the only way to determine the performance of each IDS (unless some reverse engineering

is done [1]) is through empirical tests determining how many intrusions are detected by each

scheme while providing an acceptable level of false alarms. Suppose these tests show with high

confidence that I DS 1 detects one-tenth more attacks than I DS 2 but at the cost of producing

one hundred times more false alarms. The company needs to decide based on these estimates,

which IDS will provide the best return of investment for their needs and their operational environment.

This general problem is more concisely stated as the intrusion detection evaluation problem, and its solution usually depends on several factors. The most basic of these factors are the

false alarm rate and the detection rate, and their tradeoff can be intuitively analyzed with the

help of the receiver operating characteristic (ROC) curve [2, 3, 4, 5, 6]. However, as pointed

out in [7, 8, 9], the information provided by the detection rate and the false alarm rate alone

might not be enough to provide a good evaluation of the performance of an IDS. Therefore,

the evaluation metrics need to consider the environment the IDS is going to operate in, such

as the maintenance costs and the hostility of the operating environment (the likelihood of an

attack). In an effort to provide such an evaluation method, several performance metrics such

as the Bayesian detection rate [7], expected cost [8], sensitivity [10] and intrusion detection

capability [9], have been proposed in the literature.

Yet despite the fact that each of these performance metrics makes their own contribution to the analysis of intrusion detection systems, they are rarely applied in the literature when

3

proposing a new IDS. It is our belief that the lack of widespread adoption of these metrics stems

from two main reasons. Firstly, each metric is proposed in a different framework (e.g. information theory, decision theory, cryptography etc.) and in a seemingly ad hoc manner. Therefore

an objective comparison between the metrics is very difficult.

The second reason is that the proposed metrics usually assume the knowledge of some

uncertain parameters like the likelihood of an attack, or the costs of false alarms and missed

detections. Moreover, these uncertain parameters can also change during the operation of an

IDS. Therefore the evaluation of an IDS under some (wrongly) estimated parameters might not

be of much value.

A. Our Contributions

In this chapter, we introduce a framework for the evaluation of IDSs in order to address

the concerns raised in the previous section. First, we identify the intrusion detection evaluation

problem as a multi-criteria optimization problem. This framework will let us compare several

of the previously proposed metrics in a unified manner. To this end, we recall that there are

in general two ways to solve a multi-criteria optimization problem. The first approach is to

combine the criteria to be optimized in a single optimization problem. We then show how

the intrusion detection capability, the expected cost and the sensitivity metrics all fall into this

category. The second approach to solve a multi-criteria optimization problem is to evaluate a

tradeoff curve. We show how the Bayesian rates and the ROC curve analysis are examples of

this approach.

To address the uncertainty of the parameters assumed in each of the metrics, we then

present a graphical approach that allows the comparison of the IDS metrics for a wide range of

uncertain parameters. For the single optimization problem we show how the concept of isolines

can capture in a single value (the slope of the isoline) the uncertainties like the likelihood of an

attack and the operational costs of the IDS. For the tradeoff curve approach, we introduce a new

tradeoff curve we call the Bayesian ROC (B-ROC). We believe the B-ROC curve combines in

a single graph all the relevant (and intuitive) parameters that affect the practical performance of

an IDS.

In an effort to make this evaluation framework accessible to other researchers and in order

to complement our presentation, we started the development of a software application available

at [11] to implement the graphical approach for the expected cost and our new B-ROC analysis

curves. We hope this tool can grow to become a valuable resource for research in intrusion

detection.

III

Notation and Definitions

In this section we present the basic notation and definitions which we use throughout this

document.

We assume that the input to an intrusion detection system is a feature-vector x ∈ X . The

elements of x can include basic attributes like the duration of a connection, the protocol type,

the service used etc. It can also include specific attributes selected with domain knowledge

such as the number of failed logins, or if a superuser command was attempted. Examples of

x used in intrusion detection are sequences of system calls [12], sequences of user commands

[13], connection attempts to local hosts [14], proportion of accesses (in terms of TCP or UDP

packets) to a given port of a machine over a fixed period of time [15] etc.

4

Let I denote whether a given instance x was generated by an intrusion (represented by

I = 1 or simply I) or not (denoted as I = 0 or equivalently ¬I). Also let A denote whether

the output of an IDS is an alarm (denoted by A = 1 or simply A) or not (denoted by A = 0, or

equivalently ¬A). An IDS can then be defined as an algorithm I DS that receives a continuous

data stream of computer event features X = {x[1], x[2], . . . , } and classifies each input x[ j] as

being either a normal event or an attack i.e. I DS : X →{A, ¬A}. In this chapter we do not

address how the IDS is designed. Our focus will be on how to evaluate the performance of a

given IDS.

Intrusion detection systems are commonly classified as either misuse detection schemes

or anomaly detection schemes. Misuse detection systems use a number of attack signatures describing attacks; if an event feature x matches one of the signatures, an alarm is raised. Anomaly

detection schemes on the other hand rely on profiles or models of the normal operation of the

system. Deviations from these established models raise alarms.

The empirical results of a test for an IDS are usually recorded in terms of how many

attacks were detected and how many false alarms were produced by the IDS, in a data set

containing both normal data and attack data. The percentage of alarms out of the total number

of normal events monitored is referred to as the false alarm rate (or the probability of false

alarm), whereas the percentage of detected attacks out of the total attacks is called the detection

rate (or probability of detection) of the IDS. In general we denote the probability of false alarm

and the probability of detection (respectively) as:

PFA ≡ Pr[A = 1|I = 0]

and

PD ≡ Pr[A = 1|I = 1]

(2.1)

These empirical results are sometimes shown with the help of the ROC curve; a graph

whose x-axis is the false alarm rate and whose y-axis is the detection rate. The graphs of

misuse detection schemes generally correspond to a single point denoting the performance of

the detector. Anomaly detection schemes on the other hand, usually have a monitored statistic

which is compared to a threshold τ in order to determine if an alarm should be raised or not.

Therefore their ROC curve is obtained as a parametric plot of the probability of false alarm

(PFA ) versus the probability of detection (PD ) (with parameter τ) as in [2, 3, 4, 5, 6].

IV

Evaluation Metrics

In this section we first introduce metrics that have been proposed in previous work. Then

we discuss how we can use these metrics to evaluate the IDS by using two general approaches,

that is the expected cost and the tradeoff approach. In the expected cost approach, we give intuition of the expected cost metric by relating all the uncertain parameters (such as the probability

of an attack) to a single line that allows the IDS operator to easily find the optimal tradeoff. In

the second approach, we identify the main parameters that affect the quality of the performance

of the IDS. This will allow us to later introduce a new evaluation method that we believe better

captures the effect of these parameters than all previously proposed methods.

A. Background Work

Expected Cost

In this section we present the expected cost of an IDS by combining some of the ideas

originally presented in [8] and [16]. The expected cost is used as an evaluation method for

5

State of the system

No Intrusion (I = 0)

Intrusion (I = 1)

Detector’s report

No Alarm (A=0) Alarm (A=1)

C(0, 0)

C(0, 1)

C(1, 0)

C(1, 1)

Table 2.1: Costs of the IDS reports given the state of the system

IDSs in order to assess the investment of an IDS in a given IT security infrastructure. In

addition to the rates of detection and false alarm, the expected cost of an IDS can also depend

on the hostility of the environment, the IDS operational costs, and the expected damage done

by security breaches.

A quantitative measure of the consequences of the output of the IDS to a given event,

which can be an intrusion or not are the costs shown in Table 2.1. Here C(0, 1) corresponds to

the cost of responding as though there was an intrusion when there is none, C(1, 0) corresponds

to the cost of failing to respond to an intrusion, C(1, 1) is the cost of acting upon an intrusion

when it is detected (which can be defined as a negative value and therefore be considered as a

profit for using the IDS), and C(0, 0) is the cost of not reacting to a non-intrusion (which can

also be defined as a profit, or simply left as zero.)

Adding costs to the different outcomes of the IDS is a way to generalize the usual tradeoff

between the probability of false alarm and the probability of detection to a tradeoff between the

expected cost for a non-intrusion

R(0, PFA ) ≡ C(0, 0)(1 − PFA ) +C(0, 1)PFA

and the expected cost for an intrusion

R(1, PD ) ≡ C(1, 0)(1 − PD ) +C(1, 1)PD

It is clear that if we only penalize errors of classification with unit costs (i.e. if C(0, 0) =

C(1, 1) = 0 and C(0, 1) = C(1, 0) = 1) the expected cost for non-intrusion and the expected

cost for intrusion become respectively, the false alarm rate and the detection rate.

The question of how to select the optimal tradeoff between the expected costs is still

open. However, if we let the hostility of the environment be quantified by the likelihood of

an intrusion p ≡ Pr[I = 1] (also known as the base-rate [7]), we can average the expected

non-intrusion and intrusion costs to give the overall expected cost of the IDS:

E[C(I, A)] = R(0, PFA )(1 − p) + R(1, PD )p

(2.2)

It should be pointed out that R() and E[C(I, A)] are also known as the risk and Bayesian

risk functions (respectively) in Bayesian decision theory.

Given an IDS, the costs from Table 2.1 and the likelihood of an attack p, the problem now

is to find the optimal tradeoff between PD and PFA in such a way that E[C(I, A)] is minimized.

The Intrusion Detection Capability

The main motivation for introducing the intrusion detection capability CID as an evaluation metric originates from the fact that the costs in Table 2.1 are chosen in a subjective way

[9]. Therefore the authors propose the use of the intrusion detection capability as an objective

6

metric motivated by information theory:

CID =

I(I; A)

H(I)

(2.3)

where I and H respectively denote the mutual information and the entropy [17]. The H(I) term

in the denominator is a normalizing factor so that the value of CID will always be in the [0, 1]

interval. The intuition behind this metric is that by fine tuning an IDS based on CID we are

finding the operating point that minimizes the uncertainty of whether an arbitrary input event x

was generated by an intrusion or not.

The main drawback of CID is that it obscures the intuition that is to be expected when

evaluating the performance of an IDS. This is because the notion of reducing the uncertainty

of an attack is difficult to quantify in practical values of interest such as false alarms or detection rates. Information theory has been very useful in communications because the entropy

and mutual information can be linked to practical quantities, like the number of bits saved by

compression (source coding) or the number of bits of redundancy required for reliable communications (channel coding). However it is not clear how these metrics can be related to

quantities of interest for the operator of an IDS.

The Base-Rate Fallacy and Predictive Value Metrics

In [7] Axelsson pointed out that one of the causes for the large amount of false alarms

that intrusion detectors generate is the enormous difference between the amount of normal

events compared to the small amount of intrusion events. Intuitively, the base-rate fallacy

states that because the likelihood of an attack is very small, even if an IDS fires an alarm,

the likelihood of having an intrusion remains relatively small. Formally, when we compute

the posterior probability of intrusion (a quantity known as the Bayesian detection rate, or the

positive predictive value (PPV)) given that the IDS fired an alarm, we obtain:

PPV ≡ Pr[I = 1|A = 1]

Pr[A = 1|I = 1]Pr[I = 1]

=

Pr[A = 1|I = 1]Pr[I = 1] + Pr[A = 1|I = 0]Pr[I = 0]

PD p

=

(PD − PFA )p + PFA

(2.4)

Therefore, if the rate of incidence of an attack is very small, for example on average only

1 out of 105 events is an attack (p = 10−5 ), and if our detector has a probability of detection

of one (PD = 1) and a false alarm rate of 0.01 (PFA = 0.01), then Pr[I = 1|A = 1] = 0.000999.

That is on average, of 1000 alarms, only one would be a real intrusion.

It is easy to demonstrate that the PPV value is maximized when the false alarm rate of

our detector goes to zero, even if the detection rate also tends to zero! Therefore as mentioned

in [7] we require a trade-off between the PPV value and the negative predictive value (NPV):

NPV ≡ Pr[I = 0|A = 0] =

(1 − p)(1 − PFA )

p(1 − PD ) + (1 − p)(1 − PFA )

B. Discussion

7

(2.5)

The concept of finding the optimal tradeoff of the metrics used to evaluate an IDS is an

instance of the more general problem of multi-criteria optimization. In this setting, we want

to maximize (or minimize) two quantities that are related by a tradeoff, which can be done via

two approaches. The first approach is to find a suitable way of combining these two metrics in

a single objective function (such as the expected cost) to optimize. The second approach is to

directly compare the two metrics via a trade-off curve.

We therefore classify the above defined metrics into two general approaches that will

be explored in the rest of this chapter: the minimization of the expected cost and the tradeoff

approach. We consider these two approaches as complimentary tools for the analysis of IDSs,

each providing its own interpretation of the results.

Minimization of the Expected Cost

Let ROC denote the set of allowed (PFA , PD ) pairs for an IDS. The expected cost approach

will include any evaluation metric that can be expressed as

r∗ =

min

(PFA ,PD )∈ROC

E[C(I, A)]

(2.6)

where r∗ is the expected cost of the IDS. Given I DS 1 with expected cost r1∗ and an I DS 2

with expected cost r2∗ , we can say I DS 1 is better than I DS 2 for our operational environment

if r1∗ < r2∗ .

We now show how CID , and the tradeoff between the PPV and NPV values can be expressed as an expected costs problems. For the CID case note that the entropy of an intrusion

H(I) is independent of our optimization parameters (PFA , PD ), therefore we have:

∗

(PFA

, PD∗ ) = arg

= arg

max

(PFA ,PD )∈ROC

max

I (I; A)

H(I)

I(I; A)

(PFA ,PD )∈ROC

= arg

= arg

min

H(I|A)

min

E[− log Pr[I|A]]

(PFA ,PD )∈ROC

(PFA ,PD )∈ROC

It is now clear that CID is an instance of the expected cost problem with costs given by

C(i, j) = − log Pr[I = i|A = j]. By finding the costs of CID we are making the CID metric more

intuitively appealing, since any optimal point that we find for the IDS will have an explanation

in terms of cost functions (as opposed to the vague notion of diminishing the uncertainty of the

intrusions).

Finally, in order to combine the PPV and the NPV in an average cost metric, recall that

we want to maximize both Pr[I = 1|A = 1] and Pr[I = 0|A = 0]. Our average gain for each

operating point of the IDS is therefore

ω1 Pr[I = I|A = 1]Pr[A = 1] + ω2 Pr[I = 0|A = 0]Pr[A = 0]

where ω1 (ω2 ) is a weight representing a preference towards maximizing PPV (NPV). This

equation is equivalent to the minimization of

−ω1 Pr[I = I|A = 1]Pr[A = 1] − ω2 Pr[I = 0|A = 0]Pr[A = 0]

8

(2.7)

Comparing equation (2.7) with equation (2.2), we identify the costs as being C(1, 1) = −ω1 ,

C(0, 0) = −ω2 and C(0, 1) = C(1, 0) = 0. Relating the predictive value metrics (PPV and NPV)

with the expected cost problem will allow us to examine the effects of the base-rate fallacy on

the expected cost of the IDS in future sections.

IDS classification tradeoffs

An alternate approach in evaluating intrusion detection systems is to directly compare the

tradeoffs in the operation of the system by a tradeoff curve, such as ROC, or DET curves [18] (a

reinterpretation of the ROC curve where the y-axis is 1 − PD , as opposed to PD ). As mentioned

in [7], another tradeoff to consider is between the PPV and the NPV values. However, we do

not know of any tradeoff curves that combine these two values to aid the operator in choosing

a given operating point.

We point out in section B that a tradeoff between PFA and PD (as in the ROC curves) as

well as a tradeoff between PPV and NPV can be misleading for cases where p is very small,

since very small changes in the PFA and NPV values for our points of interest will have drastic

performance effects on the PD and the PPV values. Therefore, in the next section we introduce

the B-ROC as a new tradeoff curve between PD and PPV.

The class imbalance problem

A way to relate our approach with traditional techniques used in machine learning is to

identify the base-rate fallacy as just another instance of the class imbalance problem. The term

class imbalance refers to the case when in a classification task, there are many more instances

of some classes than others. The problem is that under this setting, classifiers in general perform

poorly because they tend to concentrate on the large classes and disregard the ones with few

examples.

Given that this problem is prevalent in a wide range of practical classification problems,

there has been recent interest in trying to design and evaluate classifiers faced with imbalanced

data sets [19, 20, 21].

A number of approaches on how to address these issues have been proposed in the literature. Ideas such as data sampling methods, one-class learning (i.e. recognition-based learning),

and feature selection algorithms, appear to be the most active research directions for learning

classifiers. On the other hand the issue of how to evaluate binary classifiers in the case of class

imbalances appears to be dominated by the use of ROC curves [22, 23] (and to a lesser extent,

by error curves [24]).

V

Graphical Analysis

We now introduce a graphical framework that allows the comparison of different metrics in the analysis and evaluation of IDSs. This graphical framework can be used to adaptively

change the parameters of the IDS based on its actual performance during operation. The framework also allows for the comparison of different IDSs under different operating environments.

Throughout this section we use one of the ROC curves analyzed in [8] and in [9]. Mainly

the ROC curve describing the performance of the COLUMBIA team intrusion detector for the

1998 DARPA intrusion detection evaluation [25]. Unless otherwise stated, we assume for our

analysis the base-rate present in the DARPA evaluation which was p = 6.52 × 10−5 .

9

A. Visualizing the Expected Cost: The Minimization Approach

The biggest drawback of the expected cost approach is that the assumptions and information about the likelihood of attacks and costs might not be known a priori. Moreover, these

parameters can change dynamically during the system operation. It is thus desirable to be able

to tune the uncertain IDS parameters based on feedback from its actual system performance in

order to minimize E[C(I, A)].

We select the use of ROC curves as the basic 2-D graph because they illustrate the behavior of a classifier without regard to the uncertain parameters, such as the base-rate p and the

operational costs C(i, j). Thus the ROC curve decouples the classification performance from

these factors [26]. ROC curves are also general enough such that they can be used to study

anomaly detection schemes and misuse detection schemes (a misuse detection scheme has only

one point in the ROC space).

CID with p=6.52E−005

0.8

0.5

6

0.

0.5

0.7

0.4

0.4

0.5

1

0.6

0

65

.45

0.4

0.3

0.3

0.3

0.4

PD

0.5

0.4

0.2

0.3

0.2

0.2

0.

2

0.3

0.1

0.1

0.2

0.1

0.1

0

0.2

0.4

0.6

PFA

0.8

1

−3

x 10

Figure 2.1: Isoline projections of CID onto the ROC curve. The optimal CID value is CID =

0.4565. The associated costs are C(0, 0) = 3 × 10−5 , C(0, 1) = 0.2156, C(1, 0) = 15.5255 and

C(1, 1) = 2.8487. The optimal operating point is PFA = 2.76 × 10−4 and PD = 0.6749.

In the graphical framework, the relation of these uncertain factors with the ROC curve of

an IDS will be reflected in the isolines of each metric, where isolines refer to lines that connect

pairs of false alarm and detection rates such that any point on the line has equal expected

cost. The evaluation of an IDS is therefore reduced to finding the point of the ROC curve that

intercepts the optimal isoline of the metric (for signature detectors the evaluation corresponds

to finding the isoline that intercepts their single point in the ROC space and the point (0,0) or

(1,1)). In Figure 2.1 we can see as an example the isolines of CID intercepting the ROC curve

of the 1998 DARPA intrusion detection evaluation.

One limitation of the CID metric is that it specifies the costs C(i, j) a priori. However,

in practice these costs are rarely known in advance and moreover the costs can change and be

dynamically adapted based on the performance of the IDS. Furthermore the nonlinearity of CID

makes it difficult to analyze the effect different p values will have on CID in a single 2-D graph.

To make the graphical analysis of the cost metrics as intuitive as possible, we will assume from

10

now on (as in [8]) that the costs are tunable parameters and yet once a selection of their values

is made, they are constant values. This new assumption will let us at the same time see the

effect of different values of p in the expected cost metric.

Under the assumption of constant costs, we can see that the isolines for the expected cost

E[C(I, A)] are in fact straight lines whose slope depends on the ratio between the costs and the

likelihood ratio of an attack. Formally, if we want the pair of points (PFA1 , PD1 ) and (PFA2 , PD2 )

to have the same expected cost, they must be related by the following equation [27, 28, 26]:

mC,p ≡

1 − p C(0, 1) −C(0, 0) 1 − p 1

PD2 − PD1

=

=

PFA1 − PFA2

p C(1, 0) −C(1, 1)

p C

(2.8)

where in the last equality we have implicitly defined C to be the ratio between the costs, and

mC,p to be the slope of the isoline. The set of isolines of E[C(I, A)] can be represented by

I SO E = {mC,p × PFA + b : b ∈ [0, 1]}

(2.9)

For fixed C and p, it is easy to prove that the optimal operating point of the ROC is the

point where the ROC intercepts the isoline in I SO E with the largest b (note however that there

are ROC curves that can have more than one optimal point.) The optimal operating point in the

ROC is therefore determined only by the slope of the isolines, which in turn is determined by

p and C. Therefore we can readily check how changes in the costs and in the likelihood of an

attack will impact the optimal operating point.

Isolines of the expected cost under different C values (with p=6.52×10−005)

0.8

0.7

0.6

C=58.82

PD

0.5

C=10.0

0.4

0.3

0.2

0.1

0

C=2.50

0

0.2

0.4

0.6

PFA

0.8

1

−3

x 10

Figure 2.2: As the cost ratio C increases, the slope of the optimal isoline decreases

Effect of the Costs

In Figure 2.2, consider the operating point corresponding to C = 58.82, and assume that

after some time, the operators of the IDS realize that the number of false alarms exceeds their

response capabilities. In order to reduce the number of false alarms they can increase the cost

of a false alarm C(0, 1) and obtain a second operating point at C = 10. If however the situation

11

persists (i.e. the number of false alarms is still much more than what operators can efficiently

respond to) and therefore they keep increasing the cost of a false alarm, there will be a critical

slope mc such that the intersection of the ROC and the isoline with slope mc will be at the point

(PFA , PD ) = (0, 0). The interpretation of this result is that we should not use the IDS being

evaluated since its performance is not good enough for the environment it has been deployed

in. In order to solve this problem we need to either change the environment (e.g. hire more IDS

operators) or change the IDS (e.g. shop for a more expensive IDS).

The Base-Rate Fallacy Implications on the Costs of an IDS

A similar scenario occurs when the likelihood of an attack changes. In Figure 2.3 we can

see how as p decreases, the optimal operating point of the IDS tends again to (PFA , PD ) = (0, 0)

(again the evaluator must decide not to use the IDS for its current operating environment).

Therefore, for small base-rates the operation of an IDS will be cost efficient only if we have

an appropriate large C∗ such that mC∗ ,p∗ ≤ mc . A large C∗ can be explained if cost of a false

alarm much smaller than the cost of a missed detection: C(1, 0) C(0, 1) (e.g. the case of a

government network that cannot afford undetected intrusions and has enough resources to sort

through the false alarms).

Isolines of the probability of error under different p values

0.8

0.7

0.6

p=0.01

p=0.001

0.5

PD

p=0.1

0.4

0.3

0.2

p=0.0001

0.1

0

0

0.2

0.4

0.6

PFA

0.8

1

−3

x 10

Figure 2.3: As the base-rate p decreases, the slope of the optimal isoline increases

Generalizations

This graphical method of cost analysis can also be applied to other metrics in order to get

some insight into the expected cost of the IDS. For example in [10], the authors define an IDS

with input space X to be σ − sensitive if there exists an efficient algorithm with the same input

E ≥ σ. This metric can be used to find the optimal

space E : X → {¬A, A}, such that PDE − PFA

point of an ROC because it has a very intuitive explanation: as long as the rate of detected

intrusions increases faster than the rate of false alarms, we keep moving the operating point

12

of the IDS towards the right in the ROC. The optimal sensitivity problem for an IDS with a

receiver operating characteristic ROC is thus:

max

(PFA ,PD )∈ROC

PD − PFA

(2.10)

It is easy to show that this optimal sensitivity point is the same optimal point obtained with the

isolines method for mC,p = 1 (i.e. C = (1 − p)/p).

B. The Bayesian Receiver Operating Characteristic: The Tradeoff Approach

PPV = 1

1

PPV =

p

p + (1 − p)tanθ

0.9

0.8

0.7

PD

0.6

0.5

θ

0.4

PPV = p

0.3

0.2

0.1

0

0

0.1

0.2

0.3

0.4

0.5

PFA

0.6

0.7

0.8

0.9

1

Figure 2.4: The PPV isolines in the ROC space are straight lines that depend only on θ. The

PPV values of interest range from 1 to p

Although the graphical analysis introduced so far can be applied to analyze the cost

efficiency of several metrics, the intuition for the tradeoff between the PPV and the NPV is

still not clear. Therefore we now extend the graphical approach by introducing a new pair of

isolines, those of the PPV and the NPV metrics.

Lemma 1:

and only if

Two sets of points (PFA1 , PD1 ) and (PFA2 , PD2 ) have the same PPV value if

PFA2 PFA1

=

= tan θ

(2.11)

PD2

PD1

where θ is the angle between the line PFA = 0 and the isoline. Moreover the PPV value of an

isoline at angle θ is

p

PPVθ,p =

(2.12)

p + (1 − p) tan θ

Similarly, two set of points (PFA1 , PD1 ) and (PFA2 , PD2 ) have the same NPV value if and only if

1 − PD1

1 − PD2

=

= tan φ

1 − PFA1 1 − PFA2

13

(2.13)

NPV = 1

1

NPV =

0.9

0.8

φ

1−p

p(tanφ − 1) + 1

0.7

PD

0.6

0.5

NPV = 1 − p

0.4

0.3

0.2

0.1

0

0

0.1

0.2

0.3

0.4

0.5

PFA

0.6

0.7

0.8

0.9

1

Figure 2.5: The NPV isolines in the ROC space are straight lines that depend only on φ. The

NPV values of interest range from 1 to 1 − p

where φ is the angle between the line PD = 1 and the isoline. Moreover the NPV value of an

isoline at angle φ is

1− p

(2.14)

NPVφ,p =

p(tan φ − 1) + 1

Figures 2.4 and 2.5 show the graphical interpretation of Lemma 1. It is important to note

the range of the PPV and NPV values as a function of their angles. In particular notice that as θ

goes from 0◦ to 45◦ (the range of interest), the value of PPV changes from 1 to p. We can also

see from Figure 2.5 that as φ ranges from 0◦ to 45◦ , the NPV value changes from one to 1 − p.

If p is very small, then NPV ≈ 1.

Figure 2.6 shows the application of Fact 1 to a typical ROC curve of an IDS. In this figure

we can see the tradeoff of four variables of interest: PFA , PD , PPV , and NPV . Notice that if

we choose the optimal operating point based on PFA and PD , as in the typical ROC analysis, we

might obtain misleading results because we do not know how to interpret intuitively very low

false alarm rates, e.g. is PFA = 10−3 much better than PFA = 5 × 10−3 ? The same reasoning

applies to the study of PPV vs. NPV as we cannot interpret precisely small variations in NPV

values, e.g. is NPV = 0.9998 much better than NPV = 0.99975? Therefore we conclude that

the most relevant metrics to use for a tradeoff in the performance of a classifier are PD and PPV,

since they have an easily understandable range of interest.

However, even when you select as tradeoff parameters the PPV and PD values, the isoline

analysis shown in Figure 2.6 has still one deficiency, and it is the fact that there is no efficient

way to account for the uncertainty of p. In order to solve this problem we introduce the B-ROC

as a graph that shows how the two variables of interest: PD and PPV are related under different

severity of class imbalances. In order to follow the intuition of the ROC curves, instead of using

PPV for the x-axis we prefer to use 1-PPV . We use this quantity because it can be interpreted

as the Bayesian false alarm rate: BFA ≡ Pr[C = 0|A = 1]. For example, for IDSs BFA can be a

14

1

PPV = 0.5

PPV = 0.2

PPV = 0.1

ROC

0.9

98

0.999

0.8

0.7

0.8

PD

0.1

0.2

0.5

0.4

0.3

0.7

0.99998

6

999

0.6

0.9

0.5

94

99

0.4

0.9

0.3

0.6

0.2

0.1

ROC

0.5

PD

PD0.4

0

0.1

0.2

0.3

0.4

0.5

PFA

0.6

0.7

0.8

0.9

0.99996

NPV

= 0.99996

0.3

Zoom of the

Area of Interest

0.2

0.1

0

0

0.99994

NPV

= 0.99994

0

0.1

0.2

0.3

0.4

0.5

PFA

0.6

0.7

0.8

0.9

PF A

1

−3

x 10

×10−3

Figure 2.6: PPV and NPV isolines for the ROC of an IDS with p = 6.52 × 10−5

1

0.9

p=0.1

0.8

0.7

0.6

p=1×10−3

PD

p=0.01

0.5

p=1×10−6

p=1×10−4

0.4

p=1×10−5

0.3

p=1×10−7

0.2

0.1

0

0

0.2

0.4

0.6

0.8

BFA

Figure 2.7: B-ROC for the ROC of Figure 2.6.

15

1

1

measure of how likely it is, that the operators of the detection system will loose their time each

time they respond to an alarm. Figure 2.7 shows the B-ROC for the ROC presented in Figure

2.6. Notice also how the values of interest for the x-axis have changed from [0, 10−3 ] to [0, 1].

1

1

PD

PD

0

1

PFA

0

BFA

1−p

Figure 2.8: Mapping of ROC to B-ROC

In order to be able to interpret the B-ROC curves, Figure 2.8 shows how the ROC points

map to points in the B-ROC. The vertical line defined by 0 < PD ≤ 1 and PFA = 0 in the ROC

maps exactly to the same vertical line 0 < PD ≤ 1 and BFA = 0 in the B-ROC. Similarly, the top

horizontal line 0 ≤ PFA ≤ 1 and PD = 1 maps to the line 0 ≤ BFA ≤ 1− p and PD = 1. A classifier

that performs random guessing is represented in the ROC as the diagonal line PD = PFA , and

this random guessing classifier maps to the vertical line defined by BFA = 1 − p and PD > 0

in the B-ROC. Finally, to understand where the point (0, 0) in the ROC maps into the B-ROC,

let α and f (α) denote PFA and the corresponding PD in the ROC curve. Then, as the false

alarm rate α tends to zero (from the right), the Bayesian false alarm rate tends to a value that

depends on p and the slope of the ROC close to the point (0, 0). More specifically, if we let

f 0 (0+ ) = limα→0+ f 0 (α), then:

lim BFA = lim

α→0+

α→0+

1− p

α(1 − p)

=

0

p f (α) + α(1 − p) p( f (0+ ) − 1) + 1

It is also important to recall that a necessary condition for a classifier to be optimal, is

that its ROC curve should be concave. In fact, given any non-concave ROC, by following

Neyman-Pearson theory, you can always get a concave ROC curve by randomizing decisions

between optimal points [27]. This idea has been recently popularized in the machine learning

community by the notion of the ROC convex hull [26].

The importance of this observation is that in order to guarantee that the B-ROC is a well

defined continuous and non-decreasing function, we map only concave ROC curves to B-ROCs.

16

1

0.9

0.8

ROC

0.7

1

ROC

PD

0.6

2

0.5

0.4

0.3

0.2

0.1

0

0

0.2

0.4

0.6

0.8

1

PFA

Figure 2.9: An empirical ROC (ROC2 ) and its convex hull (ROC1 )

1

0.9

0.8

B-ROC1

0.7

B-ROC2

P

D

0.6

0.5

0.4

0.3

0.2

0.1

0

0.75

0.8

0.85

0.9

0.95

1

BFA

Figure 2.10: The B-ROC of the concave ROC is easier to interpret

17

In Figures 2.9 and 2.10 we show the only example in this document of the type of B-ROC curve

that you can get when you do not consider a concave ROC.

1

0.9

0.8

ROC

1

0.7

PD

0.6

ROC

0.5

2

0.4

0.3

0.2

0.1

0

0

0.2

0.4

0.6

0.8

1

PFA

Figure 2.11: Comparison of two classifiers

We also point out the fact that the B-ROC curves can be very useful for the comparison

of classifiers. A typical comparison problem by using ROCs is shown in Figure 2.11. Several

ideas have been proposed in order to solve this comparison problem. For example by using

decision theory as in [29, 26] we can find the optimal classifier between the two by assuming a

given prior p and given misclassification costs. However, a big problem with this approach is

that the misclassification costs are sometimes uncertain and difficult to estimate a priori. With

a B-ROC on the other hand, you can get a better comparison of two classifiers without the

assumption of any misclassification costs, as can be seen in Figure 2.12.

VI

Conclusions

We believe that the B-ROC provides a better way to evaluate and compare classifiers

in the case of class imbalances or uncertain values of p. First, for selecting operating points

in heavily imbalanced environments, B-ROCs use tradeoff parameters that are easier to understand than the variables considered in ROC curves (they provide better intuition for the

performance of the classifier). Second, since the exact class distribution p might not be known

a priori, or accurately enough, the B-ROC allows the plot of different curves for the range of

interest of p. Finally, when comparing two classifiers, there are cases in which by using the

B-ROC, we do not need cost values in order to decide which classifier would be better for given

values of p. Note also that B-ROCs consider parameters that are directly related to exact quantities that the operator of a classifier can measure. In contrast, the exact interpretation of the

expected cost of a classifier is more difficult to relate to the real performance of the classifier

(the costs depend in many other unknown factors).

18

p=1×10−3

1

0.9

0.8

0.7

PD

0.6

B−ROC1

0.5

0.4

0.3

B−ROC2

0.2

0.1

0

0

0.2

0.4

0.6

0.8

1

BFA

Figure 2.12: B-ROCs comparison for the p of interest

19

Chapter 3

Secure Decision Making: Defining the Evaluation Metrics and the Adversary

They did not realize that because of the quasi-reciprocal and circular nature of all

Improbability calculations, anything that was Infinitely Improbable was actually very likely to

happen almost immediately.

-Life the Universe and Everything. Douglas Adams

I

Overview

As we have seen in the previous chapter, the traditional evaluation of IDSs assume that

the intruder will behave similarly before and after the deployment and configuration of the IDS

(i.e. during the evaluation it is assumed that the intruder will be non-adaptive). In practice

however this assumption does not hold, since once an IDS is deployed, intruders will adapt and

try to evade detection or launch attacks against the IDS.

This lack of a proper adversarial model is a big problem for the evaluation of any decision

making algorithm used in security applications, since without the definition of an adversary, we

cannot reason about and much less measure the security of the algorithm.

We therefore layout in this chapter the overall design and evaluation goals that will be

used throughout the rest of this dissertation.

The basic idea is to introduce a formal framework for reasoning about the performance

and security of a decision making algorithm. In particular we do not want to find or design the

best performing decision making algorithm on average, but the algorithm that performs best

against the worst type of attacks.

The chapter layout is the following. In section II we introduce a general set of guidelines

for the design and evaluation of decision making algorithms. In section III we introduce a

black box adversary model. This model is very simple yet very useful and robust for cases

where having an exact adversarial model is intractable. Finally in section IV we introduce a

detailed model of an adversary which we will use in chapters 4 and 5.

II

A Set of Design and Evaluation Guidelines

In this chapter we focus on designing a practical methodology for dealing with attackers.

In particular we propose the use of a framework where each of these components is clearly

defined:

Desired Properties Intuitive definition of the goal of the system.

Feasible Design Space The design space S for the classification algorithm.

Information Available to the Adversary Identify which pieces of information can be available to an attacker.

20

Capabilities of the Adversary Define a feasible class of attackers F based on the assumed

capabilities.

Evaluation Metric The evaluation metric should be a reasonable measure how well the designed system meets our desired properties. We call a system secure if its metric outcome

is satisfied for any feasible attacker.

Objective of the Adversary An attacker can use its capabilities and the information available

in order to perform two main classes of attacks:

• Evaluation attack. The goal of the attacker is opposite to the goal defined by

the evaluation metric. For example, if the goal of the classifier is to minimize

E[L(C, A)], then the goal of the attacker is to maximize E[L(C, A)].

• Base system attack. The goal of an attacker is not the opposite goal of the classifier.

For example, even if the goal of the classifier is to minimize E[L(C, A)], the goal of

the attacker is still to minimize the probability of being detected.

Model Assumptions Identify clearly the assumptions made during the design and evaluation

of the classifier. It is important to realize that when we borrow tools from other fields,

they come with a set of assumptions that might not hold in an adversarial setting, because

the first thing that an attacker will do is violate the set of assumptions that the classifier

is relying on for proper operation. After all, the UCI machine learning repository never

launched an attack against your classifier. Therefore one of the most important ways to

deal with an adversarial environment is to limit the number of assumptions made, and to

evaluate the resiliency of the remaining assumptions to attacks.

Before we use these guidelines for the evaluation of classifiers, we describe a very simple

example of the use of these guidelines and their relationship to cryptography. Cryptography is

one of the best examples in which a precise framework has been developed in order to define

properly what a secure system means, and how to model an adversary. We believe therefore

that this example will help us in identifying the generality and use of the guidelines as a step

towards achieving sound designs1 .

A. Example: Secret key Encryption

In secret key cryptography, Alice and Bob share a single key sk. Given a message m

(called plaintext) Alice uses an encryption algorithm to produce unintelligible data C (called

ciphertext): C ← Esk (m). After receiving C, Bob then uses sk and a decryption algorithm to

recover the secret message m = Dsk (C).

Desired Properties E and D should enable Alice and Bob to communicate secretly, that is, a

feasible adversary should not get any information about m given C except with very small

probability.

Feasible Design Space E and D have to be efficient probabilistic algorithms. They also need

to satisfy correctness: for any sk and m, Dsk (Esk (m)) = m.

1 We

avoid the precise formal treatment of cryptography because our main objective here is to present the

intuition behind the principles rather than the specific technical details.

21

Information Available to the Adversary It is assumed that an adversary knows the encryption

and decryption algorithms. The only information not available to the adversary is the

secret key sk shared between Alice and Bob.

Capabilities of the Adversary The class of feasible adversaries F is the set of algorithms running in a reasonable amount of time.

Evaluation Metric For any messages m0 and m1 , given a ciphertext C which is known to be an

encryption of either m0 or m1 , no adversary A ∈ F can guess correctly which message

was encrypted with probability significantly better than 1/2.

Goal of the Adversary Perform an evaluation attack. That is, design an algorithm A ∈ F that

can guess with probability significantly better than 1/2 which message corresponds to

the given ciphertext.

Model Assumptions The security of an encryption scheme usually relies in a set of cryptographic primitives, such as one way functions.

Another interesting aspect of cryptography is the different notions of security when the

adversary is modified. In the previous example it is sometimes reasonable to assume that the

attacker will obtain valid plaintext and ciphertext pairs: {(m0 ,C0 ), (m1 ,C1 ), . . . , (mk ,Ck )}. This

new setting is modeled by giving the adversary more capabilities: the feasible set F will now

consist of all efficient algorithms that have access to the ciphertexts of chosen plaintexts. An

encryption algorithm is therefore secure against chosen-ciphertext attacks if even with this new

capability, the adversary still cannot break the encryption scheme.

III

A Black Box Adversary Model

In this section we introduce one of the simplest adversary models against classification

algorithms. We refer to it as a black box adversary model since we do not care at the moment

how the adversary creates the observations X. This model is particularly suited to cases where

trying to model the creation of the inputs to the classifier is intractable.

Recall first that for our evaluation analysis in the previous chapter we were assuming

three quantities that can be, up to a certain extent, controlled by the intruder. They are the

base-rate p, the false alarm rate PFA and the detection rate PD . The base-rate can be modified

by controlling the frequency of attacks. The perceived false alarm rate can be increased if the

intruder finds a flaw in any of the signatures of an IDS that allows him to send maliciously

crafted packets that trigger alarms at the IDS but that look benign to the IDS operator. Finally,

the detection rate can be modified by the intruder with the creation of new attacks whose signatures do not match those of the IDS, or simply by evading the detection scheme, for example

by the creation of a mimicry attack [30, 31].

In an effort towards understanding the advantage an intruder has by controlling these parameters, and to provide a robust evaluation framework, we now present a formal framework

to reason about the robustness of an IDS evaluation method. Our work in this section is in

some sense similar to the theoretical framework presented in [10], which was inspired by cryptographic models. However, we see two main differences in our work. First, we introduce the

role of an adversary against the IDS, and thereby introduce a measure of robustness for the

metrics. In the second place, our work is more practical and is applicable to more realistic

22

evaluation metrics. Furthermore we also provide examples of practical scenarios where our

methods can be applied.

In order to be precise in our presentation, we need to extend the definitions introduced

in section III. For our modeling purposes we decompose the I DS algorithm into two parts: a

detector D and a decision maker DM . For the case of an anomaly detection scheme, D (x[ j])

outputs the anomaly score y[ j] on input x[ j] and DM represents the threshold that determines

whether to consider the anomaly score as an intrusion or not, i.e. DM (y[ j]) outputs an alarm

or it does not. For a misuse detection scheme, DM has to decide to use the signature to report

alarms or decide that the performance of the signature is not good enough to justify its use and

therefore will ignore all alarms (e.g. it is not cost-efficient to purchase the misuse scheme being

evaluated).

Definition 1 An I DS algorithm is the composition of algorithms D (an algorithm from where

we can obtain an ROC curve) and DM (an algorithm responsible for selecting an operating point). During operation, an I DS receives a continuous data stream of event features

x[1], x[2], . . . and classifies each input x[ j] by raising an alarm or not. Formally:2

I DS (x)

y = D (x)

A ← DM (y)

Output A (where A ∈ {0, 1})

♦

We now study the performance of an IDS under an adversarial setting. We remark that

our intruder model does not represent a single physical attacker against the IDS. Instead our

model represents a collection of attackers whose average behavior will be studied under the

worst possible circumstances for the IDS.

The first thing we consider, is the amount of information the intruder has. A basic assumption to make in an adversarial setting is to consider that the intruder knows everything

that we know about the environment and can make inferences about the situation the same way

as we can. Under this assumption we assume that the base-rate p̂ estimated by the IDS, its

estimated operating condition (P̂FA , P̂D ) selected during the evaluation, the original ROC curve

(obtained from D ) and the cost function C(I, A) are public values (i.e. they are known to the

intruder).

We model the capability of an adaptive intruder by defining some confidence bounds. We

assume an intruder can deviate p̂ − δl , p̂ + δu from the expected p̂ value. Also, based on our

confidence in the detector algorithm and how hard we expect it to be for an intruder to evade

the detector, or to create non-relevant false positives (this also models how the normal behavior

of the system being monitored can produce new -previously unseen- false alarms), we define α

and β as bounds to the amount of variation we can expect during the IDS operation from the

false alarms and the detection rate (respectively) we expected, i.e. the amount of variation from

(P̂FA , P̂D ) (although in practice estimating these bounds is not an easy task, testing approaches

like the one described in [32] can help in their determination).

The intruder also has access to an oracle Feature(·, ·) that simulates an event to input into

the IDS. Feature(0, ζ) outputs a feature vector modeling the normal behavior of the system that

will raise an alarm with probability ζ (or a crafted malicious feature to only raise alarms in the

2 The

usual arrow notation: a ← DM (y) implies that DM can be a probabilistic algorithm.

23

case Feature(0, 1)). And Feature(1, ζ) outputs the feature vector of an intrusion that will raise

an alarm with probability ζ.

Definition 2 A (δ, α, β) − intruder is an algorithm I that can select its frequency of intrusions

p1 from the interval δ = [ p̂ − δl , p̂ + δu ]. If it decides to attempt an intrusion, then with probability p2 ∈ [0, β], it creates an attack feature x that will go undetected by the IDS (otherwise

this intrusion is detected with probability P̂D ). If it decides not to attempt an intrusion, with

probability p3 ∈ [0, α] it creates a feature x that will raise a false alarm in the IDS

I(δ, α, β)

Select p1 ∈ [ p̂ − δl , p̂ + δu ]

Select p2 ∈ [0, α]

Select p3 ∈ [0, β]

I ← Bernoulli(p1 )

If I = 1

B ← Bernoulli(p3 )

x ← Feature(1, (min{(1 − B), P̂D }))

Else

B ← Bernoulli(p2 )

x ← Feature(0, max{B, P̂FA })

Output (I,x)

where Bernoulli(ζ) outputs a Bernoulli random variable with probability of success ζ.

Furthermore, if δl = p and δu = 1 − p we say that I has the ability to make a chosenintrusion rate attack.

♦

We now formalize what it means for an evaluation scheme to be robust. We stress the

fact that we are not analyzing the security of an IDS, but rather the security of the evaluation of

an IDS, i.e. how confident we are that the IDS will behave during operation similarly to what

we assumed in the evaluation.

A. Robust Expected Cost Evaluation

We start with the general decision theoretic framework of evaluating the expected cost

(per input) E[C(I, A)] for an IDS.

Definition 3 An evaluation method that claims the expected cost of an I DS is at most r is robust against a (δ, α, β) − intruder if the expected cost of I DS during the attack (Eδ,α,β [C[I, A)])

is no larger than r, i.e.

Eδ,α,β [C[I, A)] = ∑ C(i, a)×

i,a

Pr [ (I, x) ← I(δ, α, β); A ← I DS (x) : I = i, A = a ] ≤ r

♦

Now recall that the traditional evaluation framework finds an evaluation value r∗ by using

equation (2.6). So by finding r∗ we are basically finding the best performance of an IDS and

claiming the IDS is better than others if r∗ is smaller than the evaluation of the other IDSs.

In this section we claim that an IDS is better than others if its expected value under the worst

24

performance is smaller than the expected value under the worst performance of other IDSs. In

short

Traditional Evaluation Given a set of IDSs {I DS 1 , I DS 2 , . . . , I DS n } find the best expected

cost for each:

ri∗ =

min

E[C(I, A)]

(3.1)

β

α ,P )∈ROC

(PFA

i

D

Declare that the best IDS is the one with smallest expected cost ri∗ .

Robust Evaluation Given a set of IDSs {I DS 1 , I DS 2 , . . . , I DS n } find the best expected cost

for each I DS i when being under the attack of a (δ, αi , βi ) − intruder3 . Therefore we find

the best IDS as follows:

rirobust =

max Eδ,αi ,βi [C(I, A)]

min

α

β

α ,β

(PFAi ,PDi )∈ROCi i i

(3.2)

I(δ,αi ,βi )

Several important questions can be raised by the above framework. In particular we are

interested in finding the least upper bound r such that we can claim the evaluation of I DS to

be robust. Another important question is how can we design an evaluation of I DS satisfying

this least upper bound? Solutions to these questions are partially based on game theory.

Lemma 2: Given an initial estimate of the base-rate p̂, an initial ROC curve obtained

from D , and constant costs C(I, A), the least upper bound r such that the expected cost evaluation of I DS is r-robust is given by

β

where

α

)(1 − p̂δ ) + R(1, P̂D ) p̂δ

r = R(0, P̂FA

(3.3)

α

α

α

]

) +C(0, 1)P̂FA

) ≡ [C(0, 0)(1 − P̂FA

R(0, P̂FA

(3.4)

is the expected cost of I DS under no intrusion and

β

β

β

R(1, P̂D ) ≡ [C(1, 0)(1 − P̂D ) +C(1, 1)P̂D ]

(3.5)

β

α and P̂ are the solution to a zerois the expected cost of I DS under an intrusion, and p̂δ , P̂FA

D

sum game between the intruder (the maximizer) and the IDS (the minimizer), whose solution

can be found in the following way:

1. Let (PFA , PD ) denote any points of the initial ROC obtained from D and let ROC(α,β)

α , Pβ ), where Pβ = P (1 − β) and Pα =

be the ROC curve defined by the points (PFA

D

D

D

FA

α + PFA (1 − α).

2. Using p̂ + δu in the isoline method, find the optimal operating point (xu , yu )in ROC(α,β)

and using p̂−δl in the isoline method, find the optimal operating point (xl , yl ) in ROC(α,β) .

that different IDSs might have different α and β values. For example if I DS 1 is an anomaly detection

scheme then we can expect that the probability that new normal events will generate alarms α1 is larger than the

same probability α2 for a misuse detection scheme I DS 2 .

3 Note

25

3. Find the points (x∗ , y∗ ) in ROC(α,β) that intersect the line

y=

C(1, 0) −C(0, 0)

C(0, 0) −C(0, 1)

+x

C(1, 0) −C(1, 1)

C(1, 0) −C(1, 1)

(under the natural assumptions C(1, 0) > R(0, x∗ ) > C(0, 0), C(0, 1) > C(0, 0) and C(1, 0) >

C(1, 1)). If there are no points that intersect this line, then set x∗ = y∗ = 1.

4. If x∗ ∈ [xl , xu ] then find the base-rate parameter p∗ such that the optimal isoline of Equaα = x∗ and P̂β = y∗ .

tion (2.9) intercepts ROC(α,β) at (x∗ , y∗ ) and set p̂δ = p∗ , P̂FA

D

5. Else if R(0, xu ) < R(1, yu ) find the base-rate parameter pu such that the optimal isoline of

α = x and P̂β = y .

Equation (2.9) intercepts ROC(α,β) at (xu , yu ) and then set p̂δ = pu , P̂FA

u

u

D

Otherwise, find the base-rate parameter pl such that the optimal isoline of Equation (2.9)

α = x and P̂β = y .

intercepts ROC(α,β) at (xl , yl ) and then set p̂δ = pl , P̂FA

l

l

D

The proof of this lemma is very straightforward. The basic idea is that if the uncertainty

range of p is large enough, the Nash equilibrium of the game is obtained by selecting the point

intercepting equation (3). Otherwise one of the strategies for the intruder is always a dominant

strategy of the game and therefore we only need to find which one is it: either p̂ + δu or p̂ − δl .