NBER WORKING PAPER SERIES

DOES FEDERALLY-FUNDED JOB TRAINING WORK? NONEXPERIMENTAL

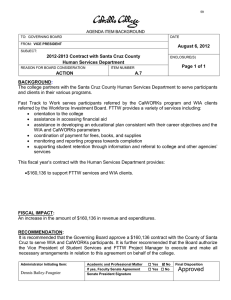
