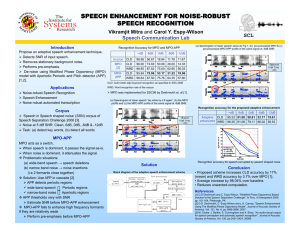

ABSTRACT

SYNERGY OF ACOUSTIC-PHONETICS AND AUDITORY MODELING TOWARDS ROBUST SPEECH RECOGNITION
