Multimodal visual resource discovery Stefan Rüger


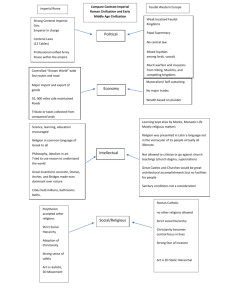

http://mmir.doc.ic.ac.uk
© Imperial College London

Multimodal visual resource discovery
• Multimedia Content Management
• Semantic gap and automated annotation
• Polysemy and relevance feedback
• Content-based visual search
• Video summarisation
• Lateral browsing

Research: Multimedia content management
Information is of no use unless you can actually access it. [from trec.nist.gov]

Multimedia Content Management
• Archive
– text, video, images, speech, music, web content, …
• Query
– text, stills, sketch, speech, humming, examples, …
– content-based
• Value-added services
– browsing, summaries, story boards
– document clustering, cluster summaries
• Putting the user in the loop

New types of Search Engines
• Query: text, stills, sketch, speech, sound, humming, motion
• Doc: text, video, images, speech, music, sketches, multimedia
• Example: conventional text retrieval
• Example: you roar and get a wildlife documentary
• Example: type “floods” and get BBC radio news
• Example: hum a tune and get a music piece

Some applications
• Medicine
– Get diagnosis of cases with similar scans
• Law enforcement
– Copyright infringement
– CCTV video retrieval
• Digital libraries
– Novel resource discovery methods
• Industrial applications
– Multimedia knowledge management

The semantic gap
1. 120,000 pixels with a particular spatial colour distribution
2. faces (Caucasian & African) and a vase-like object
3. victory, triumph, ...

Automated image annotation as machine translation problem
[figure labels: water, grass, trees; "the beautiful sun" / "le soleil beau"]

Automated image annotation as machine learning problem
• Training set, e.g., 32,000 annotated images; example annotation: animal, creature, face, grass, nature, outdoors, outside, portrait, teeth, tiger, vertical, water, wild, wildlife

Automated image annotation as machine learning problem
• Probabilistic models:
– maximum entropy models
– models for joint and conditional probabilities
– evidence combination with Support Vector Machines
[PhD work in progress: A Yavlinsky, Nov 2003-, partly ORS funded]
[PhD work in progress: J Magalhães, Oct 2004-, funded by FCT]
[with Magalhães, SIGIR 2005]
[with Yavlinsky and Schofield, CIVR 2005]
[with Yavlinsky, Heesch and Pickering: ICASSP May 2004]

A simple Bayesian classifier
• Use training data J and annotations w
• P(w|I) is the probability of word w given unseen image I
• The model is an empirical distribution (w,J)

Automated image annotation: an example
Automated: water, buildings, city, sunset, aerial

Bridging the semantic gap
• Region classification (grass, water, sky, ...)
• Infer complex concepts from simple models (grass+plates+people = barbecue)
[CultureGrid EU proposal, application Sept 2005]
[collaboration with AT&T Research, Cambridge 2001]

Polysemy

Relevance feedback
• endow system with plasticity (parameters)
• system needs to learn from user = change the parameters
[funding co-application: relevance feedback using an eye-tracker, Jan 2005]
[with Heesch and Yavlinsky, CIVR 2003]
[with Heesch, ECIR 2003]

Content-based Visual Search
[invited talks: AMR 2005, National e-Science workshop 2005]
[invited panels: EWIMT 2004, AMR 2005]
[Choplin 1.3 open-source software, 3Q 2005]
[iBase 1.2 open-source software, 3Q 2005]
[with Howarth, Information Retrieval, submitted 2005]
[with Howarth, CIVR 2005]
[with Chawda, Craft, Cairns and Heesch, HCI 2005]
[with Howarth, ECIR 2005]
[with Heesch, Pickering, Howarth and Yavlinsky, JCDL 2004]
[with Yavlinsky, Heesch and Pickering, ICASSP 2004]
[with Howarth, CIVR 2004]
[with Pickering, Computer Vision and Image Understanding, 92(1), 2003]
[with Heesch, Pickering and Yavlinsky, TRECVID 2003]
[with Heesch and Yavlinsky, CIVR 2003]
[with Pickering, Heesch, O’Callaghan and Bull, TREC 2002]
[with Pickering and Sinclair, CIVR 2002]
[with Pickering, TREC 2001]

11 Primitive Features for photographs
• 4 Colour histograms
– global HSV, centre HSV, marginal RGB colour moments, colour structure descriptor
• 2 Structure maps
– Convolution/wavelet map features on grey image
• 3 Texture descriptors
– Using signal processing, psychology and statistics
• 1 Text index
– Bag of stemmed-words (tf-idf)
• 1 Localisation feature
– Thumbnail of grey image

Evaluation conference TRECVID
• TRECVID topic 102: Find shots from behind the pitcher in a baseball game as he throws a ball that the batter swings at

TRECVID 2003 interactive search evaluation

| Run | MAP | Rank (out of 36) |
|---|---|---|
| Median | 0.19 | |
| Mean | 0.18 | |
| S + RF + B | 0.26 | 5 |
| S + RF | 0.26 | 4 |
| S + B | 0.23 | 8 |

One example feature class: texture
• regional property
– contrast, coarseness, directionality, …
– co-occurrence statistics
– signal processing
– based on psychological experiments
[with Howarth, IEE Proc on Vision, Image and Signal, accepted 2005]
[with Howarth, Yavlinsky and Heesch, CLEF 2004]
[with Howarth, CIVR 2004]

Image CLEF
• Medical image collection, 8725 images, 25 topics
• mean average precision 34.5%; ranked 3rd, within 1% of 1st

Video summary
• story-level segmentation
• keyframe summary
• videotext summary
• named entities
• full-text search
[technology being licensed by Imperial Innovations to industry]
[UK patent application 2004]
[finished PhD project: Pickering, now at HTB, London]
[with Wong and Pickering, CIVR 2003]
[with Lal, DUC 2002]
[Pickering: best UK CS student project 2000 – national prize]

Lateral browsing: NNk network
• Precompute all possible nearest neighbours
– instantiate network within images of database
[with Heesch, CIVR 2005]
[with Heesch, CIVR 2004]
[with Heesch, ECIR 2004]

Nearest neighbours wrt features
[figure labels: query; Structure, Colour, Text]

Browsing example
• TRECVID topic 102: Find shots from behind the pitcher in a baseball game as he throws a ball that the batter swings at
[Alexander May: SET award 2004: best use of IT – national prize]

Properties
• Exposure of semantic richness
• User decides which image meaning is correct
• Network precomputed -> fast and interactive
• Supports search without query formulation
• 3 degrees of separation (for 32,000 images)
• scale-free, small-world graph

Conclusions: Digital Content Management
[diagram labels: MM, IR, ML, IS, HCI, DB, KM]
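
Sketch: precomputing a lateral browsing network
The NNk slides say that all possible nearest neighbours are precomputed with respect to several features (structure, colour, text). The Python sketch below is one way to read that, not the implementation behind the slides. It assumes that "all possible nearest neighbours" means every image that is closest to the query under at least one non-negative weighting of the per-feature distances, and it approximates the set of weightings with a coarse grid. The function names, the `steps` parameter and the toy distance matrices are hypothetical.

```python
from itertools import product


def simplex_grid(k, steps):
    """Yield weight vectors with k non-negative components that sum to 1."""
    for combo in product(range(steps + 1), repeat=k):
        if sum(combo) == steps:
            yield tuple(c / steps for c in combo)


def nnk_neighbours(query, distances, steps=4):
    """Images that are nearest to `query` under at least one sampled weighting.

    distances[f][i][j] is the distance between images i and j under feature f.
    Sampling the weights on a grid is an assumption made for this sketch.
    """
    k = len(distances)
    n = len(distances[0])
    neighbours = set()
    for weights in simplex_grid(k, steps):
        # Nearest neighbour under this particular convex combination.
        best = min(
            (j for j in range(n) if j != query),
            key=lambda j: sum(w * distances[f][query][j]
                              for f, w in enumerate(weights)),
        )
        neighbours.add(best)
    return neighbours


def build_network(distances, steps=4):
    """Precompute the neighbour set of every image (a directed graph)."""
    n = len(distances[0])
    return {i: nnk_neighbours(i, distances, steps) for i in range(n)}


# Toy data (hypothetical): 4 images, 2 features.
colour = [
    [0, 1, 5, 9],
    [1, 0, 4, 9],
    [5, 4, 0, 9],
    [9, 9, 9, 0],
]
text = [
    [0, 5, 1, 9],
    [5, 0, 4, 9],
    [1, 4, 0, 9],
    [9, 9, 9, 0],
]

network = build_network([colour, text])
for image, neighbours in network.items():
    print(image, sorted(neighbours))
```

In the toy data, image 0 is close to image 1 by colour and to image 2 by text, so both become its neighbours and the user chooses which sense to follow. Because the graph is computed offline, browsing at query time is only a lookup, which matches the "network precomputed -> fast and interactive" property.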