Oxford eScience: Overview of New Technologies for Building Campus Grids
Rhys Newman


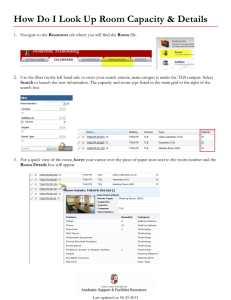

Manager of Interdisciplinary Grid Development, Oxford University
Campus Grids Workshop, Edinburgh, June 2005

Grid: Computer Resources Like Electricity
An enticing idea for users…
• On demand: effectively unlimited.
• Pay as you go.
• Ubiquitous and standard.
An enticing idea for providers…
• Can enter the business of selling computing resources in a liquid market.
• Can generate value from machines already purchased and depreciating daily.
Many forget that the electricity distribution infrastructure took decades to perfect, and even now it is being actively developed (remember the New York blackout?).

Where are we now?
The Grid is "the web on steroids", so compare it with the World Wide Web:
• Exponential growth of web servers for the first 5 years; now over 200 million.
• The Grid has a few thousand nodes 10 years after the idea was proposed.
• Easy to get a web server (download Apache, install, go: 5 minutes).
• Which Grid "server" to use? Globus, Sun, IBM, Apple, LCG: none are ubiquitous, standard or simple to install.
• Easy to author web pages and information (HTML is simple enough for almost anyone).
• To use the Grid, software must be recompiled or relinked with libraries, extended with features for use across the WAN, made to support various operating systems and environments, etc.
The hardware is there (over 500 million computers worldwide), the internet connects them, broadband is common and 10 Gbit is coming. The problem is software…

We have seen how little of this hardware is used. eScience has had £250m invested over the last 4 years, much of it targeted at Grid technology development. After all this, the technology we mostly use is "Condor".
• This product is developed outside the UK framework, and predates the eScience programme by many years.
"e-Science will change the dynamic of the way science is undertaken."
Dr John Taylor, Director General of Research Councils, 2000
Unless we can deliver the basis of eScience, eResearch may miss a huge opportunity.

An embarrassing anecdote…
The EDG project and its follow-on, the EGEE project, have been funded with over 42m euros.
• Main thrust: to provide a computer grid infrastructure for the four LHC experiments at CERN.
• Thus four separate software environments are struggling to standardise on a common operating system (RH 7.3, and now SL).
• Currently the LCG grid claims 10000 CPUs (although only 1300 were visible last night via GridICE…).
• However, it is a generic infrastructure (for the LHC) based on Globus and Linux.
When the LHC designers needed computing power to help design the beam ring, they… developed their own grid!

LHC@Home
http://lhcathome.cern.ch/
• Based on BOINC.
• Runs on Windows machines, primarily as a screen saver.
• Uses donations of CPU time.
• Attracted over 6000 active "donors" within 6 months.
• Has so much computer time available now that it is not accepting new donors.
We need to think beyond YALA!

Solutions?
Two obvious solutions for users:
• Use commercial hosting (1p per GHz-hour).
• Buy your own machine (0.1p to 0.3p per GHz-hour).
In either case the user has complete control of the hardware and software environment.
Campus grids only make sense if they too offer this power, cheaper than commercial offerings and better than "rolling your own".

Cheaper and Better…
Campus grids can be cheaper, as the providers do not buy the resource in the first place (they use idle time on existing machines).
Campus grids can be better, as a standardised, managed environment (automatically patched, updated, etc.) can be provided for the user.
• Administration time is therefore reduced…

Virtualisation is the Key
To provide these properties reliably and uniformly on heterogeneous resources around a university, the user's environment must be "virtual".
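The per-GHz-hour prices on the "Solutions?" slide can be turned into a quick cost comparison. This is a minimal sketch: only the two prices come from the slide; the job size (one 2 GHz CPU busy for 1000 hours) and the function name `job_cost_pounds` are hypothetical, chosen for illustration.

```python
# Prices from the "Solutions?" slide, in pence per GHz-hour.
COMMERCIAL_PENCE = 1.0
OWN_MACHINE_PENCE = (0.1, 0.3)  # low and high estimates


def job_cost_pounds(ghz: float, hours: float, pence_per_ghz_hour: float) -> float:
    """Cost in pounds of keeping `ghz` GHz of CPU busy for `hours` hours."""
    ghz_hours = ghz * hours
    return ghz_hours * pence_per_ghz_hour / 100.0  # 100 pence per pound


# Hypothetical job: one 2 GHz CPU busy for 1000 hours (2000 GHz-hours).
GHZ, HOURS = 2.0, 1000.0
commercial = job_cost_pounds(GHZ, HOURS, COMMERCIAL_PENCE)
own_low, own_high = (job_cost_pounds(GHZ, HOURS, p) for p in OWN_MACHINE_PENCE)

print(f"commercial hosting: £{commercial:.2f}")
print(f"own machine:        £{own_low:.2f} to £{own_high:.2f}")
```

With these figures the job costs £20.00 on commercial hosting against £2.00 to £6.00 on an owned machine, which is the gap a campus grid has to compete within.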
Virtualisation simplifies the administration:
• The "service end" of eScience can focus on the provision of "virtual hardware".
• The "user end" of eScience can focus on developing applications in a standardised environment.
Imagine M users and N owners, each wanting their concerns addressed:
• Without virtualisation: M×N negotiations.
• With virtualisation: M+N negotiations.

Virtualisation/Emulation: How it Enables CPU Sharing…
A "virtual" machine completely isolates the grid process from the underlying operating system:
• Essential security: the grid process cannot crash the machine or modify the host machine's software.
• Essential privacy: the grid process cannot read the host's files, and the host cannot read the grid process's files.
Figure (layer stack, with each layer's view of a single file read):
• Grid Job: open(mydata.root)
• Guest OS: access /dev/hda
• Emulated hardware: read "hard drive"
• Emulator (on the host OS, alongside other software): read from c:\hda.img
• host OS: access ide0
• host hardware: read physical hard drive

Virtualisation Myths: Speed
VMware is the industry standard:
• Focus on server consolidation.
• Fastest-growing software company in history (equalling Oracle).
• Started in 1998, bought in 2004 for $625 million.
• Uses virtualisation rather than emulation, achieving 97% of native performance.
There are many other players in this market, particularly Transitive Technologies: direct binary translation between ISAs at 80% of native performance.
• Its engine, as "Rosetta", is behind Apple's confidence in moving from PowerPC to x86.

Virtualisation Myths: Maturity
Intel VT-x (Vanderpool) will be released in Q1 2006 in IA-32 and IA-64 chips.
• This is so mainstream and standard that there will be hardware support for virtualisation!
Examine VMware (e.g. VirtualCenter), Virtual PC (Microsoft), IBM eServer, HP Utility Computing, QEMU, Xen…
This is very standard technology: tried, tested and robust. It is certainly ready for use within a grid context.

Virtualisation Myths: HPC
There are recent commercial installations of VMware on which old HPC code is run in a virtual HPC environment.
• The VMs are virtually connected via a "high-speed" interconnect.
• The actual networking is Gbit/10 Gbit Ethernet.
This is useful to avoid the cost of re-engineering the software.

Campus Grids? Conclusion
A grid can generate significant value from idle resources, but:
• Users must be given absolute control of their environment.
• Owners must retain absolute control of their machines.
Virtualisation/emulation enables this to happen:
• Administration can be simple enough to become a fixed cost.
• Security concerns can be minimised almost to the "anonymous" level.
We still need new technology to make this happen, but we may be very close…
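The layer stack on the "How it Enables CPU Sharing" slide, and the conclusion's claim that security concerns can be minimised, both rest on the emulator mapping the guest's "hard drive" onto a single image file on the host. The following is a toy sketch of that one mapping only, not how QEMU, VMware or any other product implements it. The class name `VirtualDisk`, the 512-byte sector size and the bounds check are assumptions for illustration, and the guest OS's filesystem layer (which turns `open(mydata.root)` into sector reads) is left out.

```python
import os
import tempfile

SECTOR_SIZE = 512  # bytes per emulated sector (assumed, typical of IDE disks)


class VirtualDisk:
    """Toy emulated 'hard drive' backed by one image file on the host.

    The guest only ever names sector numbers, never host paths, so the
    only host file it can touch is the image (hda.img on the slide).
    """

    def __init__(self, image_path: str, num_sectors: int) -> None:
        self.num_sectors = num_sectors
        # Create a zero-filled image file standing in for the guest's disk.
        self._image = open(image_path, "w+b")
        self._image.write(bytes(num_sectors * SECTOR_SIZE))

    def _check(self, sector: int) -> None:
        # Reject any access outside the emulated disk.
        if not 0 <= sector < self.num_sectors:
            raise ValueError(f"sector {sector} is outside the emulated disk")

    def read_sector(self, sector: int) -> bytes:
        """Guest reads its 'hard drive'; the emulator reads the host image file."""
        self._check(sector)
        self._image.seek(sector * SECTOR_SIZE)
        return self._image.read(SECTOR_SIZE)

    def write_sector(self, sector: int, data: bytes) -> None:
        """Guest writes one sector; the emulator writes into the image file."""
        self._check(sector)
        if len(data) != SECTOR_SIZE:
            raise ValueError("writes must be exactly one sector long")
        self._image.seek(sector * SECTOR_SIZE)
        self._image.write(data)

    def close(self) -> None:
        self._image.close()


with tempfile.TemporaryDirectory() as tmp:
    disk = VirtualDisk(os.path.join(tmp, "hda.img"), num_sectors=16)
    # Pretend the guest filesystem stored a file's data in sector 3.
    payload = b"mydata.root contents".ljust(SECTOR_SIZE, b"\0")
    disk.write_sector(3, payload)
    read_back = disk.read_sector(3)
    try:
        disk.read_sector(99)  # beyond the 16-sector disk
        out_of_range_allowed = True
    except ValueError:
        out_of_range_allowed = False
    disk.close()

print(read_back[:20], out_of_range_allowed)
```

Because the guest can only name sector numbers, the out-of-range read is rejected instead of reaching any other host file. A real emulator has to enforce far more than this single check, but the principle of confining the guest to its own image is the same.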