Data Handling in Campus Grid Environments
Kerstin Kleese van Dam

advertisement

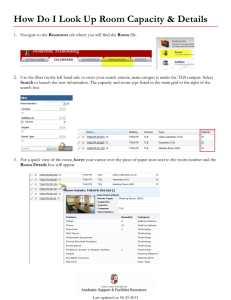

Campus Grid Workshop 16th – 17th of June 2005 CCLRC e-Science Centre
CCLRC - Daresbury and Rutherford Appleton Laboratories, k.kleese@dl.ac.uk

Today’s working environment
Researchers work on many different platforms, ranging from simple desktop systems to high-end compute systems. They often use their standard applications in different ways: from development and testing (desktop/small cluster), through parameter searches (high throughput), to large studies (high capacity). The systems they use belong to different organisations, with different access mechanisms, operating systems and schedulers.

Today’s working environment (2)
Challenges:
• Passwords – user names and passwords are different wherever you go.
• Application – Is your application available on the desired platform? If so, where is it? If not, can you compile it? Do you need a licence? Where are the necessary libraries? Are they available in the right version? Do you have a permanent, safe user area to keep it for the future?
• Job Submission – How do I submit my job? Which scheduler is available? What is the optimal queue for my job?
• Data – How do I get my input data onto the system and my output data off it? Is my data small enough to transfer? If you use many systems, where is your data? What was it you calculated?

Data Management Support for Research Collaboration Based Campus Grids

Research Collaboration – Campus Grid
e-Minerals: Environment from the Molecular Level (http://www.eminerals.org)
Modelling the atomistic processes involved in environmental issues:
• Pollution: molecules and atoms on mineral surfaces
• Radioactive waste disposal
• Crystal growth and scale inhibition
• Crystal dissolution and weathering

[Figure labels: University of Reading, Royal Institution]

e-Minerals MiniGrid [figure]

e-Minerals MiniGrid – Outside Connections (2)
[Figure labels: e-Minerals Mini Grid, UK Grid, NGS, HPCx, CSAR, …]

e-Minerals Script Interface
[Diagram labels: Client Side: Condor DAGMan, Condor-G; Globus 2; Server Side: Queuing System, S Commands, R Commands]
• Script-based tools provide integration of compute and data functionality
• But the scripts proved difficult for scientists to understand
• Not scalable – only a limited number of machines from which to access the minigrid

Simple Job Run
Applications are held in SRB, in one directory per application, version and platform, as are the standard set-up scripts. The user ensures that the correct input data are available in SRB. The user defines the simulation(s) (easily up to 1000) in a Condor DAGMan script, including the choice of system type (pure Condor, or a resource accessible via Globus, e.g. one with a PBS scheduler), and submits it from their desktop. The job script is sent to the Condor job manager and submitted, either directly or via Globus, to the relevant system.
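Writing a DAGMan script for hundreds of simulations by hand is tedious, so it is natural to generate it. The following is a minimal sketch, not the e-Minerals scripts themselves: it assumes one shared submit description file (hypothetical name `sim.sub`) that reads its parameter through a macro `$(param)`, plus an optional post-processing node (hypothetical `collect.sub`). `JOB`, `VARS` and `PARENT ... CHILD` are standard DAGMan input-file keywords; the choice between a pure Condor pool and a Globus resource would live in the submit file and is outside this sketch.

```python
from typing import Iterable, Optional


def build_sweep_dag(submit_file: str,
                    param_values: Iterable[object],
                    collect_submit: Optional[str] = None) -> str:
    """Return the text of a DAGMan input file for a parameter sweep.

    Every simulation node reuses the same submit description file; its
    parameter value is passed in with a VARS line and can be referenced in
    that submit file as the macro $(param).
    """
    lines = []
    node_names = []
    for i, value in enumerate(param_values):
        name = f"sim{i:04d}"  # unique node name: sim0000, sim0001, ...
        node_names.append(name)
        lines.append(f"JOB {name} {submit_file}")
        lines.append(f'VARS {name} param="{value}"')
    if collect_submit is not None and node_names:
        # Optional final node; DAGMan starts it only after every parent node succeeds.
        lines.append(f"JOB collect {collect_submit}")
        lines.append(f"PARENT {' '.join(node_names)} CHILD collect")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical sweep over 100 temperature values (300, 310, ..., 1290).
    temperatures = [300 + 10 * k for k in range(100)]
    print(build_sweep_dag("sim.sub", temperatures, collect_submit="collect.sub"), end="")
```

Saved as, say, `make_sweep.py` (a hypothetical name), it could be run as `python make_sweep.py > sweep.dag` and the result submitted with `condor_submit_dag sweep.dag`. Keeping the generator a pure function that returns text makes the DAG easy to inspect before anything is submitted.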
On the target system, the application code and input data are retrieved through local SRB access and the job is started. The output of every job is transferred, again through local SRB access, into the user-specified directory within SRB, and the output data in SRB are linked to entries in the metadata database. Once the job has finished, the results are available through the usual SRB interfaces for the user to inspect or to work on further.

Storage Resource Broker (SRB)

Storage Resource Broker
The SDSC Storage Resource Broker (SRB) is client-server middleware that provides a uniform interface for connecting to heterogeneous data resources over a network and for accessing replicated data sets. Together with the Metadata Catalog (MCAT), SRB provides a way to access data sets and resources by their attributes rather than by their names or physical locations.

Storage Resource Broker – Main Features
• SRB provides a uniform API that can be used to connect to heterogeneous, possibly distributed resources and to access data sets that may be replicated.
• SRB lets users manage data storage and replication across a wide range of physical storage system types and locations, while keeping a single, stable access point to the data. SRB has two major components: the core SRB, which interfaces with the storage devices, and the MCAT, which holds the metadata elements.
• Its modular design supports many different platforms and authentication methods, and a web service interface is available.
• The system provides interfaces for ingesting data and associated metadata, managing replication and data movement, searching the metadata for discovery, and retrieving the data itself.
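The core idea behind SRB and MCAT is that users find data by its descriptive attributes and a stable logical name, while the catalogue tracks where the physical replicas live. The Python sketch below illustrates only that idea with an in-memory catalogue. The class and method names (`Catalogue`, `find`, `resolve`), the resource names and the paths are hypothetical; this is not MCAT's schema or the SRB API, which users reach through inQ, MySRB, the S-Commands or the SRB API libraries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Replica:
    resource: str       # hypothetical storage resource name, e.g. one site's vault
    physical_path: str  # where the bytes actually sit on that resource


@dataclass
class LogicalObject:
    logical_name: str           # stable, location-independent name seen by users
    attributes: Dict[str, str]  # user-defined descriptive metadata
    replicas: List[Replica] = field(default_factory=list)


class Catalogue:
    """Toy metadata catalogue illustrating the MCAT idea; not MCAT's schema or API."""

    def __init__(self) -> None:
        self._objects: Dict[str, LogicalObject] = {}

    def register(self, obj: LogicalObject) -> None:
        self._objects[obj.logical_name] = obj

    def add_replica(self, logical_name: str, replica: Replica) -> None:
        self._objects[logical_name].replicas.append(replica)

    def find(self, **criteria: str) -> List[LogicalObject]:
        """Return every object whose attributes match all key=value criteria."""
        return [obj for obj in self._objects.values()
                if all(obj.attributes.get(k) == v for k, v in criteria.items())]

    def resolve(self, logical_name: str, preferred: Optional[str] = None) -> Replica:
        """Choose a physical copy, favouring the preferred resource when it holds one."""
        replicas = self._objects[logical_name].replicas
        if not replicas:
            raise LookupError(f"no replica registered for {logical_name}")
        for replica in replicas:
            if replica.resource == preferred:
                return replica
        return replicas[0]


if __name__ == "__main__":
    cat = Catalogue()
    cat.register(LogicalObject("/demo/run1/out.xml", {"topic": "crystal growth", "temperature": "300"}))
    cat.add_replica("/demo/run1/out.xml", Replica("vault-a", "/data/a/run1.xml"))
    cat.add_replica("/demo/run1/out.xml", Replica("vault-b", "/data/b/run1.xml"))
    for obj in cat.find(topic="crystal growth"):
        print(obj.logical_name, "->", cat.resolve(obj.logical_name, preferred="vault-b").physical_path)
```

Because callers hold only the logical name and the attributes, replicas can be added or moved between resources without changing how users find the data, which is the single, stable access point the slides describe.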
The metadata held in MCAT to support the SRB interfaces includes the physical and logical details of the data and its replicas, user information, and security rights and access control.

SRB Architecture
Set-up:
• Databases for the central MCAT
• Application server to access the central MCAT
• Storage resources linked to SRB
• Application server to manage the storage resources in SRB
• Transparent access for users from their desktop

[Diagram labels: SRB MCAT database and application server; four SRB servers with storage vaults, including those at the University of Reading and the Royal Institution]

Access from other Resources to e-Minerals SRB
Using simple S-Commands, e-Minerals users can also access and add to their data from:
• The National Grid Service
• HPCx
• The Minerals and Ceramics Consortium Cluster

SRB User Interfaces
Users can access SRB through a number of well-designed interfaces:
• inQ – Windows-based user interface supporting the full range of SRB commands
• MySRB – web-based user interface giving access to the user's SRB data from anywhere in the world
• S-Commands – command-line interface for interacting with SRB
• SRB API – for direct interaction with SRB from any program; examples exist for, e.g., Fortran, C, C++, VB, Perl and Python
There is also an administration interface.

[Screenshot callouts: functions including ingestion, movement and replication of data; providing access to data for others; version of data; type of data; replica or original data; physical data location and type of resource]

inQ – SRB user interface for Windows-based systems [screenshot]

Metadata

CCLRC Scientific Metadata Model – Diversity: Users & Searches
[Figure labels: Discovery, Excavation]

CCLRC Scientific Metadata Model
A metadata object consists of:
• Topic – keywords providing an index of what the study is about.
• Study Description – provenance: what the study is, who did it and when.
• Access Conditions – conditions of use, stating who can access the data and how.
• Data Description – a detailed description of how the data are organised into datasets and files.
• Data Location – locations that help users navigate to where the data of the study can be found.
• Related Material – references into the literature and the community, providing context for the study.

Metadata Editor [screenshots]

Data Portal

Data Discovery using the Data Portal
• The scientist wishes to search for studies and data
• He does not know whether the data exist
• He wants to search many data holdings
1. The scientist selects a category for the search, e.g. Environmental Issues → Hazardous Waste.
2. The Data Portal contacts each XML wrapper web service to request studies in that category.
3. The XML wrappers use SQL to request studies from the local catalogue databases and convert them to a common XML format.
4. The Data Portal presents the studies to the scientist via XSLT.
5. The scientist selects a study of interest and one or more datasets to download.
6. The datasets are downloaded from the local data holdings directly to the PC, or to a remote machine via GridFTP.
[Diagram labels: Data Portal; an XML wrapper, local catalogue and local data at each facility (Facility 1 … Facility N)]

General CLRC DataPortal Architecture
[Diagram labels: CLRC DataPortal server; other instances of the CLRC DataPortal server; an XML wrapper, local metadata and local data at each facility (Facility 1 … Facility N)]

Complete Data Management Environment
[Diagram stages: Discovery, Analysis, Result Storage, Annotation, Publish Results, Results Discovery. Components: CCLRC DataPortal, metadata database, SDSC's SRB, CCLRC InfoPortal, CCLRC HPCPortal, CCLRC Metadata Editor, NGS/project resources]

Archival Service for Campus Grids

Archival Services for Campus Grids – for a single site or for distributed sites
[Diagram labels: institute sites; archival service central site]
Archival services rely on economies of scale and need expert staff to run them, so central services make financial sense for larger campus grids. We operate these services both 'on site' within CCLRC and for external partners. This example is for a customer with about 16 sites across the UK; the customer runs its own network, and only its main site is connected to JANET. Scheduled archival and restores are handled via this central site.

Data Services – Infrastructure
CCLRC operates a professional data management and storage infrastructure, including top-range tape robots and professional database services:
• STK 1 PB tape store
• 20 TB disk cache for the tape store
• 2×2-node IBM database cluster + 1 TB fast disk
• Application servers, e.g. IBM Blade Centre

Distributed Data Management / Archival Environment
Combining the storage capabilities of tape stores around the world with the easy access and integration of the Storage Resource Broker.
[Diagram labels: databases for the central MCAT; Atlas Data Store accessed through SRB; application server to access SRB resource groups and the central MCAT; transparent access for users from their desktop]

Archival Process
[Diagram labels: remote institute site with local machines, local storage filer, local SRB server and local vault; site WAN; JANET WAN; firewalls; RAL central 'cache' site with central SRB server and central 'cache' vault; SRB-ADS server; ADS SRB disk cache resource; ADS tape resource; ads0sb01.cc.rl.ac.uk; data path steps: Sphymove into container, Sreplcont; tape traffic]
1. Archive submission interface
 - Data ingestion of the collection hierarchy into SRB
 - Uses the Java Jargon API (equivalent of Sput -b)
 - Ingested
 - At the end of ingestion, the data are logically moved
2. Scheduled transfer to the central SRB server (driven from the central SRB server)
 - The Smkcont command is used to create a container on the central SRB server
 - Data are moved from the site SRB server into the container on the central SRB server using Sphymove
 - When the transfer completes, the archived data are logically moved
3. Scheduled transfer to the ADS resource
 - Implemented as a cron job using the Sreplcont command, driven by the central SRB server
 - The entire container is replicated using the Sreplcont command
 - The logical structure is preserved
4. Synchronisation of the container to the tape resource and removal of the original container from the central SRB server
 - The Ssyncont -d -a command is used, allowing for a family of containers

Archival Restore Process
[Diagram labels: remote institute site with local machines, local storage, local SRB server and local vault; RAL central 'cache' site with central SRB server and central 'cache' vault; fast link (JANET); slow link; firewalls; SRB-ADS server; ADS SRB disk cache resource; ADS tape resource; data path steps: Sreplcont, Scp -b; tape traffic]
1. Restore request interface
 - Metadata search for archived files
 - Selection of the container to restore
 - Restore request queued on the Request Tracker system
2. Staged restore of data to the central SRB server
 - Driven from the central SRB server using cron
 - The container is replicated from the SRB tape resource directly to the central SRB server using the Sreplcont command (bypassing the ADS SRB cache resource)
 - A separate restore vault is used to store the replicated data
3. Staged restore of data from the central SRB server to the local SRB server
 - Driven from the central SRB server using cron
 - The physical content of the replicated container is copied from the central SRB server to the local site SRB server using the Scp bulk command
 - When the transfer completes, the data are logically moved with Smv
4. Ssyncont -d is issued to remove the cached copy of the replicated container from the central transit site
5. Extraction interface
 - The user "extracts" restored files to "local" file space with the Jargon interface (Sget -b)
 - The extraction is logged with Request Tracker

Thank you for your attention. Any questions?
Contact details: http://www.e-science.clrc.ac.uk, k.kleese@dl.ac.uk