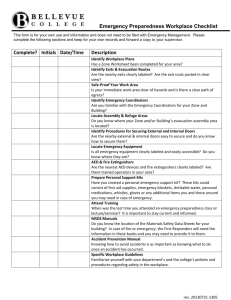

Collaborative Visualization of 3D Point Based Anatomical Model within a Grid Enabled Environment

Ian Grimstead, David Walker and Nick Avis; School of Computer Science, Cardiff University
Frederic Kleinermann; Department of Computer Science, Vrije Universiteit
John McClure; Directorate of Laboratory Medicine, Manchester Royal Infirmary

Abstract

We present a use of Grid technologies to deliver educational content and support collaborative learning. “Potted” pathology specimens present many problems of conservation and appropriate access. The planned increase in medical students further exacerbates these issues, prompting the use of new computer-based teaching methods. A solution to obtaining accurate organ models is to use point-based laser scanning techniques, exemplified by the Arius3D colour scanner. The resulting large datasets yield visually compelling and accurate (in topology and colour) virtual organs, but will often overwhelm local resources, requiring the use of specialised facilities for display and interaction. The Resource-Aware Visualization Environment (RAVE) has been used to collaboratively display these models, supporting multiple simultaneous users on a range of resources. A variety of deployment methods are presented, from web page applets to a direct AccessGrid video feed.

1. Introduction

Medical education has to adapt and adjust to recent legislation and the reduction in the working hours of medical students. There is already some evidence that these changes are impacting negatively on students’ anatomical understanding [Ellis02, Older04].

1.1. Pathology Specimens

The University of Manchester Medical School has over 5,000 “potted” pathology specimens (3,000 on display at any one time) at three sites, which is typical of a large UK teaching school. These specimens have been collected, preserved and documented over a number of years, representing a valuable “gold standard” teaching resource.
The restrictions on harvesting and use of human tissue, coupled with the problems and logistics associated with the conservation of the collections, create serious difficulties for the planned increase in medical students; something has to change. New computer-based teaching methods and materials are appearing, including anatomical atlases that assist the students’ understanding of anatomy, moving away from the more traditional teaching materials. The availability and use of the Visible Human dataset is a good example of how computer-based teaching is predicated on the availability of high quality primary data. However, the construction of high quality teaching material from such data requires tremendous amounts of expert human intervention. There is therefore a compelling need to discover ways of simply and quickly creating and sharing digital 3D “virtual organs” that can be used to aid the teaching of anatomy and human pathology. This is the motivation of our studies.

1.2. Point-Based Models

It is difficult and time consuming to create a library of 3D virtual organs that exposes the trainee to a range of biological variability and disease pathology. Furthermore, such virtual organs must be topologically correct and must also exhibit colour consistency to support the identification of pathological lesions. A solution to obtaining accurate organ models is to use a point-based laser scanner. The Arius3D colour laser system is unique, as far as we are aware, in that it recovers both topology and colour from the scanned object. The Arius3D solution is also attractive because it produces an accurate and visually compelling 3D representation of the organ without resorting to more traditional texture mapping techniques. Whilst this method eases the capture and generation of accurate models, the resulting file sizes and rendering load can quickly overwhelm local resources.
Furthermore, a technique is also required to distribute the models for collaborative investigation and teaching, which can cope with different types of host platform without the user needing to configure the local machine.

2. RAVE – the Resource-Aware Visualization Environment

The continued increases in network speed and connectivity are promoting the use of remote resources via Grid computing, based on the concept that compute power should be as simple to access as electricity on an electrical power grid, hence “Grid Computing”. A popular approach for accessing such resources is Web Services, where remote resources can be accessed via a web server hosting the appropriate infrastructure. The underlying machine is abstracted away, permitting users to remotely access different resources without considering their underlying architecture, operating system, etc. This both simplifies access for users and promotes the sharing of specialised equipment.

Figure 1: RAVE Architecture

The RAVE (Resource-Aware Visualization Environment) is a distributed, collaborative visualization environment that uses Grid technology to support automated resource discovery across heterogeneous machines. RAVE runs as a background process using Web Services, enabling us to share resources with other users rather than commandeering an entire machine. RAVE supports a wide range of machines, from hand-held PDAs to high-end servers with large-scale stereo, tracked displays. The local display device may render all, some or none of the data set remotely, depending on its capability and present loading. The architecture of RAVE has been published elsewhere [Grims04], so we shall only present a brief overview of the system here.

2.1. Architecture

The Data Service is the central part of RAVE (see Figure 2), forming a persistent and centralised distribution point for the data to be visualized.
Data are imported from a static file or a live feed from an external program, either of which may be local or remotely hosted. Multiple sessions may be managed by the same Data Service, sharing resources between users. The data are stored in the form of a scene tree; nodes of the tree may contain various types of data, such as voxels, point clouds or polygons. This enables us to support different data formats for visualization, which is particularly important for the medical applications reported here.

The end user connects to the Data Service through an Active Client, which is a machine that has a graphics processor and is capable of rendering the dataset. The Active Client downloads a copy of (or a subset of) the latest data. It then receives any changes made to the data by remote users, whilst sending any changes made by the local user, who interacts with the locally rendered dataset.

If a local client does not have sufficient resources to render the data, a Render Service can be used instead to perform the rendering and send the rendered frame over the network to the client for display. Multiple render sessions are supported by each Render Service, so multiple users may share available rendering resources. If multiple users view the same session, then only a single copy of the data is stored in the Render Service to save resources.

2.2. Point-Based Anatomical Models

To display point-based models in RAVE, the physical model is first converted into a point cloud dataset via an Arius3D colour laser scanner, retaining surface colour and normal information. This is stored as a proprietary PointStream model, before conversion to an open format for RAVE to use. The three stages are shown in Figure 3, presenting a plastinated human heart; point samples were taken every 200µm, producing a model of approximately 6 million datapoints.
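
To give a feel for why a model of this size can strain a modest client, the following back-of-envelope sketch estimates the uncompressed size of a 6 million point cloud. The per-point layout (32-bit floats for position and normal, 8-bit RGB colour) is an assumption made purely for illustration; it is not the PointStream format nor the open format used by RAVE, and the names `PointLayout` and `cloud_size_mb` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PointLayout:
    """Assumed per-point storage; NOT the PointStream or RAVE format."""
    position_bytes: int = 3 * 4  # x, y, z as 32-bit floats
    normal_bytes: int = 3 * 4    # surface normal as 32-bit floats
    colour_bytes: int = 3 * 1    # RGB, 8 bits per channel

    @property
    def bytes_per_point(self) -> int:
        return self.position_bytes + self.normal_bytes + self.colour_bytes


def cloud_size_mb(num_points: int, layout: PointLayout = PointLayout()) -> float:
    """Uncompressed size of the point cloud in megabytes (10**6 bytes)."""
    return num_points * layout.bytes_per_point / 1e6


if __name__ == "__main__":
    points = 6_000_000  # approximate size of the heart model
    print(f"{PointLayout().bytes_per_point} bytes per point")
    print(f"{cloud_size_mb(points):.0f} MB uncompressed")
```

Under these assumptions each point occupies 27 bytes, so the heart model needs roughly 162 MB before any acceleration structures or duplicate copies are considered, which is already substantial for a hand-held device.
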
The flexible nature of RAVE enables this large dataset to be viewed collaboratively by multiple, simultaneous users, irrespective of their local display system. For instance, a PDA can view and interact with the dataset alongside a high-end graphical workstation.

Figure 4: Plastinated human heart, data viewed from PointStream and corresponding RAVE client

2.3. Collaborative Environment

RAVE supports a shared scenegraph; that is, the structure representing the dataset being viewed is shared between all participating clients. Hence if one client alters the dataset, the change is reflected to all other participating clients. A side-effect of this is that when a client navigates around the dataset, other users can see the client's “avatar” also move around the dataset (an avatar is an object representing the client in the dataset). Clients can then determine where other clients are located or looking, and can opt to fly to other clients' positions. If a client discovers a useful artefact in a dataset, they can leave 3D “markers” for other users, together with a textual description entered by the client. These can be laid in advance by a client (such as a teacher or lecturer), leaving a guided trail for others to follow.

RAVE also supports AccessGrid (AG) [Childers00], which was demonstrated at SAND'05 [Grims05]. A RAVE Thin Client was running in Swansea, along with an AG client. An AG feed was also launched from a Render Service in Cardiff, which was then controlled from the Thin Client in Swansea.

2.4. Ease of Use

To simplify the use of RAVE, we have created a Wizard-style Java applet that can be launched stand-alone or via a web browser. The applet uses UDDI to discover all advertised RAVE Data Services; these are then interrogated in turn to obtain all hosted data sessions (and hence datasets). The GUI also enables the user to launch a client, and contains a notification area for progress messages.
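
The two-step discovery just described (find the advertised Data Services, then ask each one for its sessions) can be sketched as follows. This is a minimal illustration only: `Registry` and `DataService` are hypothetical in-memory stand-ins for a UDDI registry and a RAVE Data Service, the URLs and session names are invented, and no real UDDI or Web Services calls are made.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class DataService:
    """Hypothetical stand-in for a RAVE Data Service endpoint."""
    url: str
    sessions: Dict[str, str] = field(default_factory=dict)  # session -> dataset

    def list_sessions(self) -> Dict[str, str]:
        # A real service would answer a Web Services request here.
        return dict(self.sessions)


@dataclass
class Registry:
    """Hypothetical stand-in for a UDDI registry lookup."""
    services: List[DataService] = field(default_factory=list)

    def find_data_services(self) -> List[DataService]:
        return list(self.services)


def discover_sessions(registry: Registry) -> List[Tuple[str, str, str]]:
    """Return (service url, session, dataset) for every advertised session."""
    found = []
    for service in registry.find_data_services():      # step 1: discover services
        sessions = service.list_sessions()              # step 2: interrogate each
        for session, dataset in sorted(sessions.items()):
            found.append((service.url, session, dataset))
    return found


if __name__ == "__main__":
    registry = Registry([
        DataService("http://data-a.example", {"anatomy-101": "heart"}),
        DataService("http://data-b.example", {}),
    ])
    for url, session, dataset in discover_sessions(registry):
        print(f"{session}: {dataset} hosted at {url}")
```

A service that hosts no sessions simply contributes nothing to the list, so the GUI would only need to present the entries returned.
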
To collaboratively view a dataset, the user simply selects the dataset in the GUI, then enters the size of the visual window they require, the name associated with their avatar and the minimum frames-per-second they are willing to accept. The Wizard then determines if the local host can display the data as an Active Client; if so, it verifies that there is sufficient memory to host the data and that local resources can produce the requested frames per second.

If insufficient resources exist at the local host, then a Thin Client will be used instead. The applet searches (via UDDI) for available Render Services; for each responding Render Service, we examine the available network bandwidth to the client, the memory available for datasets, the remote render speed and whether the requested dataset is already hosted on this service. The most appropriate Render Service is then selected, and a Thin Client is spawned in a new window.

Render Services contain resource-awareness for Thin Client support. During image render and transmission at the Render Service, the image rendering time is compared with the image transmission time. Image compression over the network is adjusted to match transmission time with render time, to maintain the maximum possible framerate. As this will induce lossy compression, an incremental codec is used; if the image does not change, then the loss is progressively reduced, building on previous frames to produce a perfect image over subsequent frame transmissions (forming a golden thread, as in [Bergman86]).

3. Discussion and Conclusion

We have presented systems and methods to allow the creation of 3D virtual organs and their use in a collaborative, distributed teaching environment through the use of various Grid technologies. The availability of high quality datasets is the beginning of the process in creating compelling and useful anatomical teaching tools.
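
As a concrete illustration of the resource-aware behaviour described in Section 2.4, the sketch below shows one way the Active Client check, the Render Service choice and the compression feedback could be expressed. It is not the RAVE implementation: the class and function names are hypothetical, the scoring rule (highest achievable frame rate, ties broken in favour of a service that already hosts the dataset) and the fixed quality step are assumptions, and the incremental codec is not modelled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LocalHost:
    has_gpu: bool
    free_memory_mb: float
    est_fps: float           # frame rate the host expects to achieve


@dataclass
class RenderService:
    name: str
    bandwidth_mbps: float    # measured bandwidth to the client
    free_memory_mb: float
    render_fps: float
    hosts_dataset: bool      # dataset already loaded on this service


def can_be_active_client(host: LocalHost, dataset_mb: float, min_fps: float) -> bool:
    """Active Client needs a GPU, room for the data and the requested frame rate."""
    return (host.has_gpu
            and host.free_memory_mb >= dataset_mb
            and host.est_fps >= min_fps)


def choose_render_service(services: List[RenderService], dataset_mb: float,
                          frame_mbit: float) -> Optional[RenderService]:
    """Pick the service with the best achievable frame rate (assumed rule)."""
    def achievable_fps(s: RenderService) -> float:
        # Limited by whichever is slower: rendering or sending a frame.
        return min(s.render_fps, s.bandwidth_mbps / frame_mbit)

    usable = [s for s in services
              if s.hosts_dataset or s.free_memory_mb >= dataset_mb]
    # Ties are broken in favour of a service that already hosts the dataset.
    return max(usable, key=lambda s: (achievable_fps(s), s.hosts_dataset),
               default=None)


def adjust_quality(quality: float, render_s: float, transmit_s: float,
                   step: float = 0.05) -> float:
    """Nudge compression quality (0.1..1.0) so transmit time tracks render time."""
    if transmit_s > render_s:      # network is the bottleneck: compress harder
        quality -= step
    elif transmit_s < render_s:    # spare bandwidth: send a better image
        quality += step
    return min(1.0, max(0.1, quality))
```

Calling `adjust_quality` once per frame gives a simple feedback loop: quality falls while the network lags behind the renderer and recovers when bandwidth is spare, which is the balance the Render Service aims for.
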
Whilst commodity graphics cards are increasing in performance at more than Moore’s Law rates, their ability to handle large, complex datasets still presents a barrier to the widespread use of this material. Coupled with slow communications links between centralised resources and students, who are often working remotely and in groups (using problem-based teaching methods), these limitations quickly erode some of the advantages of digital assets over their physical counterparts. To this end we have sought to harness Grid middleware to remove the need for expensive, high-capability local resources; instead we harness remote resources in a manner that is seamless and transparent to the end user, to effect a compelling and responsive learning infrastructure.

We have presented methods to allow both the quick and relatively easy creation of 3D virtual organs and their visualization. We maintain that, taken together, these developments have the potential to change present anatomical teaching methods and, we hope, to promote greater understanding of human anatomy and pathology.

4. References

[Avis00] N. J. Avis. Virtual Environment Technologies, Journal of Minimally Invasive Therapy and Allied Technologies, Vol 9(5), pp 333-340, 2000.

[Avis04] Nick J. Avis, Frederic Kleinermann and John McClure. Soft Tissue Surface Scanning: A Comparison of Commercial 3D Object Scanners for Surgical Simulation Content Creation and Medical Education Applications, Medical Simulation, International Symposium, ISMS 2004, Cambridge, MA, USA, Springer Lecture Notes in Computer Science (3078), Stephane Cotin and Dimitris Metaxas (Eds.), pp 210-220, 2004.

[Bergman86] L. D. Bergman, H. Fuchs, E. Grant, and S. Spach. Image rendering by adaptive refinement. Computer Graphics (Proceedings of SIGGRAPH 86), 20(4):29-37, August 1986. Held in Dallas, Texas.

[Childers00] Lisa Childers, Terry Disz, Robert Olson, Michael E. Papka, Rick Stevens and Tushar Udeshi. Access Grid: Immersive Group-to-Group Collaborative Visualization, in Proceedings of the 4th International Immersive Projection Technology Workshop, Ames, Iowa, USA, 2000.

[Ellis02] H. Ellis. Medico-legal litigation and its links with surgical anatomy. Surgery 2002.

[Grims04] Ian J. Grimstead, Nick J. Avis, and David W. Walker. Automatic Distribution of Rendering Workloads in a Grid Enabled Collaborative Visualization Environment, in Proceedings of Supercomputing 2004, held 6th-12th November in Pittsburgh, USA, 2004.

[Grims05] Ian J. Grimstead. RAVE – The Resource-Aware Visualization Environment, presentation at SAND'05, Swansea Animation Days 2005, Taliesin Arts Centre, Swansea, Wales, UK, November 2005.

[Nava03] A. Nava, E. Mazza, F. Kleinermann, N. J. Avis and J. McClure. Determination of the mechanical properties of soft human tissues through aspiration experiments. In MICCAI 2003, R. E. Ellis and T. M. Peters (Eds.), LNCS (2878), pp 222-229, Springer-Verlag, 2003.

[Older04] J. Older. Anatomy: A must for teaching the next generation, Surg J R Coll Edinb Irel., 2 April 2004, pp 79-90.

[Vidal06] F. P. Vidal, F. Bello, K. W. Brodlie, D. A. Gould, N. W. John, R. Phillips and N. J. Avis. Principles and Applications of Computer Graphics in Medicine, Computer Graphics Forum, Vol 25, Issue 1, 2006, pp 113-137.

5. Acknowledgements

This study was in part supported by a grant from the Pathological Society of Great Britain and Ireland and North Western Deanery for Postgraduate Medicine and Dentistry. All specific permissions were granted regarding the use of human material for this study. We also acknowledge the support of Kestrel3D Ltd and the UK’s DTI for funding the RAVE project. We would also like to thank Chris Cornish of Inition Ltd. for his support with dataset conversion.