Fall 2015: Numerical Methods I Assignment 4 (due Nov. 9, 2015)


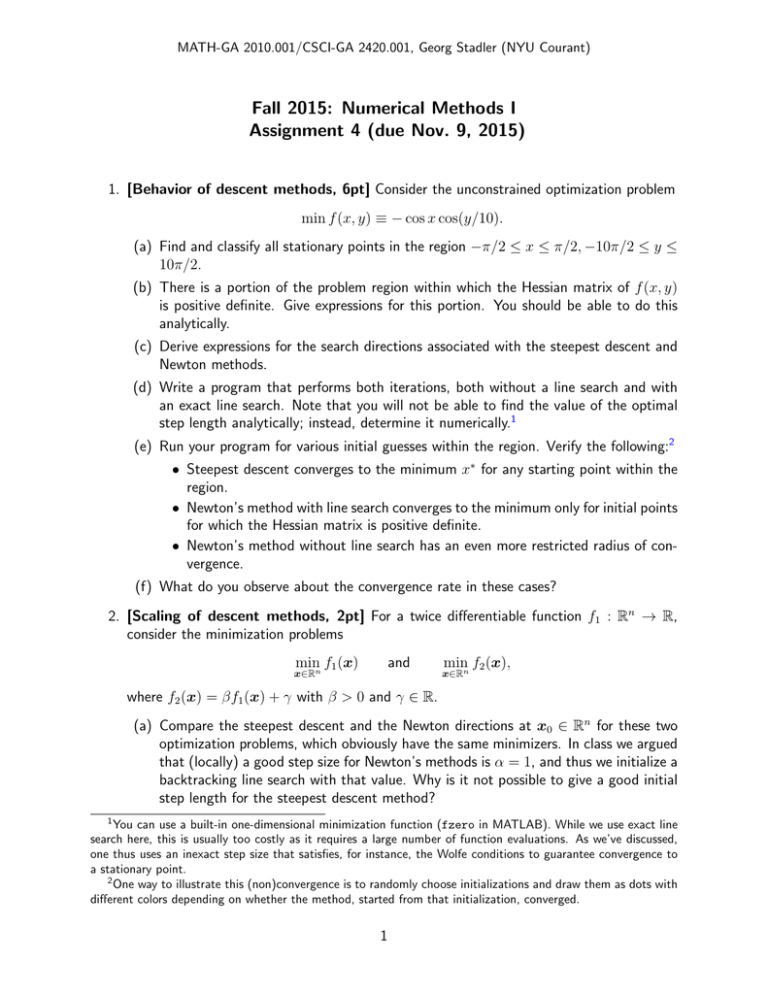

MATH-GA 2010.001/CSCI-GA 2420.001, Georg Stadler (NYU Courant)

1. [Behavior of descent methods, 6pt] Consider the unconstrained optimization problem

$$\min f(x, y) \equiv -\cos x \, \cos(y/10).$$

(a) Find and classify all stationary points in the region $-\pi/2 \le x \le \pi/2$, $-10\pi/2 \le y \le 10\pi/2$.

(b) There is a portion of the problem region within which the Hessian matrix of $f(x, y)$ is positive definite. Give expressions for this portion. You should be able to do this analytically.

(c) Derive expressions for the search directions associated with the steepest descent and Newton methods.

(d) Write a program that performs both iterations, both without a line search and with an exact line search. Note that you will not be able to find the value of the optimal step length analytically; instead, determine it numerically.¹

(e) Run your program for various initial guesses within the region. Verify the following:²

• Steepest descent converges to the minimum $x^*$ for any starting point within the region.
• Newton's method with line search converges to the minimum only for initial points for which the Hessian matrix is positive definite.
• Newton's method without line search has an even more restricted radius of convergence.

(f) What do you observe about the convergence rate in these cases?

2. [Scaling of descent methods, 2pt] For a twice differentiable function $f_1 : \mathbb{R}^n \to \mathbb{R}$, consider the minimization problems

$$\min_{x \in \mathbb{R}^n} f_1(x) \quad \text{and} \quad \min_{x \in \mathbb{R}^n} f_2(x),$$

where $f_2(x) = \beta f_1(x) + \gamma$ with $\beta > 0$ and $\gamma \in \mathbb{R}$.

(a) Compare the steepest descent and the Newton directions at $x_0 \in \mathbb{R}^n$ for these two optimization problems, which obviously have the same minimizers. In class we argued that (locally) a good step size for Newton's method is $\alpha = 1$, and thus we initialize a backtracking line search with that value. Why is it not possible to give a good initial step length for the steepest descent method?
(b) Newton's method for optimization problems can also be seen as a method to find zeros of the gradient $g = \nabla f$, i.e., points $x$ where $g(x) = 0$. Show that the Newton step for $g(x) = 0$ coincides with the Newton step for the modified problem $Bg(x) = 0$, where $B \in \mathbb{R}^{n \times n}$ is a regular matrix.³

¹ You can use a built-in one-dimensional solver (in MATLAB, for instance, fminbnd for minimization, or fzero applied to the derivative of the one-dimensional objective). While we use exact line search here, this is usually too costly as it requires a large number of function evaluations. As we've discussed, one thus uses an inexact step size that satisfies, for instance, the Wolfe conditions to guarantee convergence to a stationary point.

² One way to illustrate this (non)convergence is to randomly choose initializations and draw them as dots with different colors depending on whether the method, started from that initialization, converged.

3. [Globalization of Newton descent, 3+1pt] As we have seen, the Newton direction for solving a minimization problem is only a descent direction if the Hessian matrix is positive definite. This is not always the case, in particular far from the minimizer. To guarantee a descent direction in Newton's method, a simple idea is as follows (where we choose $0 < \alpha_1 < 1$ and $\alpha_2 > 0$):

• Compute a direction $d^k$ by solving the Newton equation $\nabla^2 f(x^k) d^k = -\nabla f(x^k)$. If that is possible and $d^k$ satisfies⁴

$$\frac{-\nabla f(x^k)^T d^k}{\|\nabla f(x^k)\| \, \|d^k\|} \ge \min\left\{\alpha_1, \, \alpha_2 \|\nabla f(x^k)\|\right\}, \qquad (1)$$

then use $d^k$ as descent direction.
• Otherwise, use the steepest descent direction $d^k = -\nabla f(x^k)$.

To illustrate this globalization, let $f : \mathbb{R}^2 \to \mathbb{R}$ be defined by

$$f(x) = \frac{1}{2}(x_1^2 + x_2^2) \exp(x_1^2 - x_2^2).$$

(a) Using the initial iterate $x^0 = (1, 1)^T$, find a local minimum of $f$ using the modified Newton method described above, combined with Armijo line search with backtracking. Hand in a listing of your implementation.⁵

(b) Carry out the computation also with the modified Newton matrix $\nabla^2 f(x) + 3I$. Discuss your findings.
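The direction test (1) combined with Armijo backtracking can be sketched as follows. This is only a minimal Python illustration, not the MATLAB listing the assignment asks for: the helper names, the parameter values (`alpha1`, `alpha2`, `c`, `rho`) and the stand-in objective $(x_1^2 - 1)^2 + x_2^2$ are assumptions chosen for the example, and the $2 \times 2$ Newton system is solved by Cramer's rule to keep the sketch free of dependencies.

```python
import math

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def norm(v):
    return math.sqrt(dot(v, v))

def solve2(H, g):
    """Solve the 2x2 system H d = -g by Cramer's rule; None if H is (nearly) singular."""
    (a, b), (c, d) = H
    det = a * d - b * c
    if abs(det) < 1e-14:
        return None
    return [(-g[0] * d + b * g[1]) / det, (-a * g[1] + c * g[0]) / det]

def descent_direction(g, H, alpha1=0.1, alpha2=1.0):
    """Newton direction if it passes the angle test (1), else steepest descent.
    alpha1 and alpha2 are illustrative values, not prescribed by the assignment."""
    d = solve2(H, g)
    gn = norm(g)
    if d is not None and gn > 0 and norm(d) > 0:
        cos_angle = -dot(g, d) / (gn * norm(d))
        if cos_angle >= min(alpha1, alpha2 * gn):
            return d
    return [-gi for gi in g]

def armijo_step(f, x, g, d, c=1e-4, rho=0.5, max_backtracks=50):
    """Backtrack from t = 1 until f(x + t d) <= f(x) + c t g^T d."""
    t, fx, slope = 1.0, f(x), dot(g, d)
    for _ in range(max_backtracks):
        x_new = [xi + t * di for xi, di in zip(x, d)]
        if f(x_new) <= fx + c * t * slope:
            break
        t *= rho
    return t

def minimize(f, grad, hess, x0, tol=1e-10, max_iter=200):
    """Globalized Newton iteration; returns the final iterate and the iteration count."""
    x = list(x0)
    for it in range(max_iter):
        g = grad(x)
        if norm(g) < tol:
            return x, it
        d = descent_direction(g, hess(x))
        t = armijo_step(f, x, g, d)
        x = [xi + t * di for xi, di in zip(x, d)]
    return x, max_iter

# Hypothetical stand-in objective (NOT the assignment's f): minima at (+-1, 0),
# Hessian indefinite for |x1| < 1/sqrt(3).
def f_demo(x):
    return (x[0] ** 2 - 1) ** 2 + x[1] ** 2

def grad_demo(x):
    return [4 * x[0] * (x[0] ** 2 - 1), 2 * x[1]]

def hess_demo(x):
    return [[12 * x[0] ** 2 - 4, 0.0], [0.0, 2.0]]
```

Calling `minimize(f_demo, grad_demo, hess_demo, [0.2, 1.0])` starts in the region where the Hessian is indefinite, so some iterations fall back to steepest descent before the Newton direction takes over near a minimizer.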
³ This property is called affine invariance of Newton's method, and it is one of the reasons why Newton's method is so efficient. A comprehensive reference for Newton's method is the book by P. Deuflhard, Newton Methods for Nonlinear Problems, Springer 2006.

⁴ This is a condition on the angle between the negative gradient and the Newton direction, which must be less than 90°. However, using this condition, the angle may approach 90° at the same speed as $\|\nabla f(x^k)\|$ approaches zero. Recall from class that what is required to guarantee convergence for a descent method with Wolfe line search is that an infinite sum that involves the square of the right hand side in (1) and the norm of the gradient is finite.

⁵ It is sufficient to hand in a listing of the important parts of your implementation, i.e., Armijo line search and computation of the descent direction.

4. [Modified metric in steepest descent, 2pt] Consider $f : \mathbb{R}^n \to \mathbb{R}$ continuously differentiable, and $x \in \mathbb{R}^n$ with $\nabla f(x) \neq 0$. For a symmetric positive definite matrix $A \in \mathbb{R}^{n \times n}$, we define the $A$-weighted norm $\|y\|_A = \sqrt{y^T A y}$. Derive the unit norm steepest descent direction of $f$ at $x$ with respect to the $\|\cdot\|_A$-norm, i.e., find the solution to the problem⁶

$$\min_{\|d\|_A = 1} \nabla f(x)^T d.$$

Hint: Use the factorization $A = B^T B$ and the Cauchy-Schwarz inequality.

5. [Equality-constrained optimization, 2pt] Solve the optimization problem

$$\min_{x \in \mathbb{R}^2} x_1 x_2$$

subject to the equality constraint $x_1^2 + x_2^2 - 1 = 0$. We know that at minimizer(s) $x^*$ there exists a Lagrange multiplier $\lambda^*$ such that the Lagrangian function $L(x, \lambda) := x_1 x_2 - \lambda(x_1^2 + x_2^2 - 1)$ is stationary, i.e., its partial derivatives with respect to $x$ and $\lambda$, $L_{,x}(x^*, \lambda^*)$ and $L_{,\lambda}(x^*, \lambda^*)$, vanish. Use this to compute the minima of this equality-constrained optimization problem.

6. [Largest eigenvalues using the power method, 2+1pt] The power method to compute the largest eigenvalue requires only the application of the matrix to vectors, not the matrix itself.
To illustrate this, we use the power method to compute the largest eigenvalue of a matrix $A$ of which we only know its action on vectors.

(a) Use the power method (with a reasonable stopping tolerance) to compute the largest eigenvalue of the symmetric matrix $A$, which is implicitly given through a function Afun()⁷ that applies $A$ to vectors $v$. Use the convergence rate to estimate the magnitude of the next largest eigenvalue. Report results for $n = 10, 50$, where $v \in \mathbb{R}^{n^2}$ and $A \in \mathbb{R}^{n^2 \times n^2}$.

(b) Compare your result for the largest two eigenvalues with what you obtain with an available eigenvalue solver.⁸

⁶ If $f$ is twice differentiable with positive definite Hessian matrix, one can choose $A = \nabla^2 f(x)$. This shows that the Newton descent direction is the steepest descent direction in the metric where norms are weighted by the Hessian matrix.

⁷ Download the MATLAB function from http://cims.nyu.edu/~stadler/num1/material/Afun.m.

⁸ In MATLAB, eigs finds the largest eigenvalues of a matrix that is given through its application to vectors.

7. [Stability of eigenvalues, 2+1pt] Let $A_\varepsilon$ be a family of matrices given by

$$A_\varepsilon = \begin{pmatrix} \lambda & 1 & & \\ & \ddots & \ddots & \\ & & \ddots & 1 \\ \varepsilon & & & \lambda \end{pmatrix} \in \mathbb{R}^{n \times n}.$$

Obviously, $A_0$ has $\lambda$ as its only eigenvalue with multiplicity $n$.

(a) Show that for $\varepsilon > 0$, $A_\varepsilon$ has $n$ different eigenvalues given by

$$\lambda_{\varepsilon,k} = \lambda + \varepsilon^{1/n} \exp(2\pi i k/n), \quad k = 0, \ldots, n - 1,$$

and thus that $|\lambda - \lambda_{\varepsilon,k}| = \varepsilon^{1/n}$.

(b) Based on the above result, what accuracy can be expected for the eigenvalues of $A_0$ when the machine epsilon is $10^{-16}$?
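As a hedged illustration of the matrix-free power method from problem 6, the following Python sketch uses only a function that applies the matrix to a vector. The operator `apply_diag` is a hypothetical stand-in for Afun (it applies $\mathrm{diag}(1, \ldots, n)$), and the function names, start vector and tolerance are assumptions made for this example. The rate-based estimate relies on the fact that, for a symmetric matrix, the Rayleigh-quotient error contracts asymptotically by a factor $(\lambda_2/\lambda_1)^2$ per iteration.

```python
import math

def power_method(apply_A, n, tol=1e-10, max_iter=10000):
    """Power iteration that only needs the action v -> A v.
    Returns the eigenvalue estimate, the normalized vector, and all estimates.
    Assumes A v is never the zero vector."""
    v = [1.0 / math.sqrt(n)] * n      # normalized start vector (arbitrary choice)
    history = []
    lam_old = 0.0
    for _ in range(max_iter):
        w = apply_A(v)
        lam = sum(vi * wi for vi, wi in zip(v, w))   # Rayleigh quotient, ||v|| = 1
        history.append(lam)
        w_norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / w_norm for wi in w]
        if abs(lam - lam_old) <= tol * abs(lam):
            break
        lam_old = lam
    return lam, v, history

def estimate_second_eigenvalue(history):
    """Estimate |lambda_2| from the contraction of successive eigenvalue updates.
    Valid for symmetric A, where the update ratio tends to (lambda_2/lambda_1)^2."""
    d1 = history[-2] - history[-3]
    d2 = history[-1] - history[-2]
    return abs(history[-1]) * math.sqrt(abs(d2 / d1))

def apply_diag(v):
    """Hypothetical stand-in for Afun: applies diag(1, 2, ..., n) without forming it."""
    return [(i + 1) * vi for i, vi in enumerate(v)]
```

For the stand-in operator with `n = 5`, `power_method(apply_diag, 5)` should approach the largest eigenvalue 5, and `estimate_second_eigenvalue` applied to the returned history should be close to 4. With the actual Afun, the start vector and tolerance may need adjusting.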