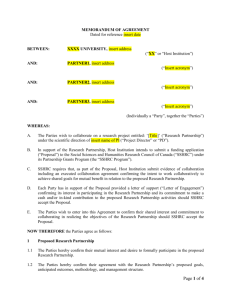

Social Sciences and Humanities Research Council (SSHRC) Departmental Evaluation Plan
