Fundamentals Tools of Modern Multilingual Speech Processing Technology in “Urdu”

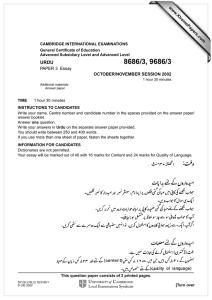

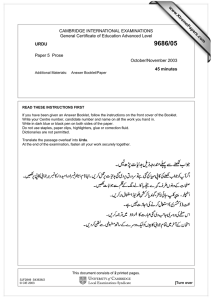

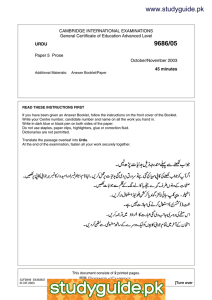

International Journal of Application or Innovation in Engineering & Management (IJAIEM)
Web Site: www.ijaiem.org Email: editor@ijaiem.org
Volume 4, Issue 2, February 2015 ISSN 2319 - 4847

Fundamentals Tools of Modern Multilingual Speech Processing Technology in “Urdu” Language Speech Processing

Mohammed Waseem Ashfaque1, Quadri N. Naveed2, Sayyada Sara Banu3, Quadri S. S. Ali Ahmed4

1. Department of Computer Science & IT, College of Management and Computer Technology, Aurangabad, India
2. Department of Computer Science & IT, King Khalid University, Abha, Saudi Arabia
3. College of Computer Science and Information System, Jazan University, Saudi Arabia
4. Department of Computer Science & IT, College of Management and Computer Technology, Aurangabad, India

ABSTRACT

In this paper we describe the fundamental tools of modern multilingual speech processing technology, taking the Urdu language as a case study, and present a comprehensive review of Urdu speech processing technology. Standard Urdu is a standardized register of the Hindustani language. Urdu is historically associated with the Muslims of the region of India. It is the national language and lingua franca of Pakistan, an official language of six Indian states, and one of the 22 scheduled languages in the Constitution of India. 1920, is a history of the Urdu language from its origins to the development of an Urdu literature. Urdu and Hindi share an Indo-Aryan base, but Urdu is associated with the Nastaliq script style of Persian calligraphy and reads right-to-left, whereas Hindi resembles Sanskrit and reads left-to-right. The earliest linguistic influences in the development of Urdu probably began with the Muslim conquest of Sindh in 711. Urdu is written in its own alphabet but has no explicit word boundaries, similar to several Asian languages, such as Hindi and Persian.
It does have explicit marks for tones, as do the languages of neighboring countries such as Pakistan, Bangladesh, and Afghanistan. Given these unique features, research and development of language and speech processing specifically for Urdu is both necessary and quite challenging.

Keywords: Multilingual speech processing, speech synthesis, speech recognition, speech-to-speech translation, language identification.

1. INTRODUCTION

1.1 Urdu Language at a Glance

Urdu formed from Khariboli, a Prakrit (vernacular) spoken in North India, through the addition of Persian and Arabic words. Contrary to a widely held misconception, it was not formed in the camp of the Mughal armies.[1][2][3][4][5][6] The word Urdu is, however, derived from the same Turkic word ordu (army) that gave English horde.[7] Turkish borrowings in Urdu are nevertheless minimal.[8] The words that Urdu has borrowed from Turkish and Arabic came through Farsi and are therefore Persianized versions of the original words; for instance, the Arabic teh marbuta (ة) changes to heh (ه) or teh (ت).[9] The official language of the Mughal Empire was Persian.[10] With the advent of the British Raj, Persian was replaced by Hindustani written in the Persian script, and this script was used by both Hindus and Muslims. The name Urdu was first used by the poet Ghulam Hamadani Mushafi around 1780.[11][12] From the 13th century until the end of the 18th century, Urdu was commonly known as Hindi. The language was also known by various other names such as Hindavi and Dehlavi. The communal nature of the language lasted until it replaced Persian as the official language in 1837 and was made co-official, along with English.
Urdu was promoted in British India by British policies to counter the previous emphasis on Persian.[13] This triggered a Hindu backlash in northwestern India, which argued that the language should be written in the native Devanagari script. Thus a new literary register, called "Hindi", replaced traditional Hindustani as the official language of Bihar in 1881, establishing a sectarian divide of "Urdu" for Muslims and "Hindi" for Hindus. This divide was formalized with the division of India and Pakistan after independence (though there are Hindu poets who continue to write in Urdu to this day, with post-independence examples including Gopi Chand Narang and Gulzar). At independence, Pakistan established a highly Persianized literary form of Urdu as its national language. There have been attempts to "purify" Urdu and Hindi by purging Urdu of Sanskrit loan words and Hindi of Persian loan words, and new vocabulary draws primarily from Persian and Arabic for Urdu and from Sanskrit for Hindi. This has primarily affected academic and literary vocabulary, and both national standards remain heavily influenced by both Persian and Sanskrit.[14] English has exerted a heavy influence on both as a co-official language.[15]

Fig:1 Phonetics Alphabets of Urdu With Other Languages

1.2 Official Status

Urdu is the national language and one of the two official languages of Pakistan, along with English. It is spoken and understood throughout the country, whereas the state-by-state languages (languages spoken throughout various regions) are the provincial languages. Only 7.57% of Pakistanis have Urdu as their mother language,[16] but Urdu is understood all over Pakistan.
It is used in education, literature, office and court business.[17] It holds in itself a repository of the cultural and social heritage of the country.[18] Although English is used in most elite circles, and Punjabi has a plurality of native speakers, Urdu is the lingua franca and national language of Pakistan. In practice, English is used instead of Urdu in the higher echelons of government.[19] Urdu is also one of the officially recognized languages in India and has official language status in the Indian states of Uttar Pradesh, Bihar,[20] Telangana, Jammu and Kashmir and the national capital, New Delhi. In Jammu and Kashmir, section 145 of the Kashmir Constitution provides: "The official language of the State shall be Urdu but the English language shall unless the Legislature by law otherwise provides, continue to be used for all the official purposes of the State for which it was being used immediately before the commencement of the Constitution."[21] The importance of Urdu in the Muslim world is visible in the Islamic holy cities of Mecca and Medina in Saudi Arabia, where most informational signage is written in Arabic, English and Urdu, and sometimes in other languages.[22]

1.3 Multilingual speech processing

Multilingual speech processing (MLSP) is a distinct field of research in speech and language technology that combines many of the techniques developed for monolingual systems with new approaches that address specific challenges of the multilingual domain. Research in MLSP should be of particular interest to countries such as India and Switzerland, where there are several officially recognized languages and many additional languages are commonly spoken.
In such multilingual environments, the language barrier can pose significant difficulties in both commerce and government administration, and technological advances that help break down this barrier would be of great cultural and economic value.[23][24][25][26] In this paper, we discuss current trends in MLSP and how these relate to advances in the general domain of speech and language technology. Examples of current advances and trends in the field of MLSP are provided in the form of case studies of research being conducted at Idiap Research Institute in the framework of international and national research programmes.

In language, there are normally two forms of communication, namely the spoken form and the written form. Depending on the granularity of representation, these two forms can have different or common representations in terms of basic units. For instance, in the spoken form, phonemes or syllables can be considered the basic unit. Similarly, in (most) written forms, graphemes or characters are the smallest basic units. Above these smaller units, however, the word can be seen as a common unit for both spoken and written forms. The word can in turn be described in terms of the smaller basic units corresponding to the spoken form or the written form. For a given language, there can be a consistent relationship between the spoken form and the written form. However, this relationship may not be the same across languages.
Addressing the issue of a common basic representation across languages is central to MLSP, although this can raise different challenges in applications such as ASR and TTS.[27][28]

1.4 Automatic speech recognition

In the field of ASR, advances have been largely promoted by the US government via the National Institute of Standards and Technology (NIST). Besides running evaluations, one of its main contributions has been to provide databases. This has led naturally to an English language focus for ASR research and development. That is not to say that ASR research is English centric; rather, many of the algorithmic advances have been made initially in that language. Such advances have, however, translated easily to different languages, largely owing to the robustness of statistical approaches to the different specificities of languages. State-of-the-art ASR systems are stochastic in nature and are typically based on hidden Markov models (HMMs). From a spoken language perspective, the different components of a typical HMM-based ASR system for English are as follows [29][30][31][32].
(i) Feature extractor that extracts the relevant information from the speech signal, yielding a sequence of feature observations/vectors. Feature extraction is typically considered a language independent process (i.e., common feature extraction algorithms are used in most systems regardless of language), although in some cases (such as tonal languages) specific processing should be explored.
(ii) Acoustic model that models the relation between the feature vector and units of the spoken form (sound units such as phones).
(iii) Lexicon that integrates lexical constraints on top of the spoken unit level representation, yielding a unit representation that is typically common to both spoken and written forms, such as the word or morpheme.
(iv) Language model that models syntactical/grammatical constraints of the spoken language using the unit representation that results from integrating lexical constraints.[33][34]

1.5 Text-to-speech synthesis

In the domain of TTS, issues in multilingual speech processing have been dominated by two main areas. This is partially due to the limitations of the dominant unit selection paradigm, which directly uses the recordings from voice talents in the generation of synthesized speech. Multilingual techniques in unit selection are thus bound by the limited availability of multilingual voice talents and, more so, by the availability of such recordings to the research community, although some research has been conducted to overcome this. The first area concerns the construction of TTS voices in multiple languages, which is dealt with in detail by Sproat (1997). This area of research is largely concerned with the development of methodologies that can be made portable across languages, and includes issues in text parsing, intonation and waveform generation. Many of these general issues are mirrored in challenges faced by the multilingual ASR community, as discussed in the opening of this section, although many of the related challenges are arguably more complex in TTS due to the extensive use of supra-segmental features and the greater degree of language dependence of such features (there is no ‘international prosodic alphabet’).[35][36][37]

1.6 Automatic language recognition

The objective of automatic language recognition is to recognize the spoken language by automatic analysis of speech data. Automatic language recognition can be classified into two tasks: (i) automatic language identification and (ii) automatic language detection. In principle, this classification is similar to that between speaker identification and speaker verification in speaker recognition research.
The goal of automatic language identification (LID) is to classify a given input speech utterance as belonging to one out of L languages. Possible applications of LID can be found in multilingual speech processing, call routing, interactive voice response applications, and front-end processing for speech translation. There are a variety of cues, including phonological, morphological, syntactical or prosodic cues, that can be exploited by an LID system. In the literature, different approaches have been proposed to perform LID, such as using only low-level spectral information, using phoneme recognizers in conjunction with phonotactic constraints, or using medium- to high-level information (e.g. lexical constraints, language models) through speech recognition.[38][39]

1.7 Recent trends in speech and language processing

We can identify a number of advances in speech and language processing that have significantly impacted MLSP. One of the most important developments has been the rise of statistical machine translation, which has resulted in substantial funding and, consequently, research activity being directed towards machine translation and its related multilingual applications (speech-to-speech, speech-to-text translation, etc.). Several notable projects have been pursued in recent years, to mention only a few: Spoken Language Communication and Translation System for Tactical Use (TRANSTAC, a DARPA initiative), Technology and Corpora for Speech to Speech Translation (TC-STAR, an FP6 European project), and Global Autonomous Language Exploitation (GALE, a DARPA initiative).[40][41][42][43][44] Research in these projects needs to focus not only on the optimization of individual components, but also on how the components can be integrated effectively to provide improved overall output. This is not a trivial task.
For instance, speech recognition systems are typically optimized to reduce word error rate (WER) (or character/letter error rate, CER/LER, in some languages). The WER measure gives equal importance to the different types of error that can be committed by the ASR system, namely deletion, insertion and substitution. If the ASR system output (i.e., the text transcript) is processed by a machine translation system, a deletion error is probably more expensive than the other two errors, as all information is lost. It follows that optimal performance with respect to WER may not provide the best possible translated output, and vice versa. Indeed, in the GALE project (in the context of translating Mandarin speech data to English), it was observed that CER is less correlated with translation error rate than objective functions such as SParseval (a parsing-based word string scoring function).[45][46][47][48]

2. LITERATURE REVIEW

Dialects:- Urdu has a few recognised dialects, including Dakhni, Rekhta, and Modern Vernacular Urdu (based on the Khariboli dialect of the Delhi region). Dakhni (also known as Dakani, Deccani, Desia, Mirgan) is spoken in the Deccan region of southern India. It is distinguished by its mixture of vocabulary from Marathi and Konkani, as well as some vocabulary from Arabic, Persian and Turkish that is not found in the standard dialect of Urdu. Dakhni is widely spoken in all parts of Maharashtra, Telangana, Andhra Pradesh and Karnataka. Urdu is read and written as in other parts of India. A number of daily newspapers and several monthly magazines in Urdu are published in these states.
In terms of pronunciation, the easiest way to recognize a native speaker is their pronunciation of the letter "qāf" (ق) as "ḵẖe" (خ). The Pakistani variant of the language is becoming increasingly divergent from the Indian dialects and forms of Urdu, as it has absorbed many loan words, proverbs and phonetics from Pakistan's indigenous languages such as Pashto, Punjabi, Balochi and Sindhi. Furthermore, due to the region's history, the Urdu dialect of Pakistan draws heavily from the Persian and Arabic languages, and its intonation and pronunciation are more formal compared with corresponding Indian dialects. In addition, Rekhta (or Rekhti), the language of Urdu poetry, is sometimes counted as a separate dialect, one famously used by several poets of high acclaim in the bulk of their work. These included Mirza Ghalib, Mir Taqi Mir and Muhammad Iqbal. Urdu spoken in the Indian state of Odisha is different from Urdu spoken in other areas; it is a mixture of Oriya and Bihari.

Comparison with Hindi language:- Standard Urdu is often contrasted with Standard Hindi. Apart from religious associations, the differences are largely restricted to the standard forms: Standard Urdu is conventionally written in the Nastaliq style of the Persian alphabet and relies heavily on Persian and Arabic as a source for technical and literary vocabulary,[49] whereas Standard Hindi is conventionally written in Devanāgarī and draws on Sanskrit.[50] However, both have large numbers of Arabic, Persian and Sanskrit words, and most linguists consider them to be two standardized forms of the same language,[51][52] and consider the differences to be sociolinguistic,[53] though a few classify them separately.[54] Old Urdu dictionaries also contain most of the Sanskrit words now present in Hindi.[55][56] Mutual intelligibility decreases in literary and specialized contexts that rely on educated vocabulary.
Further, it is quite easy in a longer conversation to distinguish differences in vocabulary and in the pronunciation of some Urdu phonemes. Due to religious nationalism since the partition of British India and continued communal tensions, native speakers of both Hindi and Urdu frequently assert them to be distinct languages, despite the numerous similarities between the two in a colloquial setting. The barrier created between Hindi and Urdu is eroding: Hindi speakers are comfortable with using Persian-Arabic borrowed words[57] and Urdu speakers are also comfortable with using Sanskrit terminology.[58][59]

2.1 Vocabulary

The language's Indo-Aryan base has been enriched by borrowing from Persian and Arabic. There are also a smaller number of borrowings from Chagatai, Portuguese, and more recently English. Many of the words of Arabic origin have been adopted through Persian and have different pronunciations and nuances of meaning and usage than they do in Arabic.

Levels of formality:- Urdu in its less formalised register has been referred to as rek̤h̤tah (ریختہ, [reːxt̪aː]), meaning "rough mixture". The more formal register of Urdu is sometimes referred to as zabān-i Urdū-yi muʿallá (زبانِ اُردُوئے معلّٰى, [zəbaːn eː ʊrd̪u eː moəllaː]), the "Language of the Exalted Camp", referring to the Imperial army.[60] The etymology of a word used in Urdu for the most part decides how polite or refined one's speech is. For example, Urdu speakers distinguish between پانی pānī and آب āb, both meaning "water"; the former is used colloquially and has older Indic origins, whereas the latter is used formally and poetically, being of Persian origin. If a word is of Persian or Arabic origin, the level of speech is considered to be more formal and grand. Similarly, if Persian or Arabic grammar constructs, such as the izafat, are used in Urdu, the level of speech is also considered more formal and grand.
If a word is inherited from Sanskrit, the level of speech is considered more colloquial and personal.[61] This distinction is similar to the division in English between words of Latin, French and Old English origins.

2.2 Politeness and Smoothness

Urdu syntax and vocabulary reflect a three-tiered system of politeness called ādāb. Due to its emphasis on politeness and propriety, Urdu has always been considered an elevated, somewhat aristocratic, language in South Asia. It continues to conjure a subtle, polished affect in South Asian linguistic and literary sensibilities and thus continues to be preferred for song-writing and poetry, even by non-native speakers. Any verb can be conjugated as per three or four different tiers of politeness. For example, the verb to speak in Urdu is bolnā (بولنا) and the verb to sit is baiṭhnā (بیٹھنا). The imperatives "speak!" and "sit!" can thus be conjugated five different ways, each marking subtle variation in politeness and propriety. These permutations exclude a host of auxiliary verbs and expressions that can be added to these verbs to add an even greater degree of subtle variation. For extremely polite, formal or ceremonial situations, nearly all commonly used verbs have equivalent Persian/Arabic synonyms.

Table:1 Comparison with other languages

| Register | "speak!" | Urdu | Devanagari | "sit!" | Urdu | Devanagari |
|---|---|---|---|---|---|---|
| Disparaging / Extremely Casual | [tū] bol! | [تُو] بول | [तू] बोल! | [tū] baiṭh! | [تُو] بیٹھ | [तू] बैठ! |
| Casual and Intimate | [tum] bolo. | [تُم] بولو۔ | [तुम] बोलो | [tum] baiṭho. | [تُم] بیٹھو۔ | [तुम] बैठो |
| Polite and Intimate | [āp] bolo. | [آپ] بولو۔ | [आप] बोलो | [āp] baiṭho. | [آپ] بیٹھو۔ | [आप] बैठो |
| Formal yet Intimate | [āp] boleṉ. | [آپ] بولیں۔ | [आप] बोलें | [āp] baiṭheṉ. | [آپ] بیٹھیں۔ | [आप] बैठें |
| Polite and Formal | [āp] boli'e. | [آپ] بولئے۔ | [आप] बोलिए | [āp] baiṭhi'e. | [آپ] بیٹھئے۔ | [आप] बैठिए |
| Ceremonial / Extremely Formal (Persian) | [āp] farmā'iye. | [آپ] فرمائیے۔ | [आप] फ़रमाइये | [āp] tas̱ẖrīf rakhi'e. | [آپ] تشریف رکھئے۔ | [आप] तशरीफ़ रखिए |

Urdu script:- Urdu is written right-to-left in an extension of the Persian alphabet, which is itself an extension of the Arabic alphabet. Urdu is associated with the Nastaʿlīq style of Persian calligraphy, whereas Arabic is generally written in the Naskh or Ruq'ah styles. Nastaʿlīq is notoriously difficult to typeset, so Urdu newspapers were handwritten by masters of calligraphy, known as katib or khush-navees, until the late 1980s. One handwritten Urdu newspaper, The Musalman, is still published daily in Chennai.[62]

2.3 Kaithi script

Urdu was also written in the Kaithi script. A highly Persianized and technical form of Urdu was the lingua franca of the law courts of the British administration in Bengal, Bihar, and the North-West Provinces & Oudh. Until the late 19th century, all proceedings and court transactions in this register of Urdu were written officially in the Persian script. In 1880, Sir Ashley Eden, the Lieutenant-Governor of Bengal, abolished the use of the Persian alphabet in the law courts of Bengal and Bihar and ordered the exclusive use of Kaithi, a popular script used for both Urdu and Hindi.[63]

2.4 Devanagari script

More recently in India, Urdu speakers have adopted Devanagari for publishing Urdu periodicals and have innovated new strategies to mark Urdu in Devanagari as distinct from Hindi in Devanagari. Such publishers have introduced new orthographic features into Devanagari for the purpose of representing the Perso-Arabic etymology of Urdu words.
One example is the use of अ (Devanagari a) with vowel signs to mimic contexts of ع (ʿain), in violation of Hindi orthographic rules. For Urdu publishers, the use of Devanagari gives them a greater audience, whereas the orthographic changes help them preserve a distinct identity of Urdu.[64]

2.5 Roman script

Urdu is occasionally written in the Roman script. Roman Urdu has been used since the days of the British Raj, partly as a result of the availability and low cost of Roman movable type for printing presses. The use of Roman Urdu was common in contexts such as product labels. Today it is regaining popularity among users of text-messaging and Internet services and is developing its own style and conventions. Habib R. Sulemani says,

2.6 Encoding Urdu in Unicode & Writing/Sound systems

Like other writing systems derived from the Arabic script, Urdu uses the 0600–06FF Unicode range.[63] Certain glyphs in this range appear visually similar (or identical when presented using particular fonts) even though the underlying encoding is different. This presents problems for information storage and retrieval. For example, the University of Chicago's electronic copy of John Shakespear's "A Dictionary, Hindustani, and English"[64] includes the word "بهارت" (India). Searching for the string "بھارت" returns no results, whereas querying with the (identical-looking in many fonts) string "بهارت" returns the correct entry.[65] This is because the Urdu letter do chashmi he (U+06BE), which is used to form aspirate digraphs in Urdu, is visually identical in its medial form to the Arabic letter hā (U+0647; phonetic value /h/). In Urdu, the /h/ phoneme is represented by the character U+06C1, called gol he (round he), or chhoti he (small he).
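The lookup mismatch described above can be reproduced in a few lines. The following is a minimal sketch using only Python's standard `unicodedata` module; the two spellings are built from explicit code points, and the `fold_heh` helper is a hypothetical illustration, not the search logic of any actual dictionary site.

```python
import unicodedata

# Three code points from the Arabic block (0600-06FF) that Urdu text can confuse.
ARABIC_HEH = "\u0647"      # Arabic letter heh, phonetic value /h/
DO_CHASHMI_HE = "\u06BE"   # forms aspirate digraphs in Urdu
GOL_HE = "\u06C1"          # Urdu /h/ (gol he / chhoti he)

for ch in (ARABIC_HEH, DO_CHASHMI_HE, GOL_HE):
    print(f"U+{ord(ch):04X} {unicodedata.name(ch)}")

# The same word spelled with do chashmi he and with Arabic heh.
with_do_chashmi = "\u0628\u06BE\u0627\u0631\u062A"
with_arabic_heh = "\u0628\u0647\u0627\u0631\u062A"

# The strings may render identically in many fonts but compare unequal,
# which is why an exact-match search can miss the entry.
print(with_do_chashmi == with_arabic_heh)


def fold_heh(text: str) -> str:
    """Hypothetical search-time folding: map Arabic heh to do chashmi he.

    Illustration only: U+0647 can also be a genuine /h/, so a real
    system would need context-aware rules rather than a blanket replace.
    """
    return text.replace(ARABIC_HEH, DO_CHASHMI_HE)


print(fold_heh(with_arabic_heh) == fold_heh(with_do_chashmi))
```

Applying the same folding to both the indexed text and the query is one possible way to make visually identical spellings match; whether folding in this direction is appropriate depends on the corpus.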
Table:2 Encoding Urdu in Unicode

2.7 Recent and ongoing MLSP research at Idiap Research Institute

At Idiap, multilingual speech processing is an important research objective given Switzerland’s location within Europe, at the intersection of three major linguistic cultures, and Swiss society itself being inherently multilingual. Towards this end, we have been conducting research in MLSP as part of several internationally and nationally funded projects, including for instance:
(i) EMIME (Effective Multilingual Interaction in Mobile Environments): This FP7 EU project commenced in March 2008 and is investigating the personalization of speech-to-speech translation systems. The EMIME project aims to achieve its goal through the use of HMM-based ASR and TTS; more specifically, the main research goal is the development of techniques that enable unsupervised cross-lingual speaker adaptation for TTS.
(ii) GALE (Global Autonomous Language Exploitation): Idiap was involved in this DARPA-funded project as part of the SRI-led team. The project primarily involved machine translation and information distillation. We have mostly studied the development of new discriminative MLP-based features and MLP combination methods for the ASR components. Despite cessation of the project, we have continued our research on this topic.
(iii) MULTI (MULTImodal Interaction and MULTImedia Data Mining) is a Swiss National Science Foundation (SNSF) project carrying out fundamental research in several related fields of multimodal interaction and multimedia data mining. A recently initiated MULTI sub-project is conducting research in MLP-based methods for language identification and multilingual speech recognition with a focus on Swiss languages.
(iv) COMTIS (Improving the coherence of machine translation output by modelling intersentential relations) is a new machine translation project funded by the SNSF that was started in March 2010.
The project is concerned with modelling the coherency between sentences in machine translation output, thereby improving overall translation performance. In the remainder of the paper, we present three case studies conducted in MLSP at Idiap that have resulted from participation in the above-mentioned research projects.

3. METHODOLOGY

3.1 Fundamental analyses and tools

Certain fundamental tools are critical to research and development of advanced speech processing technology. This section summarizes existing speech, text, and pronunciation modeling tools developed mainly for Urdu. One of the key issues is the analysis of the behavior of tones in Urdu, which is often modulated by phrase and sentence level intonation. Other attributes of speech which have been explored for Urdu include stress and pause. Work conducted on speech segmentation and basic phoneme modeling is also described. For text processing, word and sentence segmentation are reviewed. Fundamental tools for pronunciation modeling are described at the end of this section. The speech analysis tools are as follows:
1. Tone analysis
2. Intonation analysis
3. Stress analysis
4. Pause analysis

3.2 Speech segmentation

Syllable-unit based speech recognition has been pursued by some researchers since this approach makes it easier to incorporate prosodic features, such as tones, into the syllable units. Moreover, some papers have shown a high accuracy for tone recognition when applying this method to isolated syllables and hand-segmented syllables extracted from continuous speech. Automatic segmentation of continuous speech into syllable units is therefore an important issue.
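Frame-level short-time energy and zero-crossing rate are common starting points for this kind of automatic segmentation. The sketch below computes both in plain Python and marks frames where the energy crosses a threshold. The 16 kHz sample rate, the 25 ms frame and 10 ms hop, the threshold value, and the function names are all assumptions chosen for illustration; they are not taken from any of the systems reviewed here.

```python
import math


def frame_features(samples, frame_len=400, hop=160):
    """Return a list of (energy, zero_crossing_rate) pairs, one per frame."""
    feats = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        # Short-time energy: mean squared amplitude of the frame.
        energy = sum(x * x for x in frame) / frame_len
        # Zero-crossing rate: fraction of adjacent sample pairs changing sign.
        crossings = sum(
            1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
        )
        feats.append((energy, crossings / (frame_len - 1)))
    return feats


def candidate_boundaries(feats, energy_floor):
    """Frame indices where energy moves across the floor (rough segment edges)."""
    active = [energy > energy_floor for energy, _ in feats]
    return [i for i in range(1, len(active)) if active[i] != active[i - 1]]


# Synthetic signal at an assumed 16 kHz: silence, a 200 Hz tone, silence.
sample_rate = 16000
tone = [
    0.5 * math.sin(2 * math.pi * 200 * n / sample_rate)
    for n in range(sample_rate // 2)
]
signal = [0.0] * 4000 + tone + [0.0] * 4000

feats = frame_features(signal)
boundaries = candidate_boundaries(feats, energy_floor=0.01)
print(boundaries)
```

On real speech a single fixed energy threshold is rarely sufficient, which is why heuristic rules over several features (energy, zero-crossing rate, pitch) are typically layered on top of measurements like these.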
One study proposed using several prosodic features, including short-time energy, zero-crossing rate, and pitch, with some heuristic rules for syllable segmentation. Another suggested the use of a dual-band energy contour for phoneme segmentation. This method decomposed input speech into a low-frequency and a high-frequency component using wavelet transformation, computed the time-domain normalized energy of both components, and introduced some heuristic rules for selecting endpoints of syllables and phonemes based on the energy contours. Although there is no comparative experiment using other typical techniques, dividing the speech signal into detailed frequency bands before applying energy-based segmentation rules seems to be an effective approach.

3.3 Phoneme modeling

During the early 2000s, a series of research papers on Urdu vowel modeling was published by Chulalongkorn University. Basic features such as formant frequencies were extracted and grouped according to vowels. This research provided the first analysis of Urdu phonemes using a large real-speech corpus, not just a small set of sample utterances. There is still a lack of sufficient research on prosody analysis for Urdu; data collection is not a trivial task, and research on prosody modeling has therefore been substantially delayed. Since 2000, research on basic linguistic features has decreased and the engineering of high-level speech applications such as speech recognition and synthesis has taken priority.

3.4 Text analyses and tools

3.4.1 Morphological analysis

Morphological analysis is a process for segmenting words into sub-word units called morphemes. The morpheme is the shortest textual chunk that still has meaning. Morphological analysis not only helps in finding the pronunciation of a word, but also serves as a necessary process for reducing the number of lexical words in automatic speech recognition (ASR). For Urdu, a morpheme may be as short as a syllable.
Since Urdu contains a large number of monosyllabic words and many polysyllabic words are concatenations of monosyllabic words, morphological analysis in Urdu consists mainly of a joint process of word segmentation and syllabification.

3.4.2 Pronunciation modeling tools

Pronunciation modeling is an important component for various language processing technologies such as speech recognition, text-to-speech synthesis, and spoken document retrieval. The task is to find the pronunciation of a given text by using a pronunciation dictionary and/or a phonological analyzer. This subsection describes the details of these two techniques for Urdu.

3.4.3 Pronunciation dictionary

At present, there are many Urdu dictionaries available both offline and online, in a monolingual format, a bilingual format such as Urdu to English, or a multilingual format, i.e., Urdu to multiple languages. Dictionaries have been constructed for varying purposes, generally for translation and writing tasks. Only a few dictionaries are designed for use in natural language processing research. There are some Urdu dictionaries.

3.4.4 Urdu Speech synthesis

A general structure of TTS is shown in Fig. 2; viewing this structure, research regarding TTS can be classified into four major groups. The first group focuses on the general problem of developing Urdu TTS engines, while the other three groups focus on the various subcomponents of these systems, which include text processing, linguistic/prosodic processing, and waveform synthesis.

Table:3 Some words in Urdu dictionary

| English | Urdu | Transliteration | Notes |
|---|---|---|---|
| Peace be upon you. (hello) | السلامُ علیکم۔ | assalām-ualaikum | lit. "Peace be upon you." (from Arabic). Often shortened to 'salām' |
| Peace be upon you, too. (reply to salam) | وَعلیکُم السلام۔ | waalaikumussalām | lit. "And upon you, peace." Response to assalāmu alaikum. |
| Hello. | آداب (عَرض ہے)۔ | ādāb (arz hai). | lit. "Regards (are expressed).", a very formal secular greeting. |
| Goodbye. | خُدا حافِظ، اللّٰہ حافِظ۔ | khuda hāfiz, allah hāfiz. | lit. "May God be your guardian". "Khuda" from Persian for "God", "Allah" from Arabic for "God". |
| Yes. | ہاں۔ | hāⁿ. | casual. |
| Yes. | جی۔ | jī. | formal. |
| Yes. | جی ہاں۔ | jī hāⁿ. | confident formal. |
| No. | نَہ۔ | nā. | rare. |
| Thank you. | شُکرِیَہ۔ | shukriyā. | from Arabic shukran. |
| Please come in. | تَشریف لائیے | tashrīf la'iyē. | lit. "(Please) bring your honour". |

3.4.5 Development of Urdu TTS

The development of an Urdu TTS engine was published in 1983, in work where a speech-unit concatenation algorithm was applied to Urdu. Two further publications described similar techniques. This paper reviews the progress of Urdu speech technology in five areas of research: fundamental analyses and tools, text-to-speech synthesis (TTS), automatic speech recognition (ASR), speech applications, and language resources. The latest speech recognition systems merge interdisciplinary technologies from signal processing, pattern recognition, natural language, and linguistics into a unified statistical framework. These systems, which have applications in a broad range of signal processing problems, represent a revolution in digital signal processing. Such tools are now capable of recognizing continuous speech input for vocabularies of several thousand words in operational environments. Multilingual speech processing has been a topic of ongoing interest and continues to pose emerging challenges for the research community.

Fig:2 Architecture of Urdu Text to Speech (TTS)

The synthesis approach that is recognized as giving the most human-like speech quality is based on corpus-based unit selection.
In Urdu, such an approach was implemented in the latest version developed at NECTEC. Although the newest Vaja engine produces much higher sound quality than the former unit-concatenation-based engine, the synthetic speech sometimes sounds unnatural, especially when synthesizing non-Urdu words written with Urdu characters. Both text processing and speech synthesis need to improve before engines can reach the quality of the state-of-the-art engines that have been successfully developed for other languages, such as English and Japanese.

3.4.6 Text processing

Text processing in TTS works by finding the most probable sequence of phonemes given an input text. For Urdu, several crucial subtasks are involved. Urdu text has no explicit sentence or word boundary marker. A space is often placed between sentences, but not all spaces are sentence boundary markers. Therefore, text is first tokenized into text chunks using specific characters such as punctuation marks, digits, and spaces. The next subtask is to determine which space characters function as sentence boundary markers. In the algorithms described for sentence break detection, text chunks are segmented into word sequences, and a part of speech (POS) is assigned to each word. A space is then classified as a sentence break based on the POS of the surrounding words. Word segmentation is therefore considered one of the most crucial subtasks, since it is commonly the first necessary step in the processing chain. Algorithms for Urdu word segmentation have been described in the literature. The next subtask is text normalization, where non-verbal words are replaced by their orthographies.
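
The tokenization and normalization subtasks described above can be sketched as follows. This is an illustration under stated assumptions rather than the algorithm of any cited system: the names `tokenize`, `normalize`, and `SPOKEN` are hypothetical, the spoken forms are romanized English placeholders, and the regular expression treats every digit and punctuation character as its own chunk. Real Urdu text with diacritics may need a pattern that keeps combining marks attached to their letters.

```python
import re

# Hypothetical spoken forms, romanized in English for readability; a real
# system would emit Urdu orthography and read multi-digit numbers as a whole.
DIGITS = "zero one two three four five six seven eight nine".split()
SPOKEN = {"$": "dollar", "=": "equals"}
SPOKEN.update({str(i): word for i, word in enumerate(DIGITS)})

# A chunk is one digit, one punctuation/symbol character, or a run of
# letters (underscores included). Whitespace only separates chunks.
CHUNK = re.compile(r"\d|[^\w\s]|[^\W\d]+")


def tokenize(text):
    """Split raw text into chunks at spaces, digits, and punctuation."""
    return CHUNK.findall(text)


def normalize(chunks):
    """Replace chunks that have a known spoken form; keep the rest."""
    return [SPOKEN.get(chunk, chunk) for chunk in chunks]


chunks = tokenize("price = $5 ok")
print(chunks)
print(normalize(chunks))
```

Keeping tokenization and normalization as separate steps mirrors the order of subtasks in the text, so a sentence-break detector or word segmenter could be slotted in between them.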
Non-verbal words include numerals, symbols (e.g., ‘‘$’’ and ‘‘=’’), and abbreviations. The final step in text processing is grapheme-to-phoneme conversion. This issue was discussed extensively in an earlier section.

3.4.7 Linguistic/prosodic processing

In TTS, important prosody features include phrasal pauses, F0, duration, and energy. These basic features form higher-level suprasegmental features such as stress and intonation. In Urdu, the lexical tone is an additional feature that needs to be generated. The lexical tone itself is often modified by stress and phrasal intonation. A number of research sites are investigating Urdu speech prosody. Some have focused exclusively on prosody analysis, whereas others have attempted to model prosody and have used the models for Urdu TTS. This section focuses only on the latter. Lexical tones in Urdu are almost completely described by textual forms. Simple rules or a pronunciation dictionary are useful for determining the lexical tones. Only some specific words, mostly those that originated in the Pali or Sanskrit languages, diverge from the rules.

3.4.8 Urdu speech recognition

Fig. 3 illustrates the major components of a general ASR system. For other languages, such as English, there have been major advancements in each of these areas. For Urdu ASR, we cluster research issues into five groups: general Urdu ASR applications, acoustic modeling, language modeling, noise robustness, and prosody.

3.4.9 Development of Urdu ASR

The first discussion of Urdu isolated syllable recognition appeared in a thesis in which distinctive features were extracted from speech utterances and used for recognition. Since 1995, many academic institutions in Pakistan have presented their inventions for Urdu ASR.
Development started with isolated word recognition, including numeral speech recognition.

Fig. 3: Architecture of Urdu ASR

3.4.10 Urdu speech applications

Since basic research on Urdu speech and the development of large Urdu resources are still limited and, in certain areas, still in their initial stages, research into high-level speech applications presents quite a challenge. According to past literature reviews, three applications have been explored: spoken dialogue systems, speech translation, and speaker recognition. Note that speaker recognition is not particularly language-dependent.

[Figure: block diagrams of tonal-syllable recognition. Blocks: speech input, phonetic-feature analysis, tonal-feature analysis, and either a single tonal-syllable recognizer (HMMs of tonal syllables) or separate base-syllable and tone recognizers (HMMs of base syllables, HMMs of tones), producing a tonal-syllable sequence.]

4. FUTURE WORK PLAN

4.1 Phonetically Rich and Balanced Speech Corpus

A speech corpus is an important requirement for developing any ASR system. The developed corpus contains recordings of 500 Urdu sentences. 400 sentences were written and recorded and were used for training the acoustic model. For testing the acoustic model, 48 additional sentences representing Urdu proverbs were created by an Urdu language specialist.
The speech corpus was recorded by 60 native Urdu speakers (30 male and 30 female) from 11 different Indian countries. It was recorded in a soundproof studio using Sound Forge 8.0 software and took nearly six months to complete, from April 2012 to October 2012. The corpus covers a variety of native Urdu speakers, taking the following characteristics into consideration: age category, nationality, Indian state, profession, and academic qualification. Since this speech corpus contains written and spoken training and testing data from a variety of native Urdu speakers who represent different genders, age categories, nationalities, regions, and professions, and is based on phonetically rich and balanced sentences, it is expected to be useful for developing many Urdu speech- and text-based applications, such as speaker-dependent and speaker-independent ASR, text-to-speech (TTS) synthesis, speaker recognition, and many others.

Fig. 4: Flowchart leading towards the result

4.2 Speech Data Preparation and Analysis

This section covers all the preparation and pre-processing steps we developed in order to produce ready-to-use speech data, which are later used for training the acoustic model.

4.3 Automatic Urdu Speech Segmentation

During the recording sessions, speakers were asked to utter the 500 sentences sequentially, starting with the training sentences followed by the testing sentences. Recordings for a single speaker were saved into one “.wav” file, or sometimes up to four “.wav” files, depending on the number of sessions the speaker needed to finish recording the 415 sentences. Saving every single recording as soon as it is uttered would be time-consuming. Therefore, these larger “.wav” files needed to be segmented into smaller ones, each holding a single recording of a single sentence. We developed a Matlab program that has two functions.
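
The Matlab code is not listed in the paper, and the criterion used to detect start and end points is not stated. As a hedged illustration of the general idea only, the Python sketch below marks utterance boundaries with a short-time energy threshold. The function name `find_utterances` and all parameter values (frame length, threshold, minimum silence) are assumptions chosen for the example, not values from the original program, whose two functions are described next.

```python
def find_utterances(samples, rate, frame_ms=20, threshold=0.01,
                    min_silence_ms=300):
    """Return (start, end) sample indices of high-energy stretches.

    A stretch begins at the first frame whose mean squared amplitude
    exceeds `threshold` and ends once at least `min_silence_ms` of
    consecutive low-energy frames has been seen.
    """
    frame = int(rate * frame_ms / 1000)        # samples per frame
    n_frames = len(samples) // frame
    min_gap = max(1, min_silence_ms // frame_ms)
    segments, start, silence = [], None, 0
    for k in range(n_frames):
        chunk = samples[k * frame:(k + 1) * frame]
        energy = sum(x * x for x in chunk) / frame
        if energy > threshold:
            if start is None:
                start = k                      # utterance begins here
            silence = 0
        elif start is not None:
            silence += 1
            if silence >= min_gap:
                # End the utterance at the first silent frame of the gap.
                segments.append((start * frame, (k - silence + 1) * frame))
                start, silence = None, 0
    if start is not None:                      # recording ended mid-utterance
        segments.append((start * frame, (n_frames - silence) * frame))
    return segments


# Synthetic recording at 1 kHz: silence, speech, silence, speech, silence.
signal = [0.0] * 400 + [0.5] * 400 + [0.0] * 400 + [0.5] * 200 + [0.0] * 100
print(find_utterances(signal, rate=1000))
```

Each returned pair could be written to a text file next to an utterance name, which is the role the paper assigns to “segments.txt”.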
The first function, “read.m”, reads the original larger “.wav” files, identifies the starting and ending points of each sentence utterance, and generates a text file, “segments.txt”, that automatically assigns a name to each utterance and stores the name together with the corresponding starting and ending points. The second function, “segment.m”, reads the automatically generated text file “segments.txt” and, using the starting and ending points listed there, splits the original larger “.wav” file into smaller “.wav” files carrying the names given in “segments.txt”.

4.4 Manual Classification and Validation of Correct Speech Data

The automatic Urdu speech segmentation explained in the previous subsection outputs all possible “.wav” files in a single directory. Therefore, these “.wav” files were manually classified into the corresponding sentence directories. Wrongly pronounced utterances were ignored at this stage. As a result, only correct utterances are considered in further pre-processing steps.

4.5 Directory Structure, Sound Filenames Convention and Automatic Generation of Training and Testing Transcription Files

Each speaker has a single folder that contains three sub-folders, namely “Training Sentences”, “Testing Sentences”, and “Others”. The “Training Sentences” sub-folder contains 400 sub-folders representing the 400 training sentences, whereas the “Testing Sentences” sub-folder contains 48 sub-folders representing the 51 testing sentences. The “Others” sub-folder contains out-of-content utterances for each speaker. Each sentence sub-folder contains two further sub-folders, namely “Correct” and “Wrong”.
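
Assuming the folder layout just described (speaker folder, then “Training Sentences” or “Testing Sentences”, then one folder per sentence, then “Correct” or “Wrong”), a minimal Python sketch of collecting the usable recordings might look like this. The function name `correct_utterances` and the sample folder names in the usage lines are hypothetical, and the paper's actual filename convention is not given, so none is assumed here.

```python
from pathlib import Path


def correct_utterances(corpus_root, subset="Training Sentences"):
    """List (speaker, sentence, wav_path) for files under 'Correct'.

    Expected layout:
    <corpus_root>/<speaker>/<subset>/<sentence>/Correct/*.wav
    """
    root = Path(corpus_root)
    found = []
    for wav in sorted(root.glob(f"*/{subset}/*/Correct/*.wav")):
        sentence_dir = wav.parent.parent           # .../<sentence>
        speaker_dir = sentence_dir.parent.parent   # .../<speaker>
        found.append((speaker_dir.name, sentence_dir.name, wav))
    return found


if __name__ == "__main__":
    # Example call on a hypothetical corpus directory.
    for speaker, sentence, wav in correct_utterances("corpus"):
        print(speaker, sentence, wav.name)
```

A listing of this kind is the natural input for generating training and testing transcription files, since each row ties one audio file to one sentence.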
Utterances classified under the sub-folder “Correct” are the ones used in further preprocessing steps.

4.6 Speech Corpus Testing and Evaluation for Urdu ASR Systems

This section covers three major requirements for Urdu ASR, namely the Urdu phonetic dictionary, acoustic model training, and language model training. An evaluation of the Urdu ASR system is finally presented.

Fig. 5: System of speech translation mechanism

4.7 Software/Interfacing

Efforts are underway to develop more sophisticated and user-friendly Urdu support on computers and the Internet, the most widespread of which is the InPage desktop publishing package. Microsoft has included Urdu language support in all new versions of Windows, and Microsoft Office 2007 is available in Urdu through Language Interface Pack support. Most Linux desktop distributions allow easy installation of Urdu support and translations as well. Apple implemented the Urdu keyboard across mobile devices in its iOS 8 update in September 2014, and recent versions of the Android operating system also provide Urdu plug-ins on various devices.

Fig. 6: Urdu plug-in support on various devices

5. CONCLUSION

In this paper we work towards developing a compatible, high-performance Urdu ASR system based on the fundamental tools currently available in multilingual speech processing technology, as applied to Urdu speech processing, together with a phonetically rich and balanced speech corpus. This work also includes creating transcriptions with full diacritical marks and building an Urdu phonetic dictionary and an Urdu statistical language model.
Recognition results show that this Urdu ASR system is speaker-independent and performs considerably better than many other reported Urdu recognition results.