

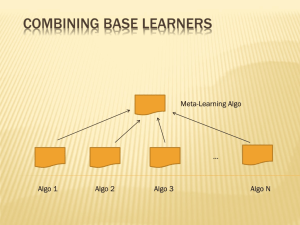

International Journal of Application or Innovation in Engineering & Management (IJAIEM)
Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com
Volume 2, Issue 8, August 2013 ISSN 2319 - 4847

An Experimental Study on Ensemble of Decision Tree Classifiers

G. Sujatha1, Dr. K. Usha Rani2
1 Assistant Professor, Dept. of Master of Computer Applications, Rao & Naidu Engineering College, Ongole
2 Associate Professor, Department of Computer Science, Sri Padmavati Mahila Viswavidyalayam (Women's University), Tirupati
Andhra Pradesh, India

ABSTRACT
Data mining is the process of analyzing large quantities of data and summarizing it into useful information. Over the years, classification has played an important role in data mining as well as in machine learning, statistics, neural networks and many expert systems. Different classification algorithms have been successfully implemented in various applications. Popular applications of classification algorithms include scientific experiments, image processing, fraud detection, medical diagnosis and many more. In recent years, medical data classification, especially tumor data classification, has attracted great interest among researchers. Decision tree classifiers are used extensively for different types of tumor cases. In this study the ID3, C4.5 and Simple CART classifiers are combined with the ensemble techniques boosting and bagging, and their accuracy and time complexity are compared on the classification of two tumor datasets.

Keywords: Data mining, Classification, Decision trees, Bagging, Boosting, Tumor datasets

1. INTRODUCTION
Large collections of medical data are valuable resources from which potentially new and useful knowledge can be discovered through data mining.
Data mining is an increasingly popular field that exploits statistical, visualization, machine learning, and other data manipulation and knowledge extraction techniques. Data mining [1] is the process of analyzing data from various perspectives and summarizing it into useful, meaningful information. Many data mining algorithms and tools have been developed for feature selection, clustering, rule framing and classification. Data mining tasks can be divided into two types: descriptive tasks, which discover general interesting patterns in the data, and predictive tasks, which predict the behavior of the model on available data. The key steps in Data Mining (DM) are: problem definition, data exploration, data preparation, modeling, evaluation and deployment [2]. Data mining is attractive because of the falling cost of large storage devices and the increasing ease of collecting data over networks; the development of robust and efficient machine learning algorithms to process the data; and the falling cost of computational power, which enables the use of computationally intensive methods for data analysis [3].

The application of DM facilitates systematic analysis of medical data, which is often voluminous and unstructured. Classification, a data mining task, is an effective method for categorizing data in the knowledge discovery process. Decision tree algorithms, one family of classification methods, are widely used in the medical field to classify medical data for diagnosis. The decision tree is a high-impact tool for classification [4]-[7]. In a decision tree, knowledge is represented in the form of rules, which makes the model easy for the user to understand. Each internal node represents a test on an attribute, each branch represents an outcome of the test, and each leaf node represents a label or class. To classify an unknown record, its attributes are tested against the tree starting from the root until a leaf is reached; the sequence of tests defines a path.
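The root-to-leaf traversal described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `Node` class, the `classify` function, and the toy attributes `size` and `margin` are hypothetical and are not taken from the datasets or the Weka tool used in this study.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """Decision tree node: internal nodes test an attribute, leaves carry a label."""
    attribute: Optional[str] = None                            # attribute tested here
    branches: Dict[str, "Node"] = field(default_factory=dict)  # test outcome -> child
    label: Optional[str] = None                                # set only on leaf nodes


def classify(node, record):
    """Follow one path from the root to a leaf; return (class label, path taken)."""
    path = []
    while node.label is None:              # stop as soon as a leaf is reached
        value = record[node.attribute]     # outcome of the test at this node
        path.append((node.attribute, value))
        node = node.branches[value]        # follow the matching branch
    return node.label, path


# Hypothetical toy tree; two different leaves assign the same class "benign".
tree = Node(attribute="size", branches={
    "small": Node(label="benign"),
    "large": Node(attribute="margin", branches={
        "smooth": Node(label="benign"),
        "irregular": Node(label="malignant"),
    }),
})

label, path = classify(tree, {"size": "large", "margin": "irregular"})
print(label, path)
```

Each entry of `path` corresponds to one condition of the rule that the tree applies to the record, which is why a decision tree can be read as a set of rules.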
Though each leaf node has an exclusive path from the root, many leaf nodes can make the same classification.

By combining the individual opinions of several experts, automated decision-making applications can reach the most informed final decision. This procedure, known under various names such as Committee of Classifiers, Multiple Classifier Systems, Mixture of Experts or Ensemble-Based Systems, produces more favorable results than single-expert systems under a variety of scenarios and for a broad range of applications. Some of the ensemble-based algorithms are Bagging, Boosting, AdaBoost, Stacked Generalization and Hierarchical Mixture of Experts.

Medicine is an age-old field with greater complexity and more data than most other fields. Data mining on medical data can support tasks ranging from simple classification to highly accurate prediction. The advantage of applying classification to medical data is that it gives an overall picture of the data based on various attributes, so that complexity is reduced and anomalies become easier to detect. Cancer is one such disease that is widespread in India. Statistically, India is found to have a high rate of increase in cancer patients. The main cause of cancer is a tumor, an abnormal cell growth that can be either benign or malignant. Benign tumors are non-invasive, while malignant tumors are cancerous and spread to other parts of the body. With the rapid advancement in information technology, many different data mining techniques and approaches have been applied to complementary medicines for tumors [8]. Cancer data is highly complex because of the various types of cancer and the various methods of diagnosis.
The paper is organized as follows: Section 2 deals with related work, decision tree construction and ensemble systems. In Section 3 experimental results are compared, and Section 4 presents the conclusion.

2. BACKGROUND

2.1 Overview of Related Work
A literature survey showed that there have been several studies on the survivability prediction problem using the ID3, C4.5 and Simple CART algorithms. However, we could find only a few studies related to medical diagnosis and survivability using data mining approaches such as decision trees.

Aik Choon Tan et al. [9] focused on three different supervised machine learning techniques in cancer classification, namely the C4.5 decision tree and bagged and boosted decision trees. They performed classification tasks on seven publicly available cancerous microarray datasets and compared the classification/prediction performance of these methods. They observed that ensemble learning (bagged and boosted decision trees) often performs better than single decision trees in this classification task. Jinyan Li, Huiqing Liu et al. [10] experimented on ovarian tumor data to diagnose cancer using C4.5 with and without bagging. Tan AC et al. [11] used the C4.5 decision tree and bagged decision trees on seven publicly available cancerous microarray datasets and compared the prediction performance of these methods. Abdelghani Bellaachia et al. [12] conducted experiments on SEER (Surveillance, Epidemiology, and End Results) public-use datasets using naive Bayes, back-propagated neural networks and C4.5 decision tree algorithms. They found that C4.5 gave better results than the other algorithms. Parvesh Kumar et al. [13] conducted experiments on a colon dataset using different classification algorithms. Among these, C4.5 and Bayesian logistic regression had lower values of relative absolute error. Kotsiantis et al.
[14] studied Bagging, Boosting and a combination of Bagging and Boosting as a single ensemble, using different base learners such as C4.5, Naive Bayes, OneR and Decision Stump. These were evaluated on several benchmark datasets from the UCI Machine Learning Repository. J.R. Quinlan [15] performed experiments with the ensemble methods Bagging and Boosting, choosing C4.5 as the base learner. S. Seema et al. [16] applied an ensemble classification technique to various microarray gene expression cancer datasets to distinguish between healthy and cancerous samples. The results showed that ensemble classifiers give better accuracy than individual classifiers when applied to various cancer datasets. Dong-Sheng Cao et al. [17] proposed a new decision tree based ensemble method combined with Bagging to find structure-activity relationships in the area of chemometrics related to the pharmaceutical industry. CaiLing Dong et al. [18] proposed a modified boosted decision tree for breast cancer detection to improve the accuracy of classification. Jaree Thangkam et al. [19] worked on the survivability of breast cancer patients. The data considered for analysis came from the Srinagarind hospital databases for the period 1990-2001. In this approach CART was used as the base learner. D. Lavanya et al. [20] proposed a hybrid method to enhance the classification accuracy of breast cancer datasets. In this method, feature selection is used to eliminate attributes that have no significance in the application process. The experimental results showed that the hybrid approach, which combines preprocessing and bagging with CART, enhanced the classification accuracy on the selected datasets. D. Lavanya et al. [21] experimented with Simple CART on medical datasets with feature selection and ensemble techniques. They showed that Bagging is preferable to Boosting for the diagnosis of breast cancer data.

2.2 Ensemble Methods
Ensemble methods are learning algorithms that construct a set of classifiers and then classify new data points by taking a vote of their predictions. The original ensemble method is Bayesian averaging, but more recent algorithms include error-correcting output coding, Bagging, and Boosting. Ensemble systems aim to improve the confidence with which a correct decision is made, through a process in which various opinions are weighed and combined to reach a final decision. Some of the reasons for using ensemble-based systems are [22]:
• Too much or too little data: The amount of data may be too large to be analyzed effectively by a single classifier. Conversely, when the training data are inadequate, resampling techniques can be used to draw overlapping random subsets of the data, and each subset can be used to train a different classifier.
• Statistical reasons: Combining the outputs of several classifiers by averaging may reduce the risk of selecting a poorly performing classifier.
• Confidence estimation: The decision of a properly trained ensemble is usually correct if its confidence is high and usually incorrect if its confidence is low. Using this approach, the ensemble decisions can be used to estimate the posterior probabilities of the classification decisions.
• Divide and conquer: A particular classifier may be unable to solve certain problems because the decision boundary between the classes is too complex. In such cases, the complex decision boundary can be approximated by combining different classifiers appropriately.
• Data fusion: A single classifier is not adequate to learn the information contained in datasets with heterogeneous features (i.e. data obtained from various sources, where the nature of the features differs).
Applications in which data from different sources are combined to make a more informed decision are referred to as data fusion applications, and ensemble-based approaches are most suitable for such applications.

2.2.1 Bagging
Bagging [23], a name derived from bootstrap aggregation, was the first effective method of ensemble learning and is one of the simplest methods of arcing. The meta-algorithm, which is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of model for classification or regression. The method creates multiple versions of a training set by using the bootstrap, i.e. sampling with replacement. Each of these datasets is used to train a different model. The outputs of the models are combined by averaging (in the case of regression) or voting (in the case of classification) to create a single output. Bagging is only effective when using unstable non-linear models, i.e. models for which a small change in the training set can cause a significant change in the model.

2.2.2 Boosting (Including AdaBoost)
Boosting [24], [25] is a meta-algorithm which can be viewed as a model averaging method. It is the most widely used ensemble method and one of the most powerful learning ideas introduced in the last twenty years. Originally designed for classification, it can also be profitably extended to regression. One first creates a 'weak' classifier, that is, one whose accuracy on the training set need only be slightly better than random guessing. A succession of models is then built iteratively, each one trained on a dataset in which the points misclassified (or, with regression, poorly predicted) by the previous model are given more weight. Finally, all of the successive models are weighted according to their success, and their outputs are combined using voting (for classification) or averaging (for regression), thus creating a final model.
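To make the reweighting idea concrete, the following is a minimal AdaBoost-style sketch in pure Python. It is an illustration under stated assumptions, not the Weka implementation used in the experiments of this paper: the weak learner is a decision stump on a single numeric feature, class labels are encoded as +1 and -1, and the function names (`train_stump`, `boost`, `predict`) and the six-point toy dataset are hypothetical.

```python
import math


def stump_predict(x, threshold, polarity):
    """Decision stump: predict `polarity` when x >= threshold, else the opposite."""
    return polarity if x >= threshold else -polarity


def train_stump(xs, ys, weights):
    """Return (weighted error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            # Weighted error: total weight of the misclassified points.
            err = sum(w for w, x, y in zip(weights, xs, ys)
                      if stump_predict(x, threshold, polarity) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def boost(xs, ys, rounds):
    """Build `rounds` stumps, giving more weight to points misclassified so far."""
    n = len(xs)
    weights = [1.0 / n] * n                       # start from uniform weights
    model = []
    for _ in range(rounds):
        err, threshold, polarity = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)     # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)   # vote weight of this stump
        model.append((alpha, threshold, polarity))
        # Misclassified points are scaled up, correctly classified ones down.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]    # renormalize to a distribution
    return model


def predict(model, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in model)
    return 1 if score >= 0 else -1


# Toy data that no single stump separates: + + - - + +
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

single = boost(xs, ys, rounds=1)
combined = boost(xs, ys, rounds=3)
print([predict(single, x) for x in xs])
print([predict(combined, x) for x in xs])
```

On this toy set a single stump misclassifies two points, whereas the weighted vote of three stumps reproduces all six labels, which illustrates how several weak classifiers can combine into a stronger one.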
The original boosting algorithm combined three weak learners to generate a strong learner.

AdaBoost
AdaBoost [26], short for 'adaptive boosting', is the most popular boosting algorithm. It uses the same training set over and over again (thus the set need not be large) and can combine an arbitrary number of base learners. AdaBoost is adaptive in the sense that subsequent classifiers are tweaked in favor of those instances misclassified by previous classifiers. AdaBoost is sensitive to noisy data and outliers. In some problems, however, it can be less susceptible to overfitting than most learning algorithms. The classifiers it uses can be weak (i.e., display a substantial error rate), but as long as their performance is slightly better than random (i.e. their error rate is smaller than 0.5 for binary classification), they will improve the final model. Even classifiers with an error rate higher than would be expected from a random classifier will be useful, since they will have negative coefficients in the final linear combination of classifiers and hence behave like their inverses. AdaBoost generates and calls a new weak classifier in each of a series of rounds $t = 1, \ldots, T$. For each call, a distribution of weights $D_t$ is updated that indicates the importance of each example in the dataset for the classification.

3. EXPERIMENTAL RESULTS
The Primary tumor data is collected from the UCI Machine Learning Repository [27] and the Colon tumor data is collected from the Bioinformatics Group Seville [28]; both are publicly available. Experiments are conducted on these datasets. In our previous study [29] it was observed that C4.5 is the best classifier for these tumor datasets when the datasets are used as available. It was further observed that ID3 and C4.5 are the best for the enhanced datasets and exhibit equal classification accuracy on both datasets. The results of the previous study are given in Table 2 for reference. The following table shows the characteristics of the selected tumor datasets.
Table 1: Characteristics of data sets

If the selected data contains missing values or empty cell entries, it must be preprocessed. In the preprocessing step those entries are replaced with the mean of the respective attribute. Table 2 shows the accuracy of the ID3, C4.5 and CART algorithms on the normal-size and double-size datasets using 10-fold cross validation.

Table 2: Correctly classified instances

In this study, to further analyze decision tree classifiers on these datasets, the ensemble techniques Bagging and Boosting are applied to the ID3, C4.5 and Simple CART algorithms. Experiments are conducted with the Weka tool using 10-fold cross validation to compare the classification accuracies. The accuracies of these algorithms with Boosting are given in Table 3 and shown graphically in Fig 3.1.

Table 3: ID3, C4.5 and Simple CART with Boosting - Accuracy

The table shows that C4.5 with Boosting is more accurate than the other algorithms on Primary-Tumor, while on Colon-Tumor C4.5 and CART exhibit equal accuracy with Boosting. ID3 is less accurate than C4.5 on both datasets. The classifier accuracy on the two datasets is shown as a bar graph in Fig 3.1.

Fig 3.1: Comparison of Algorithms with Boosting

The accuracies of ID3, C4.5 and CART with Bagging are given in Table 4.

Table 4: ID3, C4.5 and Simple CART with Bagging - Accuracy

The table shows that C4.5 with Bagging gives better results than Simple CART and ID3 with Bagging on both datasets.
The classifier accuracy on the two datasets is shown as a bar graph in Fig 3.2.

Fig 3.2: Comparison of Algorithms with Bagging

The accuracies of ID3, C4.5 and CART with Bagging and Boosting are compared in Table 5.

Table 5: ID3, C4.5 and Simple CART with Bagging and Boosting - Accuracy (%)

| Data set | ID3 + Bagging | ID3 + Boosting | C4.5 + Bagging | C4.5 + Boosting | Simple CART + Bagging | Simple CART + Boosting |
|---|---|---|---|---|---|---|
| PrimaryTumor | 38.05 | 38.64 | 43.95 | 40.41 | 43.36 | 38.05 |
| ColonTumor | 66.13 | 54.84 | 82.26 | 77.42 | 80.65 | 77.42 |

The above table shows that C4.5 with Bagging gives better results than the other algorithms. The accuracies are shown as a bar graph in the following figure.

Fig 3.3: Comparison of classifiers with ensemble methods

It is observed that C4.5 with Bagging classifies both datasets at higher rates than the other techniques.

Table 6: ID3, C4.5 and Simple CART with Bagging and Boosting - Execution time to build a model

From the table it is also clearly observed that the execution time of C4.5 with Bagging is much lower than that of the other techniques on both datasets.

To observe the performance of the classifiers on enhanced datasets, the number of instances is doubled and the experiments are repeated. The performance in terms of accuracy and time complexity is presented in Table 7 and Table 8.

Table 7: Enhanced datasets - Accuracy (%)

| Data set | ID3 + Bagging | ID3 + Boosting | C4.5 + Bagging | C4.5 + Boosting | Simple CART + Bagging | Simple CART + Boosting |
|---|---|---|---|---|---|---|
| PrimaryTumor | 77.58 | 77.73 | 66.37 | 62.98 | 62.24 | 74.04 |
| ColonTumor | 96.78 | 95.97 | 91.94 | 93.55 | 95.97 | 95.16 |

Table 7 shows that, on the enhanced datasets, ID3 with Boosting is the most accurate for Primary-Tumor, whereas for Colon-Tumor ID3 is again the most accurate but with Bagging. The time taken to build a decision tree model using the ID3, C4.5 and CART classifiers with ensemble techniques on the enhanced tumor datasets is given in Table 8.

Table 8: Enhanced datasets - Execution time

This table shows that C4.5 with Boosting takes the least time to build a model among the three classifiers. Accuracy is more important than execution time for the classification of tumor datasets. In this context, the ID3 algorithm with ensemble techniques exhibits better accuracy than the other methods.

4. CONCLUSION
In this study the ID3, C4.5 and Simple CART classifiers with boosting and bagging techniques have been compared in terms of accuracy and time complexity for the classification of two tumor datasets. The experiments show that C4.5 with Bagging is the best algorithm for determining whether a tumor is benign or malignant when the tumor datasets are used as available. When the number of instances in the datasets is increased, ID3 with Boosting is best for the Primary tumor dataset and ID3 with Bagging is best for the Colon tumor dataset.

References
[1] [2] [3] [4] [5] [6]
J. Han and M. Kamber, "Data Mining: Concepts and Techniques", Morgan Kaufmann Publishers, 2000.
T. M. Mitchell, "Machine learning and data mining", Communications of the ACM, vol. 42, no. 11, 1999.
J. Han and M. Kamber, "Data Mining: Concepts and Techniques", Morgan Kaufmann Publishers, 2001.
Zhou ZH, Jiang Y. "Medical diagnosis with C4.5 Rule preceded by artificial neural network ensemble", IEEE Trans Inf Technol Biomed. 2003 Mar; 7(1), pp. 37-42.
Lundin M, Lundin J, Burke HB, Toikkanen S, Pylkkanen L, Joensuu H. "Artificial neural networks applied to survival prediction in breast cancer", Oncology 1999; 57:281-6.
[7] Delen D, Walker G, Kadam A. "Predicting breast cancer survivability: a comparison of three data mining methods", Artificial Intelligence in Medicine. 2005 Jun; 34(2):113-27.
[8] K. Balachandran and Dr. R. Anitha, "Supervisory expert system approach for pre-diagnosis of lung cancer", IJAEA, January 2010.
[9] Tan, Gilbert, "Ensembling machine learning on gene expression data for cancer classification", Proceedings of New Zealand Bioinformatics Conference, Te Papa, Wellington, New Zealand, 13-14 February 2003.
[10] Jinyan Li, Huiqing Liu, See-Kiong Ng and Limsoon Wong, "Discovery of significant rules for classifying cancer diagnosis data", Bioinformatics 19 (Suppl. 2), Oxford University Press, 2003.
[11] Tan AC, Gilbert D. "Ensemble machine learning on gene expression data for cancer classification", Appl Bioinformatics. 2003; 2 (3 Suppl), pp. 75-83.
[12] Abdelghani Bellaachia and Erhan Guven, "Predicting breast cancer survivability using data mining techniques", www.siam.org, mas id: 5251529
[13] Parvesh Kumar, Siri Krishan Wasan, "Analysis of Cancer Datasets Using Classification Algorithms", IJCSNS, Vol. 10, No. 6, June 2010, pp. 175-182.
[14] S.B. Kotsiantis and P.E. Pintelas, "Combining Bagging and Boosting", International Journal of Information and Mathematical Sciences, 1:4, 2005.
[15] J.R. Quinlan, "Bagging, Boosting and C4.5", in Proceedings Fourteenth National Conference on Artificial Intelligence, 1994.
[16] S. Seema, Srinivas KG et al., "Ensemble Classifiers with Stepwise Feature Selection for Classification of Cancer Data", International Journal of Pharmaceutical Science and Health Care, Issue 2, Vol. 6, December 2012, pp. 48-61.
[17] Dong-Sheng Cao, Qing-Song Xu, Yi-Zeng Liang and Xian Chen, "Automatic feature subset selection for decision tree-based ensemble methods in the prediction of bioactivity", Chemometrics and Intelligent Laboratory Systems, 2010.
[18] CaiLing Dong, YiLong Yin and XiuKun Yang, "Detecting Malignant Patients via Modified Boosted Tree", Science China Information Sciences, 2010.
[19] Jaree Thangkam, Guandong Xu, Yanchun Zang and Fuchun Huang, HDKM'08: Proceedings of the Second Australian Workshop on Health Data and Knowledge Management, Vol. 80.
[20] D. Lavanya and Dr. K. Usha Rani, "Ensemble Decision Tree Classifiers for Breast Cancer Data", International Journal of Information Technology Convergence and Services, Vol. 2, No. 1, Feb 2012, pp. 17-24.
[21] D. Lavanya and Dr. K. Usha Rani, "Ensemble Decision Making System for Breast Cancer Data", International Journal of Computer Applications, Vol. 51, No. 17, August 2012, pp. 19-23.
[22] Robi Polikar, "Ensemble Based Systems in Decision Making", IEEE Circuits and Systems Magazine, 2006.
[23] L. Breiman, "Bagging predictors", Machine Learning, 26, 1996, pp. 123-140.
[24] Y. Freund and R.E. Schapire, "A short introduction to boosting", J. Jpn. Soc. Artificial Intelligence, 14(5):771, 1991.
[25] Y. Freund and R.E. Schapire, "Experiments with a new boosting algorithm", in Proceedings of the 13th International Conference on Machine Learning, pp. 148-156, 1996.
[26] Y. Freund and R.E. Schapire, "Decision-theoretic Generalization of Online Learning and an Application to Boosting", Journal of Computer and System Sciences, vol. 55, no. 1, pp. 119-139, 1997.
[27] UCI Irvine Machine Learning Repository, www.ics.uci.edu/~mlearn/MLRepository.html.
[28] Bioinformatics Group Seville, http://www.upo.es/eps/bigs/datasets.html
[29] G. Sujatha and Dr. K. Usha Rani, "Evaluation of Decision Tree Classifiers on Tumor Data sets", The International Journal of Emerging Trends & Technology in Computer Science (IJETTCS), accepted for publication in Vol. 2, Issue 4, July-August 2013.