DISSERTATION PROPOSAL DEFENSE


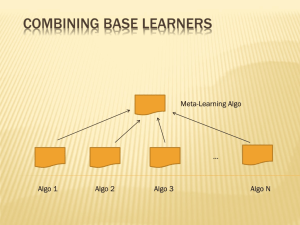

Boosting and Bagging For Fun and Profit

Hal Elkins, David Lucus, Keith Walker

Ensemble Methods

- Improve the predictive performance of a given statistical model-fitting technique
- Run a base procedure many times while changing the input data
- Estimates are linear or non-linear combinations of the iteration estimates
- Originally used for machine learning and for data and text mining
- Attracting attention due to their relative simplicity and the popularity of bootstrapping
- Are ensemble methods useful to academic researchers?

Bagging

- Bootstrap aggregating for improving unstable estimation schemes: Breiman (1996)
- Variance reduction for the base procedure: Bühlmann and Yu (2002)
- Bagging requires user-specified input models
- Steps:
  - Step 1) Construct a bootstrap sample (sampling with replacement)
  - Step 2) Compute the estimator
  - Step 3) Repeat
- Base procedure bias is increased

Boosting

- Boosting proposed by Schapire (1990) and Freund (1995)
- Nonparametric optimization: useful if we have no idea for a model
- Bias reduction
- Steps:
  - Step 1) Initialize: apply the base procedure
  - Step 2) Compare residuals
  - Step 3) Repeat
- Variance is increased

Enterprise Miner: Bagging & Boosting

Enterprise Miner: Bagging

- Control: Ensemble (under the Model menu)
- Inputs: the outputs of other models
  - Regression
  - Decision Tree
  - Neural Networks
- Settings: limited and VERY BLACK BOX

Enterprise Miner: Bagging

- How to view output
  - Connect the Ensemble output to a Regression node
  - Use the Comparison node to compare the bagged model with the input models
- Note: the Ensemble will only outperform the input models if there is large disagreement among the input models (AAEM_61 Manual)

Year 1 Analysis

Year 2 Analysis

Enterprise Miner: Boosting

- Control: Gradient Boosting (under the Model menu)
- Input: Data Partition or Dataset
- Settings (MANY)
  - Assessment Measures
  - Tree Size Settings
  - Iterations
  - Etc.
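Returning to the Bagging and Boosting step lists from the earlier slides, the following is a minimal sketch of both loops in plain Python. It is not what Enterprise Miner does internally. Several choices are assumptions made only for illustration: the base procedure is a one-split regression stump (`fit_stump`), the boosting variant fits each new stump to squared-error residuals with a hypothetical `shrinkage` factor, and the toy data (`xs`, `ys`) are synthetic.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Illustrative base procedure: a one-split regression stump on one feature."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not left or not right:
            continue  # a split must leave points on both sides
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lm, rm)
    if best is None:  # all x values identical: fall back to a constant
        constant = mean(ys)
        return lambda x: constant
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagging(xs, ys, n_rounds=50, seed=0):
    """Bagging: fit the base procedure on bootstrap samples, then average."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_rounds):                            # Step 3: repeat
        idx = [rng.randrange(n) for _ in range(n)]       # Step 1: sample with replacement
        models.append(fit_stump([xs[i] for i in idx],    # Step 2: compute the estimator
                                [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)          # aggregate by averaging


def boosting(xs, ys, n_rounds=50, shrinkage=0.5):
    """Residual-fitting boosting: each round fits the base procedure to what is left."""
    models = []
    residuals = list(ys)                                 # Step 1: first fit is on y itself
    for _ in range(n_rounds):                            # Step 3: repeat
        stump = fit_stump(xs, residuals)
        models.append(stump)
        residuals = [r - shrinkage * stump(x)            # Step 2: update the residuals
                     for x, r in zip(xs, residuals)]
    return lambda x: shrinkage * sum(m(x) for m in models)


# Synthetic toy data (an assumption for the demo): a noisy quadratic.
xs = [i / 10 for i in range(40)]
noise = random.Random(1)
ys = [x ** 2 + noise.gauss(0, 0.5) for x in xs]


def mse(model):
    """Mean squared error of a fitted model on the toy training data."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


single = fit_stump(xs, ys)
bagged = bagging(xs, ys)
boosted = boosting(xs, ys)
print("single stump MSE:", mse(single))
print("bagged stumps MSE:", mse(bagged))
print("boosted stumps MSE:", mse(boosted))
```

The two loops differ in what changes between iterations: bagging changes the sample and keeps the target fixed, while boosting keeps the sample and changes the target to the current residuals. The printed errors are training errors on toy data, so they illustrate the mechanics rather than out-of-sample performance.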
Enterprise Miner: Boosting

- Outputs
  - List of variable importance
  - List of the number of decision rules containing each variable
- Hook to a Regression node for more information
- Compare with other models
  - Use the Model Comparison node

Example

- Gradient Boost Regression AIC: -5868.21
- Base Regression AIC: -2866.43
- Base Neural Network AIC: -2981.86

Enterprise Miner: Boosting

A Boosting Story (Another data set)

- Prediction of graduation from TTU
- Data 2004-2007 (SAT, ACT, HSRank%, Parent Education, Income Level)
- Texas Census Level data, matched on the student's high school county code (20+ variables)
- After boosting, 1 variable had predictive power for graduation from TTU

Previous Use

- Manescu, C. & Starica, C. 2009. Do corporate social responsibility scores explain and predict firm profitability? A case study on the publishers of the Dow Jones Sustainability Indexes. Working Paper, Gothenburg University.
- Bagging and Boosting to determine if CSR measures affect ROA

Model Comparisons (Data Courtesy Dr. Romi)

Dependent variable: $(\text{Change in CSR Performance})_{i,t+n}$

$$\text{OLS} = \alpha + \beta_1[\text{CSO}]_{i,t} + \beta_2\text{COMMITTEE}_{i,t} + \beta_3\Delta\text{SIZE}_{i,t+1} + \beta_4\Delta\text{ROA}_{i,t+1} + \beta_5\Delta\text{FIN}_{i,t+1} + \beta_6\Delta\text{LEV}_{i,t+1} + \beta_7\text{GLOBAL}_{i,t} + \beta_8\text{CEOCHAIR}_{i,t} + \beta_9\text{HIER}_{i,t} + \beta_{10}\text{ESI}_{i,t} + \beta_{11}\text{LITIGATION}_{i,t} + \beta_{12}\text{EXPERT}_{i,t} + \varepsilon$$

$$\text{Boosting} = \alpha + \beta_1[\text{CSO}]_{i,5} + \beta_2\text{COMMITTEE}_{i,t} + \beta_3\text{GLOBAL}_{i,t} + \beta_4\text{CEOCHAIR}_{i,t} + \beta_5\text{HIER}_{i,t} + \varepsilon$$

$$\text{Bagging All} = \alpha + \beta_1[\text{CSO}]_{i,4} + \beta_2[\text{CSO}]_{i,5} + \beta_3\text{COMMITTEE}_{i,t} + \beta_4\text{GLOBAL}_{i,t} + \beta_5\text{EXPERT}_{i,t} + \varepsilon$$

Is Either Useful to Us?

- What we thought
  - Both useful in model selection and refinement
- What we concluded
  - Bagging for settling different model possibilities
  - Bagging helps determine model disagreement on "black box" models
  - Boosting for grounded theory
  - Boosting for a starting model point

References

- Breiman, L.: Bagging predictors. Mach. Learn. 24, 123–140 (1996)
- Bühlmann, P., Yu, B.: Analyzing bagging. Ann. Stat. 30, 927–961 (2002)
- Freund, Y.: Boosting a weak learning algorithm by majority. Inform. Comput. 121, 256–285 (1995)
- Schapire, R.E.: The strength of weak learnability. Mach. Learn. 5, 197–227 (1990)

Course Notes

- George, Jim et al.: Applied Analytics Using SAS Enterprise Miner 6.1, Course Notes, 2009

Working Paper

- Manescu, C. & Starica, C. 2009. Do corporate social responsibility scores explain and predict firm profitability? A case study on the publishers of the Dow Jones Sustainability Indexes. Working Paper, Gothenburg University.

THANK YOU! QUESTIONS?