International Journal of Application or Innovation in Engineering & Management... Web Site: www.ijaiem.org Email: , Volume 1, Issue 1, September 2012

advertisement

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

A Method for Preserving Secret Information in

the Data Mining through Cryptography

K. Marco Dlfonse

Department Of Electronics Computer Engineering

Islamic Azad University, Iran, Arak

ABSTRACT

Data mining will extract vital information from massive knowledge collections – however generally these collections square

measure split among numerous parties. Real knowledge is just too valuable and therefore troublesome to get. thus we have a

tendency to should offer Privacy for that knowledge. Privacy considerations could stop the parties from directly sharing the

info, and a few forms of info regarding the info. during this paper, we have a tendency to 1st offer an outline of privacy

conserving data processing specializing in knowledge sources, then we have a tendency to compare 2 technologies employed in

privacy conserving data processing. the primary technology is knowledge obscuration. The second technology is cryptological

(Encryption primarily based and Secret-sharing). Secret-sharing is recently being thought-about as a a lot of economical

approach.

Keywords: Privacy Preserving, data mining, SMC, Cryptography

1. INTRODUCTION

There has recently been a surge in interest in privacy conserving information mining [2, 1, 17, 14, 22, 18]. Even the

favored press has picked informed this trend[10, 16]. However, the construct of what's meant by privacy isn’t clear.

during this paper we have a tendency to define a number of the ideas that square measure self-addressed during this

line of analysis, and supply a roadmap for outlining and understanding privacy constraints and conjointly we have a

tendency to discuss numerous techniques for conserving privacy. typically once individuals verbalise privacy, they

assert “keep data concerning Pine Tree State from being on the market to others”. However, their real concern is that

their data not be exploited. The concern is that after data is discharged, it'll be not possible to stop misuse.The real

information is incredibly valuable and troublesome to get. therefore we have a tendency to should offer security to it

information. Utilizing this distinction – guaranteeing that {a data|a knowledge|an data} mining project won’t alter

misuse of non-public information – opens opportunities that “complete privacy” would stop. To do this, we'd like

technical and social solutions that guarantee information won't be another read is company privacy – the discharge {of

information|of information|of knowledge} a couple of assortment of information instead of a personal data item. i'll not

fret concerning somebody knowing my birthdate, mother’s cognomen, or Social Security number; however knowing all

of them permits fraud. This collected data downside scales to giant, multiindividual collections likewise. a way that

guarantees no individual information is unconcealed should still unharness data discharged describing the gathering as

a full. Such “corporate information” is mostly the goal of information mining, however some results should still result

in issues (often termed a secrecy, instead of privacy, issue.)

Data mining is intended by the large-scale information assortment efforts by firms and government organizations

with the aim of turning huge amounts of information into helpful data. Machine learning, computing, Statistics, and

Databases square measure used in data processing so as to return up with information centrical techniques for

extracting models from huge information collections. The extracted models can be in several forms, like rules, patterns,

or call trees. Most of the profitable applications of information mining concern humans. thus a substantial proportion of

the collected information is concerning individuals and their activities. this is often why data processing and privacy

discussions square measure indivisible currently. in reality some data processing comes weren't funded thanks to

privacy issues. The analysis efforts on privacy conserving data processing. during this paper we have a tendency to

show numerous techniques and that we conjointly compare crytographic techniques with information obscuration .

Cryptography is a crucial component of any strategy to handle message transmission security needs. Cryptography is

that the study of ways of sending messages in disguised kind therefore that solely the meant recipients will remove the

disguise and browse the message. it's the sensible art of converting messages or knowledge into a different kind, such

no-one will browse them while not having access to the 'key'. The message could also be regenerate employing a 'code'

(in that case every character or group of characters is substituted by associate degree alternative one), or a 'cypher' or

'cipher' (in that case the message as a full is converted, instead of individual characters).Cryptology is that the science

Volume 1, Issue 1, September 2012

Page 50

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

underlying cryptography. Scientific discipline is the science of 'breaking' or 'cracking' encryption schemes, i.e.

discovering the decryption key. Cryptologic systems are generically classified on 3 independent dimensions [2].

Methodology for remodeling plain text to cipher text: All coding algorithms area unit supported two general

principles: substitution, in which every component within the plaintext is mapped into another component, and

transposition, during which parts within the plaintext area unit rearranged. The fundamental demand is that no

information be lost.

Methodology for variety of keys used: If each sender and receiver use a similar key, the system is spoken as

symmetric, single-key, secret-key, or conventional coding. If the sender and receiver every use a distinct key, the system

is spoken as isosceles, two keys, or public-key coding.

Methodology for process plain text: A block cipher processes the input one block of parts at a time, producing an

output block for every input block. A stream cipher processes the input elements unceasingly, manufacturing output one

component at a time, because it goes on.

The planned formula uses a substitution cipher technique. It is a symmetric key formula mistreatment the technique

of stream cipher.

2. DATA OBSCURING

One approach to privacy is to obscure or disarrange the knowledge: creating non-public data accessible, however

with enough noise additional that precise values (or approximations decent to permit misuse) can't be determined. One

approach, usually employed in census knowledge, is to combination things. Knowing the typical financial gain for a

part isn't enough to see the particular financial gain of a resident of that neighborhood. an alternate is to feature

random noise to knowledge values, then mine the distorted knowledge. whereas this lowers the accuracy of knowledge

mining results, analysis has shown that the loss of accuracy will be little relative to the loss of ability to estimate a

private item. we are able to reconstruct the first distribution of a set of obscured numeric values, sanctioning higher

construction of call trees[2, 1]. this might change knowledge collected from an online survey to be obscured at the

supply – the proper values would ne'er leave the respondent’s machine – making certain that precise (misusable)

knowledge doesn’t exist. a way has conjointly been developed for association rules, sanctioning valid rules to be learned

from knowledge wherever things are indiscriminately additional to, or off from, individual transactions[13].

Consider a state of affairs during which 2 or a lot of parties owning confidential databases would like to run an

{information} mining formula on the union of their databases while not revealing any unnecessary information. as an

example, think about separate medical establishments that would like to conduct a joint analysis whereas conserving

the privacy of their patients. during this state of affairs it's needed to shield privileged data, however it's conjointly

needed to change its use for analysis or for alternative functions. especially, though the parties understand that

combining their knowledge has some mutual profit, none of them is willing to reveal its information to the other party.

In this case, there's one central server, and lots of shoppers (the medical institutions), every having a bit of data. The

server collects this data and builds its combination model exploitation, as an example, a classification formula or

associate formula for mining association rules. usually the ensuing model now not contains in person distinctive data,

however contains solely averages over massive teams of shoppers.

The usual answer to the on top of downside consists in having all shoppers send their personal data to the server.

However, many of us are getting progressively involved regarding the privacy of their personal knowledge. they'd wish

to avoid giving out way more regarding themselves than is needed to run their business with the corporate. If all the

corporate wants is that the combination model, an answer is most well-liked that reduces the speech act of personal

knowledge whereas still permitting the server to make the model.

One risk is as follows: before causation its piece of knowledge, every consumer perturbs it in order that some true

data is got rid of and a few false data is introduced. This approach is termed organization or knowledge obscuration.

Another risk is to decrease preciseness of the transmitted knowledge by miscalculation, suppressing sure values,

substitution values with intervals, or substitution categorical values by a lot of general classes up the categorization

hierarchy. The usage of organization for conserving privacy has been studied extensively within the framework of

applied math databases. in this case, the server incorporates a complete and precise information with the data from its

shoppers, and it's to create a version of this information public, for others to figure with. One necessary example is

census data: the govt. of a rustic collects non-public data regarding its inhabitants, and so must flip this knowledge into

a tool for analysis and economic designing.

2.1 Numerical Randomization

Let every shopper Ci, i = 1, 2, . . . ,N, have a numerical attribute xi. Assume that every xi is AN instance of variate

Xi, wherever all Xi area unit freelance and identically distributed. The additive distribution operate (the same for each

Xi) is denoted by FX. The server needs to be told the operate FX, or its shut approximation; this is often the mixture

Volume 1, Issue 1, September 2012

Page 51

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

model that the server is allowed to grasp. The server will recognize something regarding the shoppers that's derived

from the model, however we'd wish to limit what the server is aware of regarding the particular instances xi.

The paper [4] proposes the subsequent resolution. every shopper randomizes its xi by adding thereto a random shift

Yi. The shift values Yi area unit freelance identically distributed random variables with additive distribution operate

FY; their distribution is chosen before and is understood to the server. Thus, shopper Ci sends randomised price zi = xi

+ Yi to the server, and also the server’s task is to approximate operate FX given FY and values z1, z2, . . . , zN. Also,

it's necessary to know a way to select FY so

The server will approximate FX moderately well, and

The worth of zi doesn't disclose an excessive amount of regarding xi.

The amount of revelation is measured in [4] in terms of confidence intervals. Given confidence came around, for

every randomised price z we are able to outline AN interval [z − w1, z + w2] such for all nonrandomized values x

we've

P [Z − w1<= x <= Z + w2 |Z = x + Y,Y~fy ] >= C%.

In alternative words, here we tend to think about AN “attack” wherever the server computes a c%-likely interval for

the non-public price x given the randomised price z that it sees. The shortest breadth w = w1 + w2 for a confidence

interval is employed because the quantity of privacy in school confidence level. Once the distribution operate FY is

decided and also the information is randomised, the server faces the reconstruction problem: Given FY and also the

realizations of N i.i.d. random samples Z1, Z2, . . , ZN, wherever Zi = Xi + Yi, estimate FX. In [4] this drawback is

resolved by AN reiterative algorithmic rule supported Bayes’ rule. Denote the density of Xi (the by-product of FX) by

fX, and also the density of Yi (the by-product of FY) by fY ; then the reconstruction algorithmic rule is as follows:

1. f 0X : = uniform distribution;

2. j:= zero // Iteration number;

3. Repeat

4. till (stopping criterion met).

For potency, the density functions fjX area unit approximated by piecewise constant functions over a partition of the

attribute domain into k intervals I1, I2, . . . , Ik. The formula within the algorithmic rule higher than is approximated

by (m(It) is that the centre of It):

It can also be written in terms of cumulative distribution functions, where FX((a, b]) = FX(b) − FX(a) = P[a <X <= b]

and

N(Is)

is

the

number

of

randomized

values

zi

inside

interval

Is:

Experimental results show that the category prediction accuracy for call trees made over randomised information (using

By category or Local) within reason shut (within 5%–15%) to the trees made over original information, even with

significant enough organisation to own 95%-confidence intervals as wide because the whole vary of Associate in

Nursing attribute. The coaching set had a hundred,000 records.

2.2 Itemset organisation

Papers [6 ; 7] think about organisation of categorical information, within the context of association rules. Suppose

that every consumer Ci features a group action ti, that may be a set of a given finite set of things I, |I| = n. For any set A

I, its supporting the dataset of transactions T = is outlined because the fraction of transactions containing A as their

subset:

an itemset A is frequent if its support is a minimum of a precise threshold smin. Associate in Nursing association

rule A B may be a try of disjoint itemsets A and B; its support is that the sup-port of A B, and its confidence is that the

fraction of transactions containing A that conjointly contain B:

Volume 1, Issue 1, September 2012

Page 52

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

An association rule holds for T if its sup-port is a minimum of smin and its confidence is a minimum of cmin, that is

another threshold. Association rules were introduced in [2], and [3] presents economical algorithmic program Ap-riori

for mining association rules that hold for a given dataset. the thought of Ap-riori is to create use of antimonotonicity

property:

Conceptually, it 1st finds frequent 1-item sets, then checks the support of all 2-item sets whose 1-subsets ar frequent,

then checks all 3-item sets whose 2-subsets ar frequent, etc. It stops once no candidate itemsets (with frequent subsets)

may be fashioned. it's simple to visualize that the matter of finding association rules may be reduced to finding frequent

itemsets. A natural thanks to disarrange a group things|of things} is by deleting some things and inserting some new

items. A select-a-size organisation operator is outlined for a set group action size |t| = m and has 2 parameters: a

organisation level 0-ρ one and a chance distribution (p[0], p[1], . . . , p[m])over set .Given a group action t of size m,

the operator generates a randomised transaction t1 as follows:

1. The operator selects Associate in Nursing whole number j at ran-dom from the set so that P[j is selected] = p[j].

2. It selects j things from t, uniformly willy-nilly (without replacement). this stuff, and no alternative things of t, are

placed into t1.

3. It considers every item a t successively and tosses a coin with chance ρ of “head-s” and 1 − of “tails”. All those

things that the coin faces “heads” ar accessorial to t1. If totally different shoppers have transactions of various

sizes, then select-a-size parameters have to be compelled to be chosen for every group action size. So, this

(nonrandomized) size needs to be transmitted to the server with the randomised group action.

Data obscuration is effective each within the net and company model. Obscuration may be done by the individual (if

the receiver isn’t trusted), or by the holder of information (to cut back issues concerning broken security.) However,

obscuring information falls into a legal area. Rules like Europe 95/46 and HIPAA would most likely read on an

individual basis placeable information with values obscured as protected, notwithstanding the precise values ar

unknowable. However, obscuration may well be as or more practical than aggregation ways used on in public on the

market census information at protective actual information values. Demonstrating the effectiveness {of information|of

knowledge|of information} obscuration compared with census data might improve public acceptance, and cause

changes in legal standards. information obscuration techniques might even be accustomed make sure that otherwise

placeable information isn’t on an individual basis placeable. Re-identification experiments have shown that information

that may be viewed as non-identifiable, like birth date and code, will together enable identification of a personal [21].

Obscuring the information might create reidentification not possible, so meeting each the spirit and letter of privacy

laws.

3. CRYPTOGRAPHY-BASED TECHNIQUES

One problem with the above is the tradeoff between privacy and accuracy of the data mining results. Can we do

better? Using the concept of Secure Multiparty Computation, the answer is clearly yes – in the “web survey” example,

the respondents can engage in a secure multiparty computation to obtain the results, and reveal no information that is

not contained in the results. However getting thousands of respondents to participate synchronously in a complex

protocol is impractical. While useful in the corporate model, it is not appropriate for the web model. Here we present a

solution based on a moderately trusted third party – the party is not trusted with exact data, but trusted only not to

collude with the “data receiver”. There are various means of achieving privacy, both technical and nontechnical. Part of

the problem is the need to create a solution which is feasible in terms of efficiency, security, and without limitations in

usability. Technical solutions can be formulated without restrictions in usability, by making suitable assumptions. By a

judicious use of nontechnical mechanisms, we can realize these assumptions in real life.

As an example of a definition of privacy, and its limitations, let us look at Secure Multiparty Computation

(SMC)[23, 11]. The idea of SMC is that the parties involved learn nothing but the results. Informally, imagine we have

a trusted third party to which all parties give their input. The trusted party computes the output and returns it to the

parties. SMC enables this without the trusted third party. There may be considerable communication between the

parties to get the final result, but the parties don’t learn anything from this communication. The computation is secure

if given just one party’s input and output from those runs, we can simulate what would be seen by the party. In this

case, to simulate means that the distribution of what is actually seen and the distribution of the simulated view over

many runs are computationally indistinguishable. We may not be able to exactly simulate every run, but over time we

cannot tell the simulation from the real runs. Since we could simulate the runs from knowing only our input and

output, it makes sense to say that we don’t learn anything from the run other than the output. This seems like a strong

guarantee of privacy, and has been used in privacy preserving data mining work[15, 7, 8]. We must be careful when

using Secure Multiparty Computation to define privacy. For example, suppose we use a SMC technique to build a

Volume 1, Issue 1, September 2012

Page 53

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

decision tree from databases at two sites[15], classifying people into high and low risk for a sensitive disease. Assume

that the non-sensitive data is public, but the sensitive data (needed as training data to build the classifier) cannot be

revealed.

The SMC computation won’t reveal the sensitive data, but the resulting classifier will enable all parties to estimate

the value of the sensitive data. It isn’t that the SMC was “broken”, but that the result itself violates privacy. Perfect

privacy in the SMC sense implies that there is absolutely no release of any meaningful information to any third party.

Current e-commerce transactions have a trusted (central) third party with access to all the information. The “trust” is

governed by legal contracts enjoining the improper release of information. In some cases, the third party is dispensed

with and contracts exist between the interested parties themselves. This is obviously insecure from the technical

perspective. Though it has been proven that a SMC solution does exist for any functionality, the computation and/or

communication required may be high. Other factors, such as the need for continual online availability of the parties,

create further restrictions and problems in real world settings such as a web-based survey.

However, if we jettison the idea of using only the interested parties, we can obtain a middle ground solution that does

not require a fully trusted third party. We can instead use a fixed number of untrusted, noncolluding parties/sites to do

the computation.

Assume the existence of k untrusted, noncolluding sites.

Untrusted implies that none of these sites should be able to gain any useful information from any of the inputs

of the local sites.

Noncolluding implies that none of these sites should collude with any other sites to obtain information beyond

the protocol.

Then, all of the local parties can split their local inputs into k random shares which are then split across the k

untrusted sites. Each of these random shares are meaningless information by themselves. However, if any of the parties

combined their data, they would gain some meaningful information from the combined data. For this reason, we

require that the sites be noncolluding. We believe this assumption is not unrealistic. Each site combines the shares of

the data it has received using a secure protocol to get the required data mining result.

Some of the well-known public-key secret writing algorithms ar RSA and ElGamal. RSA encrypts messages of

roughly 1024 bits in ciphertexts of 1024 bits. ElGamal is AN elliptic curve primarily based secret writing will|which

may|which might} handle messages (typically around a hundred and sixty bits) that ar a lot of smaller than what RSA

can handle. Public key secret writing schemes ar less difficult to use and manage, however ar slower than the therefore

referred to as bilaterally symmetrical key secret writing schemes. DES and AES ar standard bilaterally symmetrical key

secret writing schemes. They cypher messages of sixty four and 128 bits severally, and generate cipher texts of constant

length.

3.1 Circuit analysis

Many of the protocols supported secret writing use the thought introduced by Yao [18][19]. In Yao's protocol one in

every of the parties reason a disorganized version of a mathematician circuit for evaluating the specified operate. The

disorganized circuit consists of encryptions of all potential bit values on all potential wires within the circuit. the

quantity of encryptions is more or less 4m, wherever m is that the range of gates within the circuit. The secret writings

will be bilaterally symmetrical key encryption, that contains a typical cipher text-length of sixty four bits. The

disorganized circuit is distributed to the opposite party, which may then measure the circuit to induce the ultimate

result. several privacy protective data processing protocols use the thought of disorganized circuits, however so as to

limit the overhead of disorganized circuits, they solely use disorganized circuits as sub-protocols to reason bound easy

functions .

3.2 Homomorphic secret writing

A powerful tool in computing a good vary of functions with procedure security is homomorphic secret writing. With

homomorphic secret writing we will avoid the bit-wise secret writing from the disorganized circuits represented in

Section three.1. Homomorphic secret writing schemes ar a special category of public key secret writing schemes. the

primary homomorphic cryptosystem, referred to as the Goldwasser-Micali (GM) cryptosystem, was projected in

1984[12]. thanks to its preventative message enlargement throughout secret writing (i.e. every little bit of plaintext is

encrypted as a cipher text of eat least 1024 bits), it's not sensible for data processing applications. The natural extension

of the gramme

Cryptosystem is that the Benaloh cryptosystem [7], that permits the secret writing of larger block sizes at a time.

though the message enlargement isn't as unhealthy as within the gramme cryptosystem, it's still not appropriate for data

processing applications. moreover, the actual fact that the cryptography is predicated on complete search over all

potential plain-texts additionally makes the Benaloh cryptosystem unpractical for privacy protective data processing. A

more moderen theme is that the Paillier cryptosystem [19], that avoids several of the drawbacks of the sooner

homomorphic cryptosystems. The Paillier cryptosystem provides quick secret writing and cryptography algorithms, and

it encrypts 1024-bit messages in cipertexts of a minimum of 2048-bits, that is cheap if we tend to work with massive

plaintexts.

Volume 1, Issue 1, September 2012

Page 54

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

Homomorphic secret writing permits America to reason bound functions a lot of with efficiency compared to

disorganized circuits. Authors in [10] use homomorphic secret writing for computing secure scalar product employed

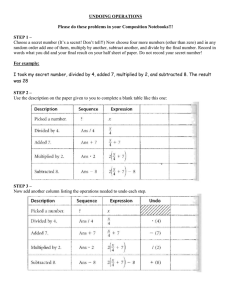

in privacy protective data processing. The protocol is shown in Fig. 1, wherever 2 players, A and B, reason the dot

product of vectors v = (v1; : : : ; vd), and w’ = (w1; : : : ;wd), such solely B learns the dot product, and A learns nothing

in the least.

Figure 1: Computationally secure scalar product protocol (D(c) = v!. w!).

4. SECRET SHARING

Secret sharing was introduced severally by Shamir[12] and Blakley[8] in 1979. the thought is that one party contains

a secret that it distributes among n alternative parties during a method that none of the n parties alone will recover the

key. As a matter of reality the key is shared during a method that the knowledge of a minimum of t of the n parties is

required to recover the key, wherever t could be a predefined threshold. Any try by but t parties to recover the key can

fail and that they won't learn something concerning the key. A (t; n) secret sharing theme could be a set of 2 functions

S and R. The operate S could be a sharing operate and takes a secret s as input and creates n secret shares:

S(s) = (s1,………….., sn). the 2 functions ar elect during a method that, for any set (1,……….., n) of t indices = s.

moreover we tend to need that it's not possible to recover s from a group of t ¡ one secret shares. A secret sharing theme

is additively homomorphic if a awfully easy (n; n) additive secret sharing theme is S(s) = (r1,……., rn-1, r),

where Ocean State is random for i , and

To recover s all secret shares ar added:

If even one secret share is missing nothing is thought concerning s. Shamir secret sharing was utilized by Ben-Or

et.al [6] in 1988 to indicate that any operate of n inputs will be computed by n parties such no coalition of but n=3 of

the parties will gain any data concerning the honest parties inputs (even if they are doing not behave in keeping with

the prescribed algorithm). If we tend to assume that all parties behave semi-honestly (i.e. they follow the protocol), then

no coalition of but n=2 of the parties will gain any data concerning the inputs of the honest parties. The protocol uses

the additively homomorphic property of Shamir secret sharing. the thought is that addition and multiplication along is

enough to judge any operate (in explicit addition and multiplication over Z2 could be a universal set of mathematician

operations). The bottleneck of the rule in [6] is multiplication. Since Shamir secret sharing isn't multiplicatively

homomorphic, so as to perform a multiplication, a special \degree reduction step" must be performed. This degree

reduction needs that every one parties secret share a brand new range (a total of n2 new messages for every

multiplication). for many data-mining applications this degree reduction step is just too expensive, since a colossal

range of multiplication is common. Another limitation of the generic multi party computation supported secret sharing

is once some parties might behave venally. they'll attempt to gain further data by deviating from the prescribed

protocol. To avoid this, a special variant of secret sharing is employed. This variant, referred to as variable secret

sharing adds further data to every secret share, such any set of players, at any time within the protocol, will verify that

the shares they need ar consistent. each the additional data within the secret shares, and also the interaction needed to

verify a secret sharing adds further communication overhead to the protocols.

4.1 The Coopetative Model

Data holders that participate in distributed data processing have naturally AN interest within the results of the

information mining. They are, however, intelligibly reluctant to share their personal knowledge with others to either

defend their interests or meet privacy necessities obligatory by their purchasers. Knowledge holders, in alternative

words, ar able to collaborate with one another to extract helpful data from combined knowledge whereas competition

among them dictates that individual knowledge isn't disclosed to others. The term coopetation is employed in economic

science to sit down with cooperation between competitory entities to boost the worth of their market. this is often quite

almost like the distributed data processing state of affairs wherever knowledge holders behave with similar motivations.

within the coopetative model, knowledge holders offer inputs to a comparatively tiny set of information mining servers

some referred to as third parties, that are assumed semi-honest (i.e. they're honest however curious; they follow the

protocol steps, however have an interest in any leaked information). a number of the information holders will actively

participate within the distributed data processing taking part in the role of third parties. The non-collusion property

Volume 1, Issue 1, September 2012

Page 55

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

should be happy by bound set of third parties. At the top of the protocol the information laborer, which may be either a

separate entity or one in every of the information holders, can have the result as AN output. a number of the advantages

of the coopetative model are:

Terribly economical data processing protocols will be created

A significant work will be placed on alittle set of dedicated servers that ar higher protected and controlled.

Solely these tiny set of servers ought to posses the required hardware, computer code and ability to perform data

processing.

Secret writing is avoided, so key distribution is not any longer a drag.

The basic version of the coopetative model [11] needs 2 dedicated third parties and a laborer. knowledge holders

secret shares their knowledge and send every share to 1 third party. For sake of simplicity we will assume that nonpublic input of every knowledge holder is AN number x and also the knowledge holder creates 2 shares r and x - r

wherever r is haphazardly elect number. The share r is distributed to the primary third party and also the share x- r is

distributed to the opposite. Clearly each shares ar random once discovered alone and no single entity (adversary, third

party, or miner) will get any data concerning the personal input x. The personal input is disclosed once 2 shares ar

place along, that ne'er happens within the coopetative model.

The third parties work on the individual shares and reason pure mathematics operations like numerical distinction

and comparison on the shares, that ar the elemental operations in several data processing applications (e.g. constructing

call trees, association rule mining and clustering). The results of these operations ar the shares of the ultimate outcome

of the computation, which may be obtained solely by the information laborer. so as for the third parties to figure on

shares, they have to use secret sharing schemes that is homomorphic with relation to the operations they perform. as an

example, additive secret sharing represented on top of is homomorphic with relation to addition (and subtraction):

adding shares try wise provides AN additive sharing of the add of the secrets. Therefore, the additive secret sharing

theme will directly be employed in numerical distinction operations in clump algorithms.

5. DISCUSSION AND COMPARISONS

When examination the potency of the assorted techniques one must think about the subsequent factors. Accuracy,

speed, communication value.

Data obscuration is effective each within the net and company model. Obscuration will be done by the individual (if

the receiver isn’t trusted), or by the holder of information (to scale back issues concerning broken security.) One

drawback with the information obscuration is that the exchange between privacy and accuracy of the information

mining results.

Public key secret writing schemes ar (by definition) supported computationally troublesome issues, and so need

valuable operations like standard involution of enormous numbers (in the order of one thousand bits). In distinction it's

terribly economical to reason secret shares once victimization e.g. Shamir secret sharing or the easy additive secret

sharing represented in Section four. Sharing a secret with Shamir secret sharing consists in selecting a random

polynomial and evaluating it in n points. The polynomial is chosen over constant field because the secret, which

implies that sometimes all computation ar through with standard integers. public key secret writing schemes produce

ciphertexts of a minimum of 1024 bits (with the exception of Elliptic curve primarily based secret writing schemes).

If we would like to use the homomorphic properties of AN secret writing theme we've got to cypher every input in it's

own cipher text. typically this can mean that we tend to cypher 32-bit numbers in 1024 bits (giving AN overhead of

32). If we tend to use circuit analysis techniques we tend to ar forced to cypher every bit as a minimum of a hundred

and sixty bits if we tend to use elliptic curve cryptography. In distinction, secret sharing creates n shares of every input,

wherever every share is of constant size because the secret. we tend to so forever have AN overhead of n..

If Shamir secret sharing is employed as represented in Section four every multiplication needs that every try of

parties exchange data. Having to attend for the transmission of those messages at every multiplication clearly slows

down a protocol, compared Yao's circuit analysis solely needs one spherical of communication. Some work has been

done to reduce the quantity of rounds required by secret sharing primarily based techniques [10], although they are

doing not offer constant spherical complexness as within the case of Yao's protocol. it's still AN open drawback to

totally classify the issues which may be resolved with a relentless range of rounds with unconditional security. we

should always note that not all issues will be resolved with unconditional security. a awfully necessary reality, from an

information mining purpose of read, is that flatly secure scalar product between 2 parties is not possible. Any two-party

data processing rule that applies scalar product (between secret vectors control by the 2 parties) must have confidence

either secret writing primarily based techniques, or external parties.

6. CONCLUSIONS

Privacy protective data processing has the potential to extend the reach and advantages of information mining

technology. However, we tend to should be ready to justify that privacy is preserved. For this, we'd like to be ready to

Volume 1, Issue 1, September 2012

Page 56

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com

Volume 1, Issue 1, September 2012

ISSN 2319 - 4847

communicate what we tend to mean by “privacy protective” as a result of Privacy preserving data processing is AN

current analysis space and there ar lots of problems that must be self-addressed. initial of all, the databases that ar

collected for mining ar Brobdingnagian, and climbable techniques for privacy protective data processing ar required to

handle these knowledge sources. during this paper we've got bestowed 3 ways for guaranteeing privacy. Secret sharing

primarily based ways will be thought-about one revolution in climbable privacy protective data processing. The degree

of information distribution might even be a drag once we think about a grid, peer-to-peer, or omnipresent computing

environments. Techniques that minimize the number of computation and knowledge transfer ar required in extremely

distributed environments. New knowledge sorts like spatio-temporal knowledge collected by location-based services,

and alternative mobile service supplier cause new kinds of threats to privacy, and existing techniques for privacy

protective data processing might not be equal to handle these kinds of knowledge.

REFERENCES

[1] C. C. Aggarwal. On randomization, public information and the curse of dimensionality. In ICDE, pages 136{145,

2007.

[2] C. C. Aggarwal and P. S. Yu. A condensation approach to privacy preserving data mining. In EDBT, pages

183{199, 2004.

[3] R. Agrawal and R. Srikant. Privacy-preserving data mining. In Proceedings of the 2000 ACM SIGMOD

International Conference on Management of Data, May 16-18, 2000, Dallas, Texas, USA, pages 439{450.

ACM, 2000.

[4] C. Asmuth and J. Bloom. A modular approach to key safeguarding. IEEE Transactions on Information Theory,

29(2):208{210, March 1983.

[5] D. Beaver, S. Micali, and P. Rogaway. The round complexity of secure protocols. In STOC '90: Proceedings of

the twenty-second annual ACM symposium on Theory of computing, pages 503{513. ACM, 1990.

[6] D. Agrawal and C. C. Aggarwal. On the design and quantification of privacy preserving data mining algorithms.

In Proceedings of the Twentieth ACM SIGACT-SIGMOD-SIGART Symposium on Principles of Database

Systems, pages 247 255, Santa Barbara, California, USA, May 21-23 2001. ACM.

[7] R. Agrawal and R. Srikant. Privacy-preserving data mining. In Proceedings of the 2000 ACM SIGMOD

Conference on Management of Data, pages 439–450, Dallas, TX, May 14- 19 2000. ACM.

[8] M. Atallah, E. Bertino, A. Elmagarmid, M. Ibrahim, and V. Verykios. Disclosure limitation of sensitive rules. In

Knowledge and Data Engineering Exchange Workshop (KDEX’99), pages 25–32, Chicago, Illinois, Nov. 8

1999.

[9] Special issue on constraints in data mining. SIGKDD Explorations, 4(1), June 2002. [5] C. Clifton. Using sample

size to limit exposure to data mining. Journal of Computer Security, (4):281–307, Nov. 2000.

[10] R. Cramer and I. Damgºard. Secure distributed linear algebra in a constant number of rounds. In Advances in

Cryptology - CRYPTO 2001: 21st Annual International Cryptology Conference, pages 119{138, 2001.

[11] M. Kantarcioglu and C. Clifton. Privacy-preserving distributed mining of association rules on horizontally

partitioned data. In The ACM SIGMOD Workshop on Research Issues on Data Mining and Knowledge

Discovery (DMKD’02), pages 24–31, June 2 2002

[12] A. Shamir. How to share a secret. Communications of the ACM, 22(11):612- 613, November 1979.

[13] Standard for privacy of individually identifiable health information. Federal Register, 66(40), Feb. 28 2001.

[14] O. Goldreich. The Foundations of Cryptography | Volume 2, Basic Applications. Cambridge University Press,

May 2004.

[15] S. Goldwasser and S. Micali. Probabilistic encryption. J. COMP. SYST. SCI., 28(2):270{299, March 1984.

[16] M. Kantarcioglu and C. Clifton. Privacy-preserving distributed mining of association rules on horizontally

partitioned data. IEEE Trans. Knowl. Data Eng., 16(9):1026{1037, 2004.

[17] S. V. Kaya, T. B. Pedersen, E. Sava»s, and Y. Saygin. E±cient privacy preserving distributed clustering based

on secret sharing. In LNAI 4819 PAKDD 2007, pages 280{291. Springer, 2007.

[18] A. C. Yao. Protocols for secure computations (extended abstract). In 23rd Annual Symposium on Foundations of

Computer Science. IEEE, 1982.

[19] A. C. Yao. How to generate and exchange secrets. In Proceedings of the twenty- seventh annual IEEE

Symposium on Foundations of Computer Science, pages 162{167. IEEE Computer Society, 1986.

Volume 1, Issue 1, September 2012

Page 57