ANALYSIS OF TIME SERIES

NONLINEAR DYNAMICS II, III:

ANALYSIS OF TIME SERIES

Jaroslav Stark

Centre for Nonlinear Dynamics and its Applications,

University College London,

Gower Street,

London, WC1E 6BT.

SUMMARY

Perhaps the single most important lesson to be drawn from the study of non-linear dynamical systems over the last few decades is that even simple deterministic non-linear systems can give rise to complex behaviour which is statistically indistinguishable from that produced by a completely random process. One obvious consequence of this is that it may be possible to describe apparently complex signals using simple non-linear models. This has led to the development of a variety of novel techniques for the manipulation of such “chaotic” time series. In appropriate circumstances, such algorithms are capable of achieving levels of performance which are far superior to those obtained using classical linear signal processing techniques.

1. INTRODUCTION

Traditionally, uncertainty has been modelled using probabilistic methods: in other words by incorporating random variables and processes in a model. Such stochastic models have been extremely successful in a wide variety of applications and over the years a vast range of techniques for estimating and manipulating such models has been developed. These form the subject matter of the other lectures making up this course.

By contrast, it is only relatively recently that mathematicians, scientists and engineers have come to realize that uncertainty can also be generated in certain circumstances by purely deterministic mechanisms. This phenomenon has come to be known as “chaos” and is primarily due to the capacity of many nonlinear dynamical systems to rapidly amplify an insignificant amount of uncertainty about the initial state of a system into an almost total lack of knowledge of its state at a later time.

This aspect of nonlinear systems has already been dealt with in some detail in Colin Sparrow’s lecture on Chaotic Behaviour.

One of the most promising applications of this observation is within the field of time series analysis, where nonlinear dynamics offers the potential of a new class of models which in certain circumstances can lead to forecasting and filtering algorithms of vastly superior performance compared to traditional signal processing methods based on the stochastic paradigm. The aim of these two lectures is therefore to outline the framework within which such models are constructed and to describe some of the uses to which these models can be put to.

Before we do this, however, a word of caution is in order. Within the last few years some rather extravagant claims for this so called “theory of chaos” have been made, particularly in the popular media. Many of these are difficult to justify and it is important to stress that “chaotic time series” models are only appropriate in certain classes of problems; they are not a universal panacea. In particular, the sorts of models that we shall describe are deterministic and dynamic. We should only attempt to apply them in situations where we have reasonable cause to suspect that deterministic mechanisms are important, and where we are interested in longer term behaviour rather than single events. Some promising applications include:

• Diagnostic monitoring of engineering systems such as turbines, generators, gear-boxes. These all undergo complex deterministic vibrations and it is often important to detect as early as possible slight changes in their operating behaviour which indicate some malfunction such as a shaft, turbine blade or gear tooth beginning to crack.

• Similarly many biological systems exhibit highly deterministic nonlinear oscillations, e.g. the heart beat, circadian rhythms, certain EEG signals etc. There is thus scope for medical applications of techniques based on nonlinear dynamics, for instance in foetal distress monitoring during childbirth.

• Agglomerated properties of populations can in some circumstances show strongly deterministic features. Apart from obvious applications such as forecasting the transmission and development of epidemics, there is also interest developing and validating models of ecological systems and their interaction with man.

Considerable effort has also been made to apply these techniques to financial and economic data such as foreign exchange rates and stock market indices. On the whole, methods based on non-linear dynamics do not perform too badly, but nor do they perform spectacularly well. It seems clear that the behaviour of such systems has more structure than can be explained by traditional linear stochastic models, but the best way of incorporating concepts from nonlinear dynamics remains to be seen. This is currently the subject of intense research activity.

Friday, November 18, 1994.

1 Jaroslav Stark, Analysis of Time Series

2. TIME SERIES GENERATED BY CHAOTIC SYSTEMS

Recall from Colin Sparrow’s lecture that the state of a deterministic dynamical system at a given time t

0 is described by a point x lying in IR m

The time evolution of the system is given by a map

x(t

0

)

→

x(t) which gives the state of the system at time t given that it was initially in state x(t

0

) at time t

0

. Often this map will obtained as the solution of some ordinary differential equation.

In many situations, however, we do not have access to the state x and the map x(t

0

)

→

x(t) is unknown. All that we can do is observe some function ϕ

(x) of the state x, where ϕ

: IR m →

IR is some

measurement function. It corresponds to measuring some observable property of the system such as position or temperature. The evolution of this quantity with time is then given by the time series ϕ

(x(t)). In practice we can only observe this at discrete time intervals. For simplicity we assume that these intervals are all the same so that in fact we observe the discrete time series ϕ

= 1,2, … where

τ

is some sampling interval.

n

= ϕ

(x(n

τ

)) for n

Note that in some circumstances we may be able to measure more than one observable simultaneously. This gives rise to a multi-variable time series. For simplicity, we shall not consider this case, but all the methods described below are equally applicable to such time series, with essentially only trivial modifications. In practice it often turns out that there is no advantage in taking more variables

(e.g. Muldoon [1994]). One interesting idea in this context is that we can use nonlinear dynamics techniques to easily test whether or not two simultaneous time series have been generated by the same dynamical system. Smith [1993] has suggested using this to test for sensor malfunction in multi-sensor systems.

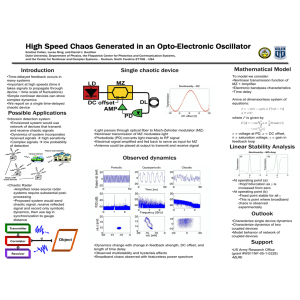

A simple example of such a time series is given in Figure 1, which was obtained by observing the x co-ordinate of the Hénon map x

n+1 y

n+1

=

=

1 - a(x n

) 2 + y n bx n

(1a)

(1b) where a and b are parameters which in this case are taken to be a = 1.4 and b = 0.3. In this case the observation function is just ϕ

(x n

,y n

) = x n

, and ϕ n

= x n

.

1.5

1

0.5

0

-0.5

- 1

-1.5

0 200 400 600 800 n

Figure 1. Sample chaotic time series { x n

} derived from the Hénon map (Eq. 1).

1000

Friday, November 18, 1994.

2 Jaroslav Stark, Analysis of Time Series

20

15

10

5

-10

-15

0

- 5

-20

0 200 400 600 800 n

Figure 2. Time series { ϕ n

} derived from the Lorenz equations (Eq. 2).

1000

Figure 2 shows another example, this time taken by observing the z co-ordinate of the Lorenz equations

˙z

˙x

˙y

=

=

=

10(y - x)

-xz + 28x - y

xy -

8

3 z

(2)

These were integrated using a Runge-Kutta fourth order scheme with a time step of 10

-2

and sampled every 10 integration steps (i.e. so that ϕ n

= x(0.1n)).

In contrast to the simple form of the equations generating them, the behaviour in Figure 1 and 2 appears to be rather complex, and particularly in the case of the first figure, to be more or less random and unpredictable. Indeed, most conventional statistical tests of such data would conclude that nearly all of the variation in this time series was due to random fluctuations. Classically, it would be modelled by linear stochastic processes, such as for instance an autoregressive moving average

(ARMA) model: ϕ n

=

α

0

+ k ∑

α j ϕ

n-j

+ j

=

1 l ∑

β j

ε

n-j j

=

0

(3)

Here

ε n

is a sequence of uncorrelated random variables and the

α i

and

β i

are the parameters of the model. Such a model would assign most of the complexity seen in Figure 1 to the stochastic terms represented by the

ε n

and hence would lead to rather poor estimates of the future behaviour of the time series. By contrast, as we shall see below, methods based on nonlinear dynamics are able to make extremely good short term forecasts of these times series, even when the underlying dynamical equations (e.g. Equation 1 or 2) are not known. This has led to a re-examination of many systems to determine whether or not their behaviour could be predicted using such techniques. Apart from the inherent interest in making predictions in their own right, the predictability or not of a

Friday, November 18, 1994.

3 Jaroslav Stark, Analysis of Time Series

given set of data can yield important clues about the mechanisms which generated it (see [Sugihara and May, 1990] and [Tsonis and Elsner, 1992]). Finally, such chaotic prediction algorithms in turn can be used as the basis of novel techniques for signal processing including filtering, noise reduction and signal separation (see §5 below).

Examples of the sorts of time series that have been analysed, often with mixed results, include data from electronic circuits, fluid dynamics experiments, chemical reactions, sunspots, speech, electroencephalograms and other physiological time series, population dynamics and economic and financial data (see for instance [Casdagli, 1992] and the references therein). An example of such real data is shown in Figure 3. This represents the square root of the amplitude of the output of a semiconductor laser, digitized using an 8 bit A/D converter.

16

14

12

10

8

6

4

2

0

0 200 400 600 n

Figure 3. Time series of observed laser data.

800 1000

Before we describe some of the algorithms used to model such time series, it would seem appropriate to analyse the way in which uncertainty manifests itself in time series such as those in Figure 1 and 2. At first sight, it might seem strange that a purely deterministic system, such as that given by

Equation 1 or 2, should have any uncertainty at all associated with it. After all, if say we know

(x

0

,y

0

) for the Hénon map, then in principle we can predict (x n

,y n

) perfectly for all time. However, as Colin Sparrow has already described, even the tiniest inaccuracies in our knowledge of either

(x

0

,y

0

) or of the equations themselves will be rapidly amplified. In the context of time series, this is illustrated in Figure 4, where the initial conditions (x

0

,y

0

) used to generate Figure 1 were slightly perturbed to give ( x

′

0

, y

′

0

) where x

′

0

= x

0

+ 10

-8

and y

′

0

= y

0

+ 10

-8

. We see that although the gross features of the resulting time series are very similar to Figure 1, detailed inspection shows that x n and x log

x

′ n

rapidly diverge from each other. This is much more apparent in Figure 5 where we plot n

- x

′ n

. We see that for about the initial 50 iterations there is essentially no correlation left between x state of the system of the order of 10

-8 n

and x

′ n

∆ n

∆ n

=

increases more or less linearly until

. In other words, an initial uncertainty in the

results after 50 time steps in a complete lack of knowledge of its behaviour. The rate at which uncertainty grows is given by the initial slope of the graph of log

x n

- x

′ n

and corresponds to the largest Liapunov exponent which was introduced in Colin

Sparrow’s lecture.

Friday, November 18, 1994.

4 Jaroslav Stark, Analysis of Time Series

-2

-4

-6

1.5

1

0.5

0

-0.5

- 1

-1.5

0 200 400 600 800 n

Figure 4. Time series derived from Eq. 1 and modified initial conditions ( x '

0

, y '

0

).

1000

2

0

- 8

0 200 400 600 800 n

Figure 5. Separation

∆ n

= log

x n

- x

′

between Figure 1 and Figure 4.

1000

Such exponential growth of small perturbations is characteristic of chaotic systems and indeed is usually used to define chaos. It places severe limits on the long term predictability of such systems.

This is because in practice we never know the initial state of the system to infinite precision. Any errors, no matter how small, in the determination of this state will grow as above and rapidly render our long term forecasts meaningless. We thus have the dichotomy that chaotic systems are highly predictable in the short term (due to their deterministic time evolution) but completely unpredictable in the long term (due to their sensitive dependence on initial conditions).

Friday, November 18, 1994.

5 Jaroslav Stark, Analysis of Time Series

The above discussion indicates that the apparent uncertainty in a chaotic time series will depend on the choice of time scales, and in particular on the choice of the sampling interval

τ

. Recall Figure 2, and note that this has much more structure and appears much less random than the Figure 1. If however we choose a larger

τ

, we get a much more random looking time series, as in Figure 6, where

τ

= 1 is used.

- 5

-10

-15

15

10

5

0

20

15

10

5

-10

-15

0

- 5

-20

0 200 400 600 800 n

Figure 6. Time series derived from the Lorenz equations with

τ

= 1.

1000

One the other hand, if we choose to sample much more frequently, the deterministic nature of the behaviour becomes much more apparent, as in Figure 7, which was generated using

τ

= 0.01.

-20

0 200 400 600 800 n

Figure 7. Time series derived from the Lorenz equations with

τ

= 0.01.

1000

Friday, November 18, 1994.

6 Jaroslav Stark, Analysis of Time Series

3. RECONSTRUCTING THE DYNAMICS

0.4

0.3

0.2

0.1

0

-0.1

-0.2

-0.3

-0.4

-1.5

For the Hénon map of Equation 1 the time evolution of x n

depends on both x and y. Thus to generate

Figure 1, we had to compute both x n

and y n

for n = 1, …, 1000. Similarly, for the Lorenz equations we had to compute x(t), y(t) and z(t) simultaneously. More generally, as described at the beginning of §2, the state x will lie in IR m

and we will need all m co-ordinates to determine the future behaviour of the system. On the other hand, the observed time series ϕ first sight it might thus appear that ϕ n

is only one dimensional. At n

contains relatively little information about the behaviour of

x(t) and that the fact that

x and the dynamics x(t

0 ϕ n

was generated by a deterministic system is of little value when the state

)

→

x(t) are not kown. Remarkably, this intuition turns out to be false due to a powerful result known as the Takens Embedding Theorem ([Takens, 1980], see also [Sauer et al.,

1991] and [Noakes, 1991]). This shows that for typical measurement functions ϕ

, and typical dynamics x(t

0

)

→

x(t), it is possible to reconstruct the state x and the map x((n-1)

τ

)

→

x(n

τ

) just from knowledge of the observed time series { ϕ n

}. More precisely this theorem says that typically there exists an integer d (called the embedding dimension) and a function G : IR d

→

IR such that ϕ n

satisfies ϕ n

= G( ϕ

n-d

, ϕ

n-d+1

, …, ϕ

n-1

) (4) for all n. Furthermore if we define the point v n

∈ IR d by v n

= ( ϕ

n-d+1

, ϕ

n-d+2

, …, ϕ n

) (5) then the dynamics on IR d given by v

n-1

→

v equivalent to the original dynamics x((n-1) n

= ( ϕ

n-d+1

, ϕ

n-d+2

, …, ϕ

n-1

, G(v

n-1

)) is completely

τ

)

→

x(n

τ

) under some (unknown) smooth invertible coordinate change. In other words by working with the points v n

we can reconstruct both the original state space and the dynamics on it. This procedure is illustrated by the next few figures.

Figure 8 shows 5000 points of the attractor of the Hénon map, plotted in the state space given by

(x n

,y n

). Figure 9 by contrast, shows the reconstructed attractor in (x n

,x

n-1

) space, using the above procedure. We stress that this figure was plotted using only the observed time series x n

(i.e. as in

Fig. 1) with no knowledge of the variable y n

. Apart from the scale change, the figures are identical.

- 1 1 1.5

1.5

1

0.5

0

-0.5

- 1

-1.5

-1.5

- 1 1 1.5

-0.5

0 x n

Figure 8

0.5

-0.5

0 x n

Figure 9

0.5

Friday, November 18, 1994.

7 Jaroslav Stark, Analysis of Time Series

In the case of the Hénon map, is easy to see why this procedure works: Eq. 1b gives a simple relation between y n

and x

n-1

. This however is a very special feature of the Hénon map, and will not hold for more general dynamics, such as those of the Lorenz equations. The above reconstruction procedure will then yield a somewhat different looking picture of the dynamics as is seen in Figures 10 and 11. The first of these shows a plot of Lorenz attractor projected onto the (x(t),y(t)) plane, from the full three dimensional state space given by (x(t),y(t),z(t)). Figure 10 shows a reconstruction using the x co-ordinate alone: thus x(t) is shown plotted against x(t-

τ

) with

τ

= 0.1. The similarity between the two figures is obvious.

30

20

10

0

-10

-20

20

15

10

5

0

- 5

-10

-15

-20

-20 -15 -10 - 5 x

0 n

-30

-20 -15 -10 - 5 x

0 n

5 10 15 20 5 10 15 20

Figure 10 Figure 11

Note that the map v

n-1

→

v n

just advances a block of elements of the time series { ϕ n

} forward by one time step. Thus, whilst the original state space and dynamics might be inaccessible to us, the reconstructed dynamics (which is completely equivalent to the original dynamics) operates purely in terms of the observed time series can be approximated using any one of a wide range of non-linear function fitting algorithms (some of which are described in the next section). We thus conclude that despite the fact that ϕ n

is one dimensional whilst x(t) is m-dimensional, all of the important information about the behaviour of x(t) is actually encoded in ϕ n

. It is this fundamental fact which lies behind all practical schemes for processing chaotic time series.

At this point, the observant reader will ask: how do I know what value of d to take? Clearly, for efficiency and numerical accuracy, this should be chosen as small as possible whilst still ensuring that we have a proper reconstruction. Takens in fact shows that any value of d

≥

2m+1 always results in an equivalence between the original and the reconstructed system, though in fact often a smaller value will also be sufficient (e.g. for the Hénon map above we took d = m = 2). Unfortunately, this is not as useful a bound as it might at first seem, since usually m itself will also be unknown. In practice d therefore has to be determined empirically; one possibility is to compute a trial G for each value of d and then use the smallest d which gives a good fit to Eq. 4. This is discussed in more detail below in §7 since a value of d is obtained as a by-product of most tests for the presence of chaotic behaviour in a time series.

In practical applications the choice of and 7 that

τ

can also be crucial. We have already seen in Figures 2, 6

τ

can have profound effect on the apparent complexity of the observed time series. Now, as far as Takens’s theorem is concerned, any value of

τ

is as good as any other (provided that certain genericity conditions are met). However, in practice, some values of

τ

will give far “better” reconstructions than others. Thus if

τ

is too small, since ϕ

(x(t)) is continuous as a function of t, suc-

Friday, November 18, 1994.

8 Jaroslav Stark, Analysis of Time Series

cessive values of ϕ n

will be almost identical and hence information beyond that already obtained from ϕ ϕ

n-d+2

,

…

, ϕ n

will contain very little useful

n-d+1

. In such circumstances, if measurements are made only to a finite precision, it will be difficult to make reasonable predictions of the future behaviour of the time series. On the other hand if

τ

is too large then the map G will become very complicated and difficult to estimate accurately. A number of methods for choosing a suitable value have been proposed ([Fraser and Swinney, 1986], [Liebert and Schuster, 1989], [Liebert et al.,

1991] and [Buzug and Pfister, 1992]). The last two of these references attempt to determine an optimal choice for both d and

τ

simultaneously. Note that the issue of choice of time delay arises even if we are just given a time series { ϕ n

} and have no direct control over the original sampling interval

τ

. This is because in such a case we still have the option of resampling the time series at some integer multiple of the original rate, in other words of using reconstruction co-ordinates of the form (

q(d+1)

, ϕ

n-q(d+2)

,

…

, ϕ n

) for any positive integer q.

ϕ

n-

4. PREDICTING CHAOTIC TIME SERIES

So, what do we gain from the above reconstruction procedure? Well, consider Eq. 4: this tells us that ϕ n

is uniquely determined in terms of the previous d values of the time series. We can thus use the function G to predict the future behaviour of the time series. In this sense Eq. 4 is a non-linear deterministic analogue of the ARMA model in Eq. 2; we have simply removed the stochastic terms due to the

ε n

and replaced the linear combination

α d ϕ

n-d

+

α

d-1 ϕ

n-d+1

+

…

+

α

1 ϕ

n-1

by the nonlinear function G.

The big problem, of course, is to determine G. In the case of the Hénon map, we can in fact write down G for the time series in Fig. 1 in closed form. From Eq. 1b, we have that y

n-1

= bx

n-2

and substituting this into Eq. 1a we get x n

= 1 - a(x

n-1

) 2 + bx

n-2

(6)

However, this is a very special feature of the Hénon map: in general, for typical dynamical systems and typical observation functions such an explicit derivation is not possible. However, given a sample { ϕ n

: n = 1, …, N} of the time series we can use any one of a large variety of non-linear function fitting techniques to approximate G.

Such techniques fall into two categories: local and global (e.g. [Farmer and Sidorowich, 1987],

[Crutchfield and McNamara, 1987], [Lapedes and Farber, 1987], [Abarbanel et al., 1989], [Giona et al., 1991], [Pawelzik and Schuster, 1991], [Linsay, 1991], [Jiménez et al., 1992] and [Casdagli,

Examples include polynomials, rational functions, various kinds of neural networks and radial basis functions (see below).

By contrast, local techniques estimate the value of G(v) using only a small number of data points v n

G v

, usually in the form of a low order polynomial (i.e. linear or quadratic). Note that if we simply take the nearest point to v and employ a 0 th order estimate (i.e. a constant function) we end up with the well known technique of “forecasting by analogy”. This is because a point v in our reconstructed state space corresponds to a pattern of successive values of the observable (Figure 12). To predict the next value in

( the sequence we look through our past history of the data { ϕ

j-d+1

, ϕ

j-d+2

, …, ϕ j

) which most closely matches v (Figure 13). The value following v j

is just

= G(v j

): hence our best 0 th G v ϕ n

: n = 1, …, N} to find the pattern v j

= ϕ

j+1

(v) following v is ϕ

j+1

. More sophisticated

G v

(v) using other previously seen nearby patterns. Observe that one big disadvantage of this approach is that we have to permanently store

Friday, November 18, 1994.

9 Jaroslav Stark, Analysis of Time Series

the sample data { ϕ n

: n = 1, …, N}, and we need to search through this looking for nearby points each time we want to make a prediction. Sophisticated algorithms exist to make this feasible, even for large data sets, but nevertheless local prediction methods are much more computationally intensive and much less straightforward to implement than global algorithms. The latter are also easier to explain and conceptually much more elegant. On the other hand local methods will probably yield more accurate approximations, particularly if the derivatives of G are required.

v

Figure 12 The point v corresponds to a pattern of successive values of the time series

Figure 13 Previous patterns which are a good match to v .

In these notes we shall concentrate on global techniques. One of the most successful methods has been that of radial basis function approximation. This was originally conceived of as a interpolation technique (see for instance Powell [1987]) but was subsequently generalized to an approximation method using linear least squares estimation (Broomhead and Lowe [1988]). Within this context radial basis function methods have also received considerable attention within the field of system identification (Chen et al [1990 a,b and 1991], [Pottmann and Seborg, 1992]). Also note that radial basis function approximations can be regarded as a particular class of neural networks; indeed this was the context of Broomhead and Lowe’s original approach.

Friday, November 18, 1994.

10 Jaroslav Stark, Analysis of Time Series

The basic idea behind radial basis function approximation is to choose a finite number of points, called radial basis centres, w

1

,

…

, w p

∈ IR d and some fixed radial basis function

ρ

: IR

→

IR . In the examples described below we use the basis function

ρ

(r) =

√

(r 2 + c) with an appropriately chosen constant c, but many other choices are possible. We then look for an approximation G λ of a given function G : IR d →

IR in the form of

G λ (v) = p ∑ j

=

1

λ j

ρ

( v

− w j

)

(7) where

λ

= (

λ

1

, …,

λ p

) are parameters which determine the function G λ and

•

is the Euclidean norm on IR d .

(

Observe that, although G λ (v) is a nonlinear function of v, it depends linearly on the parameters

λ

1

, …,

λ dard linear least squares methods to obtain an estimate of

λ

from a sample of data { ϕ

N}. More precisely, we choose

λ

to minimize the least squares error

λ

= p

). As first suggested by Broomhead and Lowe [1988], this means that we can use stann

: n = 1, …,

E 2 =

N ∑ n

= d

(

G λ (v n

)

−

G(v n

)

) 2

(8) where of course v n

= ( ϕ

n-d+1

, serve that G(v n

) = ϕ ϕ

n-d+2

, …, ϕ n

) are just the points drawn from the data sample. Ob-

n+1

, so that substituting Eq. 7 into Eq. 8, we get

E 2 = we can rewrite this as

N ∑ n

= d

p ∑ j

=

1

λ j

ρ

( v n

− w j

)

− ϕ

( n

+

1

If we now define the (N-d)

×

p matrix A by A nj

=

ρ v n

2

− w j

)

and the vector b ∈

(9)

IR N-d by b n

= ϕ

n+1

,

E 2 =

N ∑ n

= d

p ∑

− b n j

=

1

A nj

λ j

2

(10)

= A

λ − b

2

In other words, minimizing E is equivalent to finding a

λ

such that the vector A

λ

- b has minimum

Euclidean norm. This is a completely standard linear least squares problem which can be solved in a variety of ways (e.g. [Stoer and Bulirsch, 1980] or [Lawson and Hanson, 1974]). The best approach is generally considered to be via the orthogonal decomposition of A. We shall explain how such a decomposition can be obtained below, but first let us show how it leads to the solution of the least squares problem. So, suppose that we have found p+1 mutually orthogonal vectors q

1

, … , q m

, q such that

A b

= Q K

= Q k + q

(11) where Q is the (N-d)

×

p whose columns consist of the vectors q

1

, … , q m

, and the p

×

p matrix K is upper triangular with unit diagonal elements (i.e. K ij

= 0 for i>j and K ii

= 1 for all i). Then

A

λ

- b = Q K

λ

- Q k - q

Friday, November 18, 1994.

11 Jaroslav Stark, Analysis of Time Series

and hence

A

λ − b

2

= q

2

(

λ − k

) 2

- 2 q † Q (K

λ

- k ) where † indicates transpose. But q is orthogonal to q

1

, … , q p

, so q † Q = 0. Hence

A

λ − b

2

= q

2

(

λ − k

) 2

Thus the required

λ

to minimize A

λ − b

2

can be obtained as a solution of K

λ

- k . Since K is upper triangular, this can be solved extremely easily by backsubstitution, i.e. set

λ p

= k p

and

λ i

= k i

- p ∑

K ij

λ j j

= i

+

1

(12) inductively for i = p-1, …, 1.

Note that we allow any of the q’s to be zero: all that we require is that q i

† q j

= 0 for all i

≠

j and q i

† q

= 0 for all i. If one of the q i

= 0, this means that the columns of A are linearly dependent, in which case the minimizing

λ

is not unique (in fact

λ

is minimizing if and only if QK

λ

= Q k ). If q = 0, this simply means that the residual A

λ

- b is zero.

The q’s can be obtained using a variety of algorithms. Standard techniques are based on the Gram-

Schmidt algorithm or on Givens or Householder transformations (e.g. [Stoer and Bulirsch, 1980] or

[Lawson and Hanson, 1974]). Here we shall present the Gram-Schmidt algorithm because it is the simplest to describe and will already be familiar to many readers.

Recall that given a set of vectors a

1

, …, a p

∈ IR N-d (which in this context are the columns of A) the

Gram-Schmidt algorithm generates a set of mutually orthogonal vectors q

1

, …, q p

such that q

1

, …, q j

spans the same subspace of IR N-d as a

1

, …, a j

, for any 1

≤

j

≤

p. This is achieved by setting q

1

= a

1

and then inductively q j

= a j

- j

−

1 ∑

K ij

q i i

=

1 where for i < j

(13)

K ij

= a j

† q i q i

† q i

0 q i

≠

0 q i

=

0

(14)

Note that this is precisely the K which gives the required orthogonal decomposition in Eq. 11. We also need to find q and k , this is done by treating b as a

p+1

in the above procedure (so that q is then given by q

p+1 and k i

by K

ip+1

). Also observe that sometimes one also requires the q j

to be orthonormal. This can easily be achieved by normalizing the q j

as defined above, but for our purposes it is more convenient not to do so.

In practice, is preferable to calculate the q’s in a slightly different fashion using the so called

Modified Gram-Schmidt algorithm (e.g. [Lawson and Hanson, 1974]). This leads to exactly the same Q, q ,K and k but is numerically better conditioned. It makes use of a set of auxiliary vectors q

j(i)

for i

≤

j defined by q

j(i+1)

= q

j(i)

- K ij

q i

(15) with q

j(1)

= a j

and q

i

= q

i(i)

. By induction we see that

Friday, November 18, 1994.

12 Jaroslav Stark, Analysis of Time Series

K ij

=

0 q

(i)† j q i q i

† q i q q i i

≠

=

0

0

(16)

Thus q

j(i+1)

can be computed directly from the q

j(i)

. The decomposition of b is similarly performed using q (i) for i = 1, …, p+1 q (i+1) = q (i) - k i

q i with q (1) = b and q = q (p+1) . In terms of q (i) , the k i

are given by

(17) k i

= q

(i)† q i q i

† q i

0 q i

≠

0 q i

=

0

(18)

Note that despite initial appearances the Modified Gram-Schmidt algorithm requires no more storage than the conventional Gram-Schmidt scheme since at each stage q

j(i+1)

can be used to overwrite q (i) .

The one remaining issue we need to address before we have a complete algorithm is the choice of the basis centres w j

. In Powell’s original approach, these were placed uniformly on a grid, and it was assumed that G(w j

) was known, i.e. that the centres were chosen from amongst the data points. In our application to chaotic time series, we cannot of course ensure that the data points are arranged in a regular fashion, but it still seems desirable that the centres should be reasonably distributed throughout the region where the data lies. In the absence of any a priori knowledge it is thus still usual to choose the centres from amongst the data points v n

= ( ϕ

n-d+1

, ϕ

n-d+2

, …, ϕ n

), so that w j

= v

n(j)

for some n(j).This was for instance done by Casdagli [1989] who was the first to apply radial basis function to the prediction of chaotic time series (though he used interpolation rather than least squares approximation). Since we require the number of centres to be substantially smaller than the number of data points, the simplest solution is to choose the centres randomly from amongst the data set. A more sophisticated approach might be to require that none of the centres lie closer together than some predetermined minimal distance. More complex criteria are discussed in [Smith,

1992], whilst Chen et al. [1990 a,b and 1991] (see also [Pottmann and Seborg, 1992] and Stark

[1994]), present a model selection algorithm whereby initially a very large number of centres is chosen and then all but the most significant are discarded during the estimation procedure. Note that in all of these approaches the matrix elements A ij

an then be expressed directly in terms of the observed sample data { ϕ n

: n = 1, …, N} as

A ij

=

ρ

(r

in(j)

) (19) where r ij

is the Euclidean distance between v i

and v j

, given by r ij

=

d

−

1 ∑ k

=

0

( ϕ i

− k

− ϕ j

− k

)

2

1

2

(20)

Friday, November 18, 1994.

13 Jaroslav Stark, Analysis of Time Series

THE COMPLETE ALGORITHM

To summarize, here is the complete algorithm:

1. Choose the centres w j

= v

n(j)

from amongst the data points {v n

= ( ϕ

n-d+1

, ϕ

n-d+2

, …, ϕ n

) : n =

1, …, N}.

2. Compute the distances r

in(j)

using Eq. 20 and define the vectors q

1(1)

, …, q

p(1)

by [q

j(1)

] i

=

ρ

(r

in(j)

), where [q

j(1)

] i

is the i th component of [q

j(1)

].

3. For i = 1, …, p-1, set q

i

= q

i(i)

, and compute K ij

for j > i using Eq. 16, and then form q

i(i+1)

using Eq. 15.

4. Define q (1) by [ q (1) ] i

= ϕ

i+1

.

5. For i = 1, …, p, compute k i

using Eq. 18, and then form q (i+1) using Eq. 17.

6. Set

λ p

= k p

and compute

λ

p-1

, …,

λ

1

(in that order) using Eq. 12.

The resulting (

λ

1

, …,

λ p

) is the parameter vector

λ

which gives the optimal approximation G λ of G.

Let us give some examples of the application of this algorithm. Figure 14 shows the one step prediction error

ε n

= ϕ n

- G λ ( ϕ

n-2

, ϕ

n-1

) for the Hénon time series of Fig. 1. The first 500 data points were used as the data sample and the first 150 were used to define the radial basis centres. The basis function used was

ρ

(r) =

√

(r 2 + 10). The error is down to the level of 10 -9 or less. Furthermore, observe that the in-sample error for the first 500 points is indistinguishable from the out-of-sample error for the second half of the time series. This indicates that a good overall fit to G has been obtained and “overfitting” has not occurred.

1 10 - 7

5 10 - 8

0

-5 10 - 8

-1 10 - 7

0 200 400 600 800 n

Figure 14. One step prediction error

ε n

for radial basis prediction of Fig. 1

1000

Of course this example is no great test of the technique: all we are trying to do is fit the function

G(x

n-2

,x

n-1

) = 1 - 1.4(x

n-1

) 2 + 0.3x

n-2

, (albeit without any knowledge of its functional form). A more difficult example Lorenz time series of Fig. 2; recall that for this no explicit expression for G is available. The results are shown in Figure 15. As before, the first 500 data points were used as the data sample and the first 150 were used to define the radial basis centres. The basis function was

Friday, November 18, 1994.

14 Jaroslav Stark, Analysis of Time Series

ρ

(r) =

√

(r 2 + 4000), and the embedding dimension was d = 4. The error is much larger than in the

Hénon case, but even so is on average on the order of 5

×

10 -3 of the amplitude of the original time series. This is more than adequate for most applications, and indeed is more accurate than the basic precision of any data we would be able to collect in most real world situations. More importantly the predictions are at least 10 -2 better than those which we would get by predicting ϕ ϕ n

to be the same as

n-1

(which is perhaps the simplest prediction technique possible, and a fundamental baseline against which we should always test).

0.1

0.05

0

-0.05

-0.1

0 200 400 600 800 n

Figure 15. One step prediction error

ε n

for radial basis prediction of Fig. 2

1000

Figure 16. A trajectory segment corresponding to poor predictions in Fig. 15.

The occasional very poor predictions, which are such a noticeable feature of Fig. 15, are due to the fact that close to the origin (x,y,z) = (0,0,0) the Lorenz system is much more sensitive to small errors than in other parts of the phase space. In particular, in this region it is very difficult to tell into which of the two halves of the attractor (contained in x > 0 or x < 0, respectively) the orbit will go next.

Friday, November 18, 1994.

15 Jaroslav Stark, Analysis of Time Series

This is illustrated in Figure 16, where we highlight a segment of the trajectory corresponding to one of the “glitches” in Figure 15.

Such variability in the predictability of the system throughout the state space appears to occur in a wide variety of systems, and is currently the subject of much interest (e.g. [Abarbanel et al., 1991],

[Smith, 1992], [May, 1992] and [Lewin, 1992]). One approach to quantifying such differences in predictability is through the concept of local Liapunov exponents ([Abarbanel et al., 1991] and

[Wolff, 1992]).

Finally in Figure 17 we apply the above algorithm to the laser data from Fig. 3. Again, the first 500 data points were used as the data sample, but now only the first 75 points were used as basis centres.

The basis function was

ρ

(r) =

√

(r 2 + 640), and the embedding dimension was d = 5. Despite the very poor quality of the original data (only 8 bit precision) we find that we are still able to model the dynamics to an accuracy of better than 5% throughout most of the data. Furthermore, if we use more data points we can do much better. Figure 18 shows the prediction error if we use a sample of 1500 points to estimate G. In this case the increased amount of data even allowed us to reduce the number of radial basis functions down to 50.

0.5

0

-0.5

- 1

-1.5

2

1.5

1

- 2

0 200 400 600 800 n

Figure 17. One step prediction error

ε n

for laser data of Fig. 3

1000

It must be stressed is that the prediction errors shown in Fig. 14-18 are the result of only predicting forward one step at a time. Thus for each n the prediction for last d observed values ϕ

n-d

, ϕ

n-d+1

, …, ϕ ϕ n

is obtained by applying G λ to the

n-1

. In many applications one may wish to forecast further ahead. This can be done either by directly estimating a function which predicts k steps ahead, or by iterating a one step forecast. In general, the latter method appears to be preferable ([Farmer and

Sidorowich, 1988] and [Casdagli, 1989]).

Both techniques, however, will rapidly encounter fundamental limits to the long term predictability of chaotic time series due to the exponential divergence of trajectories found in such systems (again see [Farmer and Sidorowich, 1988] and [Casdagli, 1989] for a fuller discussion). This is illustrated n

= x n

- ˆx n

for multi-step predictions, always predicting from the same point v

500

= (x

499

,x

500

). Here ˆx n

is defined by iterating G λ forward from this point. Thus ˆx n

= G λ ( ˆx n

−

2

, ˆx n

−

1

) with initial conditions ( ˆx

499

, ˆx

500

) = (x

499

,x

500

). Other details are as for Fig. 14. We see that the error rapidly rises so that it is impossible to make any kind of

Friday, November 18, 1994.

16 Jaroslav Stark, Analysis of Time Series

-4

-6

- 8

0

-2 prediction beyond about 50 time steps ahead. Notice the obvious similarity to Fig. 5. Sugihara and

May [1990] have suggested that this phenomenon can be used to detect chaotic behaviour in time series (see also [Tsonis and Elsner, 1992]).

0.5

0

-0.5

- 1

-1.5

2

1.5

1

- 2

0 500 1000 1500 2000 2500 n

Figure 18. Prediction error

ε n

for the laser data using a data sample of 1500 points

2

-10

500 700 900 1100 1300 n

Figure 19. Multi step prediction error

ε n

for Hénon time series.

1500

Friday, November 18, 1994.

17 Jaroslav Stark, Analysis of Time Series

RECURSIVE PREDICTION

One disadvantage of the algorithm described above is that it is very much a “batch” technique. It thus calculates an estimate G λ of G once and for all using a predetermined block of observations ϕ

1

,

…, ϕ

N

. There is then no way of updating G λ using further observations ϕ

N+1

, ϕ

N+2,

… as they are made. Should one decide to use a larger data sample to estimate G one has to discard the previous estimate and recalculate a new estimate from the beginning. This leads to several disadvantages a) it limits the number of data points ϕ

1

, …, ϕ

N

that can be used in the estimation process. This is because for a given value of N, we have to form and manipulate the (N-d)

×

p matrix A and hence the above algorithm has a memory requirement of order Np. When p is of the order of

10 2 as above, or even larger, this rapidly becomes a serious restriction on the size of N.

b) no useful predictions can be made until all the observations ϕ

1

, …, ϕ

N

have been made and processed. In many applications it would be preferable to start making predictions (albeit rather bad ones) right from the start and have their quality improve as more and more data is assimilated.

c) in many situations the function G may not be stationary but will vary slowly with time, or occasionally change suddenly. In such an environment a batch estimation scheme will be very unsatisfactory since it will repeatedly have to discard its previous estimate of G and compute a new one starting from scratch. Furthermore, as mentioned in b) during each such recalculation there will be a delay before the estimate based on new data becomes available.

It would thus be better, particularly for real time signal processing applications to use prediction algorithms which continuously update the estimate G λ using new observations ϕ

N+1

, ϕ

N+2

, … as they are made. It turns out that this can be done using the framework of recursive least squares estima-

tion (e.g. [Young, 1984]). Recursive least squares techniques are of course well known in linear signal processing and form the basis of most adaptive filter architectures (e.g. [Alexander, 1986]). Unfortunately the standard least squares algorithms used in such linear schemes are not sufficiently stable or accurate for application to chaotic time series and in proves necessary to use more sophisticated recursive approaches such as the Recursive Modified Gram-Schmidt (RMGS) algorithm of

Ling et. al. [1986]. This yields results comparable to those obtained from the batch procedure described above [Stark, 1993].

5. NOISE REDUCTION AND SIGNAL SEPARATION

So far we have considered the time series { ϕ n

} in isolation. In most applications, however, we are unlikely to be given such a pure chaotic signal. Instead, we will be asked to manipulate a mixture

ψ n

= ϕ n

+

ξ n

of a chaotic time series { ϕ n

} and some other signal { in which case we want to remove it from { case we want to extract it from {

ψ

ψ n

} and discard { ϕ

ξ n

}. The latter may represent noise, n

}, or it may be a signal that we wish to detect, in which n

}. An example of the latter might be a faint speech signal {

ξ n

} masked by deterministic “noise” { ϕ n

} coming from some kind of vibrating machinery, such as an air conditioner (e.g. [Taylor, 1991]). In both cases, the mathematical problem that we face amounts to separating {

ψ n

} into its two components { ϕ n

} and {

ξ n

}. This is of course closely related to the property of “shadowing” which is found in many chaotic systems, and has already been mentioned by Colin Sparrow. This is because in the reconstructed state space the points u n

= ( ϕ

ψ

n-d+1

,

ψ

n-d+2 n

). Thus finding { ϕ

, …,

ψ n n

) form a pseudo-orbit close to the real orbit v n

= (

}, given {

ψ ϕ

n-d+1

, ϕ

n-d+2

, …, n

}, amounts to finding a real orbit which shadows the given pseudo-orbit.

Friday, November 18, 1994.

18 Jaroslav Stark, Analysis of Time Series

Several schemes have been developed in the last few years to perform this task (e.g. [Kostelich and

Yorke, 1988], [Hammel, 1990], [Farmer and Sidorowich, 1991], [Taylor, 1991], [Schreiber and

Grassberger, 1991], [Stark and Arumugam, 1992] and Davies [1992, 1993]). Here, we shall outline the overall framework behind all these approaches and illustrate it with a particularly simple method which is aimed at the situation when {

ξ n

} is a relatively slowly varying signal [Stark and

Arumugam, 1992].

First observe that the decomposition of { ϕ

ˆ ϕ

1 ϕ d some additional constraints on {

ξ

ψ n

−

1

) and obtain the trivial decomposition n

} into {

ψ n n ϕ n n

} and {

+ (

ψ n

ξ n

} is not unique. In fact, we can n ϕ n

= G( ˆ n

− d

). It is thus necessary to impose n

}. The most common is to minimize the size of {

ξ ϕ n

− d

+

1

,

…

, n

} with respect to some appropriate norm [Farmer and Sidorowich, 1991]. In many cases, just requiring {

ξ n

} to be

“small” for all n is sufficient to establish uniqueness. To see this, note that if { for most choices of ˆ

Hence if {

ξ n

1

, …, ˆ

ξ n

= ϕ

ψ d n

, the distance ϕ n

= ϕ n ϕ

n ϕ n

+

- ˆ

ξ ϕ n ϕ n

} is chaotic, then

will grow rapidly (if it is not large already).

n

will be large for at least some values of n. This is in fact the same argument that shows that for many chaotic systems there can only be one shadowing orbit which stays close to a given pseudo-orbit.

From now on we shall therefore restrict ourselves to the situation where {

ξ n

} is small in comparison to { ϕ n

}. The problem of separating {

ψ n

} into its components then naturally falls into two parts: a) Performing the decomposition when the function G is known.

b) Estimating the function G from the combined time series {

ψ n

} (rather than from { ϕ n

}).

We already have all the tools required to solve the second problem. The basic idea is to apply the techniques of the last section to a large sample of {

ψ n

}, in which case, with some luck, most of the effects of {

ξ n

} will average out and a reasonable estimate of G can be made. We can then proceed n

} of {

ξ n

ψ n

=

ψ n n

should be much more deterministic than {

ψ n

} and hence we should be able to obtain a better estimate of G from it. This procedure can then be repeated as often as necessary.

1.5 10 - 4

1 10 - 4

5 10 - 5

0 10 0

-5 10 - 5

0 200 400 600 800 n

Figure 20. Signal added to Hénon time series for signal extraction examples.

1000

Friday, November 18, 1994.

19 Jaroslav Stark, Analysis of Time Series

Let us thus turn our attention to the first problem, namely that of performing the decomposition when G is known. The basic approach is to look for a discrepancy

δ

ψ n

=

ψ zero value of

δ

ψ ξ n

will therefore indicate the presence of some non-trivial signal. This is illustrated by

Figures 20 and 21. The signal {

ξ

ξ ξ

ψ n

- G(

ψ

n-d

,

ψ

n-d+1

, …,

ψ

G(

n-1

) between the observed value of

ψ

n-d

,

n-d+1

, …,

n-1

). If

n-d

= n

and that which is predicted by the deterministic dynamics

n-d +1

=

…

= n

= 0 then this discrepancy will be zero. A nonn

} from Fig. 20 was added to the Hénon time series from Fig. 1. An estimate G λ of G was made from the combined time series { made, just as for Fig. 14. This was then subtracted from {

ψ

ψ n

} and a one step prediction was n

} to yield {

δ n

} as shown in Fig. 21. It is clear that this procedure is able to detect the presence of the pulse and even to some extent extract its qualitative features, but does not yield much in the way of quantitative information.

4 10 - 4

2 10 - 4

0 10 0

-2 10 - 4

0 200 400 600 800 n

Figure 21. Discrepancy

δ n

when above signal is added to Hénon time series.

1000

To proceed further we expand

δ n

assuming that {

ξ n

} is small:

δ n

=

ψ n

- G(

ψ

n-d

,

ψ

n-d+1

, …,

ψ

n-1

)

= ϕ n

+

ξ n

- G( ϕ

n-d

+

ξ

n-d

, ϕ

n-d+1

+

ξ

n-d+1

, …, ϕ

n-1

+

ξ

n-1

) (21)

≅ ξ n

- d ∑

ξ

n-i

∂

G(

ψ n

− d

∂ψ

,

K

,

ψ n

−

1

)

(22) i

=

1 n

− i

Allowing n to vary, Eq. 22 gives a set of simultaneous linear equations for {

δ n

}. These lie at the heart of most approaches to signal separation. Although several different techniques can be used to solve these equations, great care has to be taken when the dynamics of { ϕ n

} is chaotic, since in that case this set of equations becomes very badly conditioned. One possibility is to use the singular value decomposition, which is able to cope with very badly conditioned linear problems. This is essentially the technique used by Farmer and Sidorowich [1991]. They in fact solve Eq. 22 repeatedly, regarding it as a Newton step in solving the full non-linear problem given by Eq. 21. They also impose additional equations designed to ensure that the final { ϕ n

} has minimal norm. Davies [1992 and 1993] gives an elegant framework for this kind of approach.

Friday, November 18, 1994.

20 Jaroslav Stark, Analysis of Time Series

Here, however, we shall describe a simpler approach that can be used if we make additonal assumptions about the properties of {

ξ n

}. In particular we assume that {

ξ n

} is slowly varying. This for instance is the case for the signal in Fig. 20, except in a small neighbourhood of the transient (and as we shall see our scheme works reasonably well even there). We thus assume that

in comparison to

ξ n

. It is then reasonable to set

ξ

n-d

≅ ξ

n-d+1

≅ … ≅ ξ n

ξ n

-

ξ

n-1

is small

in Eq. 22, which gives

δ n

≅ ξ n

(1 - J n

) (23) where

J n

= d ∑ i

=

1

∂

G(

ψ n

− d

∂ψ

,

K

, n

− i

ψ n

−

1

)

(24)

This gives

ξ ˜ n

≅

δ n

1

−

J n

(25) as an estimate for

ξ

ξ n

ξ n

+

1

ξ n

. This turns out to work extremely well, except for the occasional cases when J n comes close to 1. This can be overcome by noting that under the assumption n

, Eq. 25 is an equally valid estimate for any of proportional to

1 - J n

ξ n

+ d

ξ

n-d

,

ξ

n-d+1

, …,

ξ

ξ

n-d

≅ ξ

n-d+1

≅

…

≅ n

. Thus, it is reasonable to use

J k

is maximized. Let k(n) be this value, so that n

≤

k(n)

≤

n+d and

1 - J

n+d. Our best estimate for

ξ

as an estimate for n

is then

ξ

k(n)

ξ n

. Since the error in Eq. 21 is essentially inversely

ξ k

for which the corresponding

1 -

k(n)

≥

1 - J i

for all n

≤

i

≤

. This is shown plotted in Figure 22, and as we can see has managed to recover the original pulse almost perfectly. We stress all the information required for this has come from combined signal {

ψ n

} and in particular the dynamics G λ was estimated from this.

1.5 10 - 4

1 10 - 4

5 10 - 5

0 10 0

-5 10 - 5

0 200 400 600 800 1000 n

Figure 22. Recovered signal

ξ ˜ k( n )

.

Observe that in traditional signal processing terms, if we identify { the signal { ϕ n

} as the “noise” contaminating

ξ n

}, we are able to recover the signal at a signal to noise ratio of -80dB. This is of course far in excess of what could be done using conventional linear filtering. In this context, it should be

Friday, November 18, 1994.

21 Jaroslav Stark, Analysis of Time Series

pointed out that, in common with most such schemes, the above algorithm’s performance increases as the amplitude of {

ξ n

} decreases, down to some lower limit set by numerical inaccuracy. This is in complete contrast to conventional signal processing techniques where signal extraction performance deteriorates with decreasing signal amplitude.

6. CONTROLING CHAOTIC SYSTEMS

So far, we have considered the presence or absence of chaos in a given system as something beyond our control. In many cases however, we would like to avoid chaotic behaviour, or at least modify it in such a way as to perform a useful function. One approach would be to make large and possibly costly alterations to the system to completely change its dynamics and ensure that the modified system is not capable of chaotic behaviour. This may, however, not be feasible for many practical systems. An alternative, due to Ott et. al. [1990] is to attempt to control the system using only small time dependent perturbations of an accessible system parameter. The key fact behind their idea is that a typical chaotic system, such as the Hénon map, will contain an infinite number of unstable periodic orbits. Normally, these will not be observed, precisely because they are unstable, but any typical trajectory of the system will come arbitrarily close to them infinitely often. This can be seen in

Fig. 1, where {x n

} comes close to an unstable fixed point near to x = 0.6, at about n = 150 and again at n = 640 and n = 690.

The effects of the unstable periodic orbits can thus be seen in an observed time series { ϕ n

}. Several groups have shown that an accurate estimate of their position and eigenvalues can be derived from the time series (e.g. [Auerbach et al., 1987] and [Lathrop and Kostelich, 1989]). Such estimates make use of many of the techniques for modelling chaotic time series that we have described in the previous sections. As usual, Takens’s theorem is used to reconstruct the original dynamical system, whilst a local estimate to the function G around a periodic orbit can be used to calculate the orbit’s eigenvalues.

The basic idea behind controlling a chaotic system is to choose one of these periodic orbits and attempt to stabilize it by small perturbations of a parameter. This is possible precisely because chaotic systems are so sensitive to small changes. Normally, such sensitivity simply leads to instability and complex behaviour of the kind seen in Fig. 1. However, if the perturbations are carefully chosen, they can push the system into the desired periodic regime and then keep it there. Ott et. al. [1990] first demonstrated the feasibility of their algorithm using numerical simulations, but since then it has been applied successfully to the control of a variety of real systems (Ditto et al., 1990], [Singer et al., 1991] and [Hunt et al., 1991]). Once again, this algorithm relies on a local approximation of the function G around the periodic orbit.

One of the great advantages of this approach is that there is potentially a large number of different periodic orbits which we can stabilize. We can thus choose precisely that orbit which gives the best system performance in a given application. Furthermore, we can easily switch amongst the different orbits available, again using only small changes in the control parameter. In principle, it should thus be possible to obtain many substantially different classes of behaviour from the same chaotic system. This is in complete contrast to systems which lack a chaotic attractor and operate at a stable equilibrium or periodic orbit. For such systems, small parameter perturbations can only move the orbit by a small amount, but cannot generally lead to dramatically different behaviour. One is thus essentially restricted to whatever behaviour is given by the stable orbit and it is difficult to make substantial improvements in performance without major changes to the system.

A further extension to this idea is described in [Shinbrot et al., 1990]. Here, rather than aiming to operate the system in a given periodic steady state, one is trying to direct the state of the system to a

Friday, November 18, 1994.

22 Jaroslav Stark, Analysis of Time Series

desired target state in as short a time as possible using only small perturbations of the control parameter. It turns out that essentially the same framework as above can be used to achieve this, and once again a chaotic system’s extreme sensitivity to small perturbations can be used to our advantage.

7. CHARACTERIZING CHAOTIC TIME SERIES

Given an apparently complex time series { ϕ n

} such as those in Fig. 1-3, how do we tell whether it has come from a deterministic chaotic system or from some kind of stochastic process such as the

ARMA model of Eq. 2? A variety of techniques for answering this question exist, all based upon the reconstruction technique described in §3.

Thus, from the observed series { ϕ

{v n

= ( ϕ

n-d+1

, ϕ n

: n = 1, …, N} we form the d-dimensional reconstructed orbit

n-d+2

, …, ϕ n

) : n = d, …, N}. As mentioned above, this is in some sense equivalent to the original orbit {x n

= x(n

τ

) : n = d, …, N} in the unknown phase space; more precisely the coordinate independent properties of {v n

} are the same as those of {x n

}. The basic idea for characterizing chaotic behaviour is therefore to compute one more or more quantities which are invariant under co-ordinate changes and indicate the presence or absence of chaos.

As already described in Colin Sparrow’s lecture, and in §2, one of the most fundamental characteristics of chaotic behaviour is the exponential divergence of nearby trajectories. We saw this in Fig.

5, and saw its effects on our ability to make long range predictions in Fig. 19. Recall that the rate of this divergence is measured using Liapunov exponents. Thus if x

′ n

is a small perturbation of x n

we expect to see that x

′ n

− x n

~ e

λ n (26) for where some constant

λ

. In fact, there will be m different values of

λ

, depending on the direction of the initial perturbation. These m values are known as the Liapunov exponents of the original dynamical system (e.g. [Eckmann and Ruelle, 1985], [Abarbanel et al., 1993]) and it is usual to describe the system as chaotic if at least one is positive. Now, note that if x

0

was an equilibrium point then

λ

would then just be an eigenvalue of the linearized differential equation around this equilibrium. It is well known that eigenvalues are preserved under co-ordinate changes (this point was already implicit in our discussion of chaotic control above) and in exactly the same way it can be shown that Liapunov exponents remain invariant under smooth co-ordinate changes, and in particular that the exponents for the reconstructed system are the same as those for the original dynamics.

In other words we can estimate the original Liapunov exponents by observing the separation of two nearby reconstructed trajectories v

′ n

and v n

. The best way of doing this is to estimate the local dynamics at v n

for each n, as briefly described under local prediction in §3. The linear part of this (i.e.

the Jacobian matrix) gives us the behaviour of small perturbations close to v n

. The resulting linearizations for each n can then be composed (i.e. by multiplication of the corresponding matrices) to yield an estimate over the whole of the observed time series of the rate of divergence. Details of practical algorithms can be found in [Eckmann and Ruelle, 1985], [Wolf et al., 1985], [Eckmann et al., 1986] and [Abarbanel et al., 1993].

Such numerical determination of Liapunov exponents has been very popular, and has been attempted with a wide variety of observed time series. Unfortunately, estimates of Liapunov exponents tend to converge rather slowly and hence large quantities of high quality data are required to obtain accurate results. In many cases sufficient data will not be available and attempting to draw conclusions from such estimates is at best a waste of time and at worst can be highly misleading. It

Friday, November 18, 1994.

23 Jaroslav Stark, Analysis of Time Series

is probably fair to say that many of the published papers claiming to have detected chaos in observed time series should therefore be treated with healthy scepticism.

An alternative invariant is a quantity called the correlation dimension D

C

(e.g. [Eckmann and

Ruelle, 1985], [Abarbanel et al., 1993]). This ignores the dynamics and concentrates on the properties of the attractor in IR m

on which the points {x n

} lie. In particular it attempts to measure the number of variables required to describe this set. If all the x n

are identical (so that all the ϕ n

are constant) then D

C

will be zero. If they lie on some smooth curve then D

C

will equal 1 and if they fill a plane it will equal 2. At the other extreme, if the x n

completely fill IR m

then we will have D

C

= m.

Intriguingly, D

C

need not be an integer (it is about 1.21… for the Hénon map) and is thus an example of a fractal dimension.

As with the Liapunov exponents, the correlation dimension is co-ordinate independent and hence is the same for {x n

} and {v n

}. It can be estimated from a finite sample {v n

: n = 1, …, N} as follows.

First form all the N 2 possible pairs (v i

,v j

) of such points. Calculate the Euclidean distance r ij

between each pair v i

and v j

. Recall that an expression for this in terms of the original time series { ϕ n

} was already given in Eq. 20.

Now, for a given

ε

, let N(

ε

) be number of pairs (i,j) such that r ij

≤ ε

. Then C(

ε

) = N(

ε

)/N 2 is the proportion of pairs of points within a distance of

ε

of each other. A simple calculation shows that if all the points {v n

} lie randomly on some curve we have roughly C(

ε

) ~

ε

for large N and small

ε

. Similarly if the {v n

} lie on a surface we get C(

ε

) ~

ε

2 . This suggests that C(

ε

) behaves exponentially for small

ε

with the exponent giving the dimension of the set on which the {v n

} lie. This motivates the definition of the correlation dimension

D

C

= lim

ε→

0 log C(

ε

) log

ε

(27)

The quantity C(

ε

) is called a correlation integral and can be readily estimated numerically. The main difficulty lies in finding all the points within a distance

ε

of a given point v i

. Several efficient algorithms exist for doing this, even for moderately large data sets (e.g. N = 10 6 ) (e.g. [Theiler,

1987], [Bingham and Kot, 1989], [Grassberger, 1990]). Note that exactly the same problem (of finding all the points in a given neighbourhood) arises in the local prediction algorithms referred to in

§3, and indeed such searching is by far the slowest part of such techniques. Once has been computed for a range of

ε

, we can estimate D

C

by plotting log C(

ε

) against log

ε

and estimating the slope of the resulting line. Again, a reasonable size data set is required to obtain meaningful values of D

C

, but probably not as large as is needed for the computation of Liapunov exponents. Nevertheless published conclusions should be treated with some caution.

So far, we have assumed that we know the size of d required to reconstruct the time series { ϕ n

}.

When this is not the case, we proceed by trial and error. Thus, we calculate a correlation dimension

D

C

(d) for each trial choice of d. When d is too small the set {v n

} will completely fill IR will get D

C

(d)

≅

d (we must of course always have 0

≤

D m

and we

C

(d)

≤

d). Conversely once d is sufficiently large the computed value D

C

(d) should stabilize at approximately the correct correlation dimension of {x n

}. As an example, for the Hénon map we get D

C

(1)

≅

1 and D

C

(2)

≅

D

C

(3)

≅

D

C

(4)

≅

1.21.

When D

C

is not an integer, as in this case, we say that the system contains a strange attractor. This is usually a sign of chaos (as indicated by a positive Liapunov exponent), although strange nonchaotic systems do exist (but are currently believed to be pathological). Note also that many other fractal dimensions exist to characterize fractal sets, but D

C

is probably the easiest to estimate numerically.

Friday, November 18, 1994.

24 Jaroslav Stark, Analysis of Time Series

It may of course happen that D

C

(d) continues to grow with d. This usually suggests that the time series { ϕ n

} was generated by a stochastic process rather than by a chaotic dynamical system. Thus for example, a random process such as white noise will have D

C

=

∞

. Of course, we would also get this result if the original dynamical system was genuinely infinite dimensional, but from many points of view such a system is indistinguishable from a random one. A variety of statistical sets for distinguishing chaos from stochastic noise have been devised but their utility in real applications is still rather limited.

In the above procedure, the smallest value of d at which D

C

(d) begins to stabilize yields the minimal embedding dimension required to adequately represent the dynamics of the system. Computing the correlation dimension in this way thus yields both a measure of a time series’s complexity, and an estimate of the embedding dimension required for any further processing. It is thus usually the first step in analysing time series which we suspect might have been generated by a chaotic system. In practice, lack of data and numerical precision limit calculations to about d

≤

10 (and hence D

C

≤

10). From the point of view of these notes, therefore, any time series with D

C

appreciably larger than 10 can be treated more or less as a truly random one.

Recently, several authors ([

(

Cenys and Pyragas, 1988], [Liebert et al., 1991], [Kennel et al., 1992] and [Buzug and Pfister, 1992]) have pointed out that estimating d by the above procedure is unnecessarily complicated and that one can attempt to do this directly. The basic idea is that if d is sufficiently large then if two points ( ϕ ϕ ϕ then if we increase d by one, the two points (

j+1

) should still be close in IR

i-d+1

,

i-d+2

, …, ϕ i

,

i+1

) and (

d+1

. One the other hand if d is too small then (

i+1

) and (

i-d+2

, …, ϕ ϕ

j-d+1

, ϕ i

) and (

j-d+2

, …, ϕ

j-d+1

, ϕ close then so must the values ϕ j

, ϕ

j-d+2 ϕ ϕ

i-d+1

j+1

) should be much further away from each other than ( ϕ

, …, ϕ

, ϕ

i-d+2

, …, ϕ ϕ i

) and ( ϕ ϕ

j-d+1

, ϕ ϕ

j-d+2

, …, ϕ ϕ ϕ j

) are close in IR

j-d+1

i-d+1

,

, ϕ ϕ

j-d+2

i-d+2

, …, ϕ

, …, ϕ

i-d+1 d j

, i

, j

) were. Of course all this is saying is that if d is sufficiently large to give us a proper reconstruction then if ( ϕ

i-d+1

, ϕ

i-d+2

, …, ϕ i

) and ( ϕ

j-d+1

, ϕ

j-d+2

, …, ϕ j

) are

i+1

and ϕ

j+1

. Hence in the context of §3, a local 0 th predictor (which

, we called “forecasting by analogy”) will yield reasonable forecasts. We thus see that the above approach is simply testing the crude predictability of the time series for various values of d. There seems to be no reason to restrict oneself to 0 th order local predictors which suggests that the best way of determining d is a purely empirical one: estimate G for each candidate value of d and use the smallest one which gives a good fit.

Friday, November 18, 1994.

25 Jaroslav Stark, Analysis of Time Series

FURTHER READING

The recently published reprint collection by Ott et al. [1994] contains an excellent selection of the most important papers in this area, and includes a 60 page introduction by the authors. Two recent reviews, by Abarbanel et al. [1993] and by Grassberger et al. [1992] also provide comprehensive surveys of chaotic time series analysis, with many examples of applications. Finally a large part of volume 54 of the Journal of the Royal Statistical Society B is devoted to this subject, and may be particularly useful to those interested in the more statistical aspects of chaotic time series.

REFERENCES AND BIBLIOGRAPHY

H. D. I. Abarbanel, R. Brown and J. B. Kadtke, 1989, Prediction and System Identification in

Chaotic Time Series with Broadband Fourier Spectra, Phys. Lett. A, 138, 401-408.

H. D. I. Abarbanel, R. Brown, J. J. Sidorowich, L. S. Tsimring, 1993, The Analysis Of Observed

Chaotic Data In Physical Systems, Rev. Mod. Phys., 65, 1331-1392.

S. T. Alexander, 1986, Adaptive Signal Processing, Theory and Applications, Springer-Verlag.

D. Auerbach, P. Cvitanovi c

′

, J.-P. Eckmann, G. Gunaratne and I. Procaccia, 1987, Exploring

Chaotic Motion Through Periodic Orbits, Phys. Rev. Lett., 58, 2387-2389.

S.A. Billings, M.J. Korenberg and S. Chen, 1988, Identification of Non-linear Output-Affine Systems Using an Orthogonal Least Squares Algorithm, Int. J. Systems Sci., 19, 1559-1568.

S. Bingham and M. Kot, 1989, Multidimensional Trees, Range Searching, and a Correlation Dimension Algorithm of Reduced Complexity, Phys. Lett. A, 140, 327-330.

A. Blyth, 1992, Least Squares Approximation of Chaotic Time Series Using Radial Basis Functions,

MSc Dissertation, Department of Mathematics, Imperial College.

D. S. Broomhead and D. Lowe, 1988, Multivariable Functional Interpolation and Adaptive Networks, Complex Systems, 2, 321-355.

J. R. Bunch and C. P. Nielsen, 1978, Updating the Singular Value Decomposition, Numerische

Mathematik, 31, 111-129.

Th. Buzug and G. Pfister, 1992, Optimal Delay time and Embedding Dimension for Delay-Time

Coordinates by Analysis of the Global Static and and Local Dynamical Behaviour of Strange

Attractors, Phys. Rev. A, 45, 7073-7084.

M. Casdagli, 1989, Nonlinear Prediction of Chaotic Time Series, Physica D, 35, 335-356.

M. Casdagli, 1992, Chaos and Deterministic versus Stochastic Non-linear Modelling, J. Roy. Stat.

Soc. B, 54, 303-328.

M. Casdagli, S. Eubank, J. D. Farmer, and J. Gibson, 1991, State Space Reconstruction in the Presence of Noise, Physica D, 51, 52-98.

A.

(

C enys and K. Pyragas, 1988, Estimation of the Number of Degrees of Freedom from Chaotic

Time Series, Phys. Lett. A, 129, 227-230.

S. Chen, S.A. Billings and W. Luo, 1989, Orthogonal Least Squares Methods and Their Application to Non-linear Systems Identification, Int. J. Control, 50, 1873-1896.

S. Chen, S.A. Billings, C. F. N. Cowan and P. M. Grant, 1990a, Practical Identification of NAR-

MAX Models Using Radial Basis Functions, Int. J. Control, 52, 1327-1350.

Friday, November 18, 1994.

26 Jaroslav Stark, Analysis of Time Series

S. Chen, S.A. Billings, C. F. N. Cowan and P. M. Grant, 1990b, Non-linear Systems Identification

Using Radial Basis Functions, Int. J. Systems Sci., 21, 2513-2539.

S. Chen, C. F. N. Cowan and P. M. Grant, 1991, Orthogonal Least Squares Learning Algorithm for

Radial Basis Function Networks, IEEE Trans. Neural Networks, 2, 302-309.

J. P. Crutchfield and B. S. McNamara, 1987, Equations of Motion from a Data Series, Complex

Systems, 1, 417-452.