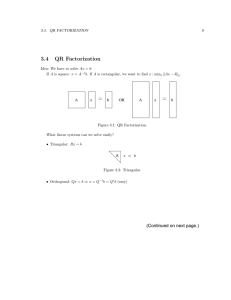

Example of QR Decomposition Using Projections


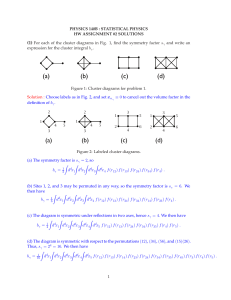

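The third semester of calculus tends to start with analytic geometry. The prerequisites for analytic geometry are very basic algebra, geometry, and a good knowledge of trigonometry. In relation to linear algebra, a topic of special interest is the QR decomposition, which is built from the vector projections of analytic geometry. Most beginning linear algebra books present the necessary background well before the QR decomposition is discussed. Therefore, a student who has not had third-semester calculus should pick up the needed facts in earlier parts of the text.

In terms of notation, define the projection of $\vec u$ onto $\vec v$ as

$$
\operatorname{proj}_{\vec v}\vec u = \|\vec u\|\cos\theta\,\frac{\vec v}{\|\vec v\|}
= \|\vec u\|\,\frac{\vec u\cdot\vec v}{\|\vec u\|\,\|\vec v\|}\,\frac{\vec v}{\|\vec v\|}
= \left(\vec u\cdot\frac{\vec v}{\|\vec v\|}\right)\frac{\vec v}{\|\vec v\|},
$$

where $\theta$ is the angle between $\vec u$ and $\vec v$. Note that the norm of $\vec v/\|\vec v\|$ is 1.

Consider the matrix

$$
A = [\vec v_1, \vec v_2, \vec v_3] =
\begin{bmatrix} 4 & 6 & 5 \\ -2 & 3 & 0 \\ 1 & -7 & -9 \\ 8 & 0 & 1 \end{bmatrix}.
$$

There are two ways to think of the first step. Either project $\vec v_1$ onto itself, or factor $\vec v_1$ as its length times a vector of length 1 in the same direction. That is, define $\vec q_1 = \vec v_1/\|\vec v_1\|$ and $r_{11} = \|\vec v_1\|$. From this,

$$\vec v_1 = \operatorname{proj}_{\vec q_1}\vec v_1 = \|\vec v_1\|\,\vec q_1 = \vec q_1 r_{11}.$$

The results are

$$
r_{11} = \|\vec v_1\| = \sqrt{85} = 9.2195
\quad\text{and}\quad
\vec q_1 = \frac{\vec v_1}{\|\vec v_1\|} = \begin{bmatrix} .4339 \\ -.2169 \\ .1085 \\ .8677 \end{bmatrix}.
$$

At this stage the first column of $A$ factors as

$$
[\vec v_1] = [\vec q_1][r_{11}] = \begin{bmatrix} .4339 \\ -.2169 \\ .1085 \\ .8677 \end{bmatrix}[9.2195].
$$

For the next step, decompose $\vec v_2$ into orthogonal vectors by projecting $\vec v_2$ onto $\vec q_1$ and defining $\vec w_2$ as the remaining portion (see the figure below), which points in a direction orthogonal to $\vec q_1$. That is,

$$
\begin{aligned}
\vec v_2 &= \operatorname{proj}_{\vec q_1}\vec v_2 + \vec w_2
 = (\|\vec v_2\|\cos\psi)\,\vec q_1 + \vec w_2 \\
&= \|\vec v_2\|\,\frac{\vec v_2\cdot\vec q_1}{\|\vec v_2\|\,\|\vec q_1\|}\,\vec q_1 + \vec w_2
 = (\vec v_2\cdot\vec q_1)\,\vec q_1 + \vec w_2 \\
&= (\vec q_1^{T}\vec v_2)\,\vec q_1 + \vec w_2
 = \vec q_1 r_{12} + \vec w_2,
\end{aligned}
$$

where $\psi$ is the angle between $\vec v_2$ and $\vec q_1$ and $r_{12} = \vec q_1^{T}\vec v_2$.

*Figure: a triangle with hypotenuse $\vec v_2$, lower side in the direction of $\vec q_1$, and opposite side $\vec w_2$.*

For our example,

$$
r_{12} = \vec q_1^{T}\vec v_2 = 1.1931,
\qquad
\vec w_2 = \vec v_2 - \vec q_1 r_{12} = \begin{bmatrix} 5.4824 \\ 3.2588 \\ -7.1294 \\ -1.0353 \end{bmatrix}.
$$

Most often $\|\vec w_2\| \neq 1$. Assume $\vec w_2 = \vec q_2 r_{22}$ such that $\|\vec q_2\| = 1$.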
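The first two steps can be checked numerically. The sketch below is a minimal plain-Python illustration that assumes no linear algebra library; the helper names `dot` and `norm` are our own, not from the text. It reproduces $r_{11}$, $\vec q_1$, $r_{12}$, and $\vec w_2$ for the example matrix and confirms that $\vec w_2$ is orthogonal to $\vec q_1$.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean length ||u||."""
    return math.sqrt(dot(u, u))

# First two columns of A from the example.
v1 = [4, -2, 1, 8]
v2 = [6, 3, -7, 0]

# Step 1: v1 = q1 * r11, with q1 a unit vector in the direction of v1.
r11 = norm(v1)
q1 = [x / r11 for x in v1]

# Step 2: v2 = q1 * r12 + w2, where r12 = q1^T v2 and w2 is orthogonal to q1.
r12 = dot(q1, v2)
w2 = [a - r12 * b for a, b in zip(v2, q1)]

print(round(r11, 4), [round(x, 4) for x in q1])  # 9.2195 [0.4339, -0.2169, 0.1085, 0.8677]
print(round(r12, 4), [round(x, 4) for x in w2])  # 1.1931 [5.4824, 3.2588, -7.1294, -1.0353]
print(abs(dot(q1, w2)) < 1e-12)                  # True
```

Subtracting $r_{12}\vec q_1$ from $\vec v_2$ is exactly the "remaining portion" described above, so the orthogonality check is the numerical counterpart of the right angle in the triangle.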
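Normalize $\vec w_2$ by letting $r_{22} = \|\vec w_2\| = 9.6217$ and $\vec q_2 = \frac{1}{\|\vec w_2\|}\vec w_2$. At this stage, the first two columns of $A$ factor as

$$
[\vec v_1, \vec v_2] = [\vec q_1, \vec q_2]\begin{bmatrix} r_{11} & r_{12} \\ 0 & r_{22}\end{bmatrix}
= \begin{bmatrix} .4339 & .5698 \\ -.2169 & .3387 \\ .1085 & -.7410 \\ .8677 & -.1076 \end{bmatrix}
\begin{bmatrix} 9.2195 & 1.1931 \\ 0 & 9.6217 \end{bmatrix}.
$$

For the final step, decompose $\vec v_3$ into three terms; i.e.,

$$
\begin{aligned}
\vec v_3 &= \operatorname{proj}_{\vec q_1}\vec v_3 + \operatorname{proj}_{\vec q_2}\vec v_3 + \vec w_3 \\
&= (\|\vec v_3\|\cos\alpha)\,\vec q_1 + (\|\vec v_3\|\cos\beta)\,\vec q_2 + \vec w_3 \\
&= (\vec v_3\cdot\vec q_1)\,\vec q_1 + (\vec v_3\cdot\vec q_2)\,\vec q_2 + \vec w_3 \\
&= (\vec q_1^{T}\vec v_3)\,\vec q_1 + (\vec q_2^{T}\vec v_3)\,\vec q_2 + \vec w_3 \\
&= \vec q_1(\vec q_1^{T}\vec v_3) + \vec q_2(\vec q_2^{T}\vec v_3) + \vec w_3 \\
&= \vec q_1 r_{13} + \vec q_2 r_{23} + \vec w_3,
\end{aligned}
$$

where $\alpha$ and $\beta$ are the angles between $\vec v_3$ and $\vec q_1$, $\vec q_2$, respectively. The first results are $r_{13} = 2.0608$, $r_{23} = 9.4101$, and

$$\vec w_3 = \begin{bmatrix} -1.2559 \\ -2.7401 \\ -2.2509 \\ 0.2243 \end{bmatrix}.$$

Then $r_{33} = \|\vec w_3\| = 3.7686$, and

$$\vec q_3 = \frac{1}{\|\vec w_3\|}\vec w_3 = \begin{bmatrix} -0.3333 \\ -0.7271 \\ -0.5973 \\ 0.0595 \end{bmatrix}.$$

Finally,

$$
[\vec v_1, \vec v_2, \vec v_3] = [\vec q_1, \vec q_2, \vec q_3]
\begin{bmatrix} r_{11} & r_{12} & r_{13} \\ 0 & r_{22} & r_{23} \\ 0 & 0 & r_{33}\end{bmatrix}
= \begin{bmatrix} 0.4339 & 0.5698 & -0.3333 \\ -0.2169 & 0.3387 & -0.7271 \\ 0.1085 & -0.7410 & -0.5973 \\ 0.8677 & -0.1076 & 0.0595 \end{bmatrix}
\begin{bmatrix} 9.2195 & 1.1931 & 2.0608 \\ 0 & 9.6217 & 9.4101 \\ 0 & 0 & 3.7686 \end{bmatrix}.
$$

Because the vectors $\vec q_j$, $j = 1, 2, 3$, are constructed to be orthonormal, the $4\times 3$ matrix $Q$ whose columns are the $\vec q_j$ satisfies $Q^{T}Q = I_{3\times 3}$. Note, however, that $QQ^{T} \neq I_{4\times 4}$; instead, $QQ^{T}$ is the projection onto the column space of $A$.

When solving systems of the form $A_{m\times n}\vec x = \vec b$, if $A$ is square and invertible ($m = n$), or the system is overdetermined ($m > n$) and the columns of $A$ are linearly independent, then the system can be solved with

$$A\vec x = \vec b \;\Longrightarrow\; QR\vec x = \vec b \;\Longrightarrow\; R\vec x = Q^{T}\vec b,$$

where the last step multiplies both sides on the left by $Q^{T}$ and uses $Q^{T}Q = I$. In the overdetermined case, the solution of $R\vec x = Q^{T}\vec b$ is the least-squares solution.

Since our particular example is overdetermined, it is unlikely that $\vec b - A\vec x = \vec 0$. The mean squared error would be

$$
\text{Error} = \frac{1}{m}\sum_{j=1}^{m}\bigl((A\vec x)_j - b_j\bigr)^2 = \frac{1}{m}\|A\vec x - \vec b\|^2,
$$

where $m = 4$ is the number of equations. Because $Q$ has only three columns, $\|A\vec x - \vec b\|^2 = \|R\vec x - Q^{T}\vec b\|^2 + \|\vec b - QQ^{T}\vec b\|^2$; at the least-squares solution the first term vanishes, so the error equals $\frac{1}{m}\|\vec b - QQ^{T}\vec b\|^2$.

Example 2 - Starting from Different Vectors

Consider a QR decomposition of the following vectors:

$$
\vec v_1 = \begin{bmatrix} -3 \\ 2 \\ 4 \\ 1 \end{bmatrix},\quad
\vec v_2 = \begin{bmatrix} 6 \\ 1 \\ -3 \\ 2 \end{bmatrix},\quad
\vec v_3 = \begin{bmatrix} 8 \\ 0 \\ 9 \\ 0 \end{bmatrix}.
$$

Step 1: Begin with $\vec w_1 = \vec v_1$, $r_{11} = \|\vec v_1\| = 5.4772$, and $\vec q_1 = \frac{\vec w_1}{\|\vec w_1\|}$. That is,

$$
\vec w_1 = \vec q_1 r_{11} = \begin{bmatrix} -.54772 \\ .36514 \\ .73029 \\ .18257 \end{bmatrix}[5.4772].
$$

Step 2: Bring in $\vec v_2$ by projecting it onto $\vec q_1$, with the remainder in the vector $\vec w_2$. The two components of $\vec v_2$ (its projection onto $\vec q_1$ and $\vec w_2$) are orthogonal.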
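In symbols,

$$
\vec v_2 = \operatorname{proj}_{\vec q_1}\vec v_2 + \vec w_2 = (\vec q_1\cdot\vec v_2)\,\vec q_1 + \vec w_2 = \vec q_1 r_{12} + \vec w_2,
$$

where $r_{12} = \vec q_1\cdot\vec v_2 = -4.7469$ and $\vec q_1\cdot\vec w_2 = 0$. To compute, use $\vec w_2 = \vec v_2 - \vec q_1 r_{12}$, followed by $r_{22} = \|\vec w_2\|$ and $\vec q_2 = \frac{1}{\|\vec w_2\|}\vec w_2$. That is,

$$\vec w_2 = \begin{bmatrix} 3.4 \\ 2.73333 \\ .46666 \\ 2.86666 \end{bmatrix},$$

which has norm $\|\vec w_2\| = 5.24086$. Normalizing $\vec w_2$ results in $\vec q_2$:

$$
r_{22} = \|\vec w_2\| = 5.24086,
\qquad
\vec q_2 = \frac{1}{\|\vec w_2\|}\vec w_2 = \begin{bmatrix} .64874 \\ .52154 \\ .089043 \\ .54698 \end{bmatrix}.
$$

At this point the first two columns can be expressed as

$$[\vec v_1, \vec v_2] = [\vec q_1, \vec q_2]\begin{bmatrix} r_{11} & r_{12} \\ 0 & r_{22}\end{bmatrix}.$$

Step 3: Bring in $\vec v_3$ by projecting it onto $\vec q_1$ and $\vec q_2$, with the remainder in the vector $\vec w_3$. That is,

$$
\vec v_3 = \operatorname{proj}_{\vec q_1}\vec v_3 + \operatorname{proj}_{\vec q_2}\vec v_3 + \vec w_3
= (\vec q_1^{T}\vec v_3)\,\vec q_1 + (\vec q_2^{T}\vec v_3)\,\vec q_2 + \vec w_3
= \vec q_1 r_{13} + \vec q_2 r_{23} + \vec w_3,
$$

where $r_{13} = \vec q_1\cdot\vec v_3 = 2.19089$ and $r_{23} = \vec q_2\cdot\vec v_3 = 5.99137$. Solving gives

$$
\vec w_3 = \vec v_3 - \vec q_1 r_{13} - \vec q_2 r_{23} = \begin{bmatrix} 5.3130 \\ -3.92475 \\ 6.86650 \\ -3.67718 \end{bmatrix},
\qquad
r_{33} = \|\vec w_3\| = 10.21290,
\qquad
\vec q_3 = \frac{1}{\|\vec w_3\|}\vec w_3 = \begin{bmatrix} .52023 \\ -.38429 \\ .67233 \\ -.36005 \end{bmatrix}.
$$

In conclusion,

$$
[\vec v_1, \vec v_2, \vec v_3] = [\vec q_1, \vec q_2, \vec q_3]\begin{bmatrix} r_{11} & r_{12} & r_{13} \\ 0 & r_{22} & r_{23} \\ 0 & 0 & r_{33}\end{bmatrix}.
$$

Now let $A = [\vec v_1, \vec v_2, \vec v_3]$ and consider solving $A\vec x = \vec b$. Most often an exact solution does not exist, because the system has four equations and only three unknowns. However, the least-squares solution can be found via the QR decomposition, as discussed in the last example. Once again, $A\vec x = \vec b$ is rewritten and manipulated as follows:

$$A\vec x = \vec b \;\Longrightarrow\; QR\vec x = \vec b \;\Longrightarrow\; R\vec x = Q^{T}\vec b.$$

Because $R$ is upper triangular, the final equation should be solved using backward substitution.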
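The whole procedure, together with the least-squares solve, can be sketched in plain Python. This is a minimal sketch of classical Gram-Schmidt as carried out in both examples (each $r_{ij} = \vec q_i^{T}\vec v_j$ uses the original column $\vec v_j$), assuming the columns are linearly independent. The function names and the right-hand side `b` are hypothetical; the text does not give a specific $\vec b$.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean length ||u||."""
    return math.sqrt(dot(u, u))

def gram_schmidt_qr(columns):
    """Classical Gram-Schmidt QR of the matrix whose columns are `columns`.

    Returns (qs, R): qs holds the orthonormal columns q_j, and R (a list of
    rows) is upper triangular with v_j = sum_i q_i * R[i][j].
    Assumes linearly independent columns, so every R[j][j] > 0.
    """
    n = len(columns)
    qs = []
    R = [[0.0] * n for _ in range(n)]
    for j, v in enumerate(columns):
        w = list(v)
        for i, q in enumerate(qs):
            R[i][j] = dot(q, v)                          # r_ij = q_i^T v_j
            w = [a - R[i][j] * b for a, b in zip(w, q)]  # remove the q_i component
        R[j][j] = norm(w)                                # r_jj = ||w_j||
        qs.append([x / R[j][j] for x in w])              # q_j = w_j / ||w_j||
    return qs, R

def back_substitute(R, y):
    """Solve the upper-triangular system R x = y, starting from the last row."""
    n = len(y)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = y[i] - sum(R[i][k] * x[k] for k in range(i + 1, n))
        x[i] = s / R[i][i]
    return x

def least_squares_qr(columns, b):
    """Least-squares solution of A x = b by solving R x = Q^T b."""
    qs, R = gram_schmidt_qr(columns)
    qt_b = [dot(q, b) for q in qs]  # Q^T b: one entry per column q_j
    return back_substitute(R, qt_b)

# Example 2 from the text.
v1, v2, v3 = [-3, 2, 4, 1], [6, 1, -3, 2], [8, 0, 9, 0]
qs, R = gram_schmidt_qr([v1, v2, v3])
for row in R:
    print([round(r, 4) for r in row])
# [5.4772, -4.7469, 2.1909]
# [0.0, 5.2409, 5.9914]
# [0.0, 0.0, 10.2129]

# Hypothetical right-hand side, chosen only for illustration.
b = [1.0, 2.0, 3.0, 4.0]
x = least_squares_qr([v1, v2, v3], b)
print([round(xi, 4) for xi in x])
```

Classical Gram-Schmidt mirrors the hand computation, but in floating point it can lose orthogonality when the columns are nearly dependent; modified Gram-Schmidt or Householder reflections are the usual choices in numerical libraries.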