Resolving Information Asymmetries in Markets: The Role of Certified Management Programs

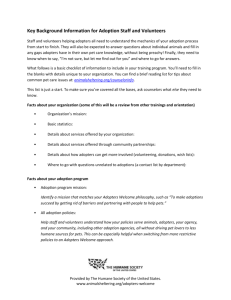
