Auton Agent Multi-Agent Syst (2011) 22:465–486

DOI 10.1007/s10458-010-9136-3

Towards efficient multiagent task allocation in the RoboCup Rescue: a biologically-inspired approach

Fernando dos Santos · Ana L. C. Bazzan

Published online: 22 May 2010

© The Author(s) 2010

Abstract This paper addresses team formation in the RoboCup Rescue centered on task

allocation. We follow a previous approach that is based on so-called extreme teams, which

have four key characteristics: agents act in domains that are dynamic; agents may perform

multiple tasks; agents have overlapping functionality regarding the execution of each task but

differing levels of capability; and some tasks may depict constraints such as simultaneous

execution. So far these four characteristics have not been fully tested in domains such as the

RoboCup Rescue. We use a swarm intelligence based approach, address all characteristics,

and compare it to two other GAP-based algorithms. Experiments measuring computational effort, communication load, and the score obtained in the RoboCup Rescue show that our approach outperforms the others.

Keywords Optimisation in multiagent systems · Task allocation · Robocup Rescue ·

Swarm intelligence

1 Introduction

In multiagent systems, task allocation is an important part of the coordination problem. In

complex, dynamic and large-scale environments arising in real world applications, agents

must reason with incomplete and uncertain information in a timely fashion. Scerri et al.

[17] have introduced the term extreme teams, which refers to the following four characteristics in the context of task allocation: (i) these teams act in domains whose dynamics may cause new tasks to appear and existing ones to disappear; (ii) agents in these teams may perform multiple tasks subject to their resource limitation; (iii) many agents have overlapping functionality to perform each task, but with differing levels of capability; and (iv) some tasks in these domains are subject to inter-task constraints (e.g. they must be executed simultaneously).

F. dos Santos · A. L. C. Bazzan (B)
PPGC, Universidade Federal do Rio Grande do Sul, Caixa Postal 15064, Porto Alegre, RS 91.501-970, Brazil
e-mail: bazzan@inf.ufrgs.br

F. dos Santos
e-mail: fsantos@inf.ufrgs.br

In the RoboCup Rescue for example, different fire fighters and paramedics comprise an

extreme team; while fire fighters and paramedics have overlapping functionality to rescue

civilians, one specific paramedical unit may have a higher capability due to their specific

training and current context.

The problem of task allocation among teams can be modelled as that of finding the assignment that maximizes the overall utility. The generalized assignment problem (GAP) and its

extension, E-GAP, can be used to formalize some aspects of the dynamic allocation of tasks

to agents in extreme teams.

In [17], Scerri et al. performed experiments using their algorithm, Low-communication Approximate DCOP (LA-DCOP), in two kinds of scenarios. In an abstract simulator, all four characteristics mentioned above were used. In another scenario, the RoboCup Rescue simulator, they dealt with only one kind of agent team, the fire brigade, and hence characteristic (iii) was only partially addressed.

In [5], Ferreira Jr. et al. use the RoboCup Rescue scenario including the various actors,

thus addressing characteristics (i), (ii), and (iii). However, that work does not deal with inter-task

constraints in an efficient way, especially considering the full RoboCup Rescue scenario.

In the present paper we consider all four characteristics of extreme teams and test our

approach both on an abstract simulator for task allocation, as well as on the RoboCup Rescue. In the latter, characteristic (iv) is needed in situations such as the following: if a task

is large, a single agent will not be able to perform it successfully. For instance, a single fire

brigade will not extinguish a big fire; a single ambulance may require too much time to rescue

a civilian, ending up with no reward if this civilian dies before being rescued.

We expect an improvement in the overall performance with some tasks being jointly performed by the agents. For this purpose, we decompose the tasks into inter-related subtasks

which must each be simultaneously executed by a group of agents. Thus, we fully address

the concept of extreme teams proposed by Scerri et al.

To this aim we propose and use eXtreme-Ants, an algorithm that is inspired by the ecological success of social insects, which have the characteristics of extreme teams. We compare

eXtreme-Ants with two other algorithms that are GAP-based and show that, by decomposing

tasks, eXtreme-Ants outperforms other approaches in the RoboCup Rescue scenario.

In the remaining sections of this paper we describe the model of division of labor in social

insects and the process of recruitment used by ants to cooperatively transport large prey

(Sect. 2). In Sect. 3 we briefly describe E-GAP as a task allocation model, while related work

regarding distributed task allocation is presented in Sect. 4, followed by the description of

eXtreme-Ants in Sect. 5. Section 6 models the RoboCup Rescue as an E-GAP, identifying

inter-constrained tasks and defining agents’ capabilities. An empirical study is presented in Sect. 7, together with a discussion about the results achieved. Finally, Sect. 8 presents the conclusions and future directions.

2 Social insects: division of labor and recruitment for cooperative transport

An effective division of labor is key for the ecological success of insect societies. A social

insect colony with hundreds of thousands of members operates without explicit coordination. An individual cannot assess the needs of the colony; it has only fairly simple local information. Complex colony behavior emerges from the aggregation of individual workers’ behavior.

The key point is the plasticity of the individuals, which allows them to engage in different tasks responding to changing conditions in the colony [2].

This behavior is the basis of the theoretical model described by Theraulaz et al. [21]. In this

model, interactions among members of the colony and individual perception of local needs

result in a dynamic distribution of tasks. The model is based on individuals’ internal response

threshold related to task stimuli. Assuming the existence of a set $J$ of tasks to be performed, each task $j \in J$ has an associated stimulus $s_j$. The stimulus is related to the demand for the task execution. Given a set $I$ of individuals that can perform the tasks in $J$, each individual $i \in I$ has an internal response threshold $\theta_{ij}$, which is related to the likelihood of reacting to the stimulus associated with task $j$. This threshold can be seen either as a genetic characteristic that is responsible for the existence of differences in morphologies of insects belonging to the same society, or as a temporal polyethism (in which individuals of different ages tend to perform different tasks), or simply as individual variability.

In the model of Theraulaz et al. [21] the individual internal threshold $\theta_{ij}$ and the task stimulus $s_j$ are used to compute the probability (tendency) $T_{ij}(s_j)$ of the individual $i$ to perform task $j$, as shown in Eq. 1.

$$T_{ij}(s_j) = \frac{s_j^{2}}{s_j^{2} + \theta_{ij}^{2}} \tag{1}$$

The use of the tendency replicates the plasticity of individuals because any individual

is able to perform any task if the corresponding task stimulus is high enough to overcome

the individual’s internal threshold. This flexibility enables the survival of the colony in an

eventual absence of specialized individuals, since other individuals start to perform the tasks

as soon as the stimulus exceeds their thresholds.
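The response-threshold rule of Eq. 1 can be sketched in a few lines of Python. This is only an illustration: the function name `tendency`, the sample values, and the handling of the undefined 0/0 case are assumptions, not part of the model in [21].

```python
def tendency(stimulus: float, threshold: float) -> float:
    """Tendency T_ij(s_j) = s_j^2 / (s_j^2 + theta_ij^2), as in Eq. 1."""
    s2 = stimulus ** 2
    denom = s2 + threshold ** 2
    # Assumption (not in the paper): with zero stimulus and zero threshold
    # the ratio is undefined, so it is treated here as "no response".
    return s2 / denom if denom > 0 else 0.0


# A specialist (low threshold) and a non-specialist (high threshold)
# facing a weak and then a strong stimulus; the values are illustrative.
for s in (0.1, 0.9):
    print(s, tendency(s, threshold=0.1), tendency(s, threshold=0.8))
```

When the stimulus equals the threshold the tendency is 0.5; as the stimulus grows, even the high-threshold individual becomes likely to respond, which is the plasticity described above.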

Another useful characteristic found among social insects is that, in some species of ants,

the transportation of large prey in a cooperative way involves two or more ants that cannot

do the transport alone [15]. The main purpose of the cooperative transport is to optimize

the trade-off between the gained energy (food) and the energy spent to take the prey to the

nest. Further, this process speeds up the transport.

The group involved in the cooperative transport is formed by means of a process called

recruitment. When a single scout ant discovers a prey, it firstly attempts to seize and transport

it individually. After unsuccessful attempts, the recruitment process starts. In order to recruit

nestmates, ants employ a mechanism called long-range recruitment (LRR). Some species

also employ a second mechanism called short-range recruitment (SRR). In both mechanisms

ants use communication through the environment (stigmergy) [9].

In the SRR the scout that discovers the prey releases a secretion. Shortly thereafter, nestmates in the vicinity are attracted to the prey location by the secretion odor. When the prey

cannot be moved by the scout and the ants recruited via SRR, one of the ants begins the LRR.

Hölldobler et al. report that SRR is sufficient to summon enough ants to transport the prey

in the majority of the cases [9].

In the LRR the scout ant that discovers the prey returns to the nest to recruit nestmates.

In the course towards the nest, the scout lays a pheromone trail. Nestmates encountered in

the course are stimulated by the scout via direct contact (e.g. antennation, trophallaxis), thus

establishing a chain of communication among nestmates. Afterwards, the stimulated nestmate also

begins to lay a pheromone trail even though it had not yet experienced the prey stimulus

itself. When the scout arrives at the nest, nestmates are attracted by the pheromone and run

to the prey site.

After the recruited ants arrive at the prey site, they begin the cooperative transport. The

number of ants engaged in the transport is regulated on site and depends on the prey characteristics, such as weight, size, etc. [8]. When the number of ants present is not enough to

move the prey, more ants are recruited by one of the aforementioned processes, until the prey

is successfully transported. Although in a more economical approach the scout ant should recruit the exact number of nestmates, it was suggested that the scout cannot make a fine assessment

of the number of ants required to retrieve the prey [15]. Therefore, the most effective strategy

may be to recruit a given number of ants followed by a regulation of the group size during

the transport.

In summary, the recruitment for cooperative transport consists of three steps:

1. The scout ant that has discovered the prey starts the recruitment, inviting nestmates;

2. The ants that accept to join move to the prey site;

3. The size of the transportation group is regulated according to the prey characteristics.

3 Task allocation

In many real-world scenarios, the scale of the problem involves both a large number of agents

and a large number of tasks that must be allocated to these agents. Besides, these tasks and

their characteristics change over time and little information about the whole scenario, if any,

is available to all agents. Each agent has different capabilities and limited resources to perform each task. The problem is how to find, in a distributed fashion, an appropriate task

allocation that represents the best match among agents and tasks.

The generalized assignment problem (GAP) is one possible model used to formalize a

task allocation problem. It deals with the assignment of tasks to agents, constrained to agents’

available resources, and aims at maximizing the total reward.

A GAP is composed of a set $J$ of tasks to be performed by a set $I$ of agents. Each agent $i \in I$ has a capability to perform each task $j \in J$ denoted by $Cap(i, j) \to [0, 1]$. Each agent also has a limited amount of resource, denoted by $i.res$, and uses part of it ($Res(i, j)$) when performing task $j$. To represent the allocation, a matrix $M$ is used where $m_{ij}$ is 1 if task $j$ is allocated to agent $i$ and 0 otherwise.

The goal in the GAP is to find an M that maximizes the allocation reward, which is given

by agents’ capabilities (Eq. 2). This allocation must respect all agents’ resources limitations

(Eq. 3) and each task must be allocated to at most one agent (Eq. 4).

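$$M = \arg\max_{M} \sum_{i \in I} \sum_{j \in J} Cap(i, j) \times m_{ij} \tag{2}$$

$$\forall i \in I, \quad \sum_{j \in J} Res(i, j) \times m_{ij} \le i.res \tag{3}$$

$$\forall j \in J, \quad \sum_{i \in I} m_{ij} \le 1 \tag{4}$$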
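To make Eqs. 2 to 4 concrete, the following Python sketch evaluates the reward of an allocation matrix, checks both constraints, and finds the best allocation of a tiny instance by exhaustive search. The function names and the sample data are hypothetical, and brute force is used only for illustration; it is exponential in the number of tasks and is not one of the algorithms discussed in this paper.

```python
from itertools import product


def gap_reward(cap, m):
    """Objective of Eq. 2: sum of Cap(i, j) * m_ij over agents and tasks."""
    return sum(cap[i][j] * m[i][j] for i in range(len(m)) for j in range(len(m[i])))


def is_feasible(res, budget, m):
    """Check the resource constraint (Eq. 3) and the one-agent-per-task constraint (Eq. 4)."""
    agents, tasks = len(m), len(m[0])
    within_budget = all(
        sum(res[i][j] * m[i][j] for j in range(tasks)) <= budget[i] for i in range(agents)
    )
    at_most_one_agent = all(sum(m[i][j] for i in range(agents)) <= 1 for j in range(tasks))
    return within_budget and at_most_one_agent


def best_allocation(cap, res, budget):
    """Exhaustive argmax over all allocations (illustration only)."""
    agents, tasks = len(cap), len(cap[0])
    best_m, best_r = None, -1.0
    # Each task is either left unallocated (None) or given to one agent.
    for choice in product([None, *range(agents)], repeat=tasks):
        m = [[1 if choice[j] == i else 0 for j in range(tasks)] for i in range(agents)]
        if is_feasible(res, budget, m):
            r = gap_reward(cap, m)
            if r > best_r:
                best_m, best_r = m, r
    return best_m, best_r


# Hypothetical instance: two agents, two tasks, one resource unit per agent.
cap = [[0.5, 0.25], [0.25, 0.75]]
res = [[1, 1], [1, 1]]
print(best_allocation(cap, res, budget=[1, 1]))
```

Because each agent can afford only one task in this instance, the search assigns each task to the agent that is most capable of performing it.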

The GAP model was extended by Scerri et al. [17] to incorporate two features related

to extreme teams: scenario dynamics and inter-task constraints. This extended model was

called extended generalized assignment problem (E-GAP).

There are some types of inter-task constraints; here we focus on constraints of type AND,

meaning that all tasks that are related by an AND must be performed. In other words the

agents only receive a reward if all constrained tasks are simultaneously executed. We remark

that this kind of formalization can be extended to other types of constraint as well.

AND-constrained tasks can be viewed as a decomposition of a large task into interdependent subtasks. The execution of only some subtasks does not lead to a successful execution

of the overall task, wasting the agents’ resources and not producing the desired effect in

the system. For example, in the case of physical robots, they can be damaged while trying

to perform an effort greater than their capabilities (e.g., trying to remove a large piece of

collapsed building from a blocked road).

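To formalize AND-constrained tasks, the E-GAP model defines a set $\bowtie = \{\alpha_1, \ldots, \alpha_p\}$ containing $p$ sets $\alpha$ of AND-constrained tasks in the form $\alpha_k = \{j_1 \wedge \ldots \wedge j_q\}$. Each AND-constrained task $j$ belongs to at most one set $\alpha_k$. The number of tasks that are being performed in a set $\alpha_k$ is $x_k = \sum_{i \in I} \sum_{j \in \alpha_k} m_{ij}$.

Let $v_{ij} = Cap(i, j) \times m_{ij}$. Given the constraints of $\bowtie$, the reward $Val(i, j, \bowtie)$ of an agent $i$ performing the task $j$ is given by Eq. 5.

$$Val(i, j, \bowtie) = \begin{cases} v_{ij} & \text{if } \forall \alpha_k \in \bowtie,\ j \notin \alpha_k \\ v_{ij} & \text{if } \exists \alpha_k \in \bowtie \text{ with } j \in \alpha_k \wedge x_k = |\alpha_k| \\ 0 & \text{otherwise} \end{cases} \tag{5}$$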
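The case analysis of Eq. 5 can be illustrated with a short Python sketch. The representation is an assumption made for this example: tasks are indexed by integers, each AND set is a Python `set` of task indices, and the function name `val` is hypothetical.

```python
def val(i, j, cap, m, and_sets):
    """Reward of agent i for task j under AND constraints, following Eq. 5."""
    v = cap[i][j] * m[i][j]
    for alpha in and_sets:
        if j in alpha:
            # x_k: number of tasks of this AND set that are currently allocated.
            x_k = sum(m[a][t] for a in range(len(m)) for t in alpha)
            return v if x_k == len(alpha) else 0.0
    return v  # task j belongs to no AND set


# Hypothetical data: tasks 0 and 1 form one AND set.
cap = [[0.5, 0.5], [0.5, 0.5]]
and_sets = [{0, 1}]
print(val(0, 0, cap, [[1, 0], [0, 0]], and_sets))  # only task 0 allocated
print(val(0, 0, cap, [[1, 0], [0, 1]], and_sets))  # both tasks allocated
```

In the first call only part of the AND set is allocated, so the agent receives no reward; in the second call the whole set is allocated and the agent receives its capability value.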

To represent the dynamics in the scenario all E-GAP variables are indexed by a time step $t$. The goal is to find a sequence of allocations $\vec{M}$, one for each time step $t$, as shown in Eq. 6. In this equation, a delay cost function $DC^t(j^t)$ can be used to define the cost of not performing a task $j$ at time step $t$.

$$f(\vec{M}) = \sum_{t} \sum_{i^t \in I^t} \sum_{j^t \in J^t} \left( Val^t(i^t, j^t, \bowtie^t) \times m^t_{ij} \right) - \sum_{t} \sum_{j^t \in J^t} \left( 1 - \sum_{i^t \in I^t} m^t_{ij} \right) \times DC^t(j^t) \tag{6}$$

The E-GAP also extends the GAP with regard to agents’ resource limitations at each

time step t, as well as the issue that each task must be allocated to at most one agent. This

way, in the E-GAP, Eqs. 3 and 4 are rewritten as Eqs. 7 and 8 respectively.

$$\forall t, \forall i^t \in I^t, \quad \sum_{j^t \in J^t} Res^t(i^t, j^t) \times m^t_{ij} \le i^t.res \tag{7}$$

$$\forall t, \forall j^t \in J^t, \quad \sum_{i^t \in I^t} m^t_{ij} \le 1 \tag{8}$$
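As a worked illustration of Eq. 6 (the numbers are hypothetical and are not taken from the experiments), consider two time steps, two agents, one AND set $\alpha_1 = \{j_1 \wedge j_2\}$, a capability of 0.5 for every agent-task pair, and a constant delay cost $DC^t(j) = 0.25$. At $t = 1$ only $j_1$ is allocated, so $x_1 = 1 \neq |\alpha_1|$ and its reward is zero, while the unallocated $j_2$ incurs the delay cost. At $t = 2$ both tasks are allocated, each to a different agent, so both rewards count and no delay cost is paid:

$$f(\vec{M}) = \underbrace{(0 - 0.25)}_{t = 1} + \underbrace{(0.5 + 0.5 - 0)}_{t = 2} = 0.75$$

Had both tasks already been allocated at $t = 1$, the first step would have contributed 1 instead of $-0.25$, which shows how the objective rewards completing an AND set as early as possible.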

Several task allocation situations in large scale and dynamic scenarios can be modeled as

an E-GAP, including the RoboCup Rescue domain.

4 Related work

This section discusses some pieces of work related to distributed task allocation, concentrating on existing approaches for flexible task allocation. We remark that a complete review of

the subject is outside the scope of this paper; thus we focus on classical approaches as well

as on GAP-based ones. For each presented approach, we point out their limitations for the

case of extreme teams.

4.1 Contract net protocol and auction based methods

One of the earliest propositions in multiagent systems for task allocation was the contract

net protocol (CNP) [20]. In the CNP, the communication channel is intensively used: when

an agent perceives a task, it follows a protocol of messages to establish a contract with other

agents that can potentially perform the task. This fact, allied with the time taken to reach a

contract, severely limits the application of CNP to allocate tasks among agents in extreme

teams.

Auctions are market-based mechanisms. Hunsberger and Grosz [10] present an approach

for task allocation based on combinatorial auctions. Items on sale are tasks and the bidders

are the agents. The agents submit bids to an auctioneer, depending on their capabilities and resources. After receiving all bids, the auctioneer allocates the tasks among the bidders. Bids are submitted via communication. Thus, as pointed out by [22], auctions require high amounts of communication for task allocation. In recent works, Ham and Agha [6,7] evaluate the performance of different auction mechanisms. However, the tackled scenario is composed only of homogeneous agents, and is thus different from extreme teams.

4.2 Coalition based methods

Shehory and Kraus [18] propose a coalition approach for task allocation. In this approach,

the process of coalition formation is composed of three steps. In the first step all possible

coalitions are generated. In the second step, coalition values are calculated, and, in the last

step, the coalitions with the best values are chosen to perform the tasks.

The generation of all possible coalitions is exponential in the number of agents. Given a

coalition structure, a further problem is to assign values to each coalition, taking into account

the capabilities of all the agents. To accomplish this, one possibility is to use communication. These facts (size of coalition structures, determination of values, and finding the best

coalition) suggest scalability issues regarding extreme teams in large scale scenarios.

Recently, Rahwan and Jennings [14] have proposed a new approach that does not require

additional communication to decide which agent will compute the value of each coalition.

However, to compute the value of a coalition, each agent still needs to know the capabilities

of other agents.

4.3 DCOP based methods

A distributed constraint optimization problem (DCOP) consists of a set of variables that can

assume values in a discrete domain. Each variable is assigned to one agent which has the

control over its value. The goal of the agents is to choose values for the variables in order

to optimize a global objective function. This function can be described as an aggregation

over a set of cost functions related to pairs of variables. A DCOP can be represented by a

constraint graph, where vertices are variables and edges are cost functions between variables.

An E-GAP can be converted to a DCOP taking agents as variables and tasks as the domain

of values. However, this modeling leads to dense constraint graphs, since one cost function

is required between each pair of variables to ensure the mutual exclusion constraint of the

E-GAP model.

In order to deal with these issues, Scerri et al. present an approximate algorithm called

Low-communication Approximate DCOP (LA-DCOP) [17] that uses a token-based protocol; agents perceive a task in the environment and create a token to represent it, or they

receive a token from another agent. An agent decides whether or not to perform a task (thus

retaining the token) based both on its capability and on a threshold. Varying this threshold

makes the agent more or less selective and has implications on the computational complexity

as discussed next. If an agent decides not to perform a task it sends the token to another

randomly chosen agent. To deal with inter-task constraints, LA-DCOP uses a differentiated

kind of token, called potential token.

If an agent in LA-DCOP is able to perform more than one task, then it must select those that

maximize its capability given its resources. This maximization problem can be reduced to a

binary knapsack problem (BKP), which suggests that the complexity of LA-DCOP depends

on the complexity of the function implemented to deal with the BKP.
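The selection step described above can be illustrated with the textbook dynamic program for the binary knapsack problem. This is a sketch under assumptions and not the function used by LA-DCOP: resource costs are taken to be non-negative integers, and `select_tasks` and its parameters are hypothetical names.

```python
def select_tasks(capabilities, costs, budget):
    """0/1 knapsack by dynamic programming over integer resource units.

    Returns (total capability, indices of the chosen tasks).
    """
    # best[r] = best (value, chosen tasks) using at most r resource units.
    best = [(0.0, [])] * (budget + 1)
    for t in range(len(capabilities)):
        # Iterate budgets downwards so that each task is used at most once.
        for r in range(budget, costs[t] - 1, -1):
            value = best[r - costs[t]][0] + capabilities[t]
            if value > best[r][0]:
                best[r] = (value, best[r - costs[t]][1] + [t])
    return best[budget]


# Hypothetical agent: three perceived tasks and three resource units.
print(select_tasks(capabilities=[0.5, 0.25, 0.75], costs=[2, 1, 2], budget=3))
```

This dynamic program runs in time proportional to the number of tasks times the budget, which is pseudo-polynomial; the cost therefore grows with both the number of perceived tasks and the granularity of the resource.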

As mentioned, Scerri et al. have proposed the concept of extreme teams, and tested it

using an abstract simulator that allows experimentation with very large numbers of agents

[17]. In the same paper, the LA-DCOP algorithm was used in a RoboCup Rescue scenario in

which characteristics (i) and (ii) described in Sect. 1 were present. However, in this particular

scenario, as they only deal with one kind of team of agents, the fire brigade, characteristics

(iii) and (iv) were only partially or potentially addressed.

4.4 Swarm intelligence based methods

The use of swarm intelligence techniques for task allocation has been reported in the literature. Cicirello and Smith [3] used the metaphor of division of labor to allocate tasks to machines in a plant. However, the approach does not take into account dynamic or inter-constrained tasks. Kube and Bonabeau [13] have used the metaphor of cooperative transport in homogeneous robots that must forage and collect items. Since the environment is fully observable, the authors just explore the adaptation of the transportation group to the size of the item (step three of the recruitment process discussed in Sect. 2). Both Agassounon and Archerio [1] and Krieger et al. [12] have used the metaphors of division of labor and recruitment for cooperative transport in teams of homogeneous forager robots. However, neither of these approaches deals with inter-related tasks.

Swarm-GAP [4] resembles LA-DCOP in the sense that it is also GAP-based, is approximate, uses tokens for communication, and deals with extreme teams. An agent in Swarm-GAP

decides whether or not to perform a task based on the model of division of labor used by

social insect colonies, which has low communication and computation effort. The former

stems from the fact that Swarm-GAP (as LA-DCOP) uses tokens; the latter arises from the

use of a simple probabilistic decision-making mechanism based on Eq. 1. This avoids the

complexity of the maximization function used in LA-DCOP. A key parameter in the approach

is the stimulus s discussed in Sect. 2 as it is responsible for the selectiveness of the agent

regarding perceived tasks.

In order to deal with inter-task constraints, agents in Swarm-GAP just increase the tendency to perform related tasks by a factor called execution coefficient. The execution coefficient is computed using the ratio between the number of constrained tasks which are allocated

and the total number of constrained tasks (details in [4]).

In [5] Ferreira Jr. et al. tested Swarm-GAP considering the various actors in the RoboCup

Rescue, thus addressing characteristics (i), (ii), and (iii). However, the issue of inter-task

constraints was not addressed in an efficient way.

5 eXtreme-Ants

5.1 Probabilistic nature of the algorithm

eXtreme-Ants [16] is a biologically-inspired, approximate algorithm that solves E-GAPs. It

is based on LA-DCOP (discussed in Sect. 4.3) but differs from it mainly in the way agents

make decisions about which tasks to perform. An agent running LA-DCOP receives n tokens

containing tasks and chooses to perform those which maximize the sum of its capabilities,

while respecting its resource constraints. This is a maximization problem that can be reduced

to a binary knapsack problem (BKP), which is known to be NP-complete. The computational

complexity of LA-DCOP depends on the complexity of the function it uses to deal with the

BKP. Since in large scale domains the number of tasks perceived by agents can be high, the

time consumed to decide which task to accept may restrict the LA-DCOP applicability.

On the other hand, eXtreme-Ants uses a probabilistic decision process, hence the computation necessary for agents to make their decision is significantly lower than in LA-DCOP. In

eXtreme-Ants, agents use the model of division of labor among social insects (presented in

Sect. 2) to decide whether or not to perform a task. Stimuli produced by tasks that need to be

performed are compared to the individual response threshold associated with each task. An

individual that perceives a task stimulus higher than its own threshold has a higher probability

to perform this task.

In eXtreme-Ants, the response threshold $\theta_{ij}$ of an agent $i$ regarding a task $j$ is based on the concept of polymorphism, which is related to the capability $Cap(i, j)$ as shown in Eq. 9. If an agent is not capable of performing a particular task, then its internal threshold is set to infinity, avoiding the allocation of the task to this agent.

$$\theta_{ij} = \begin{cases} 1 - Cap(i, j) & \text{if } Cap(i, j) > 0 \\ \infty & \text{otherwise} \end{cases} \tag{9}$$

Each task $j \in J$ has an associated stimulus $s_j$. This stimulus controls the allocation of

tasks to agents. Low stimuli mean that the tasks will only be performed by agents with low

thresholds (thus, more capable). High stimuli increase the chance of the tasks to be performed,

even by agents with high thresholds (less capable).
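The interplay between Eq. 9 and Eq. 1 can be sketched as follows. The function names and the sample capabilities and stimuli are hypothetical; the treatment of the undefined 0/0 case is an assumption of this sketch.

```python
import math


def threshold(capability: float) -> float:
    """Response threshold of Eq. 9: 1 - Cap(i, j), or infinity if incapable."""
    return 1.0 - capability if capability > 0 else math.inf


def tendency(stimulus: float, theta: float) -> float:
    """Tendency of Eq. 1; an infinite threshold yields a tendency of zero."""
    if math.isinf(theta):
        return 0.0
    denom = stimulus ** 2 + theta ** 2
    # Assumption: the undefined 0/0 case is treated as "no response".
    return stimulus ** 2 / denom if denom > 0 else 0.0


# Hypothetical capabilities of three agents for the same task.
for cap in (0.9, 0.2, 0.0):
    theta = threshold(cap)
    print(cap, tendency(0.2, theta), tendency(0.8, theta))
```

With the low stimulus only the highly capable agent has a substantial tendency; with the high stimulus the less capable agent also becomes a likely candidate, while the incapable agent never accepts the task.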

Algorithm 1 presents eXtreme-Ants. We remark that this is the algorithm that runs in each

agent. When the agent perceives a set $J_i$ of non inter-constrained tasks (line 1) it creates a token to store the information about them. The use of tokens to represent the tasks ensures the mutual exclusion constraint of the E-GAP model, which imposes that each task must be

allocated to at most one agent. A token thus contains a list of tasks it represents. The agent

that holds a token has the exclusive right to decide whether or not to accept and perform the

tasks contained in the token. The decision takes into account the tendency and the available

resources (lines 21–28). The tendencies are normalized.

When an agent accepts a task, it decreases the available resources by the amount required.

If some tasks contained in the token remain unallocated, the agent sends the token to a

randomly selected teammate.

As in Swarm-GAP, the use of a model of division of labor yields fast and efficient decision-making: the computational complexity of eXtreme-Ants is O(n) where n is the number

of tasks perceived by the agent. This is different from LA-DCOP, as discussed at the end

of Sect. 4.3. Thus, the agents can act on dynamic environments such as the RoboCup Rescue, fulfilling characteristic (i) of extreme teams. Characteristic (ii), which relates to agents

being able to perform multiple tasks subject to their resource limitation, is realized by taking

into account both the agents’ capabilities and the available resources (line 24 in Algorithm 1).

Characteristic (iii), i.e. agents having overlapping functionality to perform tasks (though with

differing levels of capability) is carried out by the response threshold (Eq. 9).

Algorithm 1: eXtreme-Ants for agent i

 1  when perceived set of tasks J_i
 2      token := newToken();
 3      foreach j ∈ J_i do
 4          token.tasks := token.tasks ∪ j;
 5      evaluateToken(token);
 6  when perceived set α of constrained tasks
 7      if has enough resources to perform all tasks of α then
 8          compute and normalize T_ij of each j ∈ α;
 9          foreach j ∈ α do
10              if roulette() < T_ij then
11                  accept task j;
12          if all tasks of α were accepted then
13              perform the tasks and decrease resource i.res;
14          else
15              discard all accepted tasks in α;
16              performRecruitment(α);
17      else
18          performRecruitment(α);
19  when received token
20      evaluateToken(token);
21  procedure evaluateToken(token)
22      compute and normalize T_ij of each j ∈ token.tasks;
23      foreach j ∈ token.tasks do
24          if roulette() < T_ij and i.res ≥ Res(i, j) then
25              perform task j and decrease i.res;
26              token.tasks := token.tasks − j;
27      if some tasks in token.tasks were not performed then
28          send token to a randomly selected teammate;
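The token evaluation of lines 21 to 28 could look roughly like the Python sketch below. Several details are assumptions made for the example, since the pseudocode leaves them open: `roulette()` is taken to be a uniform draw in [0, 1), normalization divides each tendency by the sum of the tendencies in the token, and a single stimulus value is shared by all tasks. The class and attribute names are hypothetical.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class Agent:
    capability: dict          # task id -> Cap(i, j) in [0, 1]
    cost: dict                # task id -> Res(i, j)
    resources: float          # remaining i.res
    stimulus: float = 0.5     # assumption: one stimulus value shared by all tasks
    performed: list = field(default_factory=list)

    def tendency(self, task):
        """Eq. 1 with the threshold of Eq. 9 (incapable agent -> tendency 0)."""
        cap = self.capability.get(task, 0.0)
        if cap <= 0:
            return 0.0
        s2, theta = self.stimulus ** 2, 1.0 - cap
        denom = s2 + theta ** 2
        return s2 / denom if denom > 0 else 0.0

    def evaluate_token(self, tasks, roulette=random.random):
        """Sketch of lines 21-28: returns the tasks that remain in the token."""
        raw = {j: self.tendency(j) for j in tasks}
        total = sum(raw.values())
        # Assumption: "normalize" means dividing by the sum of the tendencies.
        norm = {j: (t / total if total > 0 else 0.0) for j, t in raw.items()}
        remaining = []
        for j in tasks:
            if roulette() < norm[j] and self.resources >= self.cost.get(j, math.inf):
                self.resources -= self.cost[j]
                self.performed.append(j)
            else:
                remaining.append(j)
        return remaining  # a non-empty result is forwarded to a random teammate


agent = Agent(capability={"a": 0.9, "b": 0.5}, cost={"a": 1, "b": 1}, resources=1)
print(agent.evaluate_token(["a", "b"]), agent.performed)
```

Each task costs one draw and one comparison, so the evaluation is linear in the number of tasks in the token.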

5.2 Recruitment

To deal with AND-constraints among tasks, i.e. characteristic (iv), agents in eXtreme-Ants

reproduce the three steps of the recruitment process for cooperative transport observed among

ants (presented at the end of Sect. 2). The recruitment process is used in eXtreme-Ants to form

a group of agents committed to the simultaneous execution of the inter-constrained tasks.

When an agent perceives a set of inter-constrained tasks α (line 6), it acts as a scout ant. Firstly

it attempts to perform all the constrained tasks. If it fails, then it begins a recruitment process.

This process is based on communication i.e. message exchange. These messages are part

of the protocol presented in Algorithm 2. There are five kinds of messages used in the

recruitment protocol of eXtreme-Ants:

request: to invite an agent to join the recruitment for a task j ∈ α and to commit to it;

committed: to inform that the agent joined the recruitment for a task j ∈ α and is committed to it;

engage: to inform that the agent was indeed selected to perform the task j ∈ α;

release: to inform that the agent was not selected to perform the task j ∈ α and must decommit from it;

timeout: to inform that a request for a task j ∈ α has reached its timeout, thus preventing deadlocks.

Algorithm 2: Recruitment for agent i

 1  procedure performRecruitment(AND set α)
 2      repeat
 3          j := pick a task from α;
 4          send (“request”, j) to a teammate;
 5      until the maximum number of requests sent for all j ∈ α is reached or the recruitment for α is finished or aborted;
 6  when received (“request”, j) from agent i_s
 7      /* decides whether or not to commit */
 8      if roulette() < T_ij and i.res ≥ Res(i, j) then
 9          join the recruitment and commit to j;
10          send (“committed”, j) to i_s;
11      else
12          if request timeout reached then
13              send (“timeout”, j) to i_s;
14          else
15              forward (“request”, j) to a teammate;
16  when received (“committed”, j) from i_c
17      α := AND group which contains j;
18      if recruitment for α is finished or aborted then
19          send (“release”, j) to i_c; return;
20      if at least one agent committed to each j ∈ α then
21          recruitment for α is finished;
22          /* forms the group of engaged agents */
23          foreach j ∈ α do
24              pick a committed agent i_p with probability proportional to Cap(i_p, j);
25              send (“engage”, j) to i_p;
26              send (“release”, j) to non selected agents;
27  when received (“engage”, j)
28      perform task j and decrease i.res;
29  when received (“release”, j)
30      decommit from j;
31  when received (“timeout”, j)
32      α := AND group that contains j;
33      if the number of received timeouts for each j ∈ α is equal to the number of requests sent then
34          recruitment for α is aborted;
35          foreach j ∈ α do
36              send (“release”, j) to committed agents;

For the first step of the recruitment, the scout agent sends a certain number of request messages for each task j ∈ α (lines 1–5). These requests are sent to randomly selected agents

and correspond to the role of the pheromone released in the air (SRR) or released on the

way to the nest (LRR). As it occurs with the scout ant, which recruits a fixed number of

nestmates independently of prey characteristics, eXtreme-Ants fixes a maximum number of

requests that must be sent for each AND-constrained task. This maximum number must be

experimentally determined to maximize the total reward.

In the second step, the agents must decide whether or not to join the recruitment. When

an agent receives a request originated by a scout agent i s for a task j (lines 6–15), it uses the

tendency (Eq. 1) to decide if it accepts the request and then joins the recruitment, avoiding

double commitment. If the request is accepted, the agent commits to the task, reserving the

amount of resources required; a committed message is sent to the scout. If the request is not

accepted, the agent forwards it to another agent, reproducing the chain of communication

present in the LRR.

In the third step, the size of the group of agents engaged in the simultaneous execution

of the AND-constrained tasks must be regulated. In eXtreme-Ants this regulation is done by

the scout agent. When it receives enough commitments for each constrained task j ∈ α (line

20), it forms the group of agents that will execute the tasks simultaneously. Following the

E-GAP definition, just one agent must be selected among those committed for each constrained task. The scout then performs a probabilistic selection, picking an agent i p with

probability proportional to its capability Cap(i p , j). The scout then informs i p that it was

the selected one and thus must engage in the execution of j (via an engage message, line

25). All other non-selected agents are released (via a release message, line 26). Agents that

commit to an already allocated task are also released to avoid deadlocks. At this moment

the recruitment is finished. As a result, a group of agents is formed, in which each agent is

allocated to a task j ∈ α, enabling the simultaneous execution of all AND-constrained tasks

in α.
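The group-formation step can be illustrated with a minimal Python sketch. It assumes the scout keeps, for each task, the list of agents that committed to it; the function name `form_group`, the dictionary layout, and the uniform fallback when all capabilities are zero are illustrative assumptions, not part of the algorithm as published.

```python
import random

def form_group(committed, cap, rng=random):
    """Pick one committed agent per task with probability proportional to Cap.

    committed: dict task -> non-empty list of committed agents
               (the scout only calls this once every task has a commitment).
    cap:       dict (agent, task) -> capability in [0, 1].
    Returns (engaged, released): the chosen agent per task and the agents
    that must receive a "release" message.
    """
    engaged, released = {}, {}
    for task, agents in committed.items():
        weights = [cap[(agent, task)] for agent in agents]
        if sum(weights) > 0:
            chosen = rng.choices(agents, weights=weights, k=1)[0]
        else:
            # Assumption: fall back to a uniform choice if all capabilities are 0.
            chosen = rng.choice(agents)
        engaged[task] = chosen
        released[task] = [agent for agent in agents if agent != chosen]
    return engaged, released
```

An agent with zero capability for a task is never engaged while a committed agent with positive capability exists, which matches the intent of the proportional selection.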

After the group of engaged agents is formed, the requests not yet accepted by other

agents become obsolete. To prevent agents from passing obsolete requests back and forth,

eXtreme-Ants uses a timeout mechanism. The timeout is given by the number of agents that

a recruitment request is allowed to visit. When the timeout is detected (line 12), the scout

is notified (timeout message, lines 31–36). It then checks if a timeout was received for all

recruitment requests for each AND-constrained task j ∈ α. If it is the case, it aborts the

recruitment process and releases the committed agents.
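The life cycle of a single recruitment request, including the timeout, can be sketched as follows. This is a simplified, sequential Python illustration: the real algorithm is asynchronous and message-based, and the acceptance decision also depends on resources and on avoiding double commitment. The function `route_request` and the callable `accept_prob` (standing in for the tendency) are hypothetical names.

```python
import random

def route_request(task, agents, accept_prob, timeout, rng):
    """Follow one request until an agent commits or the visit limit is hit.

    agents:      list of at least two agent identifiers.
    accept_prob: callable (agent, task) -> probability of accepting.
    timeout:     maximum number of agents the request may visit.
    Returns ("committed", agent, visits) or ("timeout", None, visits).
    """
    current = None
    for visits in range(1, timeout + 1):
        # Forward the request to a randomly chosen agent other than the current one.
        current = rng.choice([a for a in agents if a != current])
        if rng.random() < accept_prob(current, task):
            return ("committed", current, visits)
    # Visit limit reached: the scout would be notified with a timeout message.
    return ("timeout", None, timeout)
```

With a timeout of 20, a request that nobody accepts stops after 20 visits instead of circulating indefinitely.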

It is important to note that, because the algorithm is asynchronous, the scout agent can perform

other actions during the recruitment. These actions comprise the perception and allocation

of other tasks, and even a recruitment for other AND-constrained task groups. Although

eXtreme-Ants reproduces the inter-agent communication via messages, it can be easily modified to use some kind of indirect communication (e.g., pheromones) when the environment

allows it.

6 Modelling RoboCup Rescue as an E-GAP

The goal of the RoboCup Rescue Simulation League (RRSL) [11,19] is to provide a testbed for simulated rescue teams acting in situations of urban disasters. Some issues such as

heterogeneity, limited information, limited communication channel, planning, and real-time

characterize this as a complex multiagent domain [11]. RoboCup Rescue aims at being an

environment where multiagent techniques that are related to these issues can be developed

and benchmarked. Currently the simulator tries to reproduce conditions that arise after the

occurrence of an earthquake in an urban area, such as the collapsing of buildings, road blockages, fire spreading, buried and/or injured civilians. The simulator incorporates some kinds

of agents which act trying to mitigate the situation.

In the RoboCup Rescue simulator,1 the main agents are fire brigades, police forces, and ambulance teams. Agents have limited perception of their surroundings; they can communicate, but are limited in the number and size of messages they can exchange. Regarding information and perception, agents have knowledge about the map. This allows agents to compute the paths from their current locations to given tasks. However, this does not mean that these paths are free, since an agent has only limited information about the actual status of a path, e.g. whether or not it is blocked by debris.

1 Available at www.robocuprescue.org.

To update the current status of the objects in the map, each agent has a function called

“sense”. More precisely, the RoboCup Rescue simulator provides to the agent information

about the current time step and about the part of the map where it is located. This information

contains attributes related to buildings, civilians, and blockages for instance. Regarding the

civilians these attributes are the location, health degree (called HP), damage (how much the

HP of those civilians dwindles every time step), and buriedness (a measure of how difficult it

is to rescue those particular civilians). The attributes related to buildings are location, whether

or not it is burning, how damaged it is, and the type of material used in the construction. All

this is abstracted in an attribute called fieriness. Finally the attributes of blockages are location in the street, cost to unblock, and their size (a measure of how they hinder the circulation

of vehicles and people through the street).

To measure the performance of the agents, the rescue simulator defines a score which is

computed, at the end of the simulation, as shown in Eq. 10, where P is the quantity of civilians

alive, H is a measure of their health condition, Hinit is a measure of the health condition of

all civilians at the beginning of the simulation, B is the building area left undamaged, and

Bmax is the initial building area.

\[
\mathit{Score} = \left( P + \frac{H}{H_{init}} \right) \cdot \sqrt{\frac{B}{B_{max}}} \qquad (10)
\]
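As a worked illustration, the score can be computed with a few lines of Python. The sketch assumes that Eq. 10 takes the form Score = (P + H/H_init) * sqrt(B/B_max), i.e. the square-root form of the RoboCup Rescue scoring rule; the function and parameter names are illustrative.

```python
import math

def rescue_score(alive, health, health_init, area, area_max):
    """Score = (P + H / H_init) * sqrt(B / B_max), under the assumed form of Eq. 10."""
    return (alive + health / health_init) * math.sqrt(area / area_max)

# Example: 100 civilians alive, half of the initial health, a quarter of the area intact.
example = rescue_score(alive=100, health=50, health_init=100, area=25, area_max=100)
```

Under this form the number of civilians alive dominates the score, while the building term acts as a multiplicative factor between 0 and 1.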

In order to model task allocation in the RoboCup Rescue as an E-GAP and use

eXtreme-Ants, we need to identify which are the tasks that must be performed by the agents

as well as the agents’ capabilities regarding these tasks.

6.1 Identifying tasks

In the rescue scenario, the tasks that must be performed by the agents are the following:

– Fire fighting. The goal is to minimize the building area damaged. These tasks are primarily

performed by fire brigade agents.

– Cleaning road blockages. The goal is to minimize the number of blocked roads in order

to improve the traffic flow. These tasks are performed by police force agents.

– Rescuing civilians. The goal is to maximize the number of civilians alive. These tasks

are normally performed by ambulance agents and comprise the rescue and transport of

injured (possibly buried) civilians to a refuge.

If a task is large and complex, a single agent will not be able to perform it successfully.

For instance, a single fire brigade will not extinguish a big fire; a blocked road will be cleared

more efficiently if many police forces act simultaneously; a buried civilian will be rescued more

quickly if many ambulance teams act jointly. For this purpose, we decompose the tasks into

inter-constrained subtasks that must be simultaneously performed by groups of agents. In the

following we present the approach used to decompose these tasks.

6.1.1 Decomposing fire fighting

The approach takes into account the sites located near a building, in a radius of 30 meters,

which is the maximum distance a fire brigade can dump water. This pragmatic approach

is motivated by the fact that two fire brigades cannot occupy the same physical space.

Defining d(s, b) as the Euclidean distance between site s and building b, the decomposition of a fire fighting task into a set αff of inter-constrained subtasks is formalized as:

\[
\alpha_{ff} = \{\, s \mid s \text{ is a site with } d(s, b) \le 30 \text{ meters} \,\}
\]
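A minimal Python sketch of this decomposition, assuming sites and buildings are given as planar coordinates in meters (the function name and the dictionary of sites are illustrative):

```python
import math

def fire_fighting_subtasks(building_xy, sites, radius=30.0):
    """Return the sites within `radius` meters of the burning building.

    building_xy: (x, y) position of building b.
    sites:       dict site_id -> (x, y) position.
    Each returned site is one subtask, i.e. one spot for a fire brigade.
    """
    bx, by = building_xy
    return [site_id for site_id, (x, y) in sites.items()
            if math.hypot(x - bx, y - by) <= radius]

sites = {"s1": (0.0, 10.0), "s2": (30.0, 0.0), "s3": (30.0, 1.0)}
nearby = fire_fighting_subtasks((0.0, 0.0), sites)
```

Here `s3` lies slightly more than 30 meters away and is therefore excluded.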

6.1.2 Decomposing cleaning of road blockages

The decomposition of these tasks takes into account the cost to clean the road. The cleaning

cost corresponds to the number of time steps a single police force would take to completely

clean the road. Alternatively, the cost can be seen as the number of police forces that must act

simultaneously to clean the road in a single time step. However, there are blockages in which

the cost (number of police forces to clean it in a single cycle) is greater than the number of

lanes in the road. Due to physical restrictions, the decomposition of cleaning tasks takes into

account both the cleaning cost and the number of lanes in the road. The decomposition of a

cleaning blockage task on a road r into a set αcb of inter-constrained subtasks is formalized

as:

\[
\alpha_{cb} = \left\{ j \;\middle|\;
\begin{cases}
0 < j \le lanes(r) & \text{if } clean\_cost(r) > lanes(r) \\
0 < j \le clean\_cost(r) & \text{otherwise}
\end{cases}
\right\}
\]
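In other words, the number of subtasks is the smaller of the cleaning cost and the number of lanes. A short Python sketch, assuming both quantities are non-negative integers (the function name is illustrative):

```python
def blockage_subtasks(clean_cost, lanes):
    """Return the subtask indices j for clearing one road.

    If the cleaning cost exceeds the number of lanes, the lanes bound the
    number of police forces that can work at once; otherwise the cost does.
    """
    count = lanes if clean_cost > lanes else clean_cost
    return list(range(1, count + 1))
```

For example, a blockage with cost 5 on a two-lane road yields two subtasks, while a blockage with cost 1 on a three-lane road yields a single one.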

6.1.3 Decomposing rescue of civilians

To decompose a task of rescuing civilians, their buriedness factor, HP, and their health conditions are taken into account. The buriedness is related to the number of time steps a single

ambulance team would take to completely remove the debris over a civilian. Alternatively,

the buriedness can be seen as the number of ambulance teams that must act simultaneously to

rescue a civilian in a single time step. The ambulance teams do not have restrictions regarding

the physical space, meaning that the value of the buriedness attribute can be used to define

the exact number of ambulances that are necessary to rescue a single civilian. However, it is

not efficient to allocate a large number of ambulance teams to a single civilian. We must take

into account the life expectancy of the civilians to better define the number of ambulances

that are needed. The life expectancy shown below specifies how many time steps the civilian

civ will remain alive given its HP and damage:

\[
life\_expect(civ) := \frac{hp(civ)}{damage(civ)}
\]

To be rescued, a civilian with low life expectancy requires more ambulance teams acting

simultaneously. On the other hand, a civilian with high life expectancy can be rescued by a

single ambulance team. The decomposition of the task of rescuing a civilian c into a set αc

of inter-constrained subtasks is formalized as follows:

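\[
\alpha_{c} = \left\{ j \;\middle|\;
\begin{cases}
0 < j \le 1 & \text{if } c \text{ is not buried or } life\_expect(c) > remaining\_time \\
0 < j \le \dfrac{buriedness(c)}{life\_expect(c)} & \text{otherwise}
\end{cases}
\right\}
\]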

The first case generates a single task (in fact no subtasks) and corresponds to a civilian

that is not buried or will remain alive until the end of the simulation. In this case just one

ambulance team is enough. The second case computes the necessary number of subtasks to

keep the civilian alive.
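The two cases can be sketched in Python as follows. Several details are assumptions made only for this illustration: a civilian with zero damage is treated as having unbounded life expectancy, a civilian with no HP left generates no subtask, and the ratio buriedness/life expectancy is rounded up so that at least one subtask is generated.

```python
import math

def life_expect(hp, damage):
    """Time steps the civilian stays alive; unbounded if it takes no damage (assumption)."""
    return math.inf if damage == 0 else hp / damage

def rescue_subtasks(hp, damage, buriedness, remaining_time):
    """Return the subtask indices j for rescuing one civilian."""
    if hp <= 0:
        return []  # assumption: nothing to rescue
    expect = life_expect(hp, damage)
    if buriedness == 0 or expect > remaining_time:
        return [1]  # first case: a single ambulance team is enough
    # Second case: teams needed to dig the civilian out before it dies
    # (rounding up is an assumption of this sketch).
    count = max(1, math.ceil(buriedness / expect))
    return list(range(1, count + 1))
```

A civilian with HP 100, damage 10 and buriedness 30 has a life expectancy of 10 time steps, so three ambulance teams are requested.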

6.2 Defining agents’ capabilities

To define the agents’ capabilities we use the Euclidean distance from the agent to the tasks. Equation 11 shows how the capability Cap(i, j) of an agent i is computed regarding each perceived task j ∈ J. In this equation d(i, j) is the Euclidean distance between agent i and task j. We adopt the distance as the agent’s capability based on our observation that the position of the agent has an effect on its performance. Close tasks require little or no movement by the agent. Thus, an agent saves more time when performing close tasks than when spending time moving to distant tasks.

\[
Cap(i, j) =
\begin{cases}
1 & \text{if } d(i, j) = 0 \\
1 - \dfrac{d(i, j) - \min_{k \in J} d(i, k)}{\max_{k \in J} d(i, k) - \min_{k \in J} d(i, k)} & \text{otherwise}
\end{cases}
\qquad (11)
\]

The first case in Eq. 11 corresponds to the maximal capability (task and agent are located in

the same position). Regarding fire brigades, this means that the maximal capability is associated with tasks located in the 30 meters radius in which the fire brigade can dump water.

The second case defines capability as decreasing linearly with the distance from the agent to the task, normalized over the tasks the agent perceives.
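A minimal Python sketch of Eq. 11 for a single agent is given below. It assumes the agent perceives at least one task; the handling of the degenerate case in which all perceived tasks are equally distant (which would otherwise divide by zero) is an assumption of this sketch and is not specified in the text.

```python
def capabilities(distances):
    """Compute Cap(i, j) for one agent i from its distances to perceived tasks.

    distances: non-empty dict task -> Euclidean distance d(i, j).
    """
    d_min = min(distances.values())
    d_max = max(distances.values())
    caps = {}
    for task, d in distances.items():
        if d == 0 or d_max == d_min:
            # First case of Eq. 11; the d_max == d_min branch is an assumption.
            caps[task] = 1.0
        else:
            caps[task] = 1.0 - (d - d_min) / (d_max - d_min)
    return caps

caps = capabilities({"a": 10.0, "b": 20.0, "c": 30.0})
```

Note that, because of the normalization, the closest perceived task always receives capability 1 and the farthest one capability 0.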

7 Experiments and results

We use the eXtreme-Ants algorithm to allocate tasks to agents in two scenarios that have the

characteristics associated with extreme teams. One is the abstract simulator used by Scerri

et al. in [17], while the other is the RoboCup Rescue scenario. In both cases eXtreme-Ants

was compared to Swarm-GAP and LA-DCOP. In all experiments, we tested thresholds

(LA-DCOP) and stimuli (eXtreme-Ants and Swarm-GAP) in the interval [0.1, 0.9].

In the next subsection we address the rescue scenario in detail. Section 7.2 presents only the main conclusions of the use of eXtreme-Ants in the abstract scenario; for more details please see [16].

Regarding the metrics used, the following were measured: communication, computational effort, and performance measures that are particular to each domain. Communication is important in scenarios with communication constraints. Some scenarios require fast decision-making. Thus, the computational effort is also an issue.

7.1 RoboCup Rescue simulator

We have run our experiments in a map that is frequently used by the RRSL community,

namely the so-called K obe_4. To render this scenario more complex, we have substantially

increased the number of agents and tasks regarding the default quantities used in the RRSL.2

In our experiments there are 50 fire brigades, 50 police forces, and 50 ambulance teams.

There are also 150 civilians and 50 fire spots. We remark that the number of fire spots

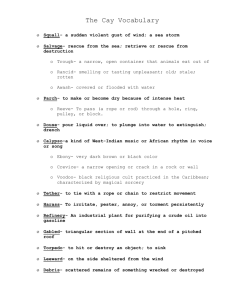

may increase as fires propagate. Figure 1 shows the K obe_4 map, along with agents’ initial

positions.

Each simulation consists of 300 time steps (default of the RRSL). Each data point in the

plots represents the average over 20 runs, disregarding the lowest and highest value. The

2 The quantities used in RRSL are the following: fire brigades: 0–15; police forces: 0–15; ambulance teams:

0–8; civilians: 50–90; fire spots: 2–30.

123

Auton Agent Multi-Agent Syst (2011) 22:465–486

479

Fig. 1 K obe_4 map used in

experiments with all three types

of agents

Fig. 2 Score achieved by each

algorithm as a function of

threshold (LA-DCOP) or

stimulus (eXtreme-Ants and

Swarm-GAP)

250

245

eXtreme-Ants

Swarm-GAP

LA-DCOP

240

Score

235

230

225

220

215

210

205

200

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

Stimulus or Threshold

perception of each agent is not restricted to the tasks it can perform (e.g., a fire brigade also

perceives civilians and blocked roads). Agents’ capabilities are computed as described in

Sect. 6.2. The tasks an agent perceives but somehow cannot perform have their capabilities

set to zero.
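The averaging used for each data point (20 runs, with the lowest and highest values discarded) corresponds to a simple trimmed mean. A minimal Python sketch, with an illustrative function name:

```python
def trimmed_mean(values):
    """Average of the values after dropping one lowest and one highest value."""
    if len(values) < 3:
        raise ValueError("need at least three values")
    kept = sorted(values)[1:-1]
    return sum(kept) / len(kept)
```

With 20 runs per configuration, each reported value is thus the mean of the 18 remaining runs, which limits the influence of a single outlier run.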

Next we discuss the performance of the algorithm regarding score, computational effort,

and communication.

7.1.1 Score

Score at the end of the RoboCup Rescue simulation is measured as in Eq. 10. Figure 2 shows, for each stimulus or threshold tested, the score achieved by each algorithm. The best performance of eXtreme-Ants occurs when the stimulus s is low. In this case, T_θij, as defined in Eq. 1, is high for the tasks an agent is highly capable of performing because θ_ij = 1 − Cap(i, j) (Eq. 9). Conversely, LA-DCOP uses a threshold T that represents the minimum capability that an agent must have in order to perform the task represented by the token. Hence, the higher the T, the more selective the process. This however does not mean that the higher the T (lower s), the higher the score. A degradation in performance occurs when T is too high because agents become too selective and start rejecting tasks. Similarly, for eXtreme-Ants, when s is too low, agents have a high tendency of doing only tasks they are highly capable of, thus ending up being too selective as well. In the end, in both cases, tasks remain unallocated when T is too high or s is too low.
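The effect of the stimulus on selectiveness can be illustrated numerically. Eq. 1 is not reproduced in this section; the Python sketch below assumes it has the usual response-threshold form T = s² / (s² + θ²) from the division-of-labor model, with θ = 1 − Cap(i, j) as in Eq. 9. The function name is illustrative.

```python
def tendency(stimulus, capability):
    """Assumed response-threshold form: s^2 / (s^2 + theta^2), theta = 1 - Cap."""
    theta = 1.0 - capability
    return stimulus ** 2 / (stimulus ** 2 + theta ** 2)

# Tendency to accept a task of medium capability (0.5) under low and high stimulus.
low = tendency(0.1, 0.5)
high = tendency(0.9, 0.5)
```

Under this assumed form, a task for which the agent has capability 1 is always accepted, whereas for a task of capability 0.5 the tendency drops from roughly 0.76 at s = 0.9 to below 0.04 at s = 0.1, which is the selectiveness discussed above.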

Table 1 Scores for the best and worst threshold/stimulus for each algorithm

Algorithm      Best case                 Worst case
               Score    Thresh./Stim.    Score    Thresh./Stim.
eXtreme-Ants   233.0    0.1              225.0    0.8
Swarm-GAP      212.3    0.1              208.3    0.9
LA-DCOP        226.7    0.7              222.2    0.2

A summary of the information in Fig. 2 appears in Table 1. This table shows, for each

algorithm, the best and worst scores observed, as well as the corresponding threshold or

stimulus. As we can see, the best score for LA-DCOP is roughly the same as the worst score

of eXtreme-Ants (t-test, 99% confidence).

The performance of LA-DCOP can be explained by the way agents decide which tasks

to execute, as discussed in Sect. 4.3. Because an agent always performs the task for which it

has maximal capability, tasks with sub-optimal capabilities are never performed.

In contrast, the probabilistic decision-making of eXtreme-Ants allows agents to perform sub-optimal tasks from time to time, leading to a better distribution of agents in the

environment. The spreading is effective in RoboCup Rescue because it tackles tasks that are

large or difficult (e.g., avoiding fire propagation to neighbor buildings and the worsening of

civilians’ health).

The scores of eXtreme-Ants and LA-DCOP are superior to Swarm-GAP due to the way

Swarm-GAP deals with constrained tasks; in fact it does not ensure the simultaneous execution of the constrained subtasks. Besides, frequently, agents in Swarm-GAP tend to select

tasks that are dispersed thus spending precious time moving to tasks rather than performing

the close ones.

We would like to remark that in the present work we are primarily concerned with task

allocation. Because we try to avoid ad hoc methods and/or heuristics for specific behavior

or unnecessary communication among agents in that scenario, our algorithm is of course

outperformed by the winner of the rescue competition as we do not deal with aspects of the

simulator other than task allocation. For instance we have turned off all central components,

namely those that centralize information that aim at facilitating the coordination of the agents.

These components are the fire station, the police office, and the ambulance center, which are

in charge of facilitating the communication and coordination of firemen, police forces, and

ambulance teams respectively.

However, for the sake of completeness we have performed a comparison with the vice-champion of the RRSL in 2008.3 The reason for not using the champion is that, due to

certain restrictions the authors of this code have made, it is not possible to use the higher

number of agents we have used in our experiments.

The score of the vice-champion is only 11.1% superior to that of eXtreme-Ants (19.8% superior to Swarm-GAP and 14.2% superior to LA-DCOP), using a t-test, 99% confidence. We consider this a very good result given that the participants at RRSL intensively exploit the characteristics of the simulator (including the central components) in order to develop their strategies which do include a lot of situation-specific coding and ad hoc methods. However this is not generalizable. We see the big advantage of using GAP-based approaches in the fact that this modelling allows the code to be used in situations with little or no change.

3 Code available at https://roborescue.svn.sourceforge.net/svnroot/roborescue/robocup2008data/.

Fig. 3 Computational effort of each algorithm as a function of threshold (LA-DCOP) or stimulus (eXtreme-Ants and Swarm-GAP) [plot: Evaluated tasks by agent (0 to 1000) versus Stimulus or Threshold (0 to 1); series: eXtreme-Ants, Swarm-GAP, LA-DCOP]

7.1.2 Computational effort

Agents in RoboCup Rescue have limited time (half a second) to decide which tasks to perform. Thus, the decision-making must be fast or the allocation becomes obsolete. Therefore, the intuition is that methods with low computational effort are required. To evaluate whether this is the case we measure the number of operations that are performed by an agent during the decision-making, in each time step.

Figure 3 shows the average computational effort of each algorithm, for different values of stimuli and thresholds. The lower effort seen for Swarm-GAP is due to its simple probabilistic decision-making. However, as mentioned previously, the score obtained by Swarm-GAP is worse than that of eXtreme-Ants. The higher effort of LA-DCOP relates to the fact that the agents must solve a BKP to decide which tasks to perform. To solve a BKP our implementation of LA-DCOP uses a greedy approach, which sorts the tasks by the agent’s capability and then selects the tasks to perform, i.e. the complexity is O(n log n). The computational effort in LA-DCOP decreases when the threshold increases due to the fact that higher thresholds imply that agents are more selective and thus perceive fewer tasks (i.e. n decreases), which has an impact on the maximization function. In other words, low thresholds mean more perceived tasks and hence higher computational effort.
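The greedy approach can be sketched in Python as follows. Only the sort-then-select idea and the O(n log n) cost come from the text; the tuple layout, the function name `greedy_bkp`, and the way resources are consumed are assumptions of this illustration.

```python
def greedy_bkp(tasks, resources, threshold):
    """Greedy selection for the binary knapsack problem faced by one agent.

    tasks:     list of (task_id, capability, required_resources).
    resources: resources currently available to the agent.
    threshold: minimum capability for a task to be considered at all.
    """
    # A higher threshold leaves fewer candidates, so n (and the effort) shrinks.
    candidates = [t for t in tasks if t[1] >= threshold]
    # Sorting dominates the cost: O(n log n).
    candidates.sort(key=lambda t: t[1], reverse=True)
    accepted = []
    for task_id, _capability, need in candidates:
        if need <= resources:
            accepted.append(task_id)
            resources -= need
    return accepted

tasks = [("a", 0.9, 2), ("b", 0.4, 1), ("c", 0.7, 2), ("d", 0.8, 1)]
```

With 3 units of resources and a threshold of 0.5, tasks `a` and `d` are accepted; raising the threshold to 0.85 leaves only `a` as a candidate.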

7.1.3 Communication channel

In all three algorithms, when an agent decides not to perform a task, it passes on the task

to a teammate. To evaluate the efficient use of the communication channel, we measure the

total of bytes sent during the simulation, regardless of message type (e.g. token, recruitment

request, etc.). This is shown in Fig. 4.

eXtreme-Ants sends fewer bytes than LA-DCOP and Swarm-GAP. This is explained by

the fact that the use of the communication channel is directly related to the score (see Fig. 2).

For instance, when a fire is efficiently extinguished, it does not propagate to other buildings.

Thus, an algorithm with good task handling and execution reduces the chances of appearance

of related tasks (e.g. fire spreading) in the long run, with a consequent reduction in the need

for communication.

Fig. 4 Use of the communication channel (bytes sent) over time as a function of threshold (LA-DCOP) or stimulus (eXtreme-Ants and Swarm-GAP) [plot: Sent bytes (1.5e+04 to 4.5e+04) versus Stimulus or Threshold (0 to 1); series: eXtreme-Ants, Swarm-GAP, LA-DCOP]

7.2 Abstract simulator

The abstract simulator used by Scerri et al. allows experimentation in scenarios comprising a number of agents and tasks (thousands) that is not supported by the RoboCup Rescue simulator. On the other hand, it is not realistic in the sense that it does not address issues such as the consequences of not handling a task, e.g. a fire that can then spread, creating new tasks. In other words, task appearance and disappearance in the abstract simulator obey a time-independent statistical distribution. This renders this scenario less complex than the rescue scenario, at least from the point of view of task allocation.

As in [17], each experiment consists of 2000 tasks, grouped into five classes, where each

class determines the task characteristics. The number of agents varies from 500 to 4000.

This means that the load (ratio between tasks and agents) is 4 in the first case and 0.5 in

the latter. Each agent has a 60% probability of having a non-zero capability for each class.

In this case the agent has a randomly assigned capability ranging from 0 to 1. Regarding

the AND-constraints among tasks, 60% of the tasks are related in groups of five tasks. The

communication channel is reliable (every message sent is received) and fully connected (each

agent is connected to every other agent). Each experiment consists of 1000 time steps. The

total number of tasks is kept constant. At each time step, each task has a probability of 10% of

being replaced by a task potentially requiring a different capability. The tasks are persistent,

which means that non allocated tasks are kept in the next time step. At each time step, each

token or message is allowed to move from one agent to another only once. Despite the fact

that each task may have a particular stimulus value, we adopt the same value for all tasks.

Each datapoint in the plots we show here represents the average over 20 runs.
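The setup described above can be summarized in a short Python sketch. It is not the simulator of Scerri et al.; the function names and data layout are hypothetical and only the numeric parameters (five classes, 60% chance of a non-zero capability, 10% replacement probability per time step) are taken from the text.

```python
import random

def make_agents(n_agents, n_classes=5, p_capable=0.6, rng=random):
    """One capability per task class: random in [0, 1) with probability p_capable, else 0."""
    return [[rng.random() if rng.random() < p_capable else 0.0
             for _ in range(n_classes)]
            for _ in range(n_agents)]

def step_tasks(task_classes, n_classes=5, p_replace=0.1, rng=random):
    """Replace each task, with probability p_replace, by a task of a random class."""
    return [rng.randrange(n_classes) if rng.random() < p_replace else c
            for c in task_classes]

def load(n_tasks, n_agents):
    """Ratio between tasks and agents."""
    return n_tasks / n_agents
```

With 2000 tasks, `load(2000, 500)` gives 4 and `load(2000, 4000)` gives 0.5, matching the two extremes mentioned above; `step_tasks` keeps the total number of tasks constant.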

7.2.1 Performance regarding total reward

As defined by the E-GAP, the goal is to maximize the total reward i.e. the sum of the reward

at each time step (Eq. 6). As in the RoboCup Rescue scenario, we have tested each algorithm with threshold (for LA-DCOP) and stimulus (for eXtreme-Ants and Swarm-GAP) in

the interval [0.1, 0.9]. Additionally, in the case of eXtreme-Ants the number of recruitment

requests is five for each AND-constrained task, and the timeout is 20.

Results from the experiments (details in [16]) show that low stimuli (0.2) yield higher rewards for both eXtreme-Ants and Swarm-GAP, whereas LA-DCOP achieves the best reward when the threshold tends to 0.6, as expected given hints by Scerri et al. The explanation for this behavior is the same as already discussed in Sect. 7.1.1, namely selectiveness given the threshold or stimulus.

On average, eXtreme-Ants yields rewards that are 25% higher than those of Swarm-GAP

and 19% lower than those of LA-DCOP (t-test, 99% confidence). Rewards achieved by

Swarm-GAP are worse than those achieved by eXtreme-Ants and LA-DCOP because agents

accept tasks according to the available resources, but this allocation does not necessarily

translate into a reward because some AND-constrained tasks are not performed. LA-DCOP

yields higher rewards than eXtreme-Ants because each agent maximizes its capability when

accepting the tasks, taking into account the available resources. On the other hand, agents in eXtreme-Ants make a simple one-shot decision to allocate tasks, which has a positive impact on the communication and computational effort.

7.2.2 Communication channel

Communication is measured as the sum of messages sent by the agents over all time steps, regardless of message type. On average, agents in LA-DCOP sent 121% more messages than those in eXtreme-Ants, which in turn sent 80% more messages than those in Swarm-GAP. The smallest difference to LA-DCOP occurs with 3000 agents. Even in this case LA-DCOP sends 66% more messages than eXtreme-Ants. These results are statistically significant (t-test, 99% confidence).

7.2.3 Computational effort

Here computational effort also means the number of operations that are performed by an

agent in the decision-making about which tasks to perform at each time step. This number is computed as follows. Each time an agent decides whether or not to accept a task, an

internal counter is incremented. In the case of eXtreme-Ants and Swarm-GAP, each probabilistic decision causes just one increment in the counter. On the other hand, since an agent

in LA-DCOP solves a BKP to decide which tasks to accept, the increment in the counter is

related to the number of retained tasks.

The computational effort of Swarm-GAP is, on average, 55% lower than that of eXtreme-Ants. Since in Swarm-GAP there is no explicit coordination mechanism to deal with AND-constrained tasks, agents do not have to make additional evaluations regarding the simultaneous allocation of constrained tasks, reducing the computational effort of Swarm-GAP. However, as shown previously, the absence of such a mechanism affects the total reward. The higher computational effort of both eXtreme-Ants and LA-DCOP is due to the presence of an explicit coordination mechanism to deal with constrained tasks.

eXtreme-Ants outperforms LA-DCOP: the computational effort of LA-DCOP is on average 151% higher than that of eXtreme-Ants. The most significant result is for the case of 500 agents, in which the computational effort of LA-DCOP is 493% higher than that of eXtreme-Ants.

7.3 Discussion about the experiments

Comparing the results obtained in both environments, the performance of eXtreme-Ants was

higher than LA-DCOP in the RoboCup Rescue simulator, but not in the abstract simulator.

This is due to the way the dynamics is simulated in these environments.

In the abstract simulator, the dynamics is simulated through changes in the characteristics

of the tasks, but the total number of tasks is fixed. This means that tasks not performed in

a time step do not influence the number of tasks that will appear in the future. Therefore,

LA-DCOP is more efficient as it performs only tasks that maximize the current total reward.

In the abstract simulator this greedy approach has few consequences.

On the other hand, in the RoboCup Rescue simulator the characteristics and quantity of tasks change throughout the simulation, these changes being influenced by the behavior of the agents themselves (when performing the tasks). For example, the tasks not performed in a time step may contribute to the emergence of new tasks, as when a fire that was not extinguished spreads to nearby buildings. The performance of eXtreme-Ants in this case is higher since the probabilistic decision-making allows agents to perform tasks for which their capabilities are not necessarily the best (suboptimal), causing a better distribution of agents in the environment. This seems to be more efficient in RoboCup Rescue since it reduces the number of tasks that arise on the fly during the simulation.

This is indeed the case, as discussed in Sect. 7.1. When the score is considered, the best score for LA-DCOP is roughly the same as the worst score of eXtreme-Ants. Regarding computational effort (number of operations that are performed by an agent for the decision-making, at each time step), in the case of eXtreme-Ants this is a one-shot decision for each agent, while agents in LA-DCOP each solve a BKP. Finally, regarding communication load, the AND-constrained tasks are well handled by eXtreme-Ants, reducing the chances of appearance of related tasks, with a consequent reduction in the need for communication.

In summary, as shown in the experiments, the swarm intelligence based approaches (probabilistic allocation based on the model of division of labor as well as the recruitment) present in eXtreme-Ants reduce the amount of communication and the computational effort, and are able to increase the score in highly dynamic scenarios. However, we emphasize that the choice of one particular algorithm must be related to the constraints of the scenario. LA-DCOP seems to be a good choice when one or more of these conditions hold: there are no constraints in the communication channel or in the time that the agents have to make decisions; the number of tasks is fixed; tasks not performed in a time step do not contribute to the emergence of new tasks. However, in scenarios with communication or time constraints, and where the number of tasks is not fixed or is influenced by other tasks, eXtreme-Ants seems more appropriate.

8 Conclusions

In this paper we have addressed the RoboCup Rescue as a problem of multiagent task allocation in extreme teams. We have extended the works previously presented in [5,17] regarding the implementation of the concept of extreme teams (as proposed by Scerri et al. and discussed in the introduction of this paper), applied to the full version of the RoboCup Rescue scenario, i.e. with all 3 kinds of agents. Previously, this concept was tested only in an abstract task allocation scenario [17], or in the RoboCup Rescue itself but, in this case, with a simpler implementation of inter-task constraints [5].

We have presented an empirical comparison between our swarm intelligence based method, called eXtreme-Ants, and two other methods for task allocation, both based on the GAP (generalized assignment problem). The experimental results show that the use of the model of division of labor in eXtreme-Ants allows agents to make efficient coordinated actions. Since the decision is probabilistic, it is fast, efficient, and requires reduced communication and computational effort, enabling agents to act efficiently in environments where the time available to make a decision is highly restricted, which is the case of RoboCup Rescue. Moreover, the recruitment process incorporated into eXtreme-Ants provides efficient allocation of constrained tasks that require simultaneous execution, leading to better performance. The efficiency of eXtreme-Ants regarding score (in the RoboCup Rescue), communication, and computational effort suggests that techniques that are biologically inspired can be considered for multiagent task allocation, especially in dynamic and complex scenarios such as the RoboCup Rescue.

The main suggestion for future work is to investigate how the efficiency of eXtreme-Ants can be

improved while keeping its swarm intelligence flavor.

The following are possible directions.

As defined in the model of division of labor, stimuli values reflect the need and urgency

regarding the execution of given tasks. Thus, we intend to investigate changing

stimuli values dynamically, indicating different priorities in the execution of tasks, as well as

constraints related, for instance, to a physical impossibility of performing a given set of tasks

simultaneously. Currently, the tendency to select a task is a function of the capability (via

response threshold) and task stimulus only. Another possibility is to incorporate the resources

that are required by a task in the computation of the tendency. With such modifications, we

expect to deal with capabilities and resources in a better way, leading to a higher overall

performance.
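
To make this direction concrete, the following is a minimal Python sketch, not the implementation used in the experiments. It assumes the commonly used response-threshold form T = s^2 / (s^2 + theta^2) from the model of division of labor [21] and a threshold of theta = 1 - capability. The resource factor (`available`, `required`) and all function names are hypothetical; they only illustrate one way in which resources could enter the computation of the tendency.

```python
def tendency(stimulus: float, threshold: float, n: float = 2.0) -> float:
    """Response-threshold tendency T = s^n / (s^n + theta^n).

    Returns a value in [0, 1]; a zero stimulus yields a zero tendency.
    """
    if stimulus <= 0.0:
        return 0.0
    s_n = stimulus ** n
    return s_n / (s_n + threshold ** n)


def threshold_from_capability(capability: float) -> float:
    """Assumed mapping: a higher capability gives a lower threshold."""
    return 1.0 - capability


def tendency_with_resources(stimulus: float, capability: float,
                            available: float, required: float) -> float:
    """Hypothetical variant: scale the tendency by the fraction of the
    required resources that the agent currently holds (capped at 1)."""
    base = tendency(stimulus, threshold_from_capability(capability))
    if required <= 0.0:
        return base  # the task needs no resources, so nothing to scale
    return base * min(1.0, available / required)


if __name__ == "__main__":
    # Same stimulus and capability, but the agent holds half the resources.
    print(tendency(0.5, threshold_from_capability(0.5)))
    print(tendency_with_resources(0.5, 0.5, available=1.0, required=2.0))
```

Under these assumptions, an agent that holds only part of the resources a task requires is proportionally less likely to select it, while the original behavior is recovered whenever the resources are sufficient.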

The complete elimination of direct communication among the agents can also be considered. To replace the direct communication, we suggest the use of stigmergy. This can be

achieved by incorporating the ability to deposit and detect pheromone in the environment.

However, the drawback of using stigmergy is the need for agents to move through the

environment to release pheromones.
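
As a rough illustration of what depositing and detecting pheromone could look like, the sketch below models the environment as a map from locations to pheromone levels. Everything in it is an assumption made for illustration: the class name `PheromoneField`, the multiplicative evaporation rule, and the use of arbitrary location identifiers are not part of eXtreme-Ants or of the RoboCup Rescue simulator.

```python
class PheromoneField:
    """Hypothetical shared environment holding pheromone levels per location."""

    def __init__(self, evaporation_rate: float = 0.1):
        if not 0.0 <= evaporation_rate <= 1.0:
            raise ValueError("evaporation_rate must be in [0, 1]")
        self.evaporation_rate = evaporation_rate
        self.levels = {}  # location id -> pheromone level

    def deposit(self, location, amount: float) -> None:
        """An agent standing at `location` adds pheromone there."""
        self.levels[location] = self.levels.get(location, 0.0) + amount

    def sense(self, location) -> float:
        """An agent standing at `location` reads the local level."""
        return self.levels.get(location, 0.0)

    def evaporate(self, epsilon: float = 1e-6) -> None:
        """Decay all levels once per time step and drop negligible ones."""
        decayed = {}
        for location, level in self.levels.items():
            level *= 1.0 - self.evaporation_rate
            if level > epsilon:
                decayed[location] = level
        self.levels = decayed


if __name__ == "__main__":
    field = PheromoneField(evaporation_rate=0.5)
    field.deposit("building-1", 1.0)  # an agent must be there to deposit
    field.evaporate()
    print(field.sense("building-1"), field.sense("building-2"))
```

Both `deposit` and `sense` take the agent's current location, which reflects the cost noted above: information is exchanged only where agents physically go.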

Acknowledgments This research is partially supported by the Air Force Office of Scientific Research

(AFOSR) (grant number FA9550-06-1-0517) and by the Brazilian National Council for Scientific and Technological Development (CNPq). We also thank the anonymous reviewers for their suggestions.

References

1. Agassounon, W., & Martinoli, A. (2002). Efficiency and robustness of threshold-based distributed

allocation algorithms in multi-agent systems. In Proceedings of the first international joint conference

on autonomous agents and multiagent systems, AAMAS 2002 (pp. 1090–1097). New York, NY, USA:

ACM.

2. Bonabeau, E., Theraulaz, G., & Dorigo, M. (1999). Swarm intelligence: From natural to artificial

systems. New York, USA: Oxford University Press.

3. Cicirello, V., & Smith, S. (2004). Wasp-like agents for distributed factory coordination. Journal of

Autonomous Agents and Multi-Agent Systems, 8(3), 237–266.

4. Ferreira, P. R., Jr., Boffo, F., & Bazzan, A. L. C. (2008). Using swarm-GAP for distributed task

allocation in complex scenarios. In N. Jamali, P. Scerri, & T. Sugawara (Eds.), Massively multiagent

systems, volume 5043 of lecture notes in artificial intelligence (pp. 107–121). Berlin: Springer.

5. Ferreira, P. R., Jr., dos Santos, F., Bazzan, A. L. C., Epstein, D., & Waskow, S. J. (2010). Robocup

Rescue as multiagent task allocation among teams: Experiments with task interdependencies. Journal

of Autonomous Agents and Multi-Agent Systems, 20(3), 421–443.

6. Ham, M., & Agha, G. (2007). Market-based coordination strategies for large-scale multi-agent

systems. System and Information Sciences Notes, 2(1), 126–131.

7. Ham, M., & Agha, G. (2008). A study of coordinated dynamic market-based task assignment in

massively multi-agent systems. In N. Jamali, P. Scerri, & T. Sugawara (Eds.), Massively multiagent

systems, volume 5043 of lecture notes in artificial intelligence (pp. 43–63). Berlin: Springer.

8. Hölldobler, B. (1983). Territorial behavior in the green tree ant (Oecophylla smaragdina). Biotropica,

15(4), 241–250.

9. Hölldobler, B., Stanton, R. C., & Markl, H. (1978). Recruitment and food-retrieving behavior in

Novomessor (Formicidae, Hymenoptera). Behavioral Ecology and Sociobiology, 4(2), 163–181.

10. Hunsberger, L., & Grosz, B. J. (2000). A combinatorial auction for collaborative planning. In

Proceedings of the fourth international conference on multiagent systems, ICMAS (pp. 151–158).

Boston.

11. Kitano, H., Tadokoro, S., Noda, I., Matsubara, H., Takahashi, T., Shinjou, A., & Shimada, S. (1999).

Robocup Rescue: Search and rescue in large-scale disasters as a domain for autonomous agents

research. In Proceedings of the IEEE international conference on systems, man, and cybernetics, SMC

(Vol. 6, pp. 739–743). Tokyo, Japan: IEEE.

12. Krieger, M. J. B., Billeter, J.-B., & Keller, L. (2000). Ant-like task allocation and recruitment in

cooperative robots. Nature, 406(6799), 992–995.

13. Kube, R., & Bonabeau, E. (2000). Cooperative transport by ants and robots. Robotics and Autonomous

Systems, 30(1/2), 85–101. ISSN: 0921-8890.

14. Rahwan, T., & Jennings, N. R. (2007). An algorithm for distributing coalitional value calculations

among cooperating agents. Artificial Intelligence Journal, 171(8–9), 535–567.

15. Robson, S. K., & Traniello, J. F. A. (1998). Resource assessment, recruitment behavior, and organization

of cooperative prey retrieval in the ant Formica schaufussi (Hymenoptera: Formicidae). Journal of

Insect Behavior, 11(1), 1–22.

16. Santos, F. D., & Bazzan, A. L. C. (2009). eXtreme-ants: Ant based algorithm for task allocation

in extreme teams. In N. R. Jennings, A. Rogers, J. A. R. Aguilar, A. Farinelli, & S. D. Ramchurn

(Eds.), Proceedings of the second international workshop on optimisation in multi-agent systems

(pp. 1–8). Budapest, Hungary, May.

17. Scerri, P., Farinelli, A., Okamoto, S., & Tambe, M. (2005). Allocating tasks in extreme teams. In

F. Dignum, V. Dignum, S. Koenig, S. Kraus, M. P. Singh, & M. Wooldridge (Eds.), Proceedings of

the fourth international joint conference on autonomous agents and multiagent systems (pp. 727–734).

New York, USA: ACM Press.

18. Shehory, O., & Kraus, S. (1995). Task allocation via coalition formation among autonomous agents.

In Proceedings of the fourteenth international joint conference on artificial intelligence (pp. 655–661).

Montréal, Canada: Morgan Kaufmann.

19. Skinner, C., & Barley, M. (2006). Robocup Rescue simulation competition: Status report. In

A. Bredenfeld, A. Jacoff, I. Noda, & Y. Takahashi (Eds.), RoboCup 2005: Robot Soccer world

cup IX, volume 4020 of lecture notes in computer science (pp. 632–639). Berlin: Springer.

20. Smith, R. G. (1980). The contract net protocol: High-level communication and control in a distributed

problem solver. IEEE Transactions on Computers, C-29(12), 1104–1113.

21. Theraulaz, G., Bonabeau, E., & Deneubourg, J. (1998). Response threshold reinforcement and division

of labour in insect societies. Proceedings of the Royal Society of London Series B - Biological Sciences, 265, 327–332.

22. Xu, Y., Scerri, P., Sycara, K., & Lewis, M. (2006). Comparing market and token-based coordination. In

Proceedings of the fifth international joint conference on autonomous agents and multiagent systems,

AAMAS 2006 (pp. 1113–1115). New York, NY, USA: ACM.
