Development of Wetland Quality and Function Assessment Tools and Demonstration

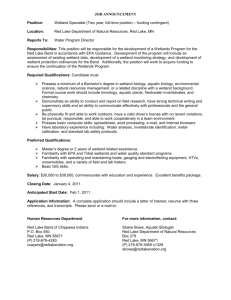
