Webinar_ST Table of Contents

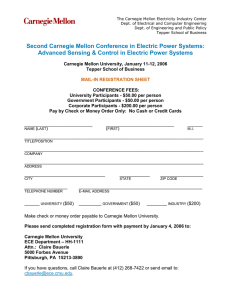

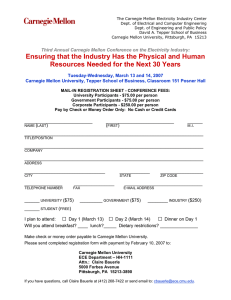

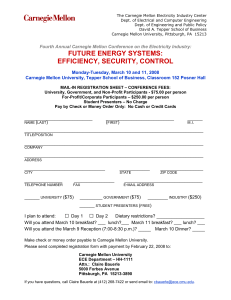
