Data & Knowledge Engineering 66 (2008) 368–381

Entity matching across heterogeneous data sources: An approach based on constrained cascade generalization

Huimin Zhao a,*, Sudha Ram b

a Sheldon B. Lubar School of Business, University of Wisconsin–Milwaukee, P.O. Box 742, Milwaukee, WI 53201, USA

b Department of Management Information Systems, Eller College of Management, University of Arizona, Tucson, AZ, USA

Article info

Article history:

Received 10 March 2008

Received in revised form 18 April 2008

Accepted 22 April 2008

Available online 4 May 2008

Keywords:

Heterogeneous databases

Entity matching

Record linkage

Decision tree

Cascade generalization

Abstract

To integrate or link the data stored in heterogeneous data sources, a critical problem is

entity matching, i.e., matching records representing semantically corresponding entities

in the real world, across the sources. While decision tree techniques have been used to

learn entity matching rules, most decision tree learners have an inherent representational

bias, that is, they generate univariate trees and restrict the decision boundaries to be axis-orthogonal hyper-planes in the feature space. Cascading other classification methods with

decision tree learners can alleviate this bias and potentially increase classification accuracy. In this paper, the authors apply a recently-developed constrained cascade generalization method in entity matching and report on empirical evaluation using real-world data.

The evaluation results show that this method outperforms the base classification methods

in terms of classification accuracy, especially in the dirtiest case.

© 2008 Elsevier B.V. All rights reserved.

1. Introduction

Modern organizations are increasingly operating upon distributed and heterogeneous information systems, as they continuously build new autonomous systems, powered by the rapid advancement of information technology. They are facing

challenges to integrate and effectively use the data scattered in different local systems. The need to integrate heterogeneous

data sources, both within and across organizations, is indeed becoming pervasive. Data “islands” that independently emerge

over time in different sections of an organization need to be integrated for strategic and managerial decision making purposes. Information systems previously owned by different companies need to be integrated following business mergers

and acquisitions. Business partners involved in joint ventures need to share or exchange information across their system

boundaries. The rapid growth of the Internet, especially the recent development of Web services, continuously amplifies

the need for semantic interoperability across heterogeneous data sources; related data sources accessible via different

Web services create new requirements and opportunities for data integration.

To integrate or link the data stored in a collection of heterogeneous data sources, either physically (e.g., by consolidating

local data sources into a data warehouse [12]) or logically (e.g., by building a wrapper/mediator system such as COIN [32]), a

critical prerequisite, among others, is to determine the semantic correspondences across the data sources [46]. Such correspondences exist on both the schema level and the instance level. Schema level correspondences consist of tables that represent the same real-world entity type and attributes that represent the same property about some entity type. Instance

level correspondences consist of records that represent the same entity in the real world.

In this paper, we deal with entity matching (i.e., detecting instance level correspondences) from heterogeneous data sources. A closely related problem is duplicate detection, where multiple approximate duplicates of the same entity stored in the same data source need to be identified [8]. The entity matching problem arises when there is no common identifier across the heterogeneous data sources. For example, when two companies merge, the customer databases owned by the companies need to be merged too, but the customer numbers used to uniquely identify customers are different across the databases. In such situations, the correspondence between two records stored in different databases needs to be determined based on other common attributes.

* Corresponding author. Tel.: +1 414 229 6524; fax: +1 414 229 5999. E-mail address: hzhao@uwm.edu (H. Zhao).

doi:10.1016/j.datak.2008.04.007

The initial success of a recently-developed constrained cascade generalization method [63] in solving general classification problems has motivated us to investigate its applicability and utility in solving the important and difficult entity matching problem, which can be formulated as a classification problem. We have empirically evaluated the constrained cascade

generalization method using several real-world heterogeneous data sources. In this paper, we describe how this method

can be applied in learning entity matching rules from heterogeneous databases and report on some empirical results, which

show that this method outperforms the base classification methods in terms of classification accuracy, especially in the dirtiest case. We use the term “dirty data” to refer to data that contain substantial incorrect, inaccurate, imprecise, out-of-date,

duplicate, or missing items. As real-world data are often very dirty [25,49,52], the constrained cascade generalization method may be even more productive in performance improvement when matching real-world dirty data sources.

The rest of the paper is organized as follows. Section 2 briefly reviews past approaches to entity matching. Section 3 describes the constrained cascade generalization method and its application in entity matching. Section 4 reports on an empirical evaluation for entity matching. Section 5 outlines some future research directions, and Section 6 summarizes the contribution of this work.

2. Literature review

The various approaches to entity matching proposed in the literature can be classified into two broad categories: rule-based and learning-based. In rule-based approaches, domain experts are required to directly provide decision rules for

matching semantically corresponding records. In learning-based approaches, domain experts are required to provide sample

matching (and non-matching) records, based on which classification techniques are used to learn the entity matching rules.

In most of the rule-based approaches, the entity matching rules involve a comparison between an overall similarity measure for two records and a threshold value; if the similarity measure is above the threshold, the two records are considered

matching. The overall similarity measure is usually computed as a weighted sum of similarity degrees between common

attributes of the two records. Conversion rules can be defined to derive compatible common attributes by converting the

formats of originally incompatible attributes [7]. The weight of each common attribute needs to be specified by domain experts based on its relative importance in determining whether two records match or not [9]. Segev and Chatterjee [45] also

used statistical techniques, such as logistic regression, to estimate the weights and thresholds, besides consulting domain

experts. Hernández and Stolfo [25] developed a declarative language for specifying such entity matching rules.
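The weighted-sum rule described in this paragraph can be sketched in a few lines of Python. This is only an illustration of the general idea, not the implementation of any cited system; the attribute names, weights, and threshold are hypothetical, and dividing by the total weight (so that the result stays in [0, 1]) is an added assumption.

```python
def overall_similarity(similarities, weights):
    """Weighted sum of per-attribute similarity degrees, each in [0, 1].

    Dividing by the total weight keeps the result in [0, 1]; this
    normalization is an assumption made for the sketch.
    """
    total_weight = sum(weights.values())
    return sum(weights[a] * similarities[a] for a in weights) / total_weight


def is_match(similarities, weights, threshold):
    """Two records are considered matching when the overall similarity
    is above the threshold."""
    return overall_similarity(similarities, weights) > threshold


# Hypothetical weights and threshold, as a domain expert might specify them.
WEIGHTS = {"name": 0.5, "address": 0.3, "phone": 0.2}
THRESHOLD = 0.8

# Hypothetical similarity degrees for one record pair.
pair_similarities = {"name": 1.0, "address": 0.9, "phone": 0.0}
print(is_match(pair_similarities, WEIGHTS, THRESHOLD))
```

In a learning-based approach, the weights and the threshold would instead be estimated from pre-matched examples.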

Recently, classification techniques, especially decision tree techniques, such as CART [23] and C4.5 [21,54], have been applied in entity matching. Such techniques can automatically induce (learn) entity matching rules (classifiers) from pre-matched training samples. The learned classifiers can then be used to match other records not covered by the training

samples.

Statistical methods for entity matching, under the name of record linkage, have an even longer history. Newcombe et al.

[38] proposed the first probabilistic approach to record linkage, which computes odds ratios of frequencies of matches and

non-matches based on intuition and past experience. The research along this line was summarized by Newcombe [37].

Fellegi and Sunter [18] proposed a formal mathematical method for record linkage. This method can be seen as an extension

of the Bayes classification method with a conditional independence assumption (the matches on the individual attributes of

two records are assumed to be mutually independent given the overall match of the records). It allows users to specify the

minimum acceptable error rates and classifies record pairs into three categories: match, non-match, and unclassified.

Unclassified record pairs then need to be manually evaluated. Several record linkage systems, e.g., GRLS of Statistics Canada

[17] and the system of the US Bureau of the Census [57], have been developed based on Newcombe’s [37] approach and Fellegi and Sunter’s [18] theory of record linkage. Torvik et al. [51] recently proposed a probabilistic approach, which is indeed

similar to that of Fellegi and Sunter.
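A minimal sketch of the three-way decision in the Fellegi and Sunter style is given below. It uses the usual formulation in which each attribute contributes a log-likelihood ratio and, under the conditional independence assumption, the contributions are summed. The attribute names, the agreement probabilities, and the two thresholds are hypothetical; in the theory the thresholds are derived from the acceptable error rates rather than fixed by hand.

```python
import math


def match_weight(agreements, m_probs, u_probs):
    """Sum per-attribute log-likelihood ratios.

    m_probs[a]: probability that attribute a agrees given a true match.
    u_probs[a]: probability that attribute a agrees given a true non-match.
    Summation relies on the conditional independence assumption.
    """
    weight = 0.0
    for attr, agrees in agreements.items():
        m, u = m_probs[attr], u_probs[attr]
        if agrees:
            weight += math.log2(m / u)
        else:
            weight += math.log2((1 - m) / (1 - u))
    return weight


def classify(weight, lower, upper):
    """Three-way decision: match, non-match, or unclassified (manual review)."""
    if weight >= upper:
        return "match"
    if weight <= lower:
        return "non-match"
    return "unclassified"


# Hypothetical probabilities and thresholds, for illustration only.
M_PROBS = {"surname": 0.9, "birth_year": 0.8}
U_PROBS = {"surname": 0.1, "birth_year": 0.2}
LOWER, UPPER = -3.0, 3.0

weight = match_weight({"surname": True, "birth_year": False}, M_PROBS, U_PROBS)
print(classify(weight, LOWER, UPPER))
```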

Further refinements over the basic probabilistic approach have also been developed. Dey et al. [13] and Dey [12] proposed

an integer programming formulation to minimize the total misclassification cost. Dey et al. [14] proposed another integer

programming formulation, using distances, instead of probabilities, as similarity measures. Torvik et al. [51] proposed methods for smoothing, interpolating, and extrapolating probability estimations and further adjusting predicted matching probabilities based on three-way comparisons.

Other classification techniques, e.g., logistic regression [39], have also been used in learning entity matching rules. A wide

variety of classification techniques, including naive Bayes, logistic regression, decision tree/rule/table, neural network, and

instance-based learning, have been empirically compared in the context of entity matching [64]. There are abundant comparisons of different classification techniques in the literature (e.g., [55]).

Learning-based approaches have an advantage over rule-based approaches in hard applications where the entity matching rules are non-trivial, involving approximate comparisons of many attributes. In such complex situations, it may be much

more difficult for human experts to explicitly specify decision rules than to provide some classified examples.

Multiple learning techniques can be combined to further improve classification accuracy. Tejada et al. [50] combined

multiple decision trees using bagging. Zhao and Ram [64] applied various ensemble methods, including cascading [20], bagging [4], boosting [19], and stacking [59], to combine multiple base classifiers into composite classifiers. Empirical results

show that these methods can often improve classification accuracy. The cascading method used in that study was loose coupling [20]. Since then, a new method for cascade generalization [63] has been developed that is more general than the loose

coupling and tight coupling strategies used in the past [20]. Its utility in general classification problems was empirically

demonstrated [63]. However, its application in entity matching problems is yet to be evaluated.

Besides classification (supervised learning) techniques, clustering (unsupervised learning) techniques have also been applied in entity matching [40]. Clustering techniques identify groups of similar entities based on some heuristic similarity

measure without using pre-matched training examples. They do not support predictions on new data and need to be rerun

when data have been updated. They seem to be more suitable for matching schema elements (tables and attributes), which

are relatively static, than for matching records of entities, which are frequently updated in most operational systems [66].

3. Constrained cascade generalization for entity matching

Entity matching can be formulated as a classification problem. As discussed earlier, classification techniques, especially

decision tree learners, have been used in entity matching. Cascading other techniques with decision tree learners can alleviate the representational bias of decision tree learners and potentially improve accuracy. A recently-developed constrained

cascade generalization method [63] uses a parameter to constrain the degree of cascading and find a balance between model

complexity and generalizability. Empirical evaluation in solving general classification problems has shown that this method

outperformed the base classification methods and was marginally better than bagging and boosting on the average [63]. In

this section, we describe its application in entity matching.

3.1. Entity matching

It has been demonstrated that entity matching in large heterogeneous data sources is often very complex and time-consuming due to low data quality and various types of discrepancies across the data sources. For example, it was reported [56]

that a project to integrate several mailing lists for the US Census of Agriculture consumed thousands of person-hours even

though an automated matching tool was used. Another example is the Master Patient Index (MPI) project funded by the

National Institute of Standards, which aims to develop a unified patient data source, allowing care providers to access the

medical records of all patients in the US stored in massively distributed systems [1]; a key challenge in this large project

is reliable matching of medical records across heterogeneous local systems. Automated (or semi-automated) tools are

needed to facilitate human analysts in the entity matching process.

The entity matching problem can be defined as the following classification problem. Given a pair of records drawn from

semantically corresponding tables (i.e., the tables represent the same real-world entity type) in two heterogeneous databases, the objective is to determine whether the records represent the same real-world entity (e.g., whether the two given

records are about the same person). This is a binary (match or non-match) classification problem. Two records can be compared on semantically corresponding attributes (i.e., the attributes describe the same property of some entity type). Similarity measures between the records on semantically corresponding attributes form a vector of input features. A classifier needs

to be trained, based on a sample of pre-classified examples, to predict whether two records match or not based on these

input features.

The input features can be various types of attribute-value matching functions. For two semantically corresponding attributes whose domains are $D_1$ and $D_2$, an attribute-value matching function is a mapping $f : D_1 \times D_2 \to [0, 1]$, which suggests the degree of match between two values in $D_1$ and $D_2$, where 1 indicates a perfect match and 0 indicates a total mismatch.

The simplest attribute-value matching function is equality comparison. However, equality comparison is often insufficient due to various schema level and data level discrepancies across databases. Interested readers are referred to Kim

and Seo [27] and Luján-Mora and Palomar [31] for more detailed discussions of semantic discrepancies across databases.

Special treatments are needed in comparing semantically corresponding attributes across data sources, accounting for the

various types of discrepancies. Transformation functions can be defined to convert corresponding attributes into compatible

formats, measurement units, and precisions. Approximate attribute-value matching functions can be defined to measure the

degree of similarity between two attribute values, even though they are not identical. There are many general-purpose

approximate string-matching methods, e.g., Soundex [6], Hamming distance, Levenshtein’s edit distance, longest common

substring, and q-grams [48], string-matching methods specifically developed for entity matching [3], and special-purpose

methods that are suitable for comparing special types of strings, e.g., addresses [56], entity names [11,30], and different

abbreviations [35]. Interested readers are referred to Stephen [48] and Budzinsky [6] for more detailed discussions of

string-matching methods. Long texts can be analyzed using natural language processing [34] and information extraction

[43] techniques.

Numeric attributes can be compared using normalized distance functions (possibly after transformation). Different coding schemes can be resolved using special dictionaries. Multiple transformation and attribute-value matching functions can

be combined to form an arbitrarily complex attribute-value matching function.
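The kinds of attribute-value matching functions discussed above, each mapping a pair of values to [0, 1], can be illustrated with a short Python sketch. It shows an equality comparison, a similarity derived from Levenshtein's edit distance, a normalized numeric distance, and a composition of a transformation with a matching function. The particular normalizations and the helper names are assumptions made for this example, not the functions used in the study.

```python
def equality_match(a, b):
    """Simplest matching function: 1 for identical values, 0 otherwise."""
    return 1.0 if a == b else 0.0


def levenshtein(a, b):
    """Edit distance between two strings (dynamic programming, row by row)."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def edit_similarity(a, b):
    """Map edit distance into [0, 1]; dividing by the longer length is an
    assumed normalization."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def numeric_similarity(a, b, value_range):
    """Normalized distance for numbers; value_range is a hypothetical scale."""
    return max(0.0, 1.0 - abs(a - b) / value_range)


def _clean(text):
    """Transformation step: lower-case and collapse whitespace."""
    return " ".join(text.lower().split())


def normalized_name_similarity(a, b):
    """Composition of a transformation with an approximate matching function."""
    return edit_similarity(_clean(a), _clean(b))


print(edit_similarity("Jon Smith", "John Smith"))
print(normalized_name_similarity("  John  SMITH", "john smith"))
```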

There are typically many more classification decisions to be made in entity matching than in other general classification

problems (e.g., [63]). Usually, in a general classification problem with n records, n classification decisions need to be made.

However, in an entity matching application involving two databases with m and n records, respectively, $m \times n$ record pairs

need to be classified. Even a small improvement in the classification accuracy, which may be deemed negligible for a general

classification problem, such as credit rating, can be considered practically useful or even significant for an entity matching

application.
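To make the size of the problem concrete, the sketch below enumerates every cross-source record pair and turns each pair into a feature vector. For instance, the 737 and 722 book records described in Section 4.1 would yield 737 × 722 = 532,114 candidate pairs. The record layout, the `exact` matcher, and the tiny catalogs are hypothetical and serve only to show the mechanics.

```python
from itertools import product


def candidate_pairs(source_a, source_b):
    """Yield every cross-source record pair: len(source_a) * len(source_b)."""
    return product(source_a, source_b)


def feature_vector(rec_a, rec_b, matchers):
    """Apply one attribute-value matching function per shared attribute."""
    return [match(rec_a[attr], rec_b[attr]) for attr, match in matchers.items()]


def exact(a, b):
    """Hypothetical matcher used for this illustration: equality comparison."""
    return 1.0 if a == b else 0.0


# Hypothetical miniature catalogs (not data from the study).
books_a = [{"isbn": "111", "title": "Databases"},
           {"isbn": "222", "title": "Mining"}]
books_b = [{"isbn": "111", "title": "Databases"},
           {"isbn": "333", "title": "Mining"},
           {"isbn": "444", "title": "Graphs"}]
MATCHERS = {"isbn": exact, "title": exact}

vectors = [feature_vector(a, b, MATCHERS)
           for a, b in candidate_pairs(books_a, books_b)]
print(len(vectors))
```

Each vector would then be classified as match or non-match by the learned classifier.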

3.2. Cascade generalization

After the input features (i.e., attribute-value matching functions) have been defined and training examples prepared, classification techniques can be applied to learn entity matching rules. Recently, decision tree techniques have been frequently

used in entity matching applications [15,21,23,50,54].

However, most decision tree algorithms have an inherent representational bias, that is, they do not learn intermediate

concepts and use only one of the original features in the branching decision at each intermediate tree node [55]. The trees

such algorithms learn are called univariate trees [36], as each node involves a univariate test. The trees are also called orthogonal trees because the decision boundaries in the feature space are restricted to be geometrically orthogonal to the splitting

feature’s axis. In a two-dimensional feature space, the decision boundaries consist of a sequence of line segments that must

be parallel to one of the two axes. Consequently, this representational bias limits the ability of univariate decision trees to fit

the training data, likely hampering classification accuracy.

It is possible to combine other classification techniques with decision tree techniques to alleviate the representational

bias of decision trees and potentially improve the classification accuracy. Such generalization of decision trees is named cascade generalization [20]. It has been demonstrated that such generalized decision trees often outperform univariate decision

trees [20]. The generalized decision trees are named multivariate decision trees [5] because the branching decision at an

intermediate tree node is based on a multivariate test, which is a function of the original features learned by the cascaded

classification techniques.

Past methods for cascade generalization combine multiple classification techniques via either loose coupling or tight

coupling [20]. When the classification techniques are loosely coupled, they are applied sequentially. Some classification

methods are used first to learn some initial classifiers. The discriminant functions generated by the classifiers are then used

as additional features for learning the final generalized decision tree. Each method is applied only once. When the classification techniques are tightly coupled, the cascaded methods are applied locally at each tree node in learning the generalized

decision tree and construct additional features based on the training examples covered by each node.

The structured induction approach used by Shapiro [47] in solving chess ending problems also generates multivariate

decision trees with respect to the initially available low-level features. However, this approach is fundamentally different

from cascade generalization, in that the high-level features are not automatically learned by an algorithm but manually prescribed by a human expert. Given a chess ending game, a domain expert first decomposes the problem into a hierarchical

tree of subproblems (i.e., top-down decomposition), until the subproblem at each leaf node can be correctly solved by the

ID3 decision tree induction algorithm [41]. ID3 is then used to solve each of the subproblems from the bottom of the hierarchy to the top (i.e., bottom-up induction). The ID3 tree for solving a subproblem on the bottom of the hierarchy is a univariate tree with respect to the initially available low-level features. The solutions to the subproblems on lower levels of the

hierarchy are then given to ID3 as primitive attributes in solving subproblems on a higher level. Thus, the ID3 trees on higher

levels of the hierarchy are multivariate trees with respect to the initially available low-level features, although ID3 itself always induces univariate trees with respect to the features given to it. Structured induction driven by human expertise is necessary in solving sufficiently complex problems, where a direct application of a learning algorithm does not seem to be

promising [47].

3.3. Constrained cascade generalization

Cascade generalization increases the flexibility (due to higher model complexity) of the learned decision trees and tends

to improve apparent accuracy (i.e., accuracy on the training sample). In addition, tight coupling provides more flexibility than

loose coupling and therefore has more potential in performance improvement. In general, as the model complexity increases,

the model has more flexibility in fitting the training data and tends to do so better. However, as a more complex model fits

the training data better, it is more sensitive to the training sample and therefore more prone to the overfitting problem (i.e., a

model has high accuracy on training data but low predictive power on other data). Apparent accuracy is not a reliable measure of the true performance of a learned classifier. A classifier with high apparent accuracy may not necessarily generalize to

unseen data well. A classical example is that the simple normal linear classifier has been found to often beat a natural extension (with higher complexity), namely, the quadratic classifier [55].

There is always a trade-off between model complexity and generalizability. A critical issue in tuning learned classifiers,

when multiple levels of complexity fit are possible, is to search for an appropriate level of complexity fit such that the

learned classifiers fit the training data relatively well and, more importantly, generalize well to future data [55, p. 36]. As

Hand et al. [24, p. 138] state: “This issue of selecting a model of the appropriate complexity is always a key concern in any data

analysis venture where we consider models of different complexities.”

This trade-off is also known as bias-variance trade-off [16,53]. The error of a classifier can be explained by two components, bias and variance, besides unavoidable noise. Bias reflects the difference between a model and the optimal model

(assuming an infinite training sample is given), and is an indicator of the intrinsic capability of the model in fitting the training sample. Variance reflects the variations of the model across samples. In general, lower bias demands higher model complexity, which tends to lead to higher variance. In other words, a more complex model may be able to more closely

approximate the optimal model, but at the same time, will be more sensitive to the training sample. Finding a model that

balances the bias and variance components and has low overall error requires identifying the appropriate model complexity.
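For intuition, the decomposition is easiest to write down for squared-error loss; for classification error the definitions are analogous but more involved. As a sketch, assume a target $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and a model $\hat{f}_D$ learned from a random training sample $D$ (this notation is introduced here for illustration only). The expected error at a point $x$ then splits into the three components named above:

```latex
\[
\mathbb{E}_{D,\varepsilon}\!\left[\bigl(y - \hat{f}_D(x)\bigr)^2\right]
  = \underbrace{\sigma^2}_{\text{noise}}
  + \underbrace{\bigl(\mathbb{E}_D[\hat{f}_D(x)] - f(x)\bigr)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}_D\!\left[\bigl(\hat{f}_D(x) - \mathbb{E}_D[\hat{f}_D(x)]\bigr)^2\right]}_{\text{variance}}
\]
```

A more complex model can shrink the bias term, but the variance term typically grows because the fitted model changes more from one training sample to another.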

Cascade generalization is not exempt from this trade-off. While cascading increases the model complexity (and therefore

flexibility) by introducing more complex decision tree nodes and tends to increase classification accuracy on the training

data, it does not mean that the deeper the classification methods are cascaded the better. If the other classification methods

are fully cascaded with the decision tree learner (i.e., tight coupling), the local models learned by cascaded classification

methods at each tree node are based on fewer and fewer training examples, which also become more and more unbalanced

with more examples of the majority class covered by the current branch. Such local models become increasingly prone to

overfitting the local training data. Tight coupling may not outperform loose coupling, which in turn may not even outperform the underlying univariate decision tree learner. The most productive level of cascade generalization must be determined empirically for a particular classification problem.

A recently-developed generic method for constrained cascade generalization [63] uses the maximum cascading depth as a

parameter to simulate the trade-off between model complexity and generalizability, and to find the appropriate model complexity. This parameter effectively constrains cascading, and therefore model complexity, to some degree. Cascading methods proposed in the past, including loose coupling and tight coupling, are strictly special cases of this method. As the

cascading depth increases, the model complexity increases, lowering the bias, but the variance also increases, lowering

the generalizability. Constrained cascade generalization allows the evaluation of multiple cascading depths to find one that

gives appropriate complexity fit. Empirical evaluation in general classification problems, such as credit rating, disease diagnosis, labor relations, image segmentation, and congressional voting, has shown that this method tends to outperform the

base classification methods [63].

An algorithm of constrained cascade generalization is available in [63] and included here for the sake of convenience.

Note that because entity matching is a binary classification problem, the parameter corresponding to the class space in

the original algorithm is not necessary any more and is removed in the following modified algorithm.

Build_Generalized_Tree(N, x, S, d, dcascade, W, C)

  N: A node in the generalized decision tree to be learned. N is the root node when the procedure is initially invoked. An intermediate node may have an indefinite number q of children, which are denoted N.Child[i] (i = 1, 2, ..., q).
  x: A vector of input features.
  S: A training sample, i.e., a set of n pre-classified problem instances.
  d: The depth of N in the generalized decision tree. d = 1 when the procedure is initially invoked.
  dcascade: The maximum cascading depth. dcascade is a parameter of the algorithm used to constrain the degree of cascading.
  W: A base decision tree inducer, e.g., C4.5.
  C: A set of discriminant function inducers, e.g., {logistic regression}, which takes x and S as input and returns a set of discriminant functions, which can be used as additional features for W.

Begin
  x′ := the empty set.
  If d ≤ dcascade,
    x′ := C(x, S).
    x := x ∪ x′.
    S := S extended with the constructed features in x′.
  Select a splitting test using the goodness measure of W, by which S is split into q subsets Si (i = 1, 2, ..., q).
  If q = 1,
    Mark N as a leaf node, with the majority class in S as the predicted class.
  Else
    For i = 1 to q,
      Generate the ith child of N, N.Child[i].
      Build_Generalized_Tree(N.Child[i], x − x′, Si, d + 1, dcascade, W, C).
End.
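The procedure can be sketched in runnable Python as follows. This is a simplified illustration under several assumptions, not the implementation evaluated in this paper: a Gini-based binary threshold split stands in for the goodness measure of W (C4.5), a mean-difference linear discriminant stands in for the inducers in C (logistic regression), and the recursive call is read as passing the children the feature set without the locally constructed features. All function names and the toy data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Stand-in for W: best univariate threshold test by weighted Gini.

    Returns (feature_index, threshold), or None if no test reduces impurity.
    """
    best, best_score = None, gini(labels)
    for j in range(len(rows[0])):
        values = sorted({r[j] for r in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[j] <= t]
            right = [y for r, y in zip(rows, labels) if r[j] > t]
            score = (len(left) * gini(left)
                     + len(right) * gini(right)) / len(labels)
            if score < best_score - 1e-12:
                best, best_score = (j, t), score
    return best


def mean_difference_inducer(rows, labels):
    """Stand-in for C: linear discriminant with weights equal to the
    difference between the two class mean vectors."""
    m = len(rows[0])
    pos = [r for r, y in zip(rows, labels) if y == 1]
    neg = [r for r, y in zip(rows, labels) if y == 0]
    if not pos or not neg:
        return lambda row: 0.0
    w = [sum(r[j] for r in pos) / len(pos) - sum(r[j] for r in neg) / len(neg)
         for j in range(m)]
    return lambda row: sum(wj * xj for wj, xj in zip(w, row))


def build_generalized_tree(rows, labels, d, d_cascade, inducers):
    """Recursive sketch of Build_Generalized_Tree; returns nested dicts."""
    funcs = []
    if d <= d_cascade:  # cascade only down to the maximum cascading depth
        funcs = [induce(rows, labels) for induce in inducers]
    extended = [r + [g(r) for g in funcs] for r in rows]  # x := x U x'
    split = best_split(extended, labels)
    if split is None:  # q = 1: no useful split, so this node is a leaf
        return {"leaf": Counter(labels).most_common(1)[0][0]}
    j, t = split
    children = []
    for side in (True, False):
        idx = [i for i, e in enumerate(extended) if (e[j] <= t) == side]
        # Assumption: children receive the original features only (x - x').
        children.append(build_generalized_tree(
            [rows[i] for i in idx], [labels[i] for i in idx],
            d + 1, d_cascade, inducers))
    return {"funcs": funcs, "feature": j, "threshold": t, "children": children}


def predict(node, row):
    """Route a feature vector down the tree, rebuilding local features."""
    while "leaf" not in node:
        e = row + [g(row) for g in node["funcs"]]
        branch = 0 if e[node["feature"]] <= node["threshold"] else 1
        node = node["children"][branch]
    return node["leaf"]


# Toy data with a diagonal class boundary (hypothetical, not from the study).
X = [[0.1, 0.2], [0.4, 0.3], [0.2, 0.7], [0.7, 0.1],
     [0.9, 0.4], [0.3, 0.9], [0.8, 0.8], [0.6, 0.6]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
tree = build_generalized_tree(X, y, 1, 1, [mean_difference_inducer])
print([predict(tree, r) for r in X])
```

On this toy sample neither original feature separates the classes with a single threshold, whereas the constructed discriminant feature does, which is the representational gain that cascading is meant to provide. Setting `d_cascade` to 0 disables cascading and yields a plain univariate tree.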

The algorithm can be used to combine any discriminant function learners (i.e., the C) with any decision tree algorithms

(i.e., the W) to any tree depth (i.e., the dcascade). Loose coupling and tight coupling [20] are strictly special cases of this new

algorithm when dcascade = 1 and dcascade = the total height of the tree, respectively. The larger the dcascade value, the lower the

bias, but at the same time, the higher the variance. The overall performance may fluctuate as dcascade increases. An appropriate value for this parameter should be empirically determined for a given classification problem. A simple procedure is to

build and evaluate all generalized decision trees under different dcascade values and select the best tree. Empirical evaluation

in general classification problems has shown that this new method can find a more appropriate degree of cascading and

reach better classification accuracy than previous cascading methods [63]. In this paper, we apply this method in learning

entity matching rules and evaluate its utility using real-world heterogeneous databases.
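The simple selection procedure mentioned above (build one tree per dcascade value, evaluate each, keep the best) can be sketched as a small loop. The sketch assumes that a held-out validation sample is used for evaluation, which is one possible choice rather than a description of the procedure used in this study. The `build` and `accuracy` callables are hypothetical placeholders for a tree inducer and an evaluation routine, and the scores in the toy demonstration are made-up numbers, not results.

```python
def select_cascade_depth(build, accuracy, train, validation, max_depth):
    """Build one tree per d_cascade in 0..max_depth and keep the best.

    Ties are resolved in favor of the smaller d_cascade, i.e., the
    simpler model (an assumption made for this sketch).
    """
    best = None
    for d_cascade in range(max_depth + 1):
        tree = build(train, d_cascade)
        score = accuracy(tree, validation)
        if best is None or score > best[0]:
            best = (score, d_cascade, tree)
    return best


# Toy stand-ins: the "tree" is just its depth, and the accuracies are
# made-up numbers that fluctuate as d_cascade increases.
TOY_SCORES = {0: 0.90, 1: 0.94, 2: 0.93, 3: 0.94}


def toy_build(train, d_cascade):
    return d_cascade


def toy_accuracy(tree, validation):
    return TOY_SCORES[tree]


score, depth, chosen = select_cascade_depth(toy_build, toy_accuracy, None, None, 3)
print(depth, score)
```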

Zhao and Sinha [65] proposed a more efficient algorithm that can learn an entire forest consisting of the same set of generalized decision trees under different dcascade values simultaneously without repeating any node that is common across

multiple trees and provided a complexity analysis of the algorithm. Let $f_W(n, m)$ and $f_C(n, m)$ denote the training times of W and C, respectively, where n and m are the number of training cases and the number of features, respectively. The training time of this efficient algorithm is not more than $(k - 1) f_C(n, m) + k f_W(n, m)$, where k is the number of trees in the forest and is usually not larger than the depth of the univariate tree learned by W alone.

4. Application

We have implemented the efficient algorithm [65] for constrained cascade generalization on top of logistic regression

[26] and C4.5 [42] (i.e., C = {logistic regression}, W = C4.5) available in the Weka machine learning toolkit [58] and applied

the method to three real-world entity matching applications. We will report on some empirical results in this section.

4.1. Data description

To evaluate the usefulness of the constrained cascade generalization method under different situations, we have collected

three sets of real-world heterogeneous data sources with different degrees of heterogeneity in their schemas and data. In the

first application, we match passengers in flight ticket reservations maintained by an Application Service Provider (ASP) for

the airline industry. In the second application, we compare the records about books extracted from the catalogs of two leading online bookstores. In the third application, we link records about property items stored in two legacy databases managed

by two independent departments, named Property Management and Surplus, of a large public university. Hereafter, we will

refer to the three applications as Airline, Bookstore, and Property.

The Airline case consists of one database containing airline passenger reservation records. The ASP serves over 20 national

and international airlines and maintains a separate database for each airline. All the databases have the same schema. Even

though the database schemas are homogeneous, identifying duplicate passengers and potential duplicate passenger reservations (PNRs) is still not trivial due to various types of data errors and discrepancies in the databases. Currently the ASP

only identifies duplicate PNRs within every individual airline. In the future the service may be extended to identify duplicate

PNRs across airlines. The information relevant to passenger matching includes: passenger name, frequent flyer number, PNR

confirmation information, address, phone numbers, and itinerary segments. There are 351,438 passenger records in one

database snapshot provided by the ASP.

In the Bookstore case, we have book data maintained by two online bookstores and need to determine which two records

stored in different catalogs are about the same book. We have extracted 737 and 722 records from the two catalogs, respectively. There are several semantically corresponding attributes across the two catalogs, including ISBN, authors, title, list

price, our price, cover type, edition, publication date, publisher, number of pages, average customer rating, and sales rank.

In the Property case, we have access to two heterogeneous databases, both about property items, and need to determine

which two records stored in different databases are about the same property item. These two databases have been developed

at different times by different people for different purposes. The database maintained by the Property Management Department is managed by IBM IDMS. The Surplus database is managed by FoxPro. The Property Management Department manages all property assets owned by the various departments of the university and keeps one record in its database for every

property item. When a department wants to dispose of an item, the item is delivered to the Surplus Office, where it is sold

to another department or a public customer. The Surplus Office keeps one record in its database for every disposed item.

There are 115 and 32 attributes in the Property Management and Surplus databases, respectively. Although the formats

of the two databases are very different, they share some semantically corresponding attributes, including tag number,

description, class description, purchasing account, payment account, acquisition date, acquisition cost, disposal date, disposal amount, owner type, model, serial number, and manufacturer. We took a snapshot of each database, containing

77,966 (Property Management) and 13,365 records (Surplus), respectively.

We evaluate the usefulness of the constrained cascade generalization method using the above-mentioned data sets. These

data sets have different degrees of heterogeneity in their schemas and data, allowing us to observe how the constrained cascade generalization method performs under different situations. In the Airline case, the schemas of the databases for different airlines are all identical. These databases can be easily merged using a simple union operation. The entity matching

problem reduces to detecting approximately duplicate records in a single database. The data stored in the databases are cleaner than the data in the other two cases. In the Bookstore case, the schemas of the two catalogs are different but contain very

similar attributes. Over 90% of the attributes in each catalog have corresponding ones in the other catalog. The data of the

two catalogs also significantly overlap with each other; there are many common books in the two catalogs. There are some

data discrepancies across the two catalogs. In the Property case, the two schemas are very different and overlap for only a

small portion (below 18%). The two databases contain some corresponding records. The data, especially in the Surplus database, are very dirty. Table 1 lists the percentage of mismatching values on each corresponding attribute pair across the two databases. Apart from owner type, even the best-matching attribute pair (purchasing account) has about 25% mismatching values. Owner type has only about 1% mismatching values but is not very useful in entity matching, as 99% of the property items have the same owner type, the university. The mismatching values on the other corresponding attribute pairs are due to various kinds of errors (incorrect, inaccurate, imprecise, out-of-date, or missing values) and discrepancies. In addition, there are some highly discriminative attributes in the Airline and Bookstore cases (i.e., passenger name in the Airline case and book title and authors in the Bookstore case), but not in the Property case. Based on these characteristics, we expect the Property case to be much harder than the other two cases for the entity matching task and the Airline case to be the easiest.

Table 1

Percentage of mismatching values on each corresponding attribute pair in the Property case

| Corresponding attribute pair | Mismatch (%) |
|---|---|
| Description | 89 |
| Class description | 92 |
| Manufacturer | 97 |
| Serial number | 55 |
| Model | 69 |
| Acquisition date | 29 |
| Acquisition cost | 34 |
| Purchasing account | 25 |
| Payment account | 92 |
| Owner type | 1 |
| Disposal date | 89 |
| Disposal amount | 94 |

4.2. Preparation of training samples

In all three cases, training examples can be easily generated as there is a (partial) common key attribute in each case. In

the Bookstore case, ISBN is a common key for book records. In the Airline case, frequent flyer program and frequent flyer

number can be used as a partial key, for passengers who use their frequent flyer numbers in reservations. Two records having

identical frequent flyer numbers for the same frequent flyer program are very likely to be about the same passenger, while

two records having different frequent flyer numbers for the same frequent flyer program are very likely to be about different passengers. In the Property case, the two databases use the same tag number for each property item. It is therefore

easy to determine corresponding records in these cases. There is no need to train classifiers to match records in these cases

(except the Airline case where not every passenger uses a frequent flyer number). We intentionally chose these cases to evaluate various classification techniques in this study. We trained classifiers based on other attributes while withholding the

common keys. In other entity matching problems, when there is no common key, domain experts need to manually match

some records before training classifiers. In cases where some records contain a common key (such as in the Airline case),

these records can be used for training and the resulting classifier can then be used to match other records that do not have

the key.
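The labeling step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout, the field names (`isbn`, `title`), and the helper `label_pairs_by_key` are hypothetical and do not come from the implementation used in this study.

```python
from itertools import product


def label_pairs_by_key(source_a, source_b, key):
    """Label every cross-source record pair by equality of a shared key.

    The key is used only to derive the class label; it is removed from the
    records handed to the learner, mirroring the withholding step above.
    """
    labeled = []
    for rec_a, rec_b in product(source_a, source_b):
        is_match = rec_a[key] == rec_b[key]
        feats_a = {k: v for k, v in rec_a.items() if k != key}
        feats_b = {k: v for k, v in rec_b.items() if k != key}
        labeled.append((feats_a, feats_b, is_match))
    return labeled


# Hypothetical toy catalogs; field names are illustrative only.
catalog_a = [{"isbn": "111", "title": "Data Mining"},
             {"isbn": "222", "title": "Databases"}]
catalog_b = [{"isbn": "111", "title": "Data mining"},
             {"isbn": "333", "title": "Statistics"}]

pairs = label_pairs_by_key(catalog_a, catalog_b, "isbn")
```

With a partial key, as in the Airline case, records lacking the key would have to be filtered out before calling such a helper, since a missing key says nothing about whether two records match.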

Typically the two types of record pairs are extremely unbalanced in entity matching applications. There are usually many

more non-matching record pairs than matching ones, unless there are many duplicate records in each of the databases under

comparison. A representative sample of all record pairs would be a very sparse one. A trivial classifier that always predicts a

record pair as non-matching would achieve extremely high accuracy on such a sample but is useless. While there are many

different ways to deal with such extremely unbalanced data, Kubat and Matwin [29] proposed and argued for a simple one-sided sampling method: keep all examples of the rare class and take a sample of the majority class, to get a more balanced

training set. Some learning algorithms, such as decision tree induction, are sensitive to an imbalanced distribution of examples and may suffer from serious overfitting, which cannot be addressed by pruning. This problem can be mitigated by balancing the training examples. The minority-class examples are too rare to be wasted, even under the danger that some of

them are noisy. Thus, it is recommended that all minority-class examples be kept and only majority-class examples be

pruned out. Kubat and Matwin [29] experimented on four largely unbalanced datasets and found that one-sided sampling

helped to improve the performance of nearest neighbor and C4.5 decision tree induction.

We essentially adopted the one-sided sampling method [29] and used a balanced sample for each of the three cases in our

evaluation. In the Airline case, we randomly selected 5000 matching record pairs and 5000 non-matching record pairs,

resulting in a sample size of 10,000. In the Bookstore case, we used all of the 702 available matching record pairs and randomly selected an equal number of non-matching record pairs, resulting in a sample size of 1404. In the Property case, we

used all of the 3124 available matching record pairs and randomly selected an equal number of non-matching record pairs,

resulting in a sample size of 6248. The characteristics of the three training samples are summarized in Table 2.
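A minimal sketch of the balancing step, as described in the text (keep every minority-class example and draw an equally sized random sample of the majority class), might look as follows. The function name `one_sided_sample`, its parameters, and the toy data are assumptions made for illustration; they are not taken from the original experiments.

```python
import random


def one_sided_sample(examples, labels, minority_label, seed=0):
    """Keep all minority-class examples and an equal-sized random
    sample of the majority class, then shuffle the result."""
    rng = random.Random(seed)
    minority = [(x, y) for x, y in zip(examples, labels) if y == minority_label]
    majority = [(x, y) for x, y in zip(examples, labels) if y != minority_label]
    # Never ask for more majority examples than are available.
    kept_majority = rng.sample(majority, min(len(minority), len(majority)))
    sample = minority + kept_majority
    rng.shuffle(sample)
    return sample


# Hypothetical toy data: 30 matching pairs (label 1) among 1000 record pairs.
examples = list(range(1000))
labels = [1 if i < 30 else 0 for i in examples]
balanced = one_sided_sample(examples, labels, minority_label=1)
```

Fixing the seed makes the sample reproducible, which matters when the same training set has to be reused across several learners.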

We designed multiple attribute-value matching functions for each semantically corresponding attribute pair and selected the one that is most highly correlated with the dependent variable (i.e., whether two records match or not). We also tried to combine multiple attribute-value matching functions. As a result, we selected 13, 16, and 12 (base or composite) attribute-value matching functions as input features for the three cases, i.e., Bookstore, Airline, and Property, respectively. The types of these matching functions included equality comparison (e.g., for edition, publication month, and publication year in Bookstore), edit distance (e.g., for title in Bookstore), Soundex (e.g., for first name and last name in Airline), normalized distance (e.g., for list price, our price, and number of pages in Bookstore), special dictionary lookup (e.g., for owner type in Property), and combinations of multiple matching functions. Table 3 summarizes these attribute-value matching functions.

Table 2

Characteristics of the training samples for three entity matching applications

| No. | Name | #Classes | #Instances | #Features (nominal) | #Features (numeric) | #Features (total) |
|---|---|---|---|---|---|---|
| 1 | Airline | 2 | 10,000 | 12 | 4 | 16 |
| 2 | Bookstore | 2 | 1,404 | 5 | 8 | 13 |
| 3 | Property | 2 | 6,248 | 4 | 8 | 12 |

Table 3

Attribute-value matching functions for three entity matching applications

| Case | Attribute | Attribute-value matching function | Range |
|---|---|---|---|
| Airline | First name | Soundex + substring + edit distance | [0, 1] |
| Airline | Last name | Soundex + substring + edit distance | [0, 1] |
| Airline | Confirmation fax | Equality | {0, 1} |
| Airline | Confirmation email | Equality | {0, 1} |
| Airline | Confirmation address | Edit distance | [0, 1] |
| Airline | Address | Edit distance | [0, 1] |
| Airline | City | Equality | {0, 1} |
| Airline | State | Equality | {0, 1} |
| Airline | Postal code | Substring | {0, 1} |
| Airline | Boarding point | Equality | {0, 1} |
| Airline | Off point | Equality | {0, 1} |
| Airline | Home phone number | Equality | {0, 1} |
| Airline | Business phone number | Equality | {0, 1} |
| Airline | Agency phone number | Equality | {0, 1} |
| Airline | Cell phone number | Equality | {0, 1} |
| Airline | Fax number | Equality | {0, 1} |
| Bookstore | Author | Edit distance | [0, 1] |
| Bookstore | Title | Edit distance | [0, 1] |
| Bookstore | List price | Normalized distance | [0, 1] |
| Bookstore | Our price | Normalized distance | [0, 1] |
| Bookstore | Cover type | Equality + dictionary | {0, 1} |
| Bookstore | Number of pages | Normalized distance | [0, 1] |
| Bookstore | Edition | Equality | {0, 1} |
| Bookstore | Publication month | Equality | {0, 1} |
| Bookstore | Publication year | Equality | {0, 1} |
| Bookstore | Publication date | Normalized distance | [0, 1] |
| Bookstore | Publisher | Equality + dictionary | {0, 1} |
| Bookstore | Sales rank | Normalized distance | [0, 1] |
| Bookstore | Average customer rating | Normalized distance | [0, 1] |
| Property | Description | First 5 characters + dictionary | [0, 1] |
| Property | Class description | First 5 characters + dictionary | [0, 1] |
| Property | Manufacturer | Equality + dictionary | [0, 1] |
| Property | Serial number | Substring + edit distance | {0, 1} |
| Property | Model | Substring + edit distance | {0, 1} |
| Property | Acquisition date | Normalized distance | {0, 1} |
| Property | Acquisition cost | Equality (round) | [0, 1] |
| Property | Purchasing account | Equality | [0, 1] |
| Property | Payment account | Equality | [0, 1] |
| Property | Owner type | Dictionary | [0, 1] |
| Property | Disposal date | Normalized distance | {0, 1} |
| Property | Disposal amount | Equality (round) | [0, 1] |
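To make the kinds of matching functions named above more concrete, the following Python sketch implements three of them: an edit-distance similarity, the standard American Soundex code, and a normalized numeric similarity. The exact normalizations used in this study are not specified in the text, so the scaling choices below (dividing by the longer string length or by the larger magnitude) are assumptions, as are all function names.

```python
def edit_distance(a, b):
    """Levenshtein distance computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def edit_similarity(a, b):
    """Map edit distance to [0, 1]; 1 means identical (assumed scaling)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - edit_distance(a, b) / longest


def soundex(name):
    """Four-character American Soundex code of a name."""
    codes = {}
    for group, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                         ("L", "4"), ("MN", "5"), ("R", "6")):
        for ch in group:
            codes[ch] = digit
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0]
    prev = codes.get(letters[0], "")
    for ch in letters[1:]:
        code = codes.get(ch, "")
        if code and code != prev:
            result += code
        if ch not in "HW":  # H and W do not separate equal codes
            prev = code
    return (result + "000")[:4]


def numeric_similarity(x, y):
    """Normalized closeness of two numbers in [0, 1] (assumed scaling)."""
    largest = max(abs(x), abs(y))
    if largest == 0:
        return 1.0
    return max(0.0, 1.0 - abs(x - y) / largest)


title_score = edit_similarity("Data Mining", "Data mining")
same_sound = soundex("Robert") == soundex("Rupert")
price_score = numeric_similarity(20.0, 25.0)
```

A composite function such as "Soundex + substring + edit distance" could then combine these scores, for example by taking the maximum; how the original study combined them is not stated, so that choice would also be an assumption.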

4.3. Empirical results

We ran logistic regression, C4.5, and constrained cascade generalization (specifically, cascading logistic regression with C4.5) for the three entity matching applications. We used classification accuracy as the performance measure. In unbalanced, cost-asymmetric binary classification problems, a trade-off between two separate measures (e.g., false positive error rate and false negative error rate, sensitivity and specificity, precision and recall) needs to be made [58]. When the costs of the two types of misclassification errors are known, the two separate measures can be combined into a convenient overall performance measure, e.g., expected misclassification cost. Accuracy is a special case of expected misclassification cost, when the weights of the two classes are considered equal. Since we cannot assign a uniform weight ratio across the three applications, we decided to use accuracy as the performance measure in our evaluation.

Table 4

Descriptive statistics about the classification accuracy (%) for three entity matching applications

| | Cascading (Mean) | Cascading (StdDev) | Logistic (Mean) | Logistic (StdDev) | C4.5 (Mean) | C4.5 (StdDev) |
|---|---|---|---|---|---|---|
| Airline | 99.658 | 0.018 | 99.602 | 0.011 | 99.644 | 0.027 |
| Bookstore | 99.043 | 0.092 | 99.036 | 0.121 | 98.938 | 0.154 |
| Property | 97.215 | 0.093 | 97.017 | 0.028 | 97.118 | 0.081 |

We used 10-fold stratified cross-validation, a frequently recommended estimation method [28,58], to estimate the true

predictive accuracy of each learned classifier. All performance measures reported subsequently will be (10-fold) cross-validated accuracy. Cross-validation randomly divides a training data set into n (stratified) subsets, called folds, and repeatedly

selects each fold for testing while others are used for training. The average testing accuracy over the n runs is used as an

overall estimate of the performance of the learned classifier.
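The procedure just described can be sketched in plain Python. This is an illustrative sketch, not the Weka routine used in the experiments: the helpers `stratified_folds` and `cross_validated_accuracy`, the callback signature, and the majority-class baseline learner are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(labels, n_folds=10, seed=0):
    """Split example indices into folds that preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin into the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


def cross_validated_accuracy(train_and_predict, features, labels,
                             n_folds=10, seed=0):
    """Average test accuracy over the folds, each used once for testing."""
    accuracies = []
    for test_idx in stratified_folds(labels, n_folds, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        predictions = train_and_predict(
            [features[i] for i in train_idx],
            [labels[i] for i in train_idx],
            [features[i] for i in test_idx])
        correct = sum(p == labels[i] for p, i in zip(predictions, test_idx))
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / n_folds


def majority_baseline(train_x, train_y, test_x):
    """Trivial learner: always predict the most frequent training class."""
    most_common = Counter(train_y).most_common(1)[0][0]
    return [most_common] * len(test_x)


# Hypothetical balanced toy sample: 50 matches and 50 non-matches.
features = list(range(100))
labels = [0] * 50 + [1] * 50
folds = stratified_folds(labels)
baseline_accuracy = cross_validated_accuracy(majority_baseline, features, labels)
```

On a balanced sample the trivial baseline scores 50%, which is one reason balanced samples make accuracy easier to interpret than it would be on the raw, highly skewed pair population.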

To statistically compare the performance of the three classification methods, we needed to perform cross-validation multiple times for each method on each case. The required sample size was determined as follows. Using a three-level (corresponding with the three classification methods) ANOVA, aiming at detecting a relatively large effect size in Cohen’s

recommended convention (f = 0.4),¹ setting the power at 0.8 and the statistical significance level at 0.05, we needed 21 examples for each of the three levels (i.e., a total sample size of 63) [10]. We therefore performed 10-fold stratified cross-validation 21

times for each method on each case.

Table 4 summarizes some descriptive statistics of the performance of the three methods in the three applications. Table 5

summarizes the ANOVA results comparing the performance of the three methods for the three applications. Table 6 summarizes some post hoc test results. For the Airline case, a statistically significant difference (p < 0.001) among the three methods

is detected, with a large effect size (f = 1.239). Constrained cascade generalization statistically significantly outperforms logistic regression (p < 0.001). For the Bookstore case, a statistically significant difference (p < 0.05) among the three methods

is detected, with a medium effect size (f = 0.393). Constrained cascade generalization statistically significantly outperforms

C4.5 (p < 0.05). For the Property case, a statistically significant difference (p < 0.001) among the three methods is detected,

with a large effect size (f = 1.137). Constrained cascade generalization statistically significantly outperforms both logistic

regression (p < 0.001) and C4.5 (p < 0.001).
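The reported effect sizes can be related to the F statistics through the standard identity f = sqrt(F × df_between / df_within), which follows from f² = η² / (1 − η²). The sketch below assumes df_between = 2 and df_within = 60 (three methods with 21 cross-validation runs each); these degrees of freedom are an assumption, chosen because they reproduce the f values quoted above from the F values in Table 5. The function name is hypothetical.

```python
import math


def cohens_f_from_anova(f_statistic, df_between, df_within):
    """Cohen's f from a one-way ANOVA F statistic.

    Uses f^2 = eta^2 / (1 - eta^2) = F * df_between / df_within.
    """
    return math.sqrt(f_statistic * df_between / df_within)


# F statistics as reported in Table 5.
reported_f_statistics = {"Airline": 46.078, "Bookstore": 4.629, "Property": 38.787}

# Assumed degrees of freedom: 3 methods -> 2; 3 * 21 - 3 -> 60.
effect_sizes = {
    case: round(cohens_f_from_anova(f_stat, 2, 60), 3)
    for case, f_stat in reported_f_statistics.items()
}
# effect_sizes == {'Airline': 1.239, 'Bookstore': 0.393, 'Property': 1.137}
```

Against Cohen's convention, 1.239 and 1.137 are well above the 0.4 threshold for a large effect, while 0.393 falls just short of it.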

The base methods already achieve classification accuracy of about 99% or higher in the Airline and Bookstore cases, leaving little space for further improvement. In the Airline case, constrained cascade generalization improves the accuracy of logistic regression by 0.056 percentage points. In the Bookstore case, constrained cascade generalization improves the accuracy of C4.5 by 0.105 percentage points. However, the real significance of constrained cascade generalization comes into play when dealing with “dirty data”. The performance improvement is larger on the dirty, hard case (i.e., Property) than on the other two relatively clean, easy cases. In the Property case, constrained cascade generalization improves the accuracy of logistic regression by 0.198 percentage points and that of C4.5 by 0.098 percentage points. As real-world data are often very dirty [25,49,52], the cascading method may be more productive in performance improvement when matching even dirtier data sources. In addition, an entity matching application typically requires a large number of record pairs to be classified (for two databases with m and n records, respectively, m × n record pairs need to be classified), so even a small improvement in the classification accuracy may be considered practically useful or even significant.

While we have demonstrated that the constrained cascade generalization method has merit in potentially improving

performance over the base methods and certainly deserves serious consideration, we do not suggest that it be preferred over

the base methods in every entity matching application, as there may be multiple factors (performance, comprehensibility,

efficiency, etc.) that should be considered in making the choice. Multiple promising methods should be evaluated before

the choice is made.

First, although our empirical evaluation shows that constrained cascade generalization tends to outperform the base classification methods in the three selected applications and never degrades the performance of the base methods, we cannot conclude that constrained cascade generalization will universally outperform or at least match the performance of the base classification methods in all entity matching applications. Indeed, the “no-free-lunch theorems” [60] have proven that there is never a panacea; no method can be universally superior to others in all applications. In practice, for a given new classification problem, various methods need to be empirically evaluated to find the best ones, with previous empirical results as references. Constrained cascade generalization is no exception.

¹ The f index is an indicator of the effect size of the F test on means in ANOVA. It is defined as the ratio between the standard deviation of the population means and the standard deviation of the individuals in the combined population. Cohen [10] recommended a convention: f = 0.1, 0.25, and 0.4 for small, medium, and large effect sizes, respectively.

Table 5

ANOVA for three entity matching applications

| | F(2, 62) | Sig. | Cohen’s f |
|---|---|---|---|
| Airline | 46.078 | 0.000 | 1.239 |
| Bookstore | 4.629 | 0.014 | 0.393 |
| Property | 38.787 | 0.000 | 1.137 |

Table 6

Some post hoc tests (p values) for three entity matching applications

| | Cascading to logistic (Tukey) | Cascading to logistic (Scheffé) | Cascading to logistic (Sidak) | Cascading to C4.5 (Tukey) | Cascading to C4.5 (Scheffé) | Cascading to C4.5 (Sidak) |
|---|---|---|---|---|---|---|
| Airline | 0.000 | 0.000 | 0.000 | 0.066 | 0.082 | 0.076 |
| Bookstore | 0.983 | 0.984 | 0.997 | 0.023 | 0.031 | 0.025 |
| Property | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

Table 7

Training times (in seconds) of different algorithms for three entity matching applications

| | Cascading | Logistic | C4.5 |
|---|---|---|---|
| Airline | 44.84 | 12.19 | 0.97 |
| Bookstore | 4.05 | 0.78 | 0.06 |
| Property | 27.33 | 3.06 | 0.41 |

Second, comprehensibility of the final model may be another important criterion, besides performance, especially in

applications where human analysts are assigned to manually review predicted matching record pairs. Comprehensible

explanations of the predictions made by the model may be very useful for the analysts. Cascade generalization may somewhat increase the complexity in interpreting the final model.

a

b

> 0.435

≤ 0.435

≤ 1.057

> 1.057

≤ 0.785

c

> 0.785

d

≤ 1.096

≤ 0.345

> 0.235

> 0.370 ≤ 0.235

≤ 0.370

≤ 1.096

> 1.096

> 0.345

≤ 0.841

> 1.096

> 0.841

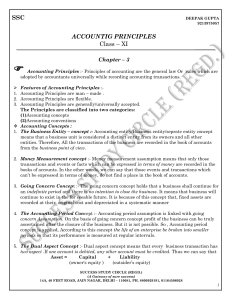

Fig. 1. Classification models learned by different classification methods for the bookstore application using two features. (a) Logistic regression, (b) C4.5, (c)

cascade generalization, dcascade = 1 and (d) cascade generalization, dcascade = 2.

Efficiency may be another criterion. This may be a less critical factor, as long as a method can finish training in an acceptable

time period, as training typically only needs to be conducted once or once in a while. The training time needed by the efficient

algorithm for constrained cascade generalization [65] is at most k times that needed by the base algorithms, where k is the

number of generalized trees and can be estimated with the depth of the univariate tree. The base algorithms can be used first

to assess whether it is feasible to use constrained cascade generalization in a particular application. Table 7 lists the training

times of different algorithms in the experiment, which was run on a Dell Optiplex/GX620 workstation configured with a

3 GHz Pentium D CPU and 1 GB of RAM, running the Windows XP operating system with Service Pack 2.

4.4. Visualizing the effects of constrained cascade generalization

The effects of the constrained cascade generalization method can be visualized in a two-dimensional feature space. For

this purpose, we ran the base and composite classification methods for the Bookstore application using just two input features (i.e., attribute-value matching functions), Author and Title. Fig. 1 shows the learned classifiers. Fig. 2 shows the decision

boundaries in the two-dimensional feature space of the learned classifiers.

Fig. 2. Decision boundaries generated by different classification methods for the bookstore application using two features.

Logistic regression (Fig. 2a) separates the two classes (i.e., match and non-match) using a linear boundary. The slope and intercept of the discriminant line are chosen such that apparent accuracy is maximized. The decision boundary generated by C4.5 (Fig. 2b) consists of a sequence of axis-parallel line segments. The directions of these line segments are not flexible. When logistic regression and C4.5 are cascaded to some degree (Fig. 2c and d), some of the line segments are not restricted to be axis-parallel any more and can be oblique. These flexible oblique line segments are learned by logistic regression.
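Why cascading yields oblique segments can be illustrated with a small Python sketch. It assumes, as in cascade generalization in general, that the logistic model's predicted match probability is appended to each example as an extra feature; a univariate tree test on that feature is then equivalent to a linear (oblique) test on the original two features. The coefficients, threshold, and function names below are hypothetical and are not the values learned in the Bookstore experiment.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def extend_with_logistic_output(point, weights, bias):
    """Append the logistic model's match probability as a new feature."""
    z = bias + sum(w * x for w, x in zip(weights, point))
    return list(point) + [sigmoid(z)]


def univariate_split(extended_point, feature_index, threshold):
    """An ordinary axis-parallel tree test on a single feature."""
    return extended_point[feature_index] > threshold


# Hypothetical logistic coefficients for the (Author, Title) features.
weights, bias = [4.0, 6.0], -5.0
threshold = 0.5


def oblique_rule(point):
    """The same test written in the original feature space:
    p > t  is equivalent to  w . x + b > log(t / (1 - t))."""
    z = bias + sum(w * x for w, x in zip(weights, point))
    return z > math.log(threshold / (1.0 - threshold))


grid = [(a / 4, b / 4) for a in range(5) for b in range(5)]
tree_side = [univariate_split(extend_with_logistic_output(p, weights, bias), 2, threshold)
             for p in grid]
line_side = [oblique_rule(p) for p in grid]
```

Both lists agree on every grid point: the tree still tests one feature at a time, but because that feature is itself a linear function of Author and Title, the induced boundary is a slanted line rather than an axis-parallel one.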

The parameter dcascade effectively controls how many such oblique line segments are allowed and therefore how flexible

the decision boundary can be. As dcascade increases, the decision boundary becomes more flexible and the training data can be

fitted better. However, the more flexible decision boundary may not fit unseen data better as well. The oblique line segments

learned by logistic regression are based on fewer and fewer unbalanced local training examples and become increasingly

unreliable. In this particular simple example, dcascade = 1 corresponds to loose coupling and dcascade = 2 corresponds to tight

coupling. In a more complex application with more input features, larger trees may be constructed. An appropriate dcascade

value needs to be determined so that the learned generalized decision tree fits the training data well to an extent where it

also generalizes well to unseen data.

5. Discussions on current study and future directions

The current study has several limitations that need to be addressed in future research. First, while we have compared the

constrained cascade generalization method with the underlying base classification methods, we have not compared it with

other existing entity matching methods yet. Second, as the extent of performance improvement by the constrained cascade

generalization method seems to be small, we cannot conclude on the (statistical and practical) significance of this improvement. Third, only one of the three cases used in the current empirical evaluation contains substantially dirty data. More comprehensive experiments, involving other state-of-the-art entity matching techniques and more real-world dirty

heterogeneous databases, are needed to understand how well different techniques actually perform in real situations.

There are several other research issues that can be further investigated. First, in our empirical evaluation, we used classification accuracy as the performance measure, as it is impossible to uniformly assign an arbitrary weight ratio across the

three applications. Note that classification accuracy is a special case of a more general performance measure, expected misclassification cost, when the weights of the two classes (i.e., match and non-match) are considered equal. In some real-world

domains where the weights of the two classes are perceived as different, the two types of training examples need to be

re-weighted according to the perceived weight ratio during training, and expected misclassification cost should be used as the

performance measure [58]. Future research can evaluate classification techniques using expected misclassification cost as

the performance measure under a series of possible weight ratios.
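A small Python sketch shows how such an evaluation could be organized. The confusion counts and the weight ratios below are hypothetical, and the function name is illustrative; with equal unit costs the measure reduces to the error rate, i.e., one minus accuracy.

```python
def expected_misclassification_cost(false_pos, false_neg, total,
                                    cost_fp=1.0, cost_fn=1.0):
    """Average cost per classified record pair."""
    return (cost_fp * false_pos + cost_fn * false_neg) / total


# Hypothetical confusion counts on a sample of 6248 record pairs.
false_pos, false_neg, total = 12, 30, 6248

# Equal weights: the cost is simply the error rate (1 - accuracy).
error_rate = expected_misclassification_cost(false_pos, false_neg, total)

# Sweep a series of assumed weight ratios (cost of a missed match
# relative to the cost of a false match).
costs_by_ratio = {
    ratio: expected_misclassification_cost(false_pos, false_neg, total,
                                           cost_fp=1.0, cost_fn=float(ratio))
    for ratio in (1, 2, 5, 10)
}
```

Comparing classifiers across the whole series, rather than at a single ratio, would show whether a ranking of methods is stable when the relative cost of the two error types changes.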

Second, there are other possible parameters, such as a statistical significance test of the learned model, that could be used to constrain cascading.

In addition, these parameters are related to decision tree pruning parameters. The effects of different parameters and the

interactions among them can be further analyzed and evaluated.

Third, multiple generalized decision trees with different dcascade values can be further combined via a “voting” mechanism. Such

an ensemble strategy can be analyzed and evaluated. Fourth, cascading decision tree learners with nonlinear model inducers,

such as Naive Bayes and artificial neural networks, can be investigated. Fifth, the accuracy of entity matching may be further

improved by a follow-up transitive closure inference [25,33] or an iterative learning procedure [2,44]. The technique evaluated in this paper is orthogonal to, and therefore can be easily combined with, these techniques.
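As an illustration of the transitive closure idea, the following Python sketch groups records into clusters from a list of pairwise match decisions using a union-find structure. It is a generic sketch rather than the procedure of [25,33]; the record identifiers and the function name are hypothetical.

```python
def transitive_closure(matched_pairs):
    """Group records into clusters implied by pairwise match decisions."""
    parent = {}

    def find(record):
        parent.setdefault(record, record)
        while parent[record] != record:
            parent[record] = parent[parent[record]]  # path halving
            record = parent[record]
        return record

    for a, b in matched_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    clusters = {}
    for record in list(parent):
        clusters.setdefault(find(record), set()).add(record)
    return sorted(sorted(cluster) for cluster in clusters.values())


# Hypothetical classifier output: a1~b1 and b1~a2 imply a1~a2.
predicted_matches = [("a1", "b1"), ("b1", "a2"), ("a3", "b3")]
clusters = transitive_closure(predicted_matches)
# clusters == [['a1', 'a2', 'b1'], ['a3', 'b3']]
```

Note that closure also propagates errors: a single false match can merge two otherwise separate clusters, so the accuracy of the pairwise classifier still matters.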

Finally, we have focused on improving the performance of entity matching and left out the efficiency issue. Obviously, a

simple pair-wise comparison of the records across data sources is too time-consuming, with time complexity O(MN), where

M and N are the numbers of records in the two data sources, and the number of instances to be classified is M × N. An

efficient algorithm, such as the sorted-neighborhood method [25], needs to be used when the learned entity matching rules

are applied on the entire data sources. When such a method is used, the data set on which to learn classifiers will be much

smaller and different, with many non-match instances filtered. This difference could improve the efficiency of the algorithm

and impact the selection of model parameters like dcascade.
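The candidate-generation idea behind the sorted-neighborhood method can be sketched as follows: sort all records by a key, slide a fixed-size window over the sorted list, and compare only records that fall within the same window. This is a simplified, single-pass sketch; the record layout, sorting key, window size, and function name are assumptions made for illustration.

```python
def sorted_neighborhood_pairs(records, sort_key, window=3):
    """Return candidate pairs of records that are at most window - 1
    positions apart after sorting by sort_key."""
    ordered = sorted(records, key=sort_key)
    pairs = []
    for i, record in enumerate(ordered):
        for other in ordered[i + 1:i + window]:
            pairs.append((record, other))
    return pairs


# Hypothetical records from two sources, tagged (source, name).
records = [("A", "smith john"), ("B", "smith jon"),
           ("A", "zhao h"), ("B", "zhao huimin"),
           ("A", "ram s"), ("B", "ram sudha")]

candidates = sorted_neighborhood_pairs(records, sort_key=lambda r: r[1], window=2)
# Keep only pairs that span the two sources.
cross_source = [(r, s) for r, s in candidates if r[0] != s[0]]
```

Here only 5 candidate pairs are classified instead of the 3 × 3 = 9 cross-source pairs; in general the number of comparisons grows roughly linearly with the number of records for a fixed window, at the price of missing matches whose keys sort far apart.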

A problem related to entity matching is schema matching, i.e., matching tables that represent the same real-world entity

type and attributes that represent the same property of some entity type, across heterogeneous data sources [61]. Indeed,

schema matching is a prerequisite for entity matching, as matching entities relies on comparing individual corresponding

attributes. There is a growing research interest in matching Web data sources, both their schemas and instances. For example, Wu et al. [61] proposed an approach to schema matching, identifying matching data elements across exposed query

interfaces (views) of data sources on the Web. The technique evaluated in this paper can then be used to match the instances

of such data sources. Techniques for solving the two problems can be incorporated into an iterative procedure, so that

correspondences on both the schema level and the instance level can be evaluated incrementally [62,66]. Such an iterative

procedure is worth further investigation.

6. Conclusion

Many organizations rely on a variety of distributed and heterogeneous information systems in their daily operations and

need to integrate the systems to make better use of the information scattered in different systems. Entity matching is a critical,

yet difficult and time-consuming, problem in integrating heterogeneous data sources and demands automated (or semi-automated) support. In this paper, we have described the application of a generic method for constrained cascade generalization of

decision tree inducers in entity matching. We have empirically evaluated the method with three entity matching problems.

Empirical results show that constrained cascade generalization tends to outperform or match base classification methods, such

as logistic regression and C4.5 decision tree learner. Larger performance improvement may be observed in dirtier situations.

This research has important practical implications for heterogeneous database integration applications. Constrained cascade generalization allows multiple base classification methods to be combined to an appropriate level to generate better

entity matching rules in such applications. Our empirical results show that this method outperforms the base classification

methods in terms of classification accuracy, especially in the dirtiest case.

Unfortunately, real-world data are often very dirty [25]. According to Strong et al. [49], “50% to 80% of computerized criminal

records in the US were found to be inaccurate, incomplete, or ambiguous.” “Poor-quality customer data costs US businesses some

$611 billion a year in postage, printing and staff overhead, according to the Seattle-based Data Warehousing Institute,” reported

by Trembly [52]. When dirty data are integrated from multiple sources, the quality of the resulting consolidated data may

further decrease (hence the saying “garbage in, garbage out”), consequently hampering the quality of decision support applications running on the integrated data. Data quality problems, such as duplicate records, due to numerous types of discrepancies across local data sources have been considered the biggest reason some data warehousing projects have failed [22].

The cascading method may be even more productive in performance improvement when matching dirty data sources in

real-world data integration applications. It can potentially produce better entity matching rules, which identify corresponding records across heterogeneous databases more accurately, and improve the data quality of the integrated databases, consequently enabling better decision support using the integrated databases and reducing the cost due to bad data.

References

[1] G.B. Bell, A. Sethi, Matching records in a national medical patient index, Communications of the ACM 44 (9) (2001) 83–88.

[2] I. Bhattacharya, L. Getoor, Iterative record linkage for cleaning and integration, in: Proceedings of the Ninth ACM SIGMOD Workshop on Research Issues

in Data Mining and Knowledge Discovery, 2004.

[3] M. Bilenko, R.J. Mooney, Adaptive duplicate detection using learnable string similarity measures, in: Proceedings of the Ninth ACM SIGKDD

International Conference on Knowledge Discovery and Data Mining, Washington DC, 2003, pp. 39–48.

[4] L. Breiman, Bagging predictors, Machine Learning 24 (2) (1996) 123–140.

[5] C.E. Brodley, P.E. Utgoff, Multivariate decision trees, Machine Learning 19 (1) (1995) 45–77.

[6] C.D. Budzinsky, Automated spelling correction, Unpublished Report, Statistics Canada, Ottawa, 1991.

[7] A. Chatterjee, A. Segev, Rule based joins in heterogeneous databases, Decision Support Systems 13 (3–4) (1995) 313–333.

[8] S. Chaudhuri, V. Ganti, R. Motwani, Robust identification of fuzzy duplicates, in: Proceedings of the International Conference on Data Engineering,

Tokyo, Japan, 2005, pp. 865–876.

[9] A.L.P. Chen, P.S.M. Tsai, J.L. Koh, Identifying object isomerism in multidatabase systems, Distributed and Parallel Databases 4 (2) (1996) 143–168.

[10] J. Cohen, Statistical Power Analysis for the Behavioral Sciences, revised ed., Academic Press, Orlando, FL, 1977.

[11] P.T. Davis, D.K. Elson, J.L. Klavans, Methods for precise named entity matching in digital collections, in: Proceedings of the 2003 Joint Conference on Digital Libraries, 2003, pp. 27–31.

[12] D. Dey, Record matching in data warehouses: a decision model for data consolidation, Operations Research 51 (2) (2003) 240–254.

[13] D. Dey, S. Sarkar, P. De, A probabilistic decision model for entity matching in heterogeneous databases, Management Science 44 (10) (1998) 1379–1395.

[14] D. Dey, S. Sarkar, P. De, A distance-based approach to entity reconciliation in heterogeneous databases, IEEE Transactions on Knowledge and Data Engineering 14 (3) (2002) 567–582.

[15] A. Doan, Y. Lu, Y. Lee, J. Han, Profile-based object matching for information integration, IEEE Intelligent Systems 18 (5) (2003) 54–59.

[16] P. Domingos, A unified bias-variance decomposition and its applications, in: Proceedings of the 17th International Conference on Machine Learning, 2000, pp. 231–238.

[17] M.E. Fair, Record linkage in an information age society, in: Proceedings of Record Linkage Techniques – 1997, 1997, pp. 427–441.

[18] I.P. Fellegi, A.B. Sunter, A theory of record linkage, Journal of the American Statistical Association 64 (328) (1969) 1183–1210.

[19] Y. Freund, R.E. Schapire, Experiments with a new boosting algorithm, in: Proceedings of the Thirteenth International Conference on Machine Learning, 1996, pp. 148–156.

[20] J. Gama, P. Brazdil, Cascade generalization, Machine Learning 41 (3) (2000) 315–343.

[21] M. Ganesh, J. Srivastava, T. Richardson, Mining entity-identification rules for database integration, in: Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD-96), 1996, pp. 291–294.

[22] K. Gilhooly, Dirty data blights the bottom line, Computerworld, November 07, 2005.

[23] I.J. Haimowitz, Ö. Gür-Ali, H. Schwarz, Integrating and mining distributed customer databases, in: Proceedings of the Third International Conference on Knowledge Discovery and Data Mining (KDD-97), 1997, pp. 179–182.

[24] D. Hand, H. Mannila, P. Smyth, Principles of Data Mining, MIT Press, Cambridge, MA, 2001.

[25] M.A. Hernández, S.J. Stolfo, Real-world data is dirty: data cleansing and the merge/purge problem, Data Mining and Knowledge Discovery 2 (1) (1998) 9–37.

[26] D.W. Hosmer, S. Lemeshow, Applied Logistic Regression, John Wiley & Sons Inc., 2000.

[27] W. Kim, J. Seo, Classifying schematic and data heterogeneity in multidatabase systems, IEEE Computer 24 (12) (1991) 12–18.

[28] R. Kohavi, A study of cross-validation and bootstrap for accuracy estimation and model selection, in: Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, 1995, pp. 1137–1143.

[29] M. Kubat, S. Matwin, Addressing the curse of imbalanced training sets: One-sided sampling, in: Proceedings of the Fourteenth International Conference on Machine Learning, 1997, pp. 179–186.

[30] W. Lam, R. Huang, P.-S. Cheung, Learning phonetic similarity for matching named entity translations and mining new translations, in: Proceedings of the Twenty-seventh Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, 2004, pp. 289–296.

[31] S. Luján-Mora, M. Palomar, Reducing inconsistency in integrating data from different sources, in: Proceedings of the 2001 International Database Engineering and Applications Symposium, IEEE Computer Society, Washington, DC, 2001, pp. 209–218.

[32] S.E. Madnick, Y.R. Wang, X. Xian, The design and implementation of a corporate householding knowledge processor to improve data quality, Journal of Management Information Systems 20 (3) (2003) 41–69.

[33] A. McCallum, B. Wellner, Conditional models of identity uncertainty with application to noun coreference, Advances in Neural Information Processing Systems 17 (2005) 905–912.

[34] E. Métais, Enhancing information systems management with natural language processing techniques, Data & Knowledge Engineering 41 (2–3) (2002) 247–272.

[35] A.E. Monge, C.P. Elkan, The field matching problem: algorithms and applications, in: Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, 1996, pp. 267–270.

[36] S.K. Murthy, Automatic construction of decision trees from data: a multi-disciplinary survey, Data Mining and Knowledge Discovery 2 (4) (1998) 345–389.

[37] H.B. Newcombe, Handbook of Record Linkage: Methods for Health and Statistical Studies, Administration, and Business, Oxford University Press, 1988.

[38] H.B. Newcombe, J.M. Kennedy, S.J. Axford, A.P. James, Automatic linkage of vital records, Science 130 (3381) (1959) 954–959.

[39] J.C. Pinheiro, D.X. Sun, Methods for linking and mining massive heterogeneous databases, in: Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining, 1998, pp. 309–313.

[40] C. Pluempitiwiriyawej, J. Hammer, Element matching across data-oriented XML sources using a multi-strategy clustering model, Data & Knowledge Engineering 48 (3) (2004) 297–333.

[41] J.R. Quinlan, Discovering rules by induction from large collections of examples, in: D. Michie (Ed.), Expert Systems in the Micro-electronic Age, Edinburgh University Press, Edinburgh, 1979, pp. 168–201.

[42] J.R. Quinlan, C4.5: Programs for Machine Learning, Morgan Kaufmann, 1993.

[43] H. Saggion, H. Cunningham, K. Bontcheva, D. Maynard, O. Hamza, Y. Wilks, Multimedia indexing through multi-source and multi-language information extraction: the MUMIS project, Data & Knowledge Engineering 48 (2) (2004) 247–264.

[44] S. Sarawagi, A. Bhamidipaty, Interactive deduplication using active learning, in: Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, Alberta, 2002.

[45] A. Segev, A. Chatterjee, A framework for object matching in federated databases and its implementation, International Journal of Cooperative Information Systems 5 (1) (1996) 73–99.

[46] L. Seligman, A. Rosenthal, P. Lehner, A. Smith, Data integration: where does the time go? IEEE Data Engineering Bulletin 25 (3) (2002) 3–10.

[47] A.D. Shapiro, Structured Induction in Expert Systems, Addison-Wesley, Wokingham, UK, 1987.

[48] G.A. Stephen, String Searching Algorithms, World Scientific Publishing Co. Pte. Ltd., River Edge, NJ, 1994.

[49] D.M. Strong, Y.W. Lee, R.W. Wang, Data quality in context, Communications of the ACM 40 (5) (1997) 103–110.

[50] S. Tejada, C.A. Knoblock, S. Minton, Learning object identification rules for information integration, Information Systems 26 (8) (2001) 607–633.

[51] V. Torvik, M. Weeber, D.R. Swanson, N.R. Smalheiser, A probabilistic similarity metric for Medline records: a model for author name disambiguation, Journal of the American Society for Information Science and Technology 56 (2) (2005) 140–158.

[52] A.C. Trembly, Poor data quality: A $600 billion issue, National Underwriter Property & Casualty – Risk & Benefits Management, March 18, 2002 Edition.

[53] P.E. Utgoff, Shift of bias for inductive concept learning, in: R. Michalski, J. Carbonell, T. Mitchell (Eds.), Machine Learning: An Artificial Intelligence Approach, Vol. II, Morgan Kaufmann, Los Altos, CA, 1986, pp. 107–148 (Chapter 5).

[54] V.S. Verykios, A.K. Elmagarmid, E.N. Houstis, Automating the approximate record-matching process, Information Sciences 126 (1–4) (2000) 83–98.

[55] S.M. Weiss, C.A. Kulikowski, Computer Systems That Learn: Classification and Prediction Methods from Statistics, Neural Nets, Machine Learning, and Expert Systems, Morgan Kaufmann, 1991.

[56] W.E. Winkler, Matching and record linkage, in: Proceedings of Record Linkage Techniques – 1997, 1997, pp. 374–403.

[57] W.E. Winkler, Record linkage software and methods for merging administrative lists, Exchange of Technology and Know-How, Luxembourg, 1999, pp. 313–323.

[58] I.H. Witten, E. Frank, Data Mining: Practical Machine Learning Tools and Techniques, second ed., Morgan Kaufmann, 2005.

[59] D.H. Wolpert, Stacked generalization, Neural Networks 5 (2) (1992) 241–259.

[60] D.H. Wolpert, The relationship between PAC, the statistical physics framework, the Bayesian framework, and the VC framework, in: Proceedings of the SFI/CNLS Workshop on Formal Approaches to Supervised Learning, Addison-Wesley, 1994, pp. 117–214.

[61] W. Wu, C. Yu, A. Doan, W. Meng, An interactive clustering-based approach to integrating source query interfaces on the deep Web, in: Proceedings of the 2004 ACM SIGMOD International Conference on Management of Data, 2004, pp. 95–106.

[62] H. Zhao, Semantic matching across heterogeneous data sources, Communications of the ACM 50 (1) (2007) 45–50.

[63] H. Zhao, S. Ram, Constrained cascade generalization of decision trees, IEEE Transactions on Knowledge and Data Engineering 16 (6) (2004) 727–739.

[64] H. Zhao, S. Ram, Entity identification for heterogeneous database integration – a multiple classifier system approach and empirical evaluation, Information Systems 30 (2) (2005) 119–132.

[65] H. Zhao, A.P. Sinha, An efficient algorithm for generating generalized decision forests, IEEE Transactions on Systems Man and Cybernetics Part A 35 (5) (2005) 754–762.

[66] H. Zhao, S. Ram, Combining schema and instance information for integrating heterogeneous data sources, Data & Knowledge Engineering 61 (2) (2007) 281–303.

Huimin Zhao is an Assistant Professor of Management Information Systems at the Sheldon B. Lubar School of Business, University of Wisconsin-Milwaukee. He earned his Ph.D. in Management Information Systems from the University of Arizona, USA, and B.E. and M.E. in Automation from Tsinghua University, China. His current research interests are in the areas of data integration, data mining, and medical informatics. His research has been published in several journals, including Communications of the ACM, Data and Knowledge Engineering, IEEE Transactions on Knowledge and Data Engineering, IEEE Transactions on Systems, Man, and Cybernetics, Information Systems, Journal of Database Management, Knowledge and Information Systems, and Journal of Management Information Systems. He is a member of IEEE, AIS, IRMA and INFORMS Information Systems Society.

Sudha Ram is McClelland Professor of Management Information Systems in the Eller College of Management at the University of Arizona. She received her MBA from the Indian Institute of Management, Calcutta in 1981 and a Ph.D. from the University of Illinois at Urbana-Champaign in 1985. Dr. Ram has published articles in such journals as Communications of the ACM, IEEE Transactions on Knowledge and Data Engineering, Information Systems, Information Systems Research, Management Science, and MIS Quarterly. Dr. Ram's research deals with issues related to Enterprise Data Management. Her research has been funded by organizations such as SAP, IBM, Intel Corporation, Raytheon, US Army, NIST, NSF, NASA, and the Office of Research and Development of the CIA. Specifically, her research deals with Interoperability among Heterogeneous Database Systems, Semantic Modeling, and Bioinformatics and Spatio-Temporal Semantics. Dr. Ram serves as a senior editor for Information Systems Research. She also serves on the editorial boards of such journals as Decision Support Systems, Information Systems Frontiers, and Journal of Information Technology and Management, and as associate editor for Journal of Database Management and the Journal of Systems and Software. She has chaired several workshops and conferences supported by ACM, IEEE, and AIS. She is a cofounder of the