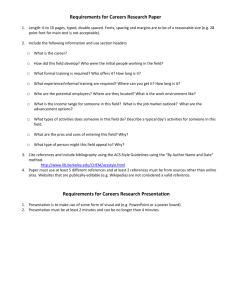

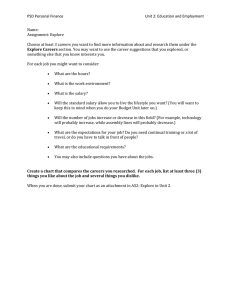

Stop and Measure the Roses
