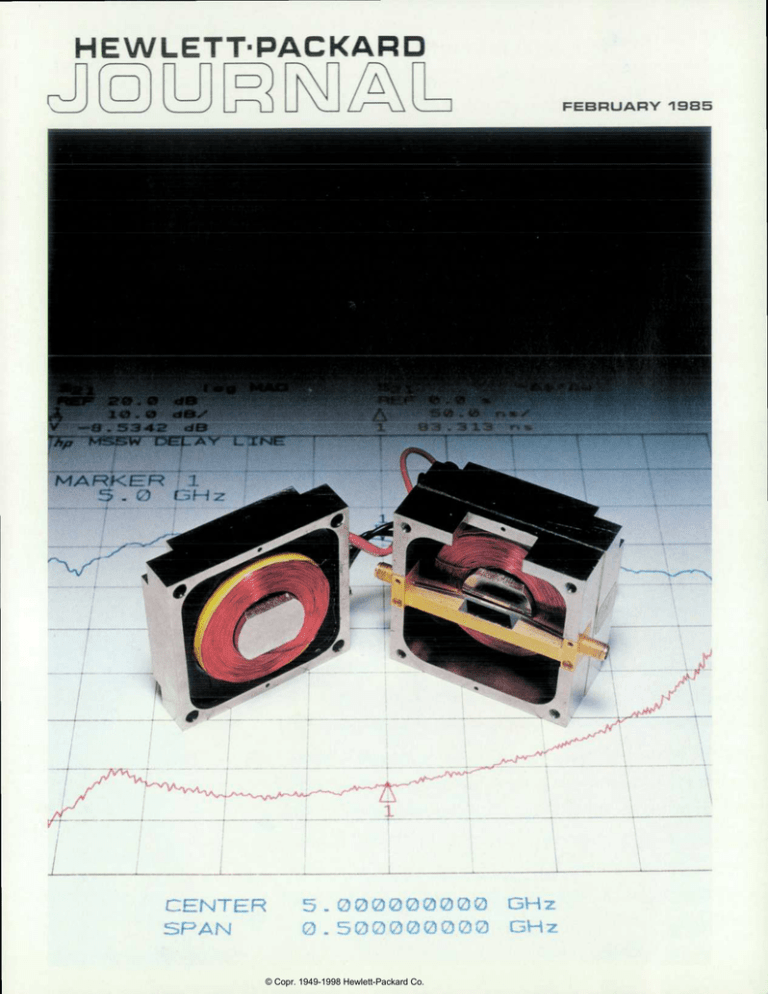

L E T T - P A C K A... C H N T E R 5 .... S P A N 0 . ... - M S S Ã ‡ y T...

advertisement