The Search for Benchmarks: When Do Crowds Provide Wisdom? Working Paper

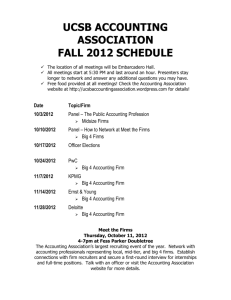
