Data analysis Ben Graham October 13, 2015 MA930, University of Warwick

advertisement

Data analysis

Ben Graham

MA930, University of Warwick

October 13, 2015

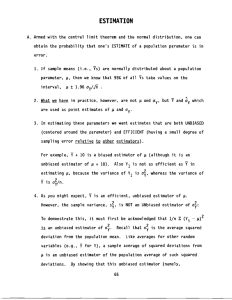

Ch4: Joint and Marginal Distributions

Def 4.1.1nAn n-dimensional random vector

is a function

n

X = (Xi )i from sample space S to R .

n = 2; roll two dice

=1

I

I

I

rnorm(10)

rbinom(10,5,0.5)

Discrete case: Joint p.m.f. fX (x , . . . , xn):

1

P[X ∈ A] =

X

x =(x1 ,...,xn )∈A

fX (x )

Continuous case: Joint p.d.f. fX (x , . . . , xn):

1

P[X ∈ A] =

ˆ

x =(x1 ,...,xn )∈A

fX (x )dx

1

. . . dxn

Marginal distributions

Discrete case: pmf fX Y : R

,

fX (x ) =

2

→R

.

X

y :fX ,Y (x ,y )>0

Continuous case: pdf fX Y : R

,

fX (x ) =

2

ˆ

fX ,Y (x , y )

.

→R

fX ,Y (x , y )dy

Example

Discrete

I

I

I

I

X , Y ∈ {1, . . . , 6} independent dice rolls

Z =X +Y

p.m.f. fX ,Y

p.m.f. fX ,Z

Example

Continuous

I

I

I

I

X , Y ∼ N (0p, 1) i.i.d.r.v

Z = ρX + 1 − ρ Y ∼ N (0, 1)

fX ,Y (x , y ) = fX (x )fY (y )

fX ,Z (x , z ) = fX (x )fZ |X (z | x ) [(Z | X ) ∼ N (ρX , 1 − ρ

2

bivariate normal distribution

2

)]

4.2 Conditional Distributions and Independence

Random variables X and Y are independent if

for all x , y , {X < x } and {Y < y } are independent

fX Y (x , y ) = fX (x )fY (y ) [continuous p.d.f.s or discrete p.m.f.s]

φX Y (t ) = φX (t )φY (t ) [characteristic functions]

Examples

X , Y ∼ Bernoulli (1/2)

X , Y ∼ N (0, 1)

Independence →Covariance=0

I

I

I

,

+

I

I

I

E[XY ] = E[X ]E[Y ]

Covariance=0 6→independence

X ∼ N (0, 1)

Y ∈ {−1, +1} independent of X

Cov(X,XY)=0

I

I

I

Sums of Normal distributions

Example 4.3.4.

I

I

I

I

X ∼ N (µX , σX )

Y ∼ N (µY , σY )

X , Y independent

Then X + Y ∼ N (µX + µY , σX + σY ).

2

2

2

Random sample

I

I

X

2

, . . . , Xn ∼ N (µ, σ 2 )

P

2

i Xi ∼ N (nµ, nσ )

Then

1

I

X̄

=

Z

=

X Xi

i

I

n

∼ N (µ, σ 2 /n)

X Xi − µ

√ ∼ N( , )

σ n

i

01

Sums of Poissons

Theorem 4.3.2

X ∼Poisson(θ)

Y ∼Poisson(λ)

X , Y independent.

Then X + Y ∼Poisson(θ + λ)

Ex 4.4.1 Conversely

Y ∼Poisson(λ)

X | Y ∼Bin(Y , p)

Then X ∼Poisson(λp)

Random Samples

X , . . . , Xn ∼Poisson(λ) i.i.d.r.v.

P

i Xi ∼Poisson(nλ) ≈ N (nλ, nλ)

I

I

I

I

I

I

I

I

1

I

I

X̄

∼≈ N (λ, λ/n)

Covariance and correlation

Def 4.5.1 Covariance:

Cov (X , Y ) = E [(X − EX )(Y − EY )] = E[XY ] − EX EY

Def 4.5.2 Correlation

Cov (X , Y )

Var (X )Var (Y )

Thm 4.5.6 If X and Y are r.v. and a, b ∈ R, then

ρXY = p

Var (aX + bY ) = a Var (X ) + b Var (Y ) + 2abCov (X , Y )

2

Special case X , Y independent.

2

Def 4.5.10 Bivariate distribution

I

µX , µY ∈ R

I

σx , σY >

I

pdf

0

fX ,Y (x , y )

I

Or pdf

1

2

1

exp 2 1

2

p

− ρ2 )−1

= ( πσX σY

x − µ 2

x

×

( − ρ2 )

σX

x − µ Y − µ Y − µ 2 x

Y +

Y

− ρ

σX

σY

σY

f (x ) = p

k = 2, x

1

exp

(2π)k |Σ|

∈ Rk , µ =

µx

µY

− (x − µ)Σ−1 (x − µ)

, Σ=

σX2

ρσX σY

ρσX σY

σY2

Def 4.6.2 Multinomial distribution

I

I

I

I

m trials / repeat events

n possible outcomes, probabilities p , . . . , pn sum to one.

Let xi ∈ {0, 1, . . . , n} count the number of type-i outcomes

1

joint pmf

f (x

1

I

I

, . . . , xn ) =

x

1

m!

! . . . xn !

px1 . . . pnxn

1

where X xi = m

i

Negative correlations Cov (Xi , Xj ) = −mpi pj (i 6= j )

Modeling tables of categorical variables.

Ch5 Random samples

I

I

I

I

X , . . . , Xn is called a random sample of size n from population

f (x ) if they are independent, identically distributed random

variables (i.i.d.r.v.) with marginal distribution function f (x ).

1

Think of them as being a random sample from a population

that is much larger than n. The probability distribution

represents the true distribution of values in the larger

population.

Condorcet's Jury Principle:

plot(sapply(seq(0, 1, 0.01), function(p) pbinom(6, 12, p)))

Getting representative samples can be hard, i.e. telephone

surveys.

I

I

I

I

Does everyone have a landline?

Does everyone choose to talk to strangers phoning you up

during dinner?

Are people shy about expressing some preferences?

http://www.bbc.co.uk/news/uk-politics-33228669

Parameters

I

I

Parameter θ controlling the distribution f (x | θ)

Frequentist statistics:

I

I

I

Bayesian:

I

I

I

θ is xed but unknown

Choose θ̂ = θ̂(data) to estimate θ.

Joint distribution f (θ)f (x | θ)

f (θ) is the prior distribution

Example.

I

I

I

Exponential f (x | θ) = θ exp(−θ

Q x ).

P

Joint distribution f (x | θ) = =1 f (x | θ) = θ exp(−θ

N.B. f (x | θ)only depends on the x via their sum.

n

n

i

i

i

i

x)

i

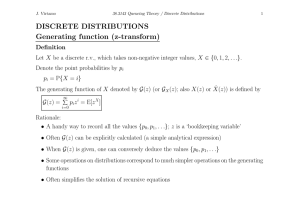

Statistics

Def 5.2.1

Let X , . . . , Xn ∼ f (x | θ)

Let T (x , . . . , xn) denote some function of the data,

i.e. T : Rn → R.

T is a statistic

I

1

I

1

I

I

I

I

I

I

T (x1 , . . . , x

T (x1 , . . . , x

n

n

) = x1

) = max

mean, median, etc.

i

x

i

, Var(X ), etc, are not statistics.

The probability distribution of T is called the sampling

distribution of T .

θ,EX

Common statistics

I

I

i.i.d.r.v.

Sample mean

X

1

, . . . , Xn

X̄

I

=

1

+ · · · + Xn

n

=

n

X

i =1

Xi

Sample variance

" n

#

n

X

X

1

1

S =

(Xi − X̄ ) =

Xi − nX̄

n−1 i

n−1 i

If the mean EXi and variance Var(Xi ) exist, then these are

unbiased estimates.

2

2

=1

I

X

2

=1

2

*Def 5.4.1 Order Statistics

I

The order statistics of a random sample X , . . . , Xn are the

values placed into increasing order: X , . . . , X n .

1

I

I

I

I

I

I

(1)

X(1) = min X

X(2) =second smallest

i

( )

i

...

X( ) = max X

The sample range is R = X n − X

The sample median is

n

i

i

( )

M=

(1)

X((n+ )/ )

n odd

X(n/ ) + X(n/ + ) n even

(

1

2

2

1

1

2

2

2

1

The median may give a better sense of what is typical than the

mean.

Quantiles

I

For p ∈ [0, 1], the p quantile is (R, method 7 of 9)

(1−γ)x j +γ x j , (n−1)p < j ≤ (n−1)p +1, γ = np +1−p −j

Trimmed/truncated mean

()

I

I

I

I

I

I

p ∈ [0, 1]

( +1)

Remove the smallest and biggest np items from the sample

Take the mean of what is left

i.e. LIBOR, 18 banks, top and bottom 4 removed

Cauchy location parameter

Theorem 5.4.4 Distribution of the order statistics

I

I

Sample of size n with c.d.f. FX and pdf fX

Binomial distribution:

n X

n

FX j (x ) =

[F (x )]k [1 − FX (x )]n

k X

( )

I

Dierentiate:

fX(j ) (x ) =

−k

k =j

n!

fX (x ) [FX (x )]j −

(j − 1)!(n − j )!

1

1

[ − FX (x )]n−j .

More sample mean

I

Characteristic function

φX̄ (t ) = [φX (t /n)]n ≈

I

+ o (t /n)

Thm 5.3.1: If Xi ∼ N (µ, σ ), then

2

I

I

I

I

1 + it EnX

X̄ and S 2 are independent

X̄ ∼ N (µ, σ2 /n).

(n − 1)S 2 /σ 2 ∼ χ2−1

Proof: For simplicity, assume µ = 0 and σ = 1.

n

2

n

Ingredients for the proof

I

If e , . . . , en form

an orthonormal basis for Rn.

n

Then (ei · X )i are i.i.d.r.v N (0, 1).

Let e = ( n , . . . , n )

The Γ(α, β) distribution is dened

f (x ) = C (α, β)x exp(−β x ).

The χk distribution is the Γ(k /2, 1/2) distribution.

If X ∼ N (0, 1), then X ∼ χ

The sum of k independent χ r.v. is χk .

1

=1

I

I

√1

1

√1

α−1

I

I

I

2

2

2

1

2

1

2

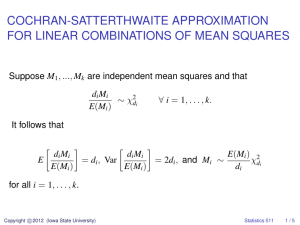

Derived distributions

I

If Z ∼ N (0, 1) and V ∼ χk then Z /pV /k ∼ tk (Student's t)

2

I

I

I

X̄ −√µ

∼t

S / n n−

If U ∼ χk and V ∼ χ are independent, then UV k ∼ Fk

Suppose X , . . . , Xm ∼ N (µX , σX ) and

Y , . . . , Yn ∼ N (µY , σY ). Then

2

/

/`

2

`

,`

2

1

1

2

SX /σX

SY /σY

I

1

2

2

2

2

∼ Fm−1,n−1

These distributions are also used for linear regression/ANOVA.