Creating Image Panoramas Using Homography Warping Rachumallu Balaramakrishna


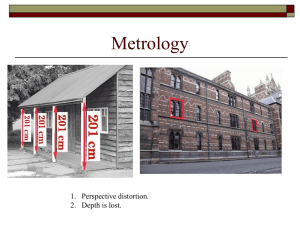

International Journal of Engineering Trends and Technology (IJETT) - Volume 4 Issue 4 - April 2013, ISSN: 2231-5381, http://www.ijettjournal.org

Creating Image Panoramas Using Homography Warping

Rachumallu Balaramakrishna#1, N Swetha#2, Pillem Ramesh#3
#1, #2, #3 Electronics and Communication Engineering, K. L. University, Vijayawada, Andhra Pradesh, India

Abstract-- This paper studies a method to construct a panoramic view from individual images that share an overlapping region. A panorama is simply a wide-angle view of a physical scene; generally, several photographs are arranged horizontally to produce it. Our case study uses RANSAC-based homography estimation for image stitching. A homography has eight degrees of freedom, so at least four point correspondences are needed to calculate it. Once the homography between the two images is calculated, we generate the panoramic view by warping the two images into a common frame. We use the Harris corner detector to find candidate matching points in the overlapping region of the two images, and these points are then used to estimate the homography. To obtain an accurate homography, we must keep only the matches that are consistent with a single transformation between the two views. The solution can be extended to stitch n images. Many map projections can be used to arrange the stitched images; we use a rectilinear projection, in which the resulting image is viewed on a two-dimensional plane.

Keywords-- Harris corner detector, panoramic view, perspective transform, rectilinear projection, feature points

I. INTRODUCTION

This paper is organized into three parts. The first part explains various ways of extracting feature points. The second part explains methods for calculating the homography from those feature points. The third and final part explains image stitching with the calculated homography to create an expanded view, and the extension of the same approach to multiple images.
In recent years, panoramic image creation has received a great deal of interest, yet it remains a challenging problem. In this paper we follow two steps to produce panoramic images: we first find the feature points of the two images, and then use them to calculate a RANSAC-based homography matrix. When searching for interesting feature points in images, corners are an attractive choice: they lie at the junction of two edges, and they can be localized with sub-pixel accuracy. Among the various ways of rejecting outliers before homography estimation, random sample consensus (RANSAC) is widely used because it gives a robust estimate of the transformation matrix between the two image planes even when many matches are wrong. In recent years, many software packages have been developed to create panoramic images, such as AutoStitch and Photoshop CS5.

II. FEATURE POINT ESTIMATION

In computer vision, the concept of interest points, also called keypoints or feature points, is used to solve many problems such as object recognition, image registration, visual tracking, and 3D reconstruction. In our case, the images must be registered before the field of view can be expanded. The Harris feature detector is a general approach for detecting junctions of two edges, such as corners. To find the corners in an image, Harris looks at the average directional intensity change in a small area, called a window, around a point of interest. For a displacement vector (x, y), the average intensity change over the window W can be written as:

$$R(x, y) = \sum_{(u, v) \in W} \big( I(u + x, v + y) - I(u, v) \big)^2$$

The average intensity change is calculated in all directions, and a point at which it is high in more than one direction is defined as a corner. Corner detection can therefore be performed as follows: obtain the direction of maximal average intensity change, then find the intensity change in the orthogonal direction. If the intensity change is high in both directions, the point is a corner.
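A minimal sketch of this two-direction test, assuming a grayscale image stored as a list of rows indexed img[row][column], and a point far enough from the border for the window and its central differences to fit. Rather than probing every direction explicitly, it sums products of central-difference gradients over a 3×3 window into a 2×2 matrix; the larger eigenvalue measures the maximal average intensity change and the smaller one the change in the orthogonal direction. It thresholds the eigenvalues directly, as described here, rather than computing Harris's det − k·trace² response. The helper names and the threshold value are illustrative assumptions, not part of the original work.

```python
import math


def gradients(img, u, v):
    """Central-difference estimates of dI/dx and dI/dy at column u, row v."""
    ix = (img[v][u + 1] - img[v][u - 1]) / 2.0
    iy = (img[v + 1][u] - img[v - 1][u]) / 2.0
    return ix, iy


def structure_tensor(img, u0, v0, half=1):
    """Sum gradient products over the (2*half+1)^2 window centred at (u0, v0)."""
    sxx = sxy = syy = 0.0
    for v in range(v0 - half, v0 + half + 1):
        for u in range(u0 - half, u0 + half + 1):
            ix, iy = gradients(img, u, v)
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
    return sxx, sxy, syy


def eigenvalues(sxx, sxy, syy):
    """Eigenvalues (larger first) of the symmetric matrix [[sxx, sxy], [sxy, syy]]."""
    mean = (sxx + syy) / 2.0
    radius = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    return mean + radius, mean - radius


def classify_point(img, u0, v0, threshold, half=1):
    """'corner' if both eigenvalues are high, 'edge' if only one is, else 'flat'."""
    lam_max, lam_min = eigenvalues(*structure_tensor(img, u0, v0, half))
    if lam_min > threshold:
        return "corner"
    if lam_max > threshold:
        return "edge"
    return "flat"


if __name__ == "__main__":
    # Synthetic 12x12 image: a bright square filling the lower-right corner.
    img = [[10 if r >= 6 and c >= 6 else 0 for c in range(12)] for r in range(12)]
    print(classify_point(img, 6, 6, 50))  # corner of the square -> corner
    print(classify_point(img, 6, 9, 50))  # on its left edge -> edge
    print(classify_point(img, 2, 2, 50))  # inside the dark area -> flat
```

In practice the threshold depends on image contrast and window size, and detectors keep only local maxima of the response so that each corner yields a single point.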
We can represent the average intensity change using a first-order Taylor expansion of the shifted image, $I(u + x, v + y) \approx I(u, v) + \frac{\partial I}{\partial x} x + \frac{\partial I}{\partial y} y$, where the sums run over the window W around the point:

$$R(x, y) \approx \sum_{(u, v) \in W} \left( I(u, v) + \frac{\partial I}{\partial x} x + \frac{\partial I}{\partial y} y - I(u, v) \right)^2 = \sum_{(u, v) \in W} \left[ \left( \frac{\partial I}{\partial x} \right)^2 x^2 + \left( \frac{\partial I}{\partial y} \right)^2 y^2 + 2 \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} x y \right]$$

We can rewrite this expansion in matrix form, where the 2×2 matrix is the covariance matrix that specifies the rate of change of intensity:

$$R(x, y) \approx \begin{bmatrix} x & y \end{bmatrix} \begin{bmatrix} \sum \left( \frac{\partial I}{\partial x} \right)^2 & \sum \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} \\ \sum \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} & \sum \left( \frac{\partial I}{\partial y} \right)^2 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix}$$

From this matrix we can easily find the average intensity change in a particular direction, and in the direction orthogonal to it, using the eigenvalues of the matrix. These values indicate whether the point lies on an edge, in a homogeneous region, or on a corner. If one eigenvalue is high and the other is low, the point lies on an edge. If both eigenvalues are low, the point is in a homogeneous region. If both eigenvalues are high, the point is a corner.

III. HOMOGRAPHY MATRIX CALCULATION

When two cameras observe a scene with an overlapping viewing region, they see the same points from different viewing angles. When matching feature points between the images, we must keep only the matches that are geometrically consistent between the two views. To find an accurate transformation matrix between the image planes, we need good matching points. In practice this is not guaranteed: the corner detector returns a large number of corners, and from them we must select the points that give a good matrix. To obtain a better estimate of the matrix, we use a RANSAC-based algorithm. RANSAC estimates the model from the good points in a data set that contains a number of outliers: it repeatedly selects a random subset of the matched points, calculates the matrix from that subset, and keeps the matrix that agrees with the largest number of the remaining matches. To find a homography matrix we need at least four point pairs. RANSAC tends to produce a better estimate when more correct matches are available.
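The RANSAC loop just described can be sketched in plain Python. This is not the paper's implementation (which relies on OpenCV's cvFindHomography); it assumes the matches are already available as two equal-length lists of (x, y) tuples, and every helper name below is hypothetical. Each iteration draws four matches, solves for the homography exactly with the last matrix entry fixed to 1 (a common normalization that fails only if that entry is truly zero), counts the matches whose reprojection error is below a pixel threshold, and keeps the largest consensus set. `ransac_iterations` gives the number of draws p needed so that, with inlier fraction s and sample size n = 4, at least one all-inlier sample occurs with probability P, from $1 - (1 - s^n)^p \ge P$.

```python
import math
import random


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate sample (e.g. collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def fit_homography(src, dst):
    """Exact homography mapping 4 src points to 4 dst points (last entry = 1)."""
    a, b = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        b.append(xp)
        a.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b.append(yp)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(hmat, point):
    """Map (x, y) through hmat and divide by the homogeneous coordinate."""
    x, y = point
    w = hmat[2][0] * x + hmat[2][1] * y + hmat[2][2]
    if abs(w) < 1e-12:
        return None  # the point maps to infinity
    return ((hmat[0][0] * x + hmat[0][1] * y + hmat[0][2]) / w,
            (hmat[1][0] * x + hmat[1][1] * y + hmat[1][2]) / w)


def reprojection_error(hmat, src_pt, dst_pt):
    """Pixel distance between the mapped src point and its matched dst point."""
    mapped = apply_homography(hmat, src_pt)
    if mapped is None:
        return math.inf
    return math.hypot(mapped[0] - dst_pt[0], mapped[1] - dst_pt[1])


def ransac_iterations(inlier_ratio, confidence=0.99, sample_size=4):
    """Smallest p with 1 - (1 - s**n)**p >= confidence."""
    good = inlier_ratio ** sample_size
    if good <= 0.0:
        raise ValueError("inlier ratio must be positive")
    if good >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - good))


def ransac_homography(src, dst, threshold=3.0, iterations=200, seed=0):
    """Return (homography, inlier indices) with the largest consensus set."""
    rng = random.Random(seed)
    indices = list(range(len(src)))
    best_h, best_inliers = None, []
    for _ in range(iterations):
        sample = rng.sample(indices, 4)
        try:
            h = fit_homography([src[i] for i in sample], [dst[i] for i in sample])
        except ValueError:
            continue  # skip degenerate samples
        inliers = [i for i in indices
                   if reprojection_error(h, src[i], dst[i]) < threshold]
        if len(inliers) > len(best_inliers):
            best_h, best_inliers = h, inliers
    return best_h, best_inliers
```

For example, with half the matches correct, `ransac_iterations(0.5)` asks for 72 draws to reach 99% confidence. A fuller version would also re-estimate the matrix from all inliers and normalize the point coordinates for numerical stability.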
The objective is to pick random sets of four matches (the minimum needed to determine a homography) from the data points several times, so that eventually one set consists only of good matches and produces a good estimate of the matrix. The homography matrix is a 3×3 matrix that relates the pixel coordinates in the two images. Consider a point $x_1 = (u_1, v_1, 1)$ in one image and the corresponding point $x_2 = (u_2, v_2, 1)$ in the other image; they are related by a matrix M. M specifies a linear relation between the homogeneous coordinates of the two images, which holds up to a nonzero scale factor λ:

$$\lambda \begin{bmatrix} u_1 \\ v_1 \\ 1 \end{bmatrix} = \begin{bmatrix} m_1 & m_2 & m_3 \\ m_4 & m_5 & m_6 \\ m_7 & m_8 & m_9 \end{bmatrix} \begin{bmatrix} u_2 \\ v_2 \\ 1 \end{bmatrix}$$

Because M is only defined up to scale, it has eight degrees of freedom, and each point pair contributes two equations, which is why four pairs are the minimum.

If we assume that a fraction s of the matches in the set are inliers (good matches), then the probability that one random sample of n matches contains only good matches is $s^n$, with n = 4 for a homography. If we make p random choices from the set, the probability that at least one of them contains only good matches is $1 - (1 - s^n)^p$.

To calculate the homography matrix from the set of points, OpenCV provides the function cvFindHomography. It takes six arguments: the feature points of the two images as the first two arguments, the matrix in which the homography is stored, the estimation method (for example, CV_RANSAC), the RANSAC threshold, and an optional mask that marks the inliers. The RANSAC threshold is the maximum reprojection error, in pixels, for a point pair to be treated as an inlier: a pair is accepted when the first point, mapped through the homography, lands within this distance of its matched point. The more good matches there are in the feature point set, the higher the probability that RANSAC will return the correct homography matrix.

IV. WARPING IMAGES TO PRODUCE PANORAMIC VIEW

When two image planes are related by a homography, it is easy to determine where a given point of one image is found in the other image. Since the second view shows a portion of the scene that is not visible in the first image, we can use the homography to expand the image view by reading the intensity values of the additional pixels. To transfer the pixels of one image into the other image, the inverse homography is needed: for each pixel of the output image, the inverse mapping gives the location in the source image from which its intensity is read. OpenCV provides a function that performs exactly this transfer to expand the image view.
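Inverse mapping can be sketched in a few lines of plain Python, assuming grayscale images stored as lists of rows and a homography that maps (column, row) coordinates of the right image into the left image's frame (for M above, the direction from image 2 into image 1). It mirrors what a perspective-warp function does conceptually, but samples with nearest neighbour, whereas OpenCV's warp defaults to bilinear interpolation. `invert_3x3`, `warp_into` and `stitch_pair` are hypothetical helpers, and the caller must choose a canvas width that fits both images.

```python
def invert_3x3(m):
    """Inverse of a 3x3 matrix via its adjugate (transposed cofactors) / det."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("homography is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def warp_into(canvas, src, homography):
    """Inverse-map every canvas pixel into src and copy the nearest pixel.

    `homography` maps src (column, row) coordinates to canvas coordinates;
    canvas pixels whose pre-image falls outside src are left unchanged.
    """
    inv = invert_3x3(homography)
    src_h, src_w = len(src), len(src[0])
    for v in range(len(canvas)):
        for u in range(len(canvas[0])):
            w = inv[2][0] * u + inv[2][1] * v + inv[2][2]
            if abs(w) < 1e-12:
                continue  # pre-image at infinity
            x = (inv[0][0] * u + inv[0][1] * v + inv[0][2]) / w
            y = (inv[1][0] * u + inv[1][1] * v + inv[1][2]) / w
            xi, yi = round(x), round(y)
            if 0 <= xi < src_w and 0 <= yi < src_h:
                canvas[v][u] = src[yi][xi]
    return canvas


def stitch_pair(left, right, h_right_to_left, width):
    """Paste `left` at the canvas origin, then warp `right` into its frame."""
    canvas = [[0] * width for _ in range(len(left))]
    for v, row in enumerate(left):
        canvas[v][: len(row)] = row
    return warp_into(canvas, right, h_right_to_left)


if __name__ == "__main__":
    left = [[1, 2, 3], [4, 5, 6]]
    right = [[7, 8, 9], [10, 11, 12]]
    # A pure horizontal shift of 3 pixels: the right image starts at column 3.
    shift = [[1.0, 0.0, 3.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(stitch_pair(left, right, shift, 6))
    # [[1, 2, 3, 7, 8, 9], [4, 5, 6, 10, 11, 12]]
```

Iterating over output pixels rather than source pixels is the design choice that matters here: every canvas pixel receives at most one value and no holes appear, which is why warping is done with the inverse homography.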
The cvWarpPerspective function takes one of the images as its first argument, the output image as its second, and the homography matrix as its third, which it uses to map points from one image to the other. We also need to specify the size of the output image, which must be large enough to hold the expanded view. Here we describe two panoramas: one expands the image view in the horizontal direction and the other expands it in the vertical direction. Given the homography matrix and a pixel coordinate for which we need the corresponding point in the other image, we can find that point from the following relation, where $m'_1, \dots, m'_9$ are the entries of $M^{-1}$ and λ' is a nonzero scale factor:

$$\lambda' \begin{bmatrix} u_2 \\ v_2 \\ 1 \end{bmatrix} = M^{-1} \begin{bmatrix} u_1 \\ v_1 \\ 1 \end{bmatrix} = \begin{bmatrix} m'_1 & m'_2 & m'_3 \\ m'_4 & m'_5 & m'_6 \\ m'_7 & m'_8 & m'_9 \end{bmatrix} \begin{bmatrix} u_1 \\ v_1 \\ 1 \end{bmatrix}$$

$$u_2 = \frac{m'_1 u_1 + m'_2 v_1 + m'_3}{m'_7 u_1 + m'_8 v_1 + m'_9}, \qquad v_2 = \frac{m'_4 u_1 + m'_5 v_1 + m'_6}{m'_7 u_1 + m'_8 v_1 + m'_9}$$

For our case study, we assumed that the images overlap at their extremes. Consider Fig. 1 as the first part of the panoramic view and Fig. 2 as the second part. We calculated the homography matrix for these two images and from it warped the images to produce the final panoramic view. The method extends easily to any number of images, provided that every image except the first and the last overlaps its neighbors at both extremes; the first and the last images have only one overlapping region. The images must be processed in sequence to produce the final panoramic view.

A. Algorithm

We briefly describe the algorithm we implemented on the sample images to produce the panoramic view.

Input: two images with an overlapping region
Output: panoramic image
For each image:
    Apply the Harris corner detector
    Extract the feature points
    Keep each image's feature points separately
Apply the RANSAC method to the feature points
Find the best estimate of the homography matrix
For each pixel:
    Warp the image pixels from both images onto the 2D plane using the homography matrix

V. RESULTS

Fig. 3 Resulting panoramic image

VI.
CONCLUSIONS

We have demonstrated how to use homography warping to align images and produce a panoramic view. While this is easy for two images, more care is needed when handling multiple images that overlap at both extremes. The images passed to the algorithm must be in sequence so that misalignments do not occur. The resulting images can be post-processed to remove unwanted intensity variations and noise. A natural extension of our work is to consider more images and to expand the view in both the horizontal and vertical directions.

Fig. 1 Left portion of the image

Fig. 2 Right portion of the image

In Fig. 1 the matched points are marked with yellow circles, and Fig. 2 contains the corresponding yellow circles, each centered at the coordinates of the point in that image. These points mark pixels with matching intensity patterns in both images. Together, the figures illustrate panorama creation in the horizontal direction; the work can be extended to multiple images.

ACKNOWLEDGMENT

We express our deep gratitude to Mr. P. Ramesh for his constant support and encouragement in carrying out this work. We thank the reviewers for their useful comments.