Sufficient Statistics for Welfare Analysis: Raj Chetty, UC-Berkeley and NBER

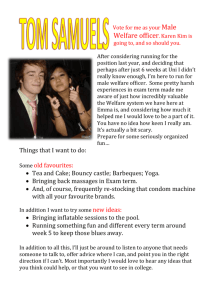
