Noise-Contrastive Estimation and its Generalizations Michael Gutmann

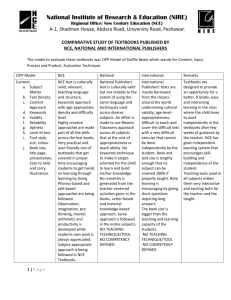

advertisement

Noise-Contrastive Estimation and its

Generalizations

Michael Gutmann

https://sites.google.com/site/michaelgutmann

University of Helsinki

Aalto University

Helsinki Institute for Information Technology

21st April 2016

Michael Gutmann

NCE and its Generalizations

1 / 27

Problem statement

I

Task: Estimate the parameters θ of a parametric model p(.|θ)

of a d dimensional random vector x

I

Given: Data X = (x1 , . . . , xn ) (iid)

I

Given: Unnormalized model φ(.|θ)

Z

φ(x; θ)

φ(ξ; θ) dξ = Z (θ) 6= 1

p(x; θ) =

Z (θ)

ξ

(1)

Normalizing partition function Z (θ) not known / computable.

Michael Gutmann

NCE and its Generalizations

2 / 27

Why does the partition function matter?

φ(x;θ)

Z (θ)

=

2

exp −θ x2

√

I

Consider p(x; θ) =

I

Log-likelihood function for precision θ ≥ 0

r

n

X

xi2

2π

−θ

`(θ) = −n log

θ

2

2π/θ

(2)

i=1

0

Log−likelihood

Data−independent term

Data−dependent term

−100

I

I

Data-dependent (blue) and

independent part (red)

balance each other.

If Z (θ) is intractable, `(θ)

is intractable.

−200

−300

−400

−500

−600

Michael Gutmann

0.5

1

Precision

NCE and its Generalizations

1.5

2

3 / 27

Why is the partition function hard to compute?

Z (θ) =

R

ξ

φ(ξ; θ) dξ

I

Integrals can generally not be solved in closed form.

I

In low dimensions, Z (θ) can be approximated to high

accuracy.

I

Curse of dimensionality: Solutions feasible in low dimensions

become quickly computationally prohibitive as the dimension

d increases.

Michael Gutmann

NCE and its Generalizations

4 / 27

Why are unnormalized models important?

I

I

Unnormalized models are widely used.

Examples:

I

I

I

I

models of images

models of text

models in physics

...

(Markov random fields)

(neural probabilistic language models)

(Ising model)

I

Advantage: Specifying unnormalized models is often easier

than specifying normalized models.

I

Disadvantage: Likelihood function is generally intractable.

Michael Gutmann

NCE and its Generalizations

5 / 27

Program

Noise-contrastive estimation

Properties

Application

Bregman divergence to estimate unnormalized models

Framework

Noise-contrastive estimation as member of the framework

Michael Gutmann

NCE and its Generalizations

6 / 27

Program

Noise-contrastive estimation

Properties

Application

Bregman divergence to estimate unnormalized models

Framework

Noise-contrastive estimation as member of the framework

Michael Gutmann

NCE and its Generalizations

7 / 27

Intuition behind noise-contrastive estimation

I

Formulate the estimation problem as a classification problem:

observed data vs. auxiliary “noise” (with known properties)

I

Successful classification ≡ learn the differences between the

data and the noise

I

differences + known noise properties ⇒ properties of the data

I

Unsupervised learning by

supervised learning

I

We used (nonlinear) logistic

regression for classification

Data or noise ?

Data

Michael Gutmann

NCE and its Generalizations

Noise

8 / 27

Logistic regression (1/2)

I

Let Y = (y1 , . . . ym ) be a sample from a random variable y

with known (auxiliary) distribution py .

I

Introduce labels and form regression function:

P(C = 1|u; θ) =

1

1 + G (u; θ)

G (u; θ) ≥ 0

(3)

Class 1 or 0?

I

Determine the parameters θ

such that P(C = 1|u; θ) is

I

I

large for most xi

small for most yi .

Data: class 1

Michael Gutmann

NCE and its Generalizations

Noise: class 0

9 / 27

Logistic regression (2/2)

I

Maximize (rescaled) conditional log-likelihood using the

labeled data {(x1 , 1), . . . , (xn , 1), (y1 , 0), . . . , (ym , 0)},

1

JnNCE (θ) =

n

I

n

X

log P(C = 1|xi ; θ) +

m

X

i=1

!

log [P(C = 0|yi ; θ)]

i=1

For large sample sizes n and m, θ̂ satisfying

G (u; θ̂) =

m py (u)

n px (u)

(4)

is maximizing JnNCE (θ). Without any normalization

constraints. (proof in appendix)

Michael Gutmann

NCE and its Generalizations

10 / 27

Noise-contrastive estimation

(Gutmann and Hyvärinen, 2010; 2012)

I

Assume unnormalized model φ(.|θ) is parametrized such that

its scale can vary freely.

θ → (θ; c)

I

φ(u; θ) → exp(c)φ(u; θ)

(5)

Noise-contrastive estimation:

1. Choose py

2. Generate auxiliary data Y

3. Estimate θ via logistic regression with

G (u; θ) =

Michael Gutmann

m py (u)

.

n φ(u; θ)

NCE and its Generalizations

(6)

11 / 27

Noise-contrastive estimation

(Gutmann and Hyvärinen, 2010; 2012)

I

Assume unnormalized model φ(.|θ) is parametrized such that

its scale can vary freely.

θ → (θ; c)

I

φ(u; θ) → exp(c)φ(u; θ)

(5)

Noise-contrastive estimation:

1. Choose py

2. Generate auxiliary data Y

3. Estimate θ via logistic regression with

G (u; θ) =

I

G (u; θ) →

m py (u)

n px (u)

⇒

m py (u)

.

n φ(u; θ)

(6)

φ(u; θ) → px (u)

Michael Gutmann

NCE and its Generalizations

11 / 27

Example

I

Unnormalized Gaussian:

u2

φ(u; θ) = exp (θ2 ) exp −θ1

2

I

,

θ1 > 0, θ2 ∈ R,

(7)

Parameters: θ1 (precision), θ2 ≡ c (scaling parameter)

0.5

0

I

Gaussian noise with

ν = m/n = 10

I

True precision θ1? = 1

I

Black: normalized models

Green: optimization paths

Normalizing parameter

Contour plot of JnNCE (θ) :

−0.5

−1

−1.5

−2

−2.5

Michael Gutmann

0.5

1

Precision

NCE and its Generalizations

1.5

2

12 / 27

Statistical properties

(Gutmann and Hyvärinen, 2012)

?

I

Assume px = p(.|θ )

I

Consistency: As n increases,

θ̂ n = argmaxθ JnNCE (θ),

(8)

converges in probability to θ ? .

I

Efficiency: As ν = m/n increases, for any valid choice of py ,

noise-contrastive estimation tends to “perform as well” as

MLE (it is asymptotically Fisher efficient).

Michael Gutmann

NCE and its Generalizations

13 / 27

Validating the statistical properties with toy data

I

Let the data follow the ICA model x = As with 4 sources.

4

X

√

log p(x; θ ? ) = −

2|b?i x| + c ?

(9)

i=1

with c ? = log |detB? | − 24 log 2 and B? = A−1 .

I

To validate the method, estimate the unnormalized model

log φ(x; θ) = −

4

X

√

2|bi x| + c

(10)

i=1

with parameters θ = (b1 , . . . , b4 , c).

I

Contrastive noise py : Gaussian with the same covariance as

the data.

Michael Gutmann

NCE and its Generalizations

14 / 27

Validating the statistical properties with toy data

I

Results for 500 estimation problems with random A, for

ν ∈ {0.01, 0.1, 1, 10, 100}.

I

MLE results: with properly normalized model

2

2

NCE0.01

NCE0.1

NCE1

NCE10

NCE100

NCE0.01

NCE0.1

1

1

NCE1

0

log10 squared error

log10 squared error

NCE10

NCE100

0

MLE

−1

−2

−1

−2

−3

−4

−3

−5

−4

2.5

3

3.5

log10 sample size

4

4.5

(a) Mixing matrix

Michael Gutmann

−6

2.5

3

3.5

log10 sample size

4

4.5

(b) Normalizing constant

NCE and its Generalizations

15 / 27

Computational aspects

I

I

The estimation accuracy improves as m increases.

Trade-off between computational and statistical performance.

Example: ICA model as before but with 10 sources. n = 8000,

ν ∈ {1, 2, 5, 10, 20, 50, 100, 200, 400, 1000}.

0

Performance

for 100 random estimation problems:

−0.5

−1

log10 sqError

I

NCE

−1.5

MLE

−2

−2.5

−3

1

1.5

2

2.5

3

3.5

Time till convergence [log10 s]

Michael Gutmann

4

NCE and its Generalizations

16 / 27

Computational aspects

How good is the trade-off? Compare with

1. MLE where partition function is evaluated with importance

sampling. Maximization of

!

n

m

1 X φ(yi ; θ)

1X

log φ(xi ; θ) − log

(11)

JIS (θ) =

n

m

py (yi )

i=1

i=1

2. Score matching: minimization of

n

10

1 XX 1 2

JSM (θ) =

Ψ (xi ; θ) + Ψ0j (xi ; θ)

n

2 j

(12)

i=1 j=1

with Ψj (x; θ) =

∂ log φ(x;θ)

∂xj

(here: smoothing needed!)

(see Gutmann and Hyvärinen, 2012, for more comparisons)

Michael Gutmann

NCE and its Generalizations

17 / 27

Computational aspects

I

NCE is less sensitive to the mismatch of data and noise

distribution than importance sampling.

Score matching does not perform well if the data distribution

is not sufficiently smooth.

0

NCE

IS

−0.5

SM

MLE

−1

log10 sqError

I

−1.5

−2

−2.5

−3

0.5

1

1.5

2

2.5

3

3.5

Time till convergence [log10 s]

Michael Gutmann

4

NCE and its Generalizations

4.5

18 / 27

Application to natural image statistics

I

I

Natural images ≡ images which we see in our environment

Understanding their properties is important

I

I

for modern image processing

for understanding biological visual systems

Michael Gutmann

NCE and its Generalizations

19 / 27

Human visual object recognition

I

Rapid object recognition by

feed-forward processing

I

Computations in middle

layers poorly understood

I

I

Faces,

objects, ...

?

(up to normalization)

?

?

Our approach: learn the

computations from data

Idea: the units indicate how

probable an input image is.

High-level

vision

?

?

?

?

?

?

?

?

Simple

features

(edges, ...)

?

?

?

?

?

?

Low-level

vision

(Adapted from Koh and Poggio,

Neural Computation, 2008)

(Gutmann and Hyvärinen, 2013)

Michael Gutmann

NCE and its Generalizations

20 / 27

Unnormalized model of natural images

I

I

I

Three processing layers (> 2 · 105 parameters)

Fit to natural image data (d = 1024, n = 70 · 106 )

Learned computations: detection of curvatures, longer

contours, and texture.

Curvature

Contours

Texture

Response

to local

gratings

Strongly

activating

inputs

(Gutmann and Hyvärinen, 2013)

Michael Gutmann

NCE and its Generalizations

21 / 27

Program

Noise-contrastive estimation

Properties

Application

Bregman divergence to estimate unnormalized models

Framework

Noise-contrastive estimation as member of the framework

Michael Gutmann

NCE and its Generalizations

22 / 27

Bregman divergence between two vectors a and b

2

1.5

1

0.5

0

−0.5

−2

−1.5

−1

−0.5

0

0.5

dΨ (a, b) = 0 ⇔ a = b

Michael Gutmann

1

1.5

2

dΨ (a, b) > 0 if a 6= b

NCE and its Generalizations

23 / 27

Bregman divergence between two functions f and g

I

Compute dΨ (f (u), g (u)) for all u in their domain; take

weighted average

Z

d̃Ψ (f , g ) = dΨ (f (u), g (u))dµ(u)

Z

= Ψ(f ) − Ψ(g ) + Ψ0 (g )(f − g ) dµ

(13)

(14)

I

Zero iff f = g (a.e.); no normalization condition on f or g

I

Fix f , omit terms not depending on g ,

Z

J(g ) =

− Ψ(g ) + Ψ0 (g )g − Ψ0 (g )f dµ

Michael Gutmann

NCE and its Generalizations

(15)

24 / 27

Estimation of unnormalized models

J(g ) =

R

− Ψ(g ) + Ψ0 (g )g − Ψ0 (g )f dµ

I

Idea: Choose f , g , and µ so that we obtain a computable cost

function for consistent estimation of unnormalized models.

I

Choose f = T (px ) and g = T (φ) such that

f = g ⇒ px = φ

(16)

Examples:

I

I

I

I

f = px , g = φ

px

φ

, g = νp

f = νp

y

y

...

Choose µ such that the integral can either be computed in

closed form or approximated as sample average.

(Gutmann and Hirayama, 2011)

Michael Gutmann

NCE and its Generalizations

25 / 27

Estimation of unnormalized models

(Gutmann and Hirayama, 2011)

I

Several estimation methods for unnormalized models are part

of the framework

I

I

I

I

I

I

Noise-contrastive estimation

Poisson-transform (Barthelmé and Chopin, 2015)

Score matching (Hyvärinen, 2005)

Pseudo-likelihood (Besag, 1975)

...

Noise-contrastive estimation:

Ψ(u) = u log u − (1 + u) log(1 + u)

(17)

νpy (u)

dµ(u) = px (u)du (18)

f (u) =

px (u)

(proof in appendix)

Michael Gutmann

NCE and its Generalizations

26 / 27

Conclusions

I

I

Point estimation for parametric models with intractable

partition functions (unnormalized models)

Noise contrastive estimation

I

I

I

I

Estimate the model by learning to classify between data and

noise

Consistent estimator, has MLE as limit

Applicable to large-scale problems

Bregman divergence as general framework to estimate

unnormalized models.

Michael Gutmann

NCE and its Generalizations

27 / 27

Appendix

Maximizer of the NCE objective function

Noise-contrastive estimation as member of the Bregman framework

Michael Gutmann

NCE and its Generalizations

1 / 10

Appendix

Maximizer of the NCE objective function

Noise-contrastive estimation as member of the Bregman framework

Michael Gutmann

NCE and its Generalizations

2 / 10

Proof of Equation (4)

For large sample sizes n and m, θ̂ satisfying

G (u; θ̂) =

m py (u)

n px (u)

is maximizing JnNCE (θ),

NCE

Jn

1

(θ) =

n

n

X

log P(C = 1|xi ; θ) +

i=1

m

X

!

log [P(C = 0|yi ; θ)]

i=1

without any normalization constraints.

Michael Gutmann

NCE and its Generalizations

3 / 10

Proof of Equation (4)

NCE

Jn

1

(θ) =

n

=

n

X

i=1

n

1X

n

log P(C = 1|xi ; θ) +

m

X

!

log [P(C = 0|yi ; θ)]

i=1

m

log P(C = 1|xi ; θ) +

t=1

m1 X

log [P(C = 0|yi ; θ)]

nm

t=1

Fix the ratio m/n = ν and let n → ∞ and m → ∞. By law of

large numbers, JnNCE converges to J NCE ,

J NCE (θ) = Ex (log P(C = 1|x; θ)) + νEy (log P(C = 0|y; θ)) (19)

With P(C = 1|x; θ) =

1

1+G (x;θ)

Michael Gutmann

and P(C = 0|y; θ) =

NCE and its Generalizations

G (y;θ)

1+G (y;θ)

...

4 / 10

... we have

J NCE (θ) = − Ex log(1 + G (x; θ)) + νEy log G (y; θ)−

νEy log (1 + G (y; θ))

(20)

Consider the objective J NCE (θ) as a function of G rather than θ,

J NCE (G ) = − Ex log(1 + G (x)) + νEy log G (y) − νEy log (1 + G (y))

Z

= − px (ξ) log(1 + G (ξ))dξ+

Z

ν py (ξ) (log G (ξ) − log(1 + G (ξ)))

Compute functional derivative δJ NCE /δG ,

px (ξ)

1

1

δJ NCE (G )

=−

+ νpy (ξ)

−

δG

1 + G (ξ)

G (ξ) 1 + G (ξ)

Michael Gutmann

NCE and its Generalizations

(21)

5 / 10

δJ NCE (G )

px (ξ)

1

1

=−

+ νpy (ξ)

−

δG

1 + G (ξ)

G (ξ) 1 + G (ξ)

1

px (ξ)

+ νpy (ξ)

=−

1 + G (ξ)

G (ξ)(1 + G (ξ))

!

=0

(22)

(23)

(24)

We obtain

px (ξ)

1

= νpy (ξ) ∗

∗

1 + G (ξ)

G (ξ)(1 + G ∗ (ξ))

G ∗ (ξ)px (ξ) = νpy (ξ)

py (ξ)

G ∗ (ξ) = ν

px (ξ)

m py (ξ)

=

n px (ξ)

(25)

(26)

(27)

(28)

Evaluating ∂ 2 J NCE /∂G 2 at G ∗ shows that G ∗ is a maximizer.

Michael Gutmann

NCE and its Generalizations

6 / 10

Appendix

Maximizer of the NCE objective function

Noise-contrastive estimation as member of the Bregman framework

Michael Gutmann

NCE and its Generalizations

7 / 10

Proof

In noise-contrastive estimation, we maximize

NCE

Jn

1

(θ) =

n

n

X

log P(C = 1|xi ; θ) +

i=1

m

X

!

log [P(C = 0|yi ; θ)]

i=1

Sample version of

J NCE (θ) = Ex (log P(C = 1|x; θ)) + νEy (log P(C = 0|y; θ))

With

P(C = 1|u; θ) =

1

1 + G (u; θ)

P(C = 0|u; θ) =

1

1 + 1/G (u; θ)

J NCE (θ) = −Ex log(1 + G (x; θ)) − νEy log(1 + 1/G (y; θ)) (29)

where G (u; θ) =

νpy (u)

φ(u;θ) .

Michael Gutmann

NCE and its Generalizations

8 / 10

The general cost function in the Bregman framework is

Z

J(g ) =

− Ψ(g ) + Ψ0 (g )g − Ψ0 (g )f dµ

(30)

With

Ψ(g ) = g log(g ) − (1 + g ) log(1 + g )

0

Ψ (g ) = log(g ) − log(1 + g )

(31)

(32)

we have

Z

J(g ) =

− g log(g ) + (1 + g ) log(1 + g )

+ log(g )g − log(1 + g )g

− log(g )f + log(1 + g )f dµ

Michael Gutmann

NCE and its Generalizations

(33)

9 / 10

Z

J(g ) =

Z

=

log(1 + g ) − log(g )f + log(1 + g )f dµ

(34)

log(1 + g ) + log(1 + 1/g )f dµ

(35)

With

f (u) =

νpy (u)

px (u)

g (u) = G (u; θ)

dµ(u) = px (u)du

(36)

we have

Z

J(G (.; θ)) =

px (u) log(1 + G (u; θ))du

+ νpy (u) log(1 + 1/G (u; θ))du

=−J

NCE

Michael Gutmann

(θ)

(37)

(38)

NCE and its Generalizations

10 / 10