Does Conflict of Interest Lead to Biased Coverage? Evidence

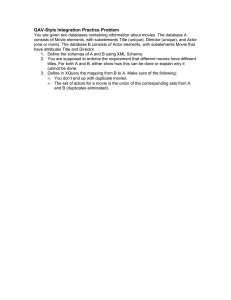
