Dan’s Multi-Option Talk

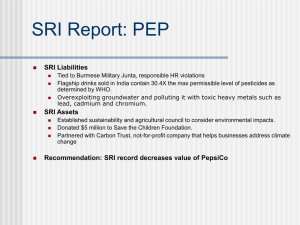

Dan’s Multi-Option Talk
• Option 1: HUMIDRIDE: Dan’s Trip to the East Coast
  – Whining: High
  – Duration: Med
  – Viruses: Low
• Option 2: T-Cell: Attacking Dan’s Cold Virus
  – Whining: Med
  – Duration: Low
  – Viruses: High
• Option 3: Model-Lite Planning: Diverse Multi-Option Plans and Dynamic Objectives
  – Whining: Low
  – Duration: High
  – Viruses: Low

Model-Lite Planning: Diverse Multi-Option Plans and Dynamic Objectives
Daniel Bryce, William Cushing, Subbarao Kambhampati
© 2007 SRI International

Questions
• When must the plan executor decide on their planning objective?
  – Before synthesis? Traditional model
  – Before execution? Similar to IR model: select plan from set of diverse, but relevant plans
  – During execution? Multi-Option Plans (subsumes previous)
  – At all? “Keep your options open”
• Can the executor change their planning objective without replanning?
• Can the executor start acting without committing to an objective?

Overview
• Diverse Multi-Option Plans
  – Diversity
  – Representation
  – Connection to Conditional Plans
  – Execution
• Synthesizing Multi-Option Plans
  – Example
  – Speed-ups
• Analysis
  – Synthesis
  – Execution
• Conclusion

Diverse Multi-Option Plans
• Each plan step presents several diverse choices
  – Option 1: Train(MP, SFO), Fly(SFO, BOS), Car(BOS, Prov.)
  – Option 1a: Train(MP, SFO), Fly(SFO, BOS), Fly(BOS, PVD), Cab(PVD, Prov.)
  – Option 2: Shuttle(MP, SFO), Fly(SFO, BOS), Car(BOS, Prov.)
  – Option 2a: Shuttle(MP, SFO), Fly(SFO, BOS), Fly(BOS, PVD), Cab(PVD, Prov.)
• Diversity is reliant on Pareto optimality
  – Each option is non-dominated
  – Diversity through Pareto front with high spread
[Figure: options O1, O1a, O2, and O2a plotted on cost and duration axes, alongside the plan graph whose branches lead to each option]
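
The non-domination requirement on options can be illustrated with a minimal sketch. The cost and duration figures below are hypothetical (the slides give no numbers), as are the names `Option`, `dominates`, and `pareto_front`, and the extra option `O3`; both objectives are assumed to be minimized.

```python
from typing import List, NamedTuple


class Option(NamedTuple):
    name: str
    cost: float      # hypothetical value, not from the slides
    duration: float  # hypothetical value, not from the slides


def dominates(a: Option, b: Option) -> bool:
    """a dominates b if a is no worse on both objectives and strictly better on one."""
    no_worse = a.cost <= b.cost and a.duration <= b.duration
    strictly_better = a.cost < b.cost or a.duration < b.duration
    return no_worse and strictly_better


def pareto_front(options: List[Option]) -> List[Option]:
    """Keep only the options that no other option dominates."""
    return [o for o in options if not any(dominates(p, o) for p in options)]


options = [
    Option("O1", 300.0, 9.0),   # Train, Fly, Car
    Option("O1a", 450.0, 8.0),  # Train, Fly, Fly, Cab
    Option("O2", 350.0, 8.5),   # Shuttle, Fly, Car
    Option("O2a", 500.0, 7.5),  # Shuttle, Fly, Fly, Cab
    Option("O3", 520.0, 9.5),   # hypothetical extra option, worse on both objectives
]

front = pareto_front(options)
print([o.name for o in front])
```

Under these assumed values the four options from the slide trade cost against duration, so all four survive, while the invented `O3` is filtered out.
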

Dynamic Objectives
[Figure: plan graph starting with Train(MP, SFO) or Shuttle(MP, SFO), continuing through Fly(SFO, BOS), and branching to options O1, O1a, O2, and O2a]
• Multi-Option Plans are a type of conditional plan
  – Conditional on the user’s objective function
  – Allow the objective function to change
  – Ensure that the executor, irrespective of their objective function, will have non-dominated options

Executing Multi-Option Plans
• Local action choice corresponds to multiple options
• Option values change at each step
[Figure: cost versus duration plots showing the options still available (among O1, O1a, O2, and O2a) at successive steps of the plan graph]

Multi-Option Conditional Probabilistic Planning
• (PO)MDP setting: (belief) state space search
  – Stochastic actions, observations, uncertain initial state, loops
  – Two objectives: expected plan cost, probability of plan success. Traditional reward functions are a linear combination of the above. Assume objective fn.
• Extend LAO* to multiple objectives (Multi-Option LAO*)
  – Each generated (belief) state has an associated Pareto set of “best” sub-plans
  – Dynamic programming (state backup) combines successor state Pareto sets
    ♦ Yes, it’s exponential time per backup per state
    ♦ There are approximations
  – Basic algorithm: while we do not have a good plan S
    ♦ ExpandPlan S
    ♦ RevisePlan S

Example of State Backup
[Figure: example of a state backup]

Search Example – Initially
Initialize the root Pareto set with the null plan and heuristic estimate.
[Figure: root Pareto set plotted as Pr(G) against cost C]

Search Example – 1st Expansion
Expand the root node and initialize the Pareto sets of its children with the null plan and heuristic estimate.
[Figure: root node with actions a1 and a2; each node's Pareto set plotted as Pr(G) against cost C]

Search Example – 1st Revision
Recompute the Pareto set for the root; the best heuristic point is through a1.
[Figure: search graph with the root Pareto set point labeled a1]

Search Example – 2nd Expansion
Expand the children of a1 and initialize their Pareto sets with the null plan and heuristic estimate. Both children satisfy the goal with non-zero probability.
[Figure: search graph with actions a4 and a3 added below a1; the new nodes show goal probabilities 0.7 and 0.5]

Search Example – 2nd Revision
Recompute the Pareto sets of both expanded nodes and the root node. There is a feasible plan a1, [a4|a3] that satisfies the goal with 0.66 probability and cost 2. The heuristic estimate indicates that extending a1, [a4|a3] will lead to a plan that satisfies the goal with 1.0 probability.
[Figure: search graph with root Pareto set points labeled a1,[a4|a3]; the values shown (0.8, 0.2, 0.7, 0.5) are consistent with 0.8 × 0.7 + 0.2 × 0.5 = 0.66]

Search Example – 3rd Expansion
Expand the plan to include a7.
There is no applicable action after a3.
[Figure: search graph with a7 added after a4; the new node shows goal probability 0.9]

Search Example – 3rd Revision
Recompute all Pareto sets that are ancestors of expanded nodes. The heuristic for plans extended through a3 is higher because there is no applicable action. The heuristic at the root node changes to plans extended through a2.
[Figure: search graph with root Pareto set points labeled a2, a1,[a4, a7|a3], and a1,[a4|a3]]

Search Example – 4th Expansion
Expand the plan through a2; one expanded child satisfies the goal with 0.1 probability.
[Figure: search graph with actions a5 and a6 added below a2]

Search Example – 4th Revision
Recompute the Pareto sets of the ancestors of expanded nodes. Plan a2, a5 is dominated at the root.
[Figure: search graph with root Pareto set points labeled a2,a6, a1,[a4, a7|a3], a1,[a4|a3], and a2,a5]

Search Example – 5th Expansion
Expand the plan through a6.
[Figure: search graph with action a8 added after a6; the new node shows goal probability 0.6]

Search Example – 5th Revision
Recompute Pareto sets. The plans (a2, a6, a8) and (a2, a5) are dominated at the root.
[Figure: search graph with root Pareto set points labeled a2,a6,a8, a1,[a4, a7|a3], a1,[a4|a3], and a2,a5]

Search Example – Final
[Figure: final search graph over actions a1 through a8, with each node's Pareto set plotted as Pr(G) against cost C]

Speed-ups
• ε-domination [Papadimitriou & Yannakakis, 2003]
• Randomized node expansions
  – Simulate partial plan to expand a single node
• Reachability heuristics
  – Use the McLUG (CSSAG)

ε-domination
• Check domination: each point is either non-dominated or dominated
• Each hyper-rectangle has a single point
• Multiply each objective by (1+ε)
• x’/x = 1+ε
[Figure: points x and x’ on a grid of hyper-rectangles, plotted as 1-Pr(G) against cost]

Synthesis Results
[Figure: synthesis results]

Execution Results
• Random Option: sample option, execute action
• Keep Options Open
  – Most Options: execute action in most options
  – Diverse Options: execute action in most diverse set of options

Summary & Future Work
• Summary
  – Multi-Option Plans let the executor delay or change commitments to objective functions
  – Multi-Option Plans help the executor understand alternatives
  – Multi-Option Plans passively enforce diversity through Pareto set approximation
• Future Work
  – Synthesis
    ♦ Proactive diversity: guide search to broaden the Pareto set
    ♦ Speed-ups: alternative Pareto set representation, standard MDP tricks
  – Execution
    ♦ Option lookahead: how will the set of options change?
    ♦ Meta-objectives: diversity, decision delay
  – Model-Lite Planning
    ♦ Unspecified objectives (not just unspecified objective function)
    ♦ Objective function preference elicitation

Final Options
• Option 1: Questions
• Option 2: Criticisms
• Option 3: Next Talk!
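
The ε-domination speed-up mentioned in the talk can be sketched roughly as follows. This is only one reading of the idea: it uses a pairwise test (x ε-dominates y when every objective of x is at most (1+ε) times that of y) rather than the hyper-rectangle grid named on the slide, and it assumes both objectives (cost and 1-Pr(G)) are non-negative and minimized. The function names and the sample points are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (cost, 1 - Pr(G)), both minimized, both >= 0


def eps_dominates(x: Point, y: Point, eps: float) -> bool:
    """x eps-dominates y if each objective of x is within a (1 + eps) factor of y's."""
    return all(xi <= (1.0 + eps) * yi for xi, yi in zip(x, y))


def eps_pareto(points: List[Point], eps: float) -> List[Point]:
    """Greedily keep a subset such that every input point is eps-dominated by a kept point."""
    kept: List[Point] = []
    for p in sorted(points):  # visit cheaper points first
        if not any(eps_dominates(q, p, eps) for q in kept):
            kept.append(p)
    return kept


# Hypothetical (cost, 1 - Pr(G)) points; not taken from the talk's results.
points = [(2.0, 0.34), (2.1, 0.33), (3.0, 0.10), (3.1, 0.10), (5.0, 0.40)]
approx = eps_pareto(points, eps=0.1)
print(approx)
```

With ε = 0.1, near-duplicate points collapse onto a single representative, which is how the approximation keeps Pareto sets small during backups; a larger ε trades accuracy for smaller sets.
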