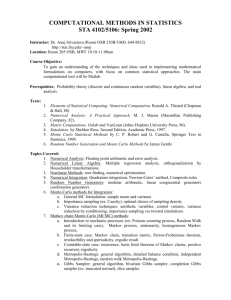

CONSTRUCTION OF STATIONARY TIME SERIES VIA THE GIBBS SAMPLER No 595


CONSTRUCTION OF STATIONARY TIME SERIES

VIA THE GIBBS SAMPLER

WITH APPLICATION TO VOLATILITY MODELS

Michael K Pitt

and

Stephen G Walker

No 595

WARWICK ECONOMIC RESEARCH PAPERS

DEPARTMENT OF ECONOMICS

Construction of Stationary Time Series via the Gibbs Sampler

with Application to Volatility Models

MICHAEL K PITT and STEPHEN G WALKER

University of Warwick and University of Bath

M.K.Pitt@warwick.ac.uk s.g.walker@maths.bath.ac.uk

Abstract

In this paper, we provide a method for modelling stationary time series. We allow the family of marginal densities for the observations to be specified. Our approach is to construct the model with a specified marginal family and build the dependence structure around it. We show that the resulting time series is linear with a simple autocorrelation structure. In particular, we present an original application of the Gibbs sampler. We illustrate our approach by fitting a model to time series count data with a marginal Poisson-gamma density.

Some key words: ARCH, Exponential family, GARCH, Gibbs sampler, Filtering, Markov chains, Markov chain Monte Carlo, Stochastic Volatility

1 INTRODUCTION

In this paper we focus on a general method for constructing stationary time series models with marginals of choice. The construction of such time series is based on the Gibbs sampler. Although the Gibbs sampler is principally used for Bayesian inference (see, for example, Smith & Roberts (1993)), we intend to use it to design the model. Dynamic models are frequently derived in terms of the conditional updates. Whilst this construction is appealing, the marginal density (in cases where the time series is strictly stationary) of the observations belongs to a family of densities which is typically unknown. Our construction allows this family to be specified.

A key paper on the construction of stationary time series with specified marginal densities is Lawrence & Lewis (1985), and the debate about the starting point for modelling time series appears in the discussion of that paper. There is also an interesting discussion of stationary time series in Joe (1996) and in McDonald & Zucchini (1997, Chapter 1). The current state of the art in the construction of such models, from a probabilistic perspective, may be found in Jorgensen & Song (1998).

In a manner similar to Lawrence & Lewis (1985), we consider strictly stationary time series with a known marginal density and with linear expectations. Statistically this has several advantages. We can assign the correct stationary density to the initial observation, leading to efficient likelihood estimation. The linear expectations also enable the autocorrelations of the series to be easily obtained. In addition, point forecasting, several steps ahead, is also possible due to the linear expectations. The marginal interpretation of the model, particularly if exogenous covariates are included, is greatly facilitated by the fact that the marginal density of our observations is known. As for standard Gaussian dynamic models, the introduction of time dependence provides a direct generalisation of the assumptions of independence whilst retaining a specified density. These advantages will be made more explicit later in the paper.

In applications, there may be reasons for assuming a particular marginal density. Lawrence & Lewis (1985) consider a marginal gamma density for wind speeds, as this appears to fit the data (once detrended) well marginally. For stationary processes, this forms a coherent modelling strategy as the marginal density may be suggested by a histogram and the dynamics, suggested by the correlogram, may then be incorporated into the model. In financial applications, the marginal density of stock and exchange rate returns is of interest. It is often argued, see for instance Campbell et al. (1997, Chapter 1), that whilst the first few moments (including the second moment) of returns are finite, many of the higher moments may not be. In particular, Praetz (1972) and Blattberg & Gonedes (1974) both model returns as independently and identically distributed, arguing that the scaled Student-t distribution is appropriate as a marginal model for both stock price indices and individual stock prices. This marginal density will be exploited in Sections 2.5 and 3.

The structure of this paper is as follows. In Section 2 we derive first-order autoregressive processes with specified marginal families. These processes may be used to directly model our observations in an analogous way to Gaussian autoregressive models. Alternatively, we may use the process to specify a subordinate or latent structure. The models therefore belong to the class of non-Gaussian state space models, see West & Harrison (1997). Within this section, we consider general autoregressive results for the exponential dispersion family, together with two examples from this family: Poisson and gamma marginals. We also consider inverse-gamma marginals. The stochastic volatility example, a non-Gaussian state space model, is considered in Section 2.5.

In Section 3, we examine the construction of models of ARCH(1)¹ type. For the changing variance model, we obtain a closed form update $f(y_{t+1} \mid y_t)$. The model is linear in $y_t^2$ and the marginal density of $y$ is a scaled Student-t distribution. Other models of ARCH(1) structure are examined and a general result for the exponential family is established in Section 3.3. Section 4 deals with models of GARCH(1,1) type, allowing direct feedback of the observations from the preceding time step. In Section 5, a non-Gaussian state space model is proposed for modelling a series of dependent counts. This model, introduced in Section 2, is estimated and assessed using efficient Markov chain Monte Carlo sampling and recent particle filtering methods respectively. Finally, we conclude in Section 6.

Throughout this paper, we provide an innovative approach to introducing strictly stationary processes. Some of these processes, such as the Poisson process of Section 2.2, are known but most, to our knowledge, are not. In particular, we attempt to unify the derivation of such models. Before considering the first-order autoregressive processes of Section 2, we outline our approach in the following section, Section 1.1, by examining some general properties of the Gibbs sampler. In particular, we show that the standard Gaussian AR(1) process may be derived via the Gibbs sampler.

1.1 Gibbs sampler

In this paper we use the Gibbs sampler as a tool to construct models. The Gibbs sampler has

been widely used in recent years for Bayesian inference, the literature beginning with Gelfand &

Smith (1990). Reviews of the Gibbs sampler may be found in Tierney (1994), Chib & Greenberg

(1995) and Gilks et al. (1996).

Suppose we wish to generate observations $y_1, y_2, \ldots$ from a Markov chain which starts in equilibrium and has stationary density $f_Y(y)$. In order to do this we introduce an auxiliary variable $x$ and consider the joint density

$$f_{X,Y}(x, y) = f_Y(y)\, f_{X|Y}(x \mid y).$$

Clearly the marginal density for $y$ is $f_Y(y)$. Also,

$$f_{Y|X}(y \mid x) = \frac{f_{X,Y}(x, y)}{f_X(x)}.$$

If we generate $y_1 \sim f_Y(y)$, then $x_1 \sim f_{X|Y}(x \mid y_1)$, $y_2 \sim f_{Y|X}(y \mid x_1)$ and so on, then $\{(x_t, y_t)\}$ will yield a stationary sequence from $f_{X,Y}(x, y)$. In particular, $\{y_t\}$ will form a stationary Markov sequence from $f_Y(y)$. The sequence of sampling recursively from each conditional density is known as the Gibbs sampler. It may be seen that the transition density of our Markov chain for $y$ is

$$f(y_{t+1} \mid y_t) = \int f_{Y|X}(y_{t+1} \mid x)\, f_{X|Y}(x \mid y_t)\, dx.$$

¹ Autoregressive conditional heteroskedastic models; see Engle (1982).

In this paper, we construct a Markov chain for $y_t$ which leads to plausible time series models, which may then be estimated and tested. We regard the $y_t$ as observations. The "auxiliary" variable $x$ plays no role in the model, except as a tool for obtaining a transition density $f(y_{t+1} \mid y_t)$ which ensures a marginal density of a specified form. The usual properties of the Gibbs sampler remain; in particular, the Markov chain is reversible.

As a simple example, let us consider the Gaussian density for our marginal. Thus we wish to form a Markov chain $y_t$ with stationary density $f_Y(y) = N(y; \mu, \sigma^2)$. We introduce an auxiliary variable $x$ and let $f_{X|Y}(x \mid y) = N(x; \alpha y, 1)$. Thus

$$f_{X,Y}(x, y) = f_Y(y)\, f_{X|Y}(x \mid y) = N(y; \mu, \sigma^2)\, N(x; \alpha y, 1). \qquad (1.1)$$

Therefore,

$$f_{Y|X}(y \mid x) = N\{y;\ \rho x/\alpha + (1-\rho)\mu,\ \rho/\alpha^2\},$$

where $\rho = \alpha^2\sigma^2/(1 + \alpha^2\sigma^2)$. If we consider a Gibbs sampler generating $y_1 \sim f_Y(y)$, $x_1 \sim f_{X|Y}(x \mid y_1)$, $y_2 \sim f_{Y|X}(y \mid x_1)$ and so on, then the transition density for $y$ is

$$f(y_{t+1} \mid y_t) = \int f_{Y|X}(y_{t+1} \mid x)\, f_{X|Y}(x \mid y_t)\, dx = N\{y_{t+1};\ (1-\rho)\mu + \rho y_t,\ (1-\rho^2)\sigma^2\}.$$

That is,

$$y_{t+1} = (1-\rho)\mu + \rho y_t + \varepsilon_t, \qquad (1.2)$$

where $\varepsilon_t \sim N\{0, (1-\rho^2)\sigma^2\}$ and $y_1 \sim N(\mu, \sigma^2)$. This is clearly the standard Gaussian AR(1) process. In this case, our approach of constructing a stationary Markov chain for a Gaussian target density is not necessary. However, the construction of stationary autoregressive processes without the use of the Gibbs sampler would be difficult for many of the examples we consider later on.
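The Gaussian construction can be checked by simulation. The following is a minimal sketch in Python (standard library only) and is not the authors' code. It assumes the auxiliary draw is $x \mid y \sim N(\alpha y, 1)$, so that $\rho = \alpha^2\sigma^2/(1+\alpha^2\sigma^2)$; the function names `gibbs_gaussian_ar1` and `lag1_autocorr` and all parameter values are illustrative assumptions.

```python
import math
import random


def gibbs_gaussian_ar1(n, mu, sigma2, alpha, seed=0):
    """Simulate y_1..y_n by alternating x | y ~ N(alpha*y, 1) and y | x."""
    rng = random.Random(seed)
    rho = alpha ** 2 * sigma2 / (1.0 + alpha ** 2 * sigma2)
    cond_sd = math.sqrt(rho) / abs(alpha)  # sd of y | x, i.e. sqrt(rho / alpha^2)
    y = rng.gauss(mu, math.sqrt(sigma2))   # start in equilibrium
    path = [y]
    for _ in range(n - 1):
        x = rng.gauss(alpha * y, 1.0)      # auxiliary variable given y_t
        y = rng.gauss(rho * x / alpha + (1.0 - rho) * mu, cond_sd)
        path.append(y)
    return path, rho


def lag1_autocorr(series):
    """Sample lag-one autocorrelation of a sequence."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series)
    cov = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    return cov / var
```

With, say, `gibbs_gaussian_ar1(50000, mu=1.0, sigma2=2.0, alpha=1.0)`, the sample mean, variance and lag-one autocorrelation of the path should be close to $\mu$, $\sigma^2$ and $\rho = 2/3$, in line with the AR(1) form of the transition.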

2 AUTOREGRESSIVE PROCESSES

In this section, we present some examples of the construction of stationary Markov chains via the Gibbs sampler. We consider models of the form

$$y_{t+1} = (1-\rho)\mu + \rho y_t + \varepsilon_t, \qquad (2.1)$$

where $\varepsilon_t$ is a martingale difference sequence. We show that the model in (2.1) may be used to generate any distribution which is a member of the exponential family. The general approach for the exponential dispersion family is discussed in Section 2.1. Two examples which lie in this family, the Poisson and gamma, are then considered. We also focus on the inverse-gamma marginal in Section 2.4.

2.1 Exponential dispersion family

Recently, Joe (1996) constructed an AR(1) model whose marginal distribution is a member of the infinitely divisible convolution-closed exponential family (see also Jorgensen & Song (1998)). Here we demonstrate that the construction has a Gibbs sampler representation. Consider the exponential dispersion model with density function

$$f_Y(y; \theta, \lambda) = c(y; \lambda) \exp\{\theta y - \lambda\kappa(\theta)\},$$

where $y \in \mathbb{R}$ and $\lambda \in \mathbb{R}^+$. We introduce an auxiliary variable $x$, as in (1.1), and define the joint density

$$f_{X,Y}(x, y) = f_Y(y; \theta, \lambda)\, f_{X|Y}(x; \lambda_1, \lambda_2, y),$$

where

$$f_{X|Y}(x; \lambda_1, \lambda_2, y) = \frac{c(x; \lambda_1)\, c(y - x; \lambda_2)}{c(y; \lambda)}$$

and $\lambda_1 = \rho\lambda$, $\lambda_2 = (1-\rho)\lambda$ with $0 < \rho < 1$. The full conditional $f_{X|Y}(\cdot \mid \cdot)$ is given above, and we write a random variable from this conditional density as $A(y; \rho)$, which is a 'thinned' variable (see Joe (1996) and Jorgensen & Song (1998) for further details). The full conditional $f_{Y|X}(\cdot \mid \cdot)$ can be obtained from the constructive form

$$y = x + \varepsilon,$$

where $\varepsilon$, independent of $x$, has density function $f_Y(\cdot\,; \theta, (1-\rho)\lambda)$. Consequently, the Gibbs sampler can be constructed via

$$y_t = A_t(y_{t-1}; \rho) + \varepsilon_t,$$

which is precisely the construction of Joe (1996). According to Joe (1996), $E\{A_t(y; \rho)\} = \rho y$, so

$$E(y_{t+1} \mid y_t) = \rho y_t + (1-\rho)\lambda\kappa'(\theta),$$

and the autocorrelation function at lag $\tau$ is given by $\rho^\tau$. In this respect, it is seen that our approach for constructing first-order stationary processes based on the Gibbs sampler generalises the approach of Joe (1996).

2.2 Poisson marginals

Here we consider the joint density, through the introduction of an auxiliary variable $x$, given by

$$f_{Y,X}(y, x) = f_Y(y)\, f_{X|Y}(x \mid y) = \mathrm{Po}(y; \lambda)\, \mathrm{bi}(x; y, p),$$

where bi represents the binomial distribution and Po the Poisson distribution. Hence, constructively, $y_{t+1} = x + \mathcal{P}(\lambda\bar{p})$, where $\bar{p} = 1 - p$ and $\mathcal{P}(\lambda)$ denotes a Poisson random variable with mean $\lambda$. Hence, if $B(n, p)$ denotes a binomial random variable with parameters $n$ and $p$, then

$$y_{t+1} = B(y_t, p) + \mathcal{P}(\lambda\bar{p}).$$

This model has an easily interpreted form as a "simple-death with immigration" process. If the population at time $t$ is $y_t$, the population at time $t+1$ is the sum of the surviving members of the population from $y_t$, that is $B(y_t, p)$, and the new members who survive a unit of time, which is the $\mathcal{P}(\lambda\bar{p})$ random variable.
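The death-with-immigration recursion is simple to simulate. The sketch below is illustrative only and uses the Python standard library; the names `poisson_draw`, `poisson_ar1` and `lag1_autocorr` are assumptions, `lam` stands for the Poisson marginal mean and `p` for the binomial survival probability. Binomial thinning is done by summing Bernoulli draws, and the Poisson sampler is Knuth's multiplication method, which is adequate only for modest means.

```python
import math
import random


def poisson_draw(rng, mean):
    """Knuth's multiplication method; fine for the modest means used here."""
    limit = math.exp(-mean)
    count, prod = 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count


def poisson_ar1(n, lam, p, seed=0):
    """y_{t+1} = B(y_t, p) + Poisson(lam * (1 - p)), started from Po(lam)."""
    rng = random.Random(seed)
    y = poisson_draw(rng, lam)
    path = [y]
    for _ in range(n - 1):
        survivors = sum(rng.random() < p for _ in range(y))  # binomial thinning
        y = survivors + poisson_draw(rng, lam * (1.0 - p))   # immigration term
        path.append(y)
    return path


def lag1_autocorr(series):
    """Sample lag-one autocorrelation of a sequence."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series)
    cov = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    return cov / var
```

Because the conditional mean is $p\,y_t + \lambda(1-p)$, a long simulated path should have mean and variance near $\lambda$ and lag-one autocorrelation near $p$.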

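2.3 Gamma marginals

Here we look for a stationary sequence with a gamma marginal distribution, say $\mathrm{ga}(y; \alpha, \beta)$. We consider the joint density

$$f_{Y,X}(y, x) = f_Y(y)\, f_{X|Y}(x \mid y) = \mathrm{ga}(y; \alpha, \beta)\, \mathrm{Po}(x; \phi y).$$

Then

$$y_{t+1} \sim \mathrm{ga}\{\alpha + \mathcal{P}(\phi y_t),\ \beta + \phi\},$$

where, again, $\mathcal{P}(\lambda)$ denotes a Poisson random variable with mean $\lambda$. Therefore,

$$E(y_{t+1} \mid y_t) = (1-\rho)\mu + \rho y_t,$$

where $\rho = \phi/(\beta + \phi)$ and $\mu = \alpha/\beta$. It is immediate that the autocorrelation function of $y_t$ at lag $\tau$ is $\rho^\tau$.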
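A short simulation makes the gamma autoregression concrete. This is a hedged sketch using only the Python standard library, not the authors' implementation. It assumes the gamma marginal has shape `alpha` and rate `beta`, and that the auxiliary variable is Poisson with mean `phi * y`, so the persistence is `phi / (beta + phi)`. The names `poisson_draw`, `gamma_ar1` and `lag1_autocorr` are illustrative.

```python
import math
import random


def poisson_draw(rng, mean):
    """Knuth's multiplication method; fine for the modest means used here."""
    limit = math.exp(-mean)
    count, prod = 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count


def gamma_ar1(n, alpha, beta, phi, seed=0):
    """y_{t+1} ~ ga(alpha + Poisson(phi * y_t), beta + phi), y_1 ~ ga(alpha, beta)."""
    rng = random.Random(seed)
    # random.gammavariate takes (shape, scale), so rates are inverted.
    y = rng.gammavariate(alpha, 1.0 / beta)
    path = [y]
    for _ in range(n - 1):
        z = poisson_draw(rng, phi * y)                        # auxiliary count
        y = rng.gammavariate(alpha + z, 1.0 / (beta + phi))   # conjugate update
        path.append(y)
    return path


def lag1_autocorr(series):
    """Sample lag-one autocorrelation of a sequence."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series)
    cov = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    return cov / var
```

For example, `gamma_ar1(20000, alpha=2.0, beta=1.0, phi=3.0)` should give a path with mean near $\alpha/\beta = 2$ and lag-one autocorrelation near $0.75$.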

2.4 Inverse Gamma marginals

Here we consider a stationary sequence with an inverse-gamma marginal distribution, $\mathrm{Ig}(y; \alpha, \beta)$. Note that this is not a member of the exponential family. Nevertheless, we can introduce a conjugate style auxiliary variable, $x$. Consider the joint density

$$f_{Y,X}(y, x) = f_Y(y)\, f_{X|Y}(x \mid y) = \mathrm{Ig}(y; \alpha, \beta)\, \mathrm{ga}(x; \phi, y^{-1}).$$

We obtain $f_{Y|X}(y_{t+1} \mid x) = \mathrm{Ig}(y_{t+1}; \alpha + \phi, \beta + x)$ and hence

$$E(y_{t+1} \mid y_t) = \frac{\beta + \phi y_t}{\alpha + \phi - 1} = (1-\rho)\mu + \rho y_t,$$

where $\rho = \phi/(\alpha + \phi - 1)$ and $\mu = \beta/(\alpha - 1)$ is the mean of the marginal distribution. The autocorrelation function of $y_t$ is given by $\rho(\tau) = \rho^\tau$.

Consequently, we have a first-order autoregressive model which is linear in expectation and has inverse-gamma marginals. This provides a useful evolution equation for the variance in a stochastic volatility model.

2.5 State space form

It is possible to use our autoregressive models as latent or subordinated processes for the evolution of a parameter. These models are therefore of a state space type; see, for example, West & Harrison (1997) and Harvey (1993). Examples of such models include the stochastic volatility model, see Shephard (1996), and the conditional Poisson models considered by Durbin & Koopman (1997). Specifically, we are considering models of the type

$$y_t \sim f(y_t \mid s_t), \qquad s_{t+1} \sim f(s_{t+1} \mid s_t),$$

so that the observation $y_t$ is independent conditional upon the corresponding state $s_t$. Here our notation has changed. We keep $y$ as the observation variable. Now $s$ is the state parameter, which is unobserved and, for the models we consider, is a Markov process of the kind considered in Section 2.1, where it was labelled $y$. We shall illustrate by examining the construction of a stochastic volatility model. In Section 5, we consider the estimation of a state space model for count data.

The discrete time stochastic volatility model, see Jacquier et al. (1994) and Shephard (1996), has been used to model the clustering of variance over time for returns. The model assumes that the log variance follows a Gaussian autoregressive process. A notable exception to this assumption in continuous time is given by Barndorff-Nielsen & Shephard (2001). The implicit assumption of the standard model is that marginally the variance is log-normally distributed. The competing GARCH models have linear dynamics in the variance but the stationary density is unknown.

To illustrate the latent models obtainable from the constructions described in Section 2, let us consider the volatility model, writing $s = \sigma^2$,

$$y_t \sim N(0, \sigma_t^2), \qquad \sigma_{t+1}^2 = (1-\rho)\mu + \rho\sigma_t^2 + \eta_t,$$

where the evolution for $\sigma_t^2$ is as defined in Section 2.4. In fact, we use a special case of the inverse-gamma model, $\mathrm{Ig}(\nu/2, \beta^2\nu/2)$. The point here is that marginally $y_t \sim t_\nu$, a scaled Student-t distribution. Since $y_t \sim N(0, \sigma_t^2)$, we have $y_t^2 = \sigma_t^2\varepsilon_t^2$, where $\varepsilon_t^2 \sim \chi_1^2$. Thus $y_t^2 = \sigma_t^2 + v_t$, where $v_t = y_t^2 - \sigma_t^2 = \sigma_t^2(\varepsilon_t^2 - 1)$. Consequently, we may obtain the autocorrelation function of $y_t^2$, see Harvey (1993, page 31), as

$$\rho(\tau) = \frac{\rho^\tau}{1 + \sigma_V^2/\sigma_1^2},$$

where $\rho = 2\phi/(\nu + 2\phi - 2)$, $\sigma_1^2 = 2\beta^4\nu^2/\{(\nu-2)^2(\nu-4)\}$ and $\sigma_V^2 = 2\beta^4\nu^2/\{(\nu-4)(\nu-2)\}$, leading to $\rho(\tau) = \rho^\tau/(\nu - 1)$, provided $\nu > 4$, the requirement for the variance of $\sigma_t^2$ to exist.

The autocorrelation function clearly has an interpretable form. As the degrees of freedom $\nu \to \infty$, giving thinner tails (until $y_t$ is marginally Gaussian), $\rho$ becomes smaller, as does the baseline multiplier $(\nu - 1)^{-1}$. As $\nu$ becomes smaller, the baseline autocorrelation becomes larger. The autocorrelation function of $y^2$ is equivalent to that of an ARMA(1,1) model. This is the same as for the highly successful GARCH(1,1) model. However, our model is more akin to the stochastic volatility models, in the sense that we have a separate noise term in the evolution of the variance. In the stochastic volatility (1) model, however, the marginal distribution is a mixture of normals, with the log-variance being normal.

2.6 Estimation

The estimation of the autoregressive processes is not particularly difficult from a Bayesian perspective, since we can sample the auxiliary variables given the parameters and the data. Then we can sample the parameters given both the auxiliary variables and the data. Hence, we can use Markov chain Monte Carlo methods for sampling from posterior distributions. The estimation methods for non-Gaussian state space models, however, are non-trivial. The posterior correlation between the states and the parameters can cause Markov chain Monte Carlo methods to mix poorly. Blocking methods, such as those used in Shephard & Pitt (1997), which sample large parts of the state space simultaneously can, to some extent, circumvent such problems. For example, in the stochastic volatility case in Section 2.5, we would wish to sample from the conditional posterior of a block of states, say $f(\sigma_t, \ldots, \sigma_{t+k} \mid \sigma_{t-1}, \sigma_{t+k+1}, \theta, y_t, \ldots, y_{t+k})$, where $\theta$ collects the three fixed parameters of the model. In Shephard & Pitt (1997), a Gaussian proposal formed by taking a second order Taylor expansion around the mode of this conditional posterior is used as an independence Metropolis-Hastings proposal. However, because the state evolution is no longer Gaussian in the models we are now considering, it is unlikely that large blocks would be accepted frequently. The classical estimation approach via importance sampling (Durbin & Koopman (1997)) would encounter similar problems. Therefore, we employ a different Markov chain Monte Carlo strategy in this paper. This is illustrated in Section 5 for count data arising from a Poisson distribution whose intensity parameter is determined by a gamma autoregression.

3 ARCH(1)-TYPE MODELS

Here we consider the ARCH(1) class of models, first introduced for changing variance by Engle (1982). It is generally assumed that the conditional distribution of the measurement, $y$, is known, conditional on the parameter $\theta$, for all time points $t$, say $f(y_t \mid \theta_t)$. We consider a Markov chain of the type shown by the top graph of Figure 1, where $\sigma^2$ replaces $\theta$. Recent ideas concerning Markov chains of ARCH type, particularly for modelling volatility, appear in Barndorff-Nielsen (1997) and Vidoni (1998). In neither of these cases is there a known stationary form for the evolution.

The simplest ARCH model is the ARCH(1). This has an explicit conditional density update, given by

$$y_{t+1} \sim N(0, a + b y_t^2), \qquad a > 0,\ b \ge 0,$$

the parameter constraints ensuring that the variance remains positive. This can be considered in two stages, where $y_t \sim N(0, \sigma_t^2)$ and $\sigma_{t+1}^2 = a + b y_t^2$. This updating allows the dependence representation shown by the top graph of Figure 1. However, it should be emphasised that the update for $\sigma_{t+1}^2 \mid y_t$ is deterministic rather than stochastic. The attractive statistical feature of the ARCH class of models is that the likelihood is available directly via the prediction decomposition. Estimation and testing are therefore quite straightforward. The stationary density for the ARCH(1) model, when it exists, is unknown. Nelson (1990) showed that the condition necessary for strict stationarity of the ARCH(1) model is $b < 3.5622$. The ARCH class is generally thought not to allow for sufficient kurtosis to adequately model the marginal density of financial data; see, for example, Bollerslev (1987), and the discussion of Shephard (1996, Section 1.2.2). Bollerslev (1987) notes from the literature that "the general conclusion to emerge from most of these studies is that speculative price changes ... are well described by a unimodal symmetric distribution with fatter tails than the normal". In particular, Praetz (1972) and Blattberg & Gonedes (1974) argue that the scaled Student-t distribution is appropriate as a marginal model for both stock price indices and individual stock prices. Bollerslev (1987) introduced a Student-t for the conditional density of the ARCH model. However, this, of course, does not lead to a marginal Student-t distribution.

The ARCH(1) volatility model provides the first example of our approach for a class of models for which the conditional density $f(y_t \mid \theta_t)$ is regarded as known. After consideration of a duration model of this type, we shall, in Section 3.3, provide a version for the exponential family.

General approach

In the general form of the ARCH(1) model we consider here we have our observation $y_t$ depending only upon a time varying parameter $s_t = \theta_t$. We now form our model in a similar manner to the methodology of Section 1.1. We consider the joint density

$$f_{\theta,Y}(\theta, y) = f_{Y|\theta}(y \mid \theta)\, f_\theta(\theta).$$

In this case, the marginal density for $y$ from this joint density is simply $f_Y(y)$, where

$$f_Y(y) = \int f_{Y|\theta}(y \mid \theta)\, f_\theta(\theta)\, d\theta.$$

Let us suppose we also have the form of the conditional density,

$$f_{\theta|Y}(\theta \mid y) = \frac{f_{Y|\theta}(y \mid \theta)\, f_\theta(\theta)}{f_Y(y)}.$$

As in Section 1.1 we consider the Gibbs sampler approach as generating $y_0 \sim f_Y(y)$, $\theta_1 \sim f_{\theta|Y}(\theta \mid y_0)$, $y_1 \sim f_{Y|\theta}(y \mid \theta_1)$ and so on. Then $\{(\theta_t, y_t)\}$ will yield a stationary sequence from $f_{\theta,Y}(\theta, y)$. In particular $\{y_t\}$ will yield a stationary sequence from $f_Y(y)$. This principle forms the basis of the following results.

3.1 Volatility model

We consider the joint density

$$f(y, \sigma^2) = f(y \mid \sigma^2)\, f(\sigma^2) = N(y; 0, \sigma^2)\, \mathrm{Ig}(\sigma^2; \nu/2, \beta^2\nu/2).$$

Marginally, $f_Y(y) = t_\nu(y; 0, \beta^2)$, a scaled Student-t distribution. We also have the conditional density $f(\sigma^2 \mid y) = \mathrm{Ig}(\sigma^2; \nu/2 + 1/2, \beta^2\nu/2 + y^2/2)$.

Following the Gibbs sampler construction, if $\sigma_0^2 \sim \mathrm{Ig}(\nu/2, \beta^2\nu/2)$ and we then cycle through the two full conditionals, for $t = 1, \ldots, n$, we have constructed a stationary process for $y_t$, the unconditional density being a scaled Student-t; that is, marginally $y_t \sim t_\nu$. This Gibbs sequence can itself be viewed as a model with a feedback interpretation². Since $\{\ldots, y_t, \sigma_t^2, y_{t+1}, \ldots\}$ is a Markov chain, we can integrate $\sigma_t^2$ out to calculate the transition density

$$f(y_{t+1} \mid y_t) = \int f(y_{t+1} \mid \sigma_t^2)\, f(\sigma_t^2 \mid y_t)\, d\sigma_t^2,$$

obtaining

$$y_{t+1} = \sqrt{\frac{\beta^2\nu + y_t^2}{1 + \nu}}\; s_{\nu+1}, \qquad (3.1)$$

where $s_{\nu+1} \sim t_{\nu+1}$, a Student-t random variable with $\nu + 1$ degrees of freedom. In the original ARCH(1) model of Engle (1982) the $s_{\nu+1}$ random variable is replaced with a standard normal random variable. Our model is therefore different from the ARCH(1) model of Engle (1982). However, our model arises as a restricted version of the extension provided by Bollerslev (1987). The t-ARCH(1) of Bollerslev (1987) is given by

$$y_{t+1} = \sqrt{a + b y_t^2}\; s_m,$$

where $s_m \sim t_m$, a Student-t random variable with $m$ degrees of freedom. Our t-ARCH model therefore arises by setting $a = \beta^2\nu/(1 + \nu)$, $b = 1/(1 + \nu)$ and constraining $bm = 1$. So a single restriction on the model of Bollerslev (1987) provides a known, scaled t marginal density. This new model is linear in $y_t^2$. We have

$$E(y_{t+1}^2 \mid y_t^2) = \frac{y_t^2 + \beta^2\nu}{\nu - 1},$$

which implies the autocorrelation function of $y_t^2$ is $\rho(\tau) = (\nu - 1)^{-\tau}$.
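The closed-form update makes the restricted t-ARCH(1) process easy to simulate. The following Python sketch (standard library only) is illustrative rather than the authors' code. It assumes the marginal is a Student-t with `nu` degrees of freedom and scale `beta`, and that the one-step update multiplies a $t_{\nu+1}$ draw by $\sqrt{(\nu\beta^2 + y_t^2)/(1+\nu)}$. The names `student_t`, `t_arch1` and `lag1_autocorr` are assumptions.

```python
import math
import random


def student_t(rng, df):
    """Standard Student-t draw: N(0, 1) divided by sqrt(chi2_df / df)."""
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square via gamma(shape, scale)
    return rng.gauss(0.0, 1.0) / math.sqrt(chi2 / df)


def t_arch1(n, nu, beta, seed=0):
    """Simulate the restricted t-ARCH(1) chain, started from its t_nu marginal."""
    rng = random.Random(seed)
    y = beta * student_t(rng, nu)
    path = [y]
    for _ in range(n - 1):
        scale = math.sqrt((nu * beta ** 2 + y * y) / (1.0 + nu))
        y = scale * student_t(rng, nu + 1.0)
        path.append(y)
    return path


def lag1_autocorr(series):
    """Sample lag-one autocorrelation of a sequence."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series)
    cov = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    return cov / var
```

Applying `lag1_autocorr` to the squared path, for instance `[v * v for v in t_arch1(50000, nu=12.0, beta=1.0)]`, should give a value near $1/(\nu - 1)$, and the sample variance of the path should be near $\beta^2\nu/(\nu - 2)$. Convergence is slow when $\nu$ is small, because the higher moments of the marginal are then infinite.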

3.2 Duration model

Models for explaining times between successive trades have recently been of interest, due to the

amount of data currently available on intra-daily market activity. The autoregressive conditional

duration model of Engle & Russell (1998) has been introduced to explain the slowly changing

mean structure of durations between trades. This work parallels the GARCH literature for

changing volatility. Here we consider a Gibbs structure for constructing an observation driven

model of this type.

² See the top graph in Figure 1. This gives the dependency structure for our ARCH(1) model. Note that the arrows indicate the direction of dependence. These graphs are known as directed acyclic graphs (DAGs) in the statistical community.

The standard assumption made in this context is that $y_i$ (the time between the $i$th and the $(i-1)$th trade) is exponential with parameter $\lambda_i$. The $\lambda_i$ (as for the variance in the volatility model) is assumed to evolve over time. In the non-time varying context, it is often assumed that the $\lambda_i$ arise from a gamma distribution, $\mathrm{ga}(\alpha, \beta)$ (see, for example, Lancaster (1990)). We will therefore take this as the marginal process for $\{\lambda_i\}$. Now consider the joint density

$$f_{Y,\lambda}(y, \lambda) = f_{Y|\lambda}(y \mid \lambda)\, f_\lambda(\lambda) = \mathrm{Ep}(y; \lambda)\, \mathrm{ga}(\lambda; \alpha, \beta),$$

where Ep denotes the exponential distribution. The conditional density of $\lambda$ given $y$ is $\mathrm{ga}(\alpha + 1, \beta + y)$. The Gibbs sampler runs over the two conditional densities for $\lambda_{i+1}$ and for $y_{i+1}$, iteratively. Further, it is clear that

$$E(y_{i+1} \mid y_i) = E(\lambda_{i+1}^{-1} \mid y_i) = \frac{\beta + y_i}{\alpha},$$

similar to the specification of Engle & Russell (1998). We can integrate out $\lambda$, to obtain

$$f(y_{i+1} \mid y_i) = \mathrm{Gg}(y_{i+1}; \alpha + 1, \beta + y_i, 1),$$

where Gg represents the gamma-gamma distribution (see, for example, Bernardo & Smith (1994)), allowing classical inference for the two parameters $\alpha$ and $\beta$ via prediction decomposition.

3.3 Exponential family

More generally, we consider the situation when the conditional density of $y_t$ given $\theta_t$ is a member of the exponential family,

$$f(y_t; \theta_t) = c(y_t) \exp\{y_t\theta_t - M(\theta_t)\},$$

so that $E(y_t \mid \theta_t) = \mu(\theta_t) = M'(\theta_t)$. We choose $f(\theta_t)$ to be a member of the standard conjugate family, see Gutierrez-Pena & Smith (1997),

$$f(\theta_t) = \exp\{s\theta_t - \lambda M(\theta_t) + \psi(s, \lambda)\},$$

where $\lambda > 0$. Under mild regularity conditions (Diaconis & Ylvisaker (1979)), $E\{\mu(\theta_t)\} = s/\lambda$. Consequently,

$$E(y_{t+1} \mid y_t) = \frac{s + y_t}{1 + \lambda},$$

since

$$f(\theta_{t+1} \mid y_t) = \exp\{(s + y_t)\theta_{t+1} - (1 + \lambda)M(\theta_{t+1}) + \psi(s + y_t, 1 + \lambda)\}$$

and $E(y_{t+1} \mid \theta_{t+1}) = \mu(\theta_{t+1})$. It is clear that we can calculate $f(y_{t+1} \mid y_t)$. As $\{\ldots, y_t, \theta_{t+1}, y_{t+1}, \ldots\}$ is a Markov chain we obtain

$$f(y_{t+1} \mid y_t) = \frac{f(y_{t+1} \mid \theta_{t+1})\, f(\theta_{t+1} \mid y_t)}{f(\theta_{t+1} \mid y_t, y_{t+1})} = \frac{c(y_{t+1}) \exp\{\psi(s + y_t, 1 + \lambda)\}}{\exp\{\psi(s + y_t + y_{t+1}, 2 + \lambda)\}}$$

and marginally $f_Y(y) = \exp\{\psi(s, \lambda)\}\, c(y) \exp\{-\psi(s + y, 1 + \lambda)\}$.

3.4 Estimation

Estimation for all models of the above type is reasonably straightforward via prediction decomposition, as $\{\ldots, y_t, y_{t+1}, y_{t+2}, \ldots\}$ is a Markov chain. Denoting the unknown fixed parameters by $\theta$, we have

$$f(y; \theta) = f(y_1; \theta) \prod_{t=2}^{n} f(y_t \mid y_{t-1}; \theta).$$

The log-likelihood may be maximised with respect to $\theta$ via the usual numerical procedures. Note that likelihood inference is made more statistically efficient as we explicitly have the initial density $f(y_1 \mid \theta)$ in the likelihood. The initial density is problematic for standard ARCH and GARCH models, as their unconditional density is unknown.
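As an illustration of the prediction decomposition, the sketch below evaluates the exact log-likelihood of the restricted t-ARCH(1) model of Section 3.1 in Python (standard library only). It is a hedged example, not the authors' estimation code. It assumes the stationary density is a scaled $t_\nu$ with squared scale $\beta^2$ and that the transition density is a scaled $t_{\nu+1}$ with squared scale $(\nu\beta^2 + y_{t-1}^2)/(\nu+1)$. The function names `log_scaled_t` and `t_arch1_loglik` are illustrative.

```python
import math


def log_scaled_t(y, df, scale2):
    """Log density of a zero-mean Student-t with df degrees of freedom
    and squared scale scale2."""
    return (math.lgamma((df + 1.0) / 2.0) - math.lgamma(df / 2.0)
            - 0.5 * math.log(df * math.pi * scale2)
            - 0.5 * (df + 1.0) * math.log1p(y * y / (df * scale2)))


def t_arch1_loglik(ys, nu, beta):
    """Exact log-likelihood: stationary density for y_1 plus one-step transitions."""
    total = log_scaled_t(ys[0], nu, beta ** 2)        # initial (stationary) term
    for prev, cur in zip(ys, ys[1:]):
        scale2 = (nu * beta ** 2 + prev * prev) / (nu + 1.0)
        total += log_scaled_t(cur, nu + 1.0, scale2)  # transition term
    return total
```

The function can be passed to any numerical optimiser over `(nu, beta)`. Because the first term uses the known stationary density, no conditioning on the initial observation is needed.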

4 GARCH(1,1)-TYPE MODELS

The GARCH(1,1), generalised ARCH, model of Bollerslev (1986) is one of the most widely used for modelling volatility. The ARMA type structure allows sufficient memory for most volatility models applied to asset and exchange rate returns. There are many papers on the properties of the standard GARCH(1,1) model. A recent look at the existence of marginal moments and conditions for stationarity of the GARCH model is to be found in He & Teräsvirta (1999). The conditions for strict stationarity are quite complicated and the marginal density, if one exists, is unknown. This is not the case for the models introduced in this section, which may usefully be thought of as GARCH processes with the addition of a (heteroskedastic) error term in the parameter evolution.

The GARCH(1,1) model differs from the state space formulation of Section 2.5 in that observations can feed back directly into the prediction of the next observation. The GARCH(1,1) model can be applied not only to volatility models, of course, but also to durations, see Engle & Russell (1998), count models and so on. Indeed, the GARCH model provides a general formulation for the evolution of an unobserved parameter. The standard GARCH(1,1) model for volatility, Bollerslev (1986), is

$$y_t \sim N(0, \sigma_t^2), \qquad \sigma_{t+1}^2 = a + b y_t^2 + c\sigma_t^2.$$

Our models will differ from the above structure in that, in keeping with the spirit of this paper, the marginal density of $y_t$ and $\sigma_t^2$ will be kept fixed and known. In the following subsections we consider the GARCH model for volatility, count data and the exponential family respectively.

4.1 Volatility model

Although the models introduced here are similar to GARCH(1,1), we are not able to write down $f(y_{t+1}; \sigma_t^2, y_t)$ explicitly, as we can for the standard GARCH(1,1) model. Our aim is to find a model for which marginally $y_t$ is Student-t and, if $y_t$ conditional on $\sigma_t^2$ is $N(0, \sigma_t^2)$, then, for some $a$, $b$, $c$,

$$E(\sigma_{t+1}^2 \mid y_t, \sigma_t^2) = a + b y_t^2 + c\sigma_t^2.$$

Of course, we still need $\sigma_t^2$ to be a stationary $\mathrm{Ig}(\nu/2, \beta^2\nu/2)$ process. Following the development of the Gibbs sampler in Section 3, we introduce the joint density

$$f(y, \sigma^2, z) = N(y; 0, \sigma^2)\, \mathrm{Ig}(\sigma^2; \nu/2, \beta^2\nu/2)\, \mathrm{ga}(z; \phi, \sigma^{-2}), \qquad (4.1)$$

where $z$ is an auxiliary variable, making no difference to our marginal process but allowing longer range dependence. This GARCH model is based on a Gibbs sampler driven by the full conditionals $f(y \mid \sigma^2)$, $f(z \mid \sigma^2)$ and $f(\sigma^2 \mid y, z)$. Adding time subscripts to explicitly model the process (illustrated in the middle graph of Figure 1) we update (from time $t$ to $t+1$) as $f(\sigma_{t+1}^2 \mid y_t, z_t)$, $f(z_{t+1} \mid \sigma_{t+1}^2)$ and $f(y_{t+1} \mid \sigma_{t+1}^2)$. This satisfies our requirements, since we obtain

$$E(\sigma_{t+1}^2 \mid y_t, \sigma_t^2) = \frac{y_t^2 + \beta^2\nu + 2\phi\sigma_t^2}{\nu + 2\phi - 1}.$$

We have three parameters $\nu$, $\beta$ and $\phi$. The first two represent aspects of the marginal density, while $\phi$ again represents persistence. When $\phi = 0$, we obtain the same variance evolution as our t-ARCH model. As $\phi \to \infty$, it is clear we obtain increased dependence. It is immediate that we have $y_t \sim t_\nu$ as the marginal density for observations. An approach to estimation of this model is outlined in the Appendix (Section 7.2). The update structure is given in the bottom graph of Figure 1.

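4.2 Poisson model

In order to show the generality of this method, let us consider a GARCH(1,1) structure for a conditional Poisson model. We start with a joint density

$$f(y, \lambda, z) = \mathrm{Po}(y; \lambda)\, \mathrm{ga}(\lambda; a, b)\, \mathrm{Po}(z; \phi\lambda),$$

so here the marginal density for $y$ is Poisson-gamma, $\mathrm{Pg}(a, b, 1)$, see Bernardo & Smith (1994, page 119). Adding time subscripts to explicitly model the process (illustrated in the middle graph of Figure 1 with $\lambda$ replacing $\sigma^2$) we consider updating (from time $t$ to $t+1$) via $f(\lambda_{t+1} \mid y_t, z_t)$, $f(z_{t+1} \mid \lambda_{t+1})$ and $f(y_{t+1} \mid \lambda_{t+1})$. We obtain

$$f(\lambda_{t+1} \mid y_t, z_t) = \mathrm{ga}(\lambda_{t+1}; y_t + a + z_t, 1 + b + \phi),$$

so $f(\lambda_{t+1} \mid y_t, \lambda_t)$ follows the evolution

$$\lambda_{t+1} \sim \mathrm{ga}\{\lambda_{t+1};\ y_t + a + \mathcal{P}(\phi\lambda_t),\ 1 + b + \phi\},$$

where $\mathcal{P}(m)$ denotes a Poisson random variable with mean $m$. Therefore, we have

$$E(\lambda_{t+1} \mid y_t, \lambda_t) = \frac{a + y_t + \phi\lambda_t}{1 + b + \phi}.$$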
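The three-step update for the conditional Poisson model can be sketched as a simulation. The Python code below (standard library only) is an illustrative sketch, not the authors' implementation. It assumes the intensity has a gamma marginal with shape `a` and rate `b`, that the auxiliary count is Poisson with mean `phi` times the intensity, and it uses a simple Knuth sampler that is adequate only for modest means. The names `poisson_draw` and `poisson_garch11` are assumptions.

```python
import math
import random


def poisson_draw(rng, mean):
    """Knuth's multiplication method; fine for the modest means used here."""
    limit = math.exp(-mean)
    count, prod = 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count


def poisson_garch11(n, a, b, phi, seed=0):
    """Cycle intensity | (y, z), then z | intensity, then y | intensity."""
    rng = random.Random(seed)
    lam = rng.gammavariate(a, 1.0 / b)   # gammavariate takes (shape, scale)
    z = poisson_draw(rng, phi * lam)
    y = poisson_draw(rng, lam)
    ys, lams = [y], [lam]
    for _ in range(n - 1):
        lam = rng.gammavariate(a + y + z, 1.0 / (1.0 + b + phi))  # feedback from y_t
        z = poisson_draw(rng, phi * lam)                          # auxiliary count
        y = poisson_draw(rng, lam)                                # next observation
        ys.append(y)
        lams.append(lam)
    return ys, lams
```

Since the Gibbs cycle leaves the joint density invariant, the simulated intensities should have mean near $a/b$, and the counts should have mean near $a/b$ and variance near $a/b + a/b^2$, the Poisson-gamma values.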

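4.3 Exponential family

We now rely on the joint density

$$f(y \mid \theta)\, f(\theta)\, f(z \mid \theta).$$

More generally, we consider the situation when the conditional density of $y_t$ given $\theta_t$ is from the exponential family,

$$f(y_t; \theta_t) = c(y_t) \exp\{y_t\theta_t - M(\theta_t)\},$$

so $E(y_t \mid \theta_t) = \mu(\theta_t) = M'(\theta_t)$. As previously, we choose the mixing density $f(\theta_t)$ to be a member of the standard conjugate family,

$$f(\theta_t) = \exp\{s\theta_t - \lambda M(\theta_t) + \psi(s, \lambda)\}.$$

For the GARCH version, we introduce

$$f(z_t; \theta_t) = c_\phi(z_t) \exp\{z_t\theta_t - \phi M(\theta_t)\}.$$

Then

$$f(\theta_{t+1} \mid z_t, y_t) \propto \exp\{(s + y_t + z_t)\theta_{t+1} - (1 + \lambda + \phi)M(\theta_{t+1})\}$$

and therefore

$$E(y_{t+1} \mid y_t, \theta_t) = E\big[E\{\mu(\theta_{t+1}) \mid y_t, z_t\}\big] = \frac{s + y_t + \phi\mu(\theta_t)}{1 + \lambda + \phi}.$$

The stochastic volatility version follows by running the Gibbs sampler over $f(z, \theta)$ and drawing from $f(y \mid \theta)$ at each iteration, see the bottom graph of Figure 1, replacing $\sigma^2$ by $\theta$. In this case, we have

$$E\{\mu(\theta_{t+1}) \mid \theta_t\} = \frac{s + \phi\mu(\theta_t)}{\lambda + \phi}.$$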

5 APPLICATION: POISSON-GAMMA MODEL

We shall illustrate the application of our model and the associated methods by examining the

data set considered by McDonald & Zucchini (1997, page 194-195). The data consists of weekly

rearm homicides in Cape Town from 1 January 1986 to 31 December 1991 (313 observations

in total), see Figure 4. These models are in the form of small counts. We shall assume that the

observations are Poisson, conditional upon a parameter which evolves according to the gamma

autoregressive process of Section 23. Formally we have,

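$$y_t \sim \mathrm{Po}(x_t), \qquad x_{t+1} \sim \mathrm{ga}(\nu + z_t, \alpha + \beta), \qquad z_t \sim \mathrm{Po}(\beta x_t), \qquad t = 1, \ldots, n. \tag{5.1}$$

Marginally, $x_t \sim \mathrm{ga}(\nu, \alpha)$ so the mean and variance of $x_t$ are $\mu = \nu/\alpha$ and $\sigma^2 = \nu/\alpha^2$ respectively. The persistence parameter is $\rho = \beta/(\alpha + \beta)$. Of course,

$$E(x_{t+1} \mid x_t) = \frac{\nu + \beta x_t}{\alpha + \beta} = (1 - \rho)\mu + \rho x_t.$$

We may write $y_t = x_t + v_t$, where $v_t = y_t - x_t = \mathrm{Po}(x_t) - x_t$, an uncorrelated zero mean process. Therefore the autocorrelation function of $y_t$ is given by

$$\rho_y(\tau) = \frac{\rho^{\tau}}{1 + \alpha}, \qquad \tau = 1, 2, \ldots$$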
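The stationarity and autocorrelation properties stated above can be checked by simulation. The following is a minimal sketch, assuming the parametrisation in which $x_t$ is marginally gamma with shape `nu` and rate `alpha`, and the auxiliary variable is Poisson with mean `beta * x_t`. The function names `poisson`, `simulate_pga` and `autocorr` are hypothetical helpers written for this illustration, not part of any library.

```python
import math
import random


def poisson(rng, lam):
    """Draw from Po(lam) with Knuth's multiplication method (adequate for moderate lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_pga(n, nu, alpha, beta, seed=0):
    """Simulate states x_t and counts y_t, t = 1..n, from the assumed model."""
    rng = random.Random(seed)
    x = rng.gammavariate(nu, 1.0 / alpha)  # x_1 from the stationary ga(nu, alpha)
    xs, ys = [], []
    for _ in range(n):
        xs.append(x)
        ys.append(poisson(rng, x))          # y_t | x_t ~ Po(x_t)
        z = poisson(rng, beta * x)          # z_t | x_t ~ Po(beta * x_t)
        # x_{t+1} | z_t ~ ga(nu + z_t, alpha + beta); gammavariate takes a scale
        x = rng.gammavariate(nu + z, 1.0 / (alpha + beta))
    return xs, ys


def autocorr(series, lag):
    """Sample autocorrelation of a sequence at the given lag."""
    n = len(series)
    mean = sum(series) / n
    var = sum((s - mean) ** 2 for s in series) / n
    cov = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag)) / n
    return cov / var
```

With `nu = 2`, `alpha = 1` and `beta = 1` the persistence is 0.5, so the sample mean of the simulated states should be close to 2, their lag-one autocorrelation close to 0.5, and the lag-one autocorrelation of the counts close to 0.25.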

5.1 Estimation Procedure

Maximum likelihood for the model (5.1) is difficult due to the non-conjugate structure of the model. Quasi-maximum likelihood procedures are easier to apply. For instance, we can linearise the above system directly, obtaining,

$$y_t = x_t + v_t, \qquad \sigma_V^2 = \mu,$$

$$x_{t+1} = (1 - \rho)\mu + \rho x_t + \eta_t, \qquad \sigma_\eta^2 = \sigma^2(1 - \rho^2), \tag{5.2}$$

where $\mu = \nu/\alpha$ and $\sigma^2 = \nu/\alpha^2$. We could then treat the martingale terms $v_t$ and $\eta_t$ as if they were independent Gaussian, $N(0, \sigma_V^2)$ and $N(0, \sigma_\eta^2)$. This would then allow maximum likelihood estimation of the resulting state space form model to be carried out via the Kalman filter, see for example West & Harrison (1997). However, this approach would lead to biased estimation.

For this reason, we choose a Bayesian Markov chain Monte Carlo approach for estimation of the parameters $\theta = (\nu, \alpha, \beta)'$ and the underlying state $x = (x_1, \ldots, x_n)'$. We obtain stationary samples from the posterior density $f(\theta, x \mid y)$. A thorough review of Markov chain Monte Carlo is given, for example, in Gilks et al. (1996). Here we outline the main steps of the Markov chain Monte Carlo algorithm, relegating the details to the Appendix (Section 7.1). We retain our auxiliary variables $z = (z_1, \ldots, z_{n-1})'$ in the Markov chain Monte Carlo analysis. The sampling scheme works by iterating the following steps:

$x \sim f(x \mid z, \theta, y)$:

$$f(x \mid z, \theta, y) = f(x_1 \mid z_1, \theta, y_1)\, f(x_n \mid z_{n-1}, \theta, y_n) \prod_{t=2}^{n-1} f(x_t \mid z_t, z_{t-1}, \theta, y_t).$$

These univariate densities may be simulated from directly as they are of gamma form, see Appendix (Section 7.1).

$z \sim f(z \mid x, \theta, y)$:

$$f(z \mid x, \theta, y) = \prod_{t=1}^{n-1} f(z_t \mid x_t, x_{t+1}, \theta).$$

The univariate distributions may be bounded by a Poisson distribution allowing an efficient accept-reject algorithm, see Appendix (Section 7.1).

$\theta \sim f(\theta \mid z, x, y)$:

$$f(\theta \mid z, x, y) \propto f(z, x \mid \theta) f(\theta) = f(x_1 \mid \theta) \prod_{t=1}^{n-1} f(x_{t+1} \mid z_t, \theta)\, f(z_t \mid x_t, \theta)\, f(\theta),$$

where $f(\theta)$ is the prior. This is the full conditional posterior for $\theta$. A Student-t distribution is used as a proposal for each univariate element of $\theta = (\nu, \alpha, \beta)'$. This proposal is accepted or rejected as an independence Metropolis-Hastings candidate, see Appendix (Section 7.1).

$\theta, x \sim f(\theta, x \mid z, y)$:

We have

$$f(\theta, x \mid z, y) = f(x \mid z, \theta, y)\, f(\theta \mid z, y) \propto f(y \mid x)\, f(z, x \mid \theta)\, f(\theta).$$

Therefore,

$$f(\theta \mid z, y) \propto \frac{f(y \mid x)\, f(z, x \mid \theta)\, f(\theta)}{f(x \mid z, \theta, y)}.$$

This last move allows reduced conditioning, effectively integrating out $x$, and is necessary to permit a reasonably efficient Markov chain Monte Carlo scheme. Again, a Student-t distribution is used as a proposal within an independence Metropolis-Hastings scheme.

5.2 Results

Let us denote the three parameter model given by (5.1) as Pga$(\nu, \alpha, \beta)$. We shall estimate this model for the data set of weekly firearm homicides described above. However, prior to this two simple alternative models were considered. In particular a simple one parameter Poisson model, Po$(\mu)$, is considered together with a static Poisson model with gamma heterogeneity, Pg$(\nu, \alpha)$. The maximum likelihood results for these models are given in Table 1. The diagnostics of the table will be examined in the following section. Note that there is a rise of 67.9 in the log-likelihood in going from the basic Po$(\mu)$ model to the more general Pg$(\nu, \alpha)$ distribution. As these are nested models, the usual $\chi^2$ test is highly significant. Therefore, heterogeneity (or mixing) is present.

To estimate our Pga$(\nu, \alpha, \beta)$ model we transform to $(\mu, \sigma^2, \rho)$. For brevity, we display the resulting samples of $\mu$, the mean of $x_t$, from our Markov chain Monte Carlo scheme in Figure 2. The Markov chain Monte Carlo sampler was run over 60 000 iterations. The scheme was efficient, as can be seen from the almost independent draws of $\mu$. The marginal posteriors for $(\nu, \alpha, \beta)'$ are given in Figure 2. The corresponding means and covariance matrix are given in Table 1. Figure 3 displays the marginal posteriors for $(\mu, \sigma^2, \rho)$, perhaps a more interpretable transformation. It can be seen that there is clear evidence of persistence in the time series. There is no appreciable marginal posterior mass for $\rho$ below 0.65; however, this parameter is also not very close to 1, indicating that shocks are not highly persistent.

The particle filter of Pitt & Shephard (1999) was applied to the time series. Representing $F_t = (y_1, \ldots, y_t)'$, the observations up to and including that of time $t$, the particle filter draws samples from the filtering density $f(x_t \mid F_t; \hat\theta)$. We fix $\hat\theta$ at the posterior mean. In Figure 4, we display the filtered mean over time together with the posterior mean under the smoothing density $f(x_t \mid F_n)$, obtained from the Markov chain Monte Carlo output. It can be seen from the top graph that both the filtered and smoothed estimates of the state follow the data closely. In addition, the bottom graph shows that the filtered and smoothed state means are in alignment. This is reassuring as a heuristic diagnostic since the filtered mean should be an unbiased estimate of the smoothed mean only if the model is correct.

The log-likelihood for our model may be efficiently estimated as a by-product of the particle filter, see Pitt & Shephard (2000). We have an estimate of our one-step ahead prediction density,

$$\hat f(y_{t+1} \mid F_t; \hat\theta) = \frac{1}{M} \sum_{k=1}^{M} f(y_{t+1} \mid x_{t+1}^k),$$

where $x_t^k \sim f(x_t \mid F_t; \hat\theta)$ and $x_{t+1}^k \sim f(x_{t+1} \mid x_t^k; \hat\theta)$, $k = 1, \ldots, M$. We may now estimate the log-likelihood as,

$$\log L(\hat\theta) = \log f(y_1, \ldots, y_n \mid \hat\theta) = \sum_{t=0}^{n-1} \log \hat f(y_{t+1} \mid F_t; \hat\theta).$$

This allows the log-likelihood to be evaluated at the Bayesian posterior mean $\hat\theta$. In Table 1 we show the log-likelihood of our model. Interpreting classically, the likelihood ratio test indicates that the temporal parameter, $\rho$, is highly significant compared with the nested Pg$(\nu, \alpha)$ model, for which $\rho = 0$. This confirms the Bayesian result of the importance of the time series aspect.
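The log-likelihood estimate described above can be illustrated in code. The paper uses the auxiliary particle filter of Pitt & Shephard (1999); the sketch below swaps in the simpler bootstrap particle filter, which yields the same form of estimator, the average of $f(y_{t+1} \mid x_{t+1}^k)$ over propagated particles. It assumes the parametrisation with shape `nu`, rate `alpha` and auxiliary Poisson mean `beta * x_t`; the names `poisson`, `log_poisson_pmf` and `particle_loglik` are hypothetical.

```python
import math
import random


def poisson(rng, lam):
    """Draw from Po(lam) with Knuth's multiplication method (adequate for moderate lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def log_poisson_pmf(y, lam):
    """Log of the Po(lam) probability of the count y (lam floored to avoid log(0))."""
    lam = max(lam, 1e-300)
    return y * math.log(lam) - lam - math.lgamma(y + 1)


def particle_loglik(ys, nu, alpha, beta, M=1000, seed=0):
    """Bootstrap particle filter estimate of log f(y_1, ..., y_n | theta)."""
    rng = random.Random(seed)
    # Draws from the stationary ga(nu, alpha) act as predictive particles for x_1.
    pred = [rng.gammavariate(nu, 1.0 / alpha) for _ in range(M)]
    loglik = 0.0
    for y in ys:
        logw = [log_poisson_pmf(y, x) for x in pred]
        top = max(logw)
        w = [math.exp(lw - top) for lw in logw]
        loglik += top + math.log(sum(w) / M)      # log of (1/M) sum_k f(y | x^k)
        filt = rng.choices(pred, weights=w, k=M)  # resampling gives filtering draws
        # Propagate each filtering draw through the transition via z_t.
        pred = [
            rng.gammavariate(nu + poisson(rng, beta * x), 1.0 / (alpha + beta))
            for x in filt
        ]
    return loglik
```

For a single observation the estimate can be compared with the exact Poisson-gamma probability: with `nu = 2`, `alpha = 1` and `y = 3` the exact value is 0.125.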

5.3 Diagnostics

We now look for evidence of departures from our model. In particular, we look at tests under the null hypothesis that the model is correct, specifically that our observations at time $t$ have associated density $f(y_t \mid F_{t-1}; \hat\theta)$. We have a discrete distribution function on the random variable $Y_t$, so $F(y_t) = P(Y_t \le y_t \mid F_{t-1}; \hat\theta)$. We are denoting our observations as $y_t$ and the corresponding random variable as $Y_t$. We shall suppress the conditioning upon $F_{t-1}; \hat\theta$ at this juncture since it does not affect our discussion. Suppose we sample $u_t$ from

$$f(u_t \mid y_t) = \begin{cases} \dfrac{1}{\Pr(y_t)}, & F(y_t - 1) < u_t \le F(y_t), \\ 0, & \text{otherwise}, \end{cases} \tag{5.3}$$

where $\Pr(y_t) = F(y_t) - F(y_t - 1)$. Note that the regions $[F(y_t - 1), F(y_t)]$ provide a partition of $[0, 1]$, for $y_t = 0, 1, 2, \ldots$. Under the hypothesis that $y_t \sim F(y_t)$, we have that

$$f(u_t) = \sum_{y_t = 0}^{\infty} f(u_t \mid y_t) \Pr(y_t) = 1, \qquad u_t \in [0, 1],$$

as we have a partition of the space of $u_t$. Therefore, if our model, and parameters, are correct, $u_t \sim U(0, 1)$, independently, over time. This forms the basis of our diagnostic tests. Any departure from our model, either marginally or in dependence through time, should be apparent by examining these residuals. We restrict ourselves to two tests. Firstly, we transform our $u_t$ via $n_t = \Phi^{-1}(u_t)$. Under our null hypothesis these should be independently standard Gaussian. This allows us to perform a standard Portmanteau test for autocorrelation. Clearly, this allows us to test whether we have modelled temporal dependence adequately. Secondly, we perform a $\chi^2$ test for goodness of fit on the $u_t$'s, comparing with a standard uniform.

In order to sample $u_t$ we need to calculate $F(y_t \mid \hat\theta)$. This can be explicitly calculated for the Po$(\mu)$ model and for the Pg$(\nu, \alpha)$ distribution. Before commenting on the results, the details of estimating $F(y_{t+1} \mid F_t; \hat\theta)$ for our Pga$(\nu, \alpha, \beta)$ time series model should be outlined. From the output of the particle filter, which we recall yields samples from the filtering density $f(x_t \mid F_t; \hat\theta)$, we have

$$\hat F(y_{t+1} \mid F_t; \hat\theta) = \frac{1}{M} \sum_{k=1}^{M} F(y_{t+1} \mid x_{t+1}^k),$$

where $x_t^k \sim f(x_t \mid F_t; \hat\theta)$ and $x_{t+1}^k \sim f(x_{t+1} \mid x_t^k; \hat\theta)$, $k = 1, \ldots, M$. Noting that $F(y_{t+1} \mid x_{t+1}^k)$ is just the distribution function for a Poisson, since $y_t \sim \mathrm{Po}(x_t)$, we arrive at efficient estimates of the prediction distribution. This allows us to sample $u_t$ via (5.3) for $t = 1, \ldots, n$.
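The randomised residuals defined by (5.3) can be sketched for any discrete predictive distribution function. The example below is an illustration under simple assumptions: it uses a plain Poisson distribution function rather than the particle filter estimate, and the names `poisson_cdf`, `randomized_pit` and `to_gaussian` are hypothetical. Each $u_t$ is drawn uniformly between $F(y_t - 1)$ and $F(y_t)$ and then mapped to a Gaussian residual with the inverse normal distribution function.

```python
import random
from statistics import NormalDist


def poisson_cdf(y, lam):
    """P(Y <= y) for Y ~ Po(lam); returns 0 for negative y."""
    if y < 0:
        return 0.0
    term = total = 2.718281828459045 ** (-lam)  # probability of a zero count
    for k in range(1, y + 1):
        term *= lam / k
        total += term
    return min(total, 1.0)


def randomized_pit(ys, cdf, rng):
    """Draw u_t uniformly on the interval from F(y_t - 1) to F(y_t)."""
    us = []
    for y in ys:
        lower, upper = cdf(y - 1), cdf(y)
        us.append(lower + rng.random() * (upper - lower))
    return us


def to_gaussian(us, eps=1e-12):
    """Map uniform residuals to n_t = Phi^{-1}(u_t), clipping away exact 0 and 1."""
    normal = NormalDist()
    return [normal.inv_cdf(min(max(u, eps), 1.0 - eps)) for u in us]
```

When the counts really come from the assumed distribution, the `us` values should look uniform on the unit interval and the transformed values should have mean near 0 and variance near 1, which is what the Portmanteau and goodness of fit tests examine.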

The details out of the way, we can look at the results. The last two columns of Table 1 give the goodness of fit statistic for the three models and the Portmanteau statistic respectively. Corresponding to the table we have Figure 5, showing the Gaussian residuals, together with their correlogram and the standard quantile plot for the three models. It is clear from Table 1 that the simple independent Poisson model fails both tests at high significance. The independent Poisson-gamma model fails to capture dependence but does provide a good overall fit, indicated by the fact that at the 5% level the goodness of fit test is passed. This suggests that the marginal model of Poisson-gamma is a sensible choice. Our three parameter Poisson-gamma time series model passes both tests with large p-values. The residuals in Figure 5 indicate hardly any evidence of correlation over time and the quantile plot is close to linear. This indicates that marginally and conditionally our model fits the data well.

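| Model | Par | ML | Var (covariance matrix) | log L | GOF $\chi^2_{19}$ (p) | Q $\chi^2_{15}$ (p) |
|---|---|---|---|---|---|---|
| Poisson model Po$(\mu)$ | $\mu$ | 2.6198 | 0.00837 | -719.36 | 77.09 (0) | 281.51 (0.0) |
| Poisson-gamma model Pg$(\nu, \alpha)$ | $\nu$ | 2.1959 | 0.1108, 0.0422 | -651.5 | 28.40 (0.076) | 216.93 (0.0) |
| | $\alpha$ | 0.8382 | 0.0422, 0.0180 | | | |
| Poisson-gamma stationary model Pga$(\nu, \alpha, \beta)$ | $\nu$ | 2.7769 | 0.28254, 0.1006, 0.5855 | -617.07 | 21.88 (0.290) | 14.393 (0.496) |
| | $\alpha$ | 1.0040 | 0.1006, 0.0460, 0.17188 | | | |
| | $\beta$ | 6.1773 | 0.5855, 0.17188, 6.3061 | | | |

Table 1: Estimation results for the firearms homicide dataset. The goodness of fit statistics and the Portmanteau tests on the correlogram are reported for all three models. Entries for the Po and Pg models are maximum likelihood estimates; those for the Pga model are posterior means and covariances.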

6 CONCLUSIONS

In this paper we have shown how it is possible to assign a fixed marginal density to a time series model and to build the dependence structure around this. We have considered three different classes of models: the ARCH(1), latent AR(1) and GARCH(1,1) classes. There is a degree of flexibility in this approach as we may choose which marginal family is most appropriate for the problem under consideration. The incorporation of dependence whilst retaining the marginal density clearly provides a natural generalisation over treating observations as independent. In addition, the specification of the unconditional density means that the assignment of a density for our initial observation is trivial. This can be more problematic for the observation driven models such as the GARCH(1,1) for which the unconditional density is unknown.

The models obtained in this paper are all linear as the expectation of future observations is linear as a function of the previous parameters and observations. This lends interpretability to the models and allows direct evaluation of the autocorrelation. The inclusion of covariates has not been considered in this paper but is quite straightforward. In the example considered in Section 5 it was found that a simple three parameter state space model provided a very good fit to the data, both marginally and temporally. The Markov chain Monte Carlo estimation procedure and the use of the particle filter provide straightforward estimation and evaluation for models of this type.

6.1 Acknowledgements

We wish to thank a referee for constructive comments on a previous version of the paper.

References

Barndorff-Nielsen, O. E. (1997). Normal inverse Gaussian distributions and stochastic volatility modelling. Scand. J. Statist. 24, 1-14.

Barndorff-Nielsen, O. E. & Shephard, N. (2001). Non-Gaussian OU based models and some of their uses in financial economics. J. R. Statist. Soc. B, forthcoming.

Bernardo, J. M. & Smith, A. F. M. (1994). Bayesian Theory. John Wiley, Chichester.

Blattberg, R. C. & Gonedes, N. J. (1974). A comparison of the stable and Student distributions as statistical models for stock prices. J. Business 47, 244-280.

Bollerslev, T. (1986). Generalised autoregressive conditional heteroskedasticity. J. Econometrics 31, 307-327. Reprinted as pp. 42-60 in Engle, R. F. (1995), ARCH: Selected Readings, Oxford: Oxford University Press.

Bollerslev, T. (1987). A conditional heteroskedastic time series model for speculative prices and rates of return. Rev. Economics and Statistics 69, 542-47.

Campbell, J. Y., Lo, A. W., & MacKinlay, A. C. (1997). The Econometrics of Financial Markets. Princeton University Press, Princeton, New Jersey.

Chib, S. & Greenberg, E. (1995). Understanding the Metropolis-Hastings algorithm. The American Statistician 49, 327-35.

Diaconis, P. & Ylvisaker, D. (1979). Conjugate priors for exponential families. Ann. Statist. 7, 269-281.

Durbin, J. & Koopman, S. J. (1997). Monte Carlo maximum likelihood estimation of non-Gaussian state space models. Biometrika 84, 669-84.

Engle, R. F. (1982). Autoregressive conditional heteroskedasticity with estimates of the variance of the United Kingdom inflation. Econometrica 50, 987-1007. Reprinted as pp. 1-23 in Engle, R. F. (1995), ARCH: Selected Readings, Oxford: Oxford University Press.

Engle, R. F. & Russell, J. R. (1998). Forecasting transaction rates: the autoregressive conditional duration model. Econometrica 66, 1127-1162.

Gelfand, A. E. & Smith, A. F. M. (1990). Sampling-based approaches to calculating marginal densities. J. Am. Statist. Assoc. 85, 398-409.

Gilks, W. R., Richardson, S., & Spiegelhalter, D. J. (1996). Markov Chain Monte Carlo in Practice. Chapman & Hall, London.

Gutiérrez-Peña, E. & Smith, A. F. M. (1997). Exponential and Bayesian Conjugate Families: Reviews and Extensions. Test 6, 1-90.

Harvey, A. C. (1993). Time Series Models. Harvester Wheatsheaf, Hemel Hempstead, 2nd edition.

He, C. & Teräsvirta, T. (1999). Properties of moments of a family of GARCH processes. J. Econometrics 92, 173-192.

Jacquier, E., Polson, N. G., & Rossi, P. E. (1994). Bayesian analysis of stochastic volatility models (with discussion). J. Business and Economic Statist. 12, 371-417.

Joe, H. (1996). Time series models with univariate margins in the convolution-closed infinitely divisible class. Journal of Applied Probability 33, 664-677.

Jørgensen, B. & Song, P. (1998). Stationary time series models with exponential dispersion model margins. Journal of Applied Probability 35, 78-92.

Lancaster, T. (1990). The Econometric Analysis of Transition Data. Cambridge University Press, Cambridge.

Lawrence, A. J. & Lewis, P. A. W. (1985). Modelling and residual analysis of nonlinear autoregressive time series in exponential variables. J. R. Statist. Soc. B 47, 165-202.

McDonald, I. & Zucchini, W. (1997). Hidden Markov and Other Models for Discrete-valued Time Series. Chapman & Hall, London.

Nelson, D. B. (1990). Stationarity and persistence in the GARCH(1,1) model. Econometric Theory 6, 318-334. Reprinted as pp. 176-192 in Engle, R. F. (1995), ARCH: Selected Readings, Oxford: Oxford University Press.

Pitt, M. & Shephard, N. (2000). Auxiliary variable based particle filters. In Doucet, A., de Freitas, J. F. G., & Gordon, N., editors, Sequential Monte Carlo Methods in Practice. Cambridge University Press.

Pitt, M. K. & Shephard, N. (1999). Filtering via simulation based on auxiliary particle filters. J. American Statistical Association 94, 590-599.

Praetz, P. (1972). The distribution of share price changes. J. Business 45, 49-55.

Ripley, B. D. (1987). Stochastic Simulation. Wiley, New York.

Shephard, N. (1996). Statistical aspects of ARCH and stochastic volatility. In Cox, D. R., Hinkley, D. V., & Barndorff-Nielsen, O. E., editors, Time Series Models in Econometrics, Finance and Other Fields, pages 1-67. Chapman & Hall, London.

Shephard, N. & Pitt, M. K. (1997). Likelihood analysis of non-Gaussian measurement time series. Biometrika 84, 653-67.

Smith, A. F. M. & Roberts, G. (1993). Bayesian computations via the Gibbs sampler and related Markov Chain Monte Carlo methods. J. R. Statist. Soc. B 55, 3-23.

Tierney, L. (1994). Markov chains for exploring posterior distributions (with discussion). Ann. Statist. 22, 1701-62.

Vidoni, P. (1998). Proper dispersion state space models for stochastic volatility. University of Udine, working paper.

West, M. & Harrison, J. (1997). Bayesian Forecasting and Dynamic Models. Springer-Verlag, New York, 2nd edition.

7 APPENDIX

7.1 Markov chain Monte Carlo for Poisson-gamma model

Here we describe the details of the Markov chain Monte Carlo procedure of Section 5.1. Recall we have

$$y_t \sim \mathrm{Po}(x_t), \qquad x_{t+1} \sim \mathrm{ga}(\nu + z_t, \alpha + \beta), \qquad z_t \sim \mathrm{Po}(\beta x_t), \qquad t = 1, \ldots, n.$$

Our full data likelihood is therefore,

$$f(y, z, x \mid \theta) \propto f(y_1 \mid x_1)\, f(x_1 \mid \theta) \prod_{t=1}^{n-1} f(y_{t+1} \mid x_{t+1})\, f(x_{t+1} \mid z_t, \theta)\, f(z_t \mid x_t, \theta).$$

From this the following conditional steps can be derived.

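Step 1: Sampling from $f(x \mid z, \theta, y)$. We obtain the closed forms,

$$f(x_t \mid z_t, z_{t-1}, \theta) = \mathrm{ga}(x_t \mid y_t + z_t + z_{t-1} + \nu,\; 1 + \alpha + 2\beta), \qquad t = 2, \ldots, n-1,$$

$$f(x_1 \mid z_1, \theta) = \mathrm{ga}(x_1 \mid y_1 + z_1 + \nu,\; 1 + \alpha + \beta),$$

$$f(x_n \mid z_{n-1}, \theta) = \mathrm{ga}(x_n \mid y_n + z_{n-1} + \nu,\; 1 + \alpha + \beta).$$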
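The gamma updates of Step 1 can be sketched as a single sweep over the states. This is an illustration only, assuming the parametrisation with shape `nu`, rate `alpha` and auxiliary Poisson mean `beta * x_t`; the function name `sample_states` is hypothetical. Each state collects a shape contribution from its own observation and from the neighbouring auxiliary variables, and a rate contribution of `beta` for each neighbour.

```python
import random


def sample_states(ys, zs, nu, alpha, beta, rng):
    """One draw of x = (x_1, ..., x_n) given y (length n) and z (length n - 1)."""
    n = len(ys)
    xs = []
    for t in range(n):
        shape = ys[t] + nu      # contribution of y_t and of the gamma shape nu
        rate = 1.0 + alpha      # 1 from Po(y_t; x_t), alpha from the gamma rate
        if t < n - 1:           # z_t ~ Po(beta * x_t) exists for all but the last state
            shape += zs[t]
            rate += beta
        if t > 0:               # x_t ~ ga(nu + z_{t-1}, alpha + beta) for t > 1
            shape += zs[t - 1]
            rate += beta
        xs.append(rng.gammavariate(shape, 1.0 / rate))  # gammavariate takes a scale
    return xs
```

For example, with `ys = [1, 2, 3]`, `zs = [4, 5]`, `nu = 2`, `alpha = 1` and `beta = 3`, the middle state has shape 13 and rate 8, so repeated draws should average about 1.625.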

Step 2: Sampling from $f(z \mid x, \theta, y)$. An accept-reject scheme is used for our discrete valued auxiliary variables $z_t$. We obtain

$$f(z_t \mid x_t, x_{t+1}, \theta) \propto f(x_{t+1} \mid z_t, \theta)\, f(z_t \mid x_t, \theta).$$

We get the simplification,

$$\log f(z_t \mid x_t, x_{t+1}, \theta) = c + z_t \log(\gamma_t) - \log(z_t!) - \log\Gamma(\nu + z_t),$$

where

$$\log(\gamma_t) = \log(\beta x_t) + \log(\alpha + \beta) + \log(x_{t+1}).$$

Let us denote $l(z_t) = -\log\Gamma(\nu + z_t)$. Then we can form an efficient accept-reject algorithm, see Ripley (1987), as follows, noting that $l(z_t)$ is concave (and can therefore be bounded by a straight line from above),

$$\begin{aligned}
\log f(z_t \mid x_t, x_{t+1}, \theta) &= c + z_t \log(\gamma_t) - \log(z_t!) + l(z_t) \\
&\le c + z_t \log(\gamma_t) - \log(z_t!) + l(\hat z_t) + l'(\hat z_t)(z_t - \hat z_t) \\
&= k - \lambda_t + z_t \log(\lambda_t) - \log(z_t!) \\
&= k + \log \mathrm{Po}(z_t; \lambda_t),
\end{aligned}$$

where $\log\lambda_t = \log(\gamma_t) + l'(\hat z_t)$ and we take the expansion point as $\hat z_t = \beta(x_t + x_{t+1})/2$. We therefore sample from $\mathrm{Po}(\lambda_t)$ until acceptance with probability

$$\log \Pr(\text{accepting } z_t) = l(z_t) - l(\hat z_t) - l'(\hat z_t)(z_t - \hat z_t).$$

In practice we found that this proposal was accepted more than 99% of the time.
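The accept-reject scheme of Step 2 can be sketched as follows. This is an illustration under stated assumptions: the parametrisation with shape `nu`, rate `alpha` and auxiliary Poisson mean `beta * x_t`, and an expansion point `z_hat = beta * (x_t + x_next) / 2`, which is an assumed choice since any positive expansion point gives a valid bound. The helpers `poisson`, `digamma` and `sample_z` are hypothetical names; `digamma` is a small stand-in for a library routine and uses the standard recurrence and asymptotic series.

```python
import math
import random


def poisson(rng, lam):
    """Draw from Po(lam) with Knuth's multiplication method (adequate for moderate lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def digamma(x):
    """Derivative of log Gamma(x) for x > 0: recurrence up to 6, then asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return result + math.log(x) - 0.5 * inv - series


def sample_z(x_t, x_next, nu, alpha, beta, rng):
    """Draw z_t given x_t and x_{t+1} by rejection from a Poisson envelope."""
    log_gamma_t = math.log(beta * x_t) + math.log(alpha + beta) + math.log(x_next)
    z_hat = beta * (x_t + x_next) / 2.0      # assumed expansion point
    slope = -digamma(nu + z_hat)             # l'(z_hat), with l(z) = -log Gamma(nu + z)
    l_hat = -math.lgamma(nu + z_hat)
    lam = math.exp(log_gamma_t + slope)      # mean of the Poisson proposal
    while True:
        z = poisson(rng, lam)
        # Concavity of l makes this log acceptance probability non-positive.
        log_accept = -math.lgamma(nu + z) - l_hat - slope * (z - z_hat)
        if math.log(1.0 - rng.random()) <= log_accept:
            return z
```

Because the envelope is the tangent line to a concave function, the accepted draws come exactly from the target; the quality of the expansion point only affects how often proposals are rejected.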


Step 3: Sampling from $f(\theta \mid z, x, y)$. In order to perform parameter sampling efficiently we transform from $\theta = (\nu, \alpha, \beta)'$ to $\tilde\theta = (\mu, \sigma^2, \rho)'$ where $\mu = \nu/\alpha$, $\sigma^2 = \nu/\alpha^2$ and the persistence parameter is $\rho = \beta/(\alpha + \beta)$. This transformation is done to reduce the dependency between the parameters. We place our weak prior on $\tilde\theta$, $f(\tilde\theta)$.

$$f(\tilde\theta \mid z, x, y) \propto f(z, x \mid \tilde\theta) f(\tilde\theta) = f(\tilde\theta)\, f(x_1 \mid \tilde\theta) \prod_{t=1}^{n-1} f(x_{t+1} \mid z_t, \tilde\theta)\, f(z_t \mid x_t, \tilde\theta). \tag{7.1}$$

We sample the elements of $\tilde\theta$ in turn drawing from $f(\mu \mid \sigma^2, \rho, z, x, y)$, $f(\sigma^2 \mid \mu, \rho, z, x, y)$ and $f(\rho \mid \mu, \sigma^2, z, x, y)$, noting that each of these distributions can be derived, up to proportionality, from (7.1). In each case we iterate to the mode of the log of (7.1), recording the mode as $m$, and the second derivative of the log of (7.1), $l''(m)$. We propose by drawing from a t-distribution with 5 degrees of freedom, mean $m$ and variance $-1/l''(m)$. We accept with probability given by the usual independence Metropolis-Hastings expression. In practice, we find that moves are frequently accepted, yielding an efficient algorithm.
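The proposal mechanism of Step 3 can be sketched for a generic scalar log-density. This is an illustration, not the authors' implementation: `find_mode` uses Newton steps with finite-difference derivatives (an assumed choice, which needs a reasonable starting value), and `mh_step` performs one independence Metropolis-Hastings update with a Student-t proposal matched to the mode and curvature. Both names, and the argument `logp`, are hypothetical.

```python
import math
import random


def find_mode(logp, start, h=1e-4, max_iter=100):
    """Newton iteration on a scalar log-density; returns the mode m and l''(m)."""
    m = start
    for _ in range(max_iter):
        grad = (logp(m + h) - logp(m - h)) / (2.0 * h)
        curv = (logp(m + h) - 2.0 * logp(m) + logp(m - h)) / (h * h)
        step = -grad / curv
        m += step
        if abs(step) < 1e-8:
            break
    curv = (logp(m + h) - 2.0 * logp(m) + logp(m - h)) / (h * h)
    return m, curv


def t_log_kernel(x, m, scale, df):
    """Log of the Student-t density up to a constant that cancels in the ratio."""
    return -0.5 * (df + 1.0) * math.log1p(((x - m) / scale) ** 2 / df)


def mh_step(current, logp, m, v, rng, df=5):
    """One independence Metropolis-Hastings update with a t proposal of variance v."""
    scale = math.sqrt(v * (df - 2.0) / df)   # a t with this scale has variance v
    chi2 = rng.gammavariate(df / 2.0, 2.0)   # chi-squared with df degrees of freedom
    proposal = m + scale * rng.gauss(0.0, 1.0) / math.sqrt(chi2 / df)
    lp_new = logp(proposal)
    if lp_new == -math.inf:                  # proposal outside the support
        return current
    log_ratio = (lp_new - t_log_kernel(proposal, m, scale, df)) - (
        logp(current) - t_log_kernel(current, m, scale, df)
    )
    if math.log(1.0 - rng.random()) < log_ratio:
        return proposal
    return current
```

As a usage example, for the log-density `3 * log(t) - 2 * t` on positive `t` (a gamma kernel with shape 4 and rate 2), `find_mode` started at 1.0 returns a mode near 1.5 and curvature near -4/3, and a chain of `mh_step` updates should average close to 2.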

Step 4: Sampling from $f(\theta, x \mid z, y)$. Despite the fact that the proposed moves in the above step are frequently accepted, it is still the case that, due to high posterior correlation between the states, $x$ and $z$, and the parameters, $\tilde\theta$, the resulting Markov chain Monte Carlo method mixes quite slowly. To combat this we effectively integrate out the $x$ states and obtain samples from $f(\tilde\theta, x \mid z, y)$. This is possible as we know the form of $f(x \mid z, \tilde\theta, y)$, given previously as the product of gamma densities. We have,

$$f(\tilde\theta, x \mid z, y) = f(x \mid z, \tilde\theta, y)\, f(\tilde\theta \mid z, y) \propto f(y \mid x)\, f(z, x \mid \tilde\theta)\, f(\tilde\theta).$$

Therefore,

$$f(\tilde\theta \mid z, y) \propto \frac{f(y \mid x)\, f(z, x \mid \tilde\theta)\, f(\tilde\theta)}{f(x \mid z, \tilde\theta, y)}.$$

We iterate through the elements of $\tilde\theta$ in turn drawing from $f(\mu, x \mid \sigma^2, \rho, z, y)$, $f(\sigma^2, x \mid \mu, \rho, z, y)$ and $f(\rho, x \mid \mu, \sigma^2, z, y)$. The Metropolis scheme is the same in each case so we shall detail sampling from $f(\mu, x \mid \sigma^2, \rho, z, y)$.

We have $f(\mu, x \mid \sigma^2, \rho, z, y) = f(x \mid z, \mu, \sigma^2, \rho, y)\, f(\mu \mid \sigma^2, \rho, z, y)$. Note that $f(\mu \mid \sigma^2, \rho, z, y) \propto f(\tilde\theta \mid z, y)$, given above. Let us denote $l(\mu) = \log f(\mu \mid \sigma^2, \rho, z, y)$. We iterate to the mode of $l(\mu)$, $m$, recording $l''(m)$. We sample our proposal $\mu^p \sim g(\mu \mid m, v)$, a t-density with mean $m$, variance $v = -1/l''(m)$ and 5 degrees of freedom. We then sample $x^p \sim f(x \mid z, \mu^p, \sigma^2, \rho, y)$. Letting $\mu$ and $x$ represent our current values, we accept the proposed values, $\mu^p$ and $x^p$, with log probability,

$$\log P(\mu, x \to \mu^p, x^p) = \min\{0,\; l(\mu^p) - \log g(\mu^p \mid m, v) - l(\mu) + \log g(\mu \mid m, v)\}.$$

We find that this independence Metropolis proposal accepts moves with high probability.

7.2 Estimation for GARCH Processes

The estimation procedure for the GARCH models is aided by the conditional updating structure of the latent variables. Thus the Gibbs sampling scheme can be directly applied. We propose a Markov chain Monte Carlo procedure which allows blocking for our GARCH structure models of Section 4. Note that in this case we had

$$f(y, \sigma^2, z) = N(y; 0, \sigma^2)\, \mathrm{Ig}(\sigma^2; \nu/2, \delta^2/2)\, \mathrm{ga}(z; \phi, \sigma^{-2}),$$

from which all our conditional densities arise. In order to carry out a general Markov chain Monte Carlo method we actually retain the auxiliary structure to perform estimation, rather than attempting to integrate variables out analytically. Let us denote the unknown parameters by $\theta$. Then we can perform the following sweeps on a system, see middle graph in Figure 1:

1. For $t = 1, \ldots, n$ sample $z_t$ from $f(z_t \mid \sigma_t^2, \sigma_{t+1}^2, y_t) \propto f(z_t \mid \sigma_t^2)\, f(\sigma_{t+1}^2 \mid z_t, y_t)$.

2. For $t = 1, \ldots, n$ sample $\sigma_t^2$ from $f(\sigma_t^2 \mid y_t, y_{t-1}, z_t, z_{t-1}) \propto f(y_t \mid \sigma_t^2)\, f(\sigma_t^2 \mid z_{t-1}, y_{t-1})\, f(z_t \mid \sigma_t^2)$.

3. Sample $\theta$ from

$$f(\theta \mid y, z, \sigma^2) \propto f(\theta)\, f(z_1 \mid \theta)\, f(y_1 \mid \theta) \prod_{t=2}^{n} f(y_t \mid \sigma_t^2, \theta)\, f(\sigma_t^2 \mid z_{t-1}, y_{t-1}, \theta)\, f(z_t \mid \sigma_t^2, \theta).$$

The dependence upon the fixed parameters has been suppressed from the notation of steps (1) and (2). Note that in steps (1) and (2) we are, in fact, sampling directly from the reduced conditionals $f(z \mid \sigma^2)$ and $f(\sigma^2 \mid y, z)$ where $z = (z_1, \ldots, z_n)'$ and $\sigma^2 = (\sigma_1^2, \ldots, \sigma_n^2)'$. Fortunately the conditional independence structure means that this task reduces to univariate draws which are usually straightforward. Indeed the densities in (2) will usually be of standard form and the densities of (1) can generally be sampled from efficiently using accept-reject sampling. The procedure in (3) may be speeded up by using the reduced conditional densities $f(y_t \mid z_t)$ and $f(z_{t+1} \mid z_t, y_t)$ given for the specific forms of Section 4. In this case we use the following density for step (3):

$$f(\theta \mid y, z) \propto f(\theta)\, f(z_1 \mid \theta)\, f(y_1 \mid \theta) \prod_{t=2}^{n} f(y_t \mid z_t, \theta)\, f(z_t \mid z_{t-1}, \theta).$$

Note that $f(\theta)$ is the prior for $\theta$, whilst $f(z_1 \mid \theta)$, $f(y_1 \mid \theta)$ are the stationary densities for the first latent variable and initial observation respectively. For large data sets a Gaussian proposal on these parameters (inside a Metropolis step) should work well as the posterior will be asymptotically Normal.

7.3 GARCH volatility model

To show how steps (1) and (2) of the above Gibbs procedure can be carried out efficiently in practice we examine the GARCH structure for volatility. In this case step (2) may be carried out directly as

$$f(\sigma_t^2 \mid y_t, y_{t-1}, z_t, z_{t-1}) = \mathrm{Ig}\left(\sigma_t^2 \,\Big|\, \tfrac{1}{2}(\nu + 4\phi + 2),\; \tfrac{1}{2}(\delta^2 + y_t^2 + y_{t-1}^2 + 2z_t + 2z_{t-1})\right).$$

Step (1) proceeds in the following manner. Let us examine the log-density

$$\log f(z_t \mid \sigma_t^2, \sigma_{t+1}^2, y_t) = c + \log f(z_t \mid \sigma_t^2) + \log f(\sigma_{t+1}^2 \mid z_t, y_t).$$

Letting $a = \delta^2/2 + y_t^2/2$, $b = \phi + 1/2 + \nu/2$ and $d = (\sigma_t^2 + \sigma_{t+1}^2)/(\sigma_t^2 \sigma_{t+1}^2)$, we have

$$\log f(z_t \mid \sigma_t^2, \sigma_{t+1}^2, y_t) = c - d z_t + (\phi - 1) \log z_t + b \log(z_t + a),$$

where support is on $z_t \ge 0$. By changing variable to $x = z_t + a$, $x > a$, we have, for $\phi \ge 1$,

$$\begin{aligned}
\log f(x \mid \sigma_t^2, \sigma_{t+1}^2, y_t) &= c - dx + (\phi - 1) \log(x - a) + b \log x \\
&\le c - dx + (\phi - 1) \log x + b \log x = k + \log g(x).
\end{aligned}$$

We may sample easily from the density $g(x)$ which is $\mathrm{ga}(x; \phi + b, d)$, truncated to be greater than $a$, then accepting the sample $x$ with probability

$$\exp[(\phi - 1)\{\log(x - a) - \log x\}].$$

This is the rejection algorithm, see Ripley (1987). We may then transform back to get $z_t = x - a$, yielding a direct sample from the required posterior.
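The rejection step for the auxiliary variable can be sketched as below. This is a minimal illustration: the symbol `phi` for the gamma shape of the auxiliary variable is an assumed label, the function name `sample_z_garch` is hypothetical, and the truncation of the gamma proposal to values above `a` is handled by simply redrawing, which is an assumed choice that can be slow when `a` lies far in the upper tail.

```python
import math
import random


def sample_z_garch(a, b, d, phi, rng):
    """Draw z with log-density c - d*z + (phi - 1)*log(z) + b*log(z + a), z > 0."""
    if phi < 1.0:
        raise ValueError("the envelope used here requires phi >= 1")
    while True:
        x = rng.gammavariate(phi + b, 1.0 / d)  # proposal ga(phi + b, d), scale = 1/d
        if x <= a:                              # enforce the truncation x > a
            continue
        log_accept = (phi - 1.0) * (math.log(x - a) - math.log(x))
        if math.log(1.0 - rng.random()) <= log_accept:
            return x - a                        # transform back to z = x - a
```

With `a = 1`, `b = 2`, `d = 1.5` and `phi = 2` the target is proportional to $z(z+1)^2 e^{-1.5z}$, whose mean can be computed by numerical integration and compared with the average of the draws.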

[Figure 1 image: three dependency graphs linking the observations y1, y2, the variances σ1², σ2², σ3² and, in the second and third graphs, the auxiliary variables z1, z2.]

Figure 1: Dependency structure for the ARCH(1), GARCH and Stochastic Volatility models.

[Figure 2 image: a trace plot of the sampled values and density panels labelled f( v | y ), f( beta | y ) and f( alpha | y ).]

Figure 2: Top left: 60000 draws from the posterior of $\mu$. Top right: The posterior of $\nu$. Bottom: The posteriors of $\alpha$ and $\beta$.

[Figure 3 image: density panels labelled f( mu | y ), f( sigma2 | y ) and f( rho | y ).]

Figure 3: Top: The posterior of $\mu$. Middle: The posterior of $\sigma^2$. Bottom: The posterior of $\rho$.

[Figure 4 image: two time series panels with the week index (0 to 300) on the horizontal axis.]

Figure 4: Top: The number of firearm homicides per week in Cape Town from 1 January 1986 to 31 December 1991, together with (mean) smoothed and filtered estimates of the underlying Poisson parameter. Original Series: Mean=2.617, variance=6.568. Bottom: The mean of the filtered state (solid line) and the mean of the smoothed state (dashed line).

[Figure 5 image: a three by three grid of panels; in each row a residual time series plot, a panel titled "Correlogram" and a panel titled "Q plot".]

Figure 5: Left to right, L: The $n_t$ residuals against time. M: The correlogram of the $n_t$ residuals. R: The quantile plot of the $u_t$ residuals. TOP: Po model. MIDDLE: Pg model. BOTTOM: Pga time series model.