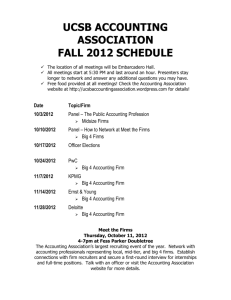

What are we learning from business training and
