
Explore ECON

Organised by Parama Chaudhury, Cloda Jenkins, Christian Spielmann & Frank Witte

This Workshop is sponsored by the Department of Economics at UCL.

Programme

13:15-13:55

North Cloisters

Registration and Coffee

13:55-14:00

Events Marquee, Front Quad

Welcome Address

Professor Sir Richard Blundell

14:00-15:00

Events Marquee, Front Quad

Session 1 Presentations: Testing Economic Theories

Session Chair: Dr Marcos Vera-Hernández

Yihan Dong and Kin Fung: How does Tariff Reduction Affect Wages Across Countries? How Important is the Total Factor Productivity in Wage Determination?

Mateusz Stalinski: Consequences of Incomplete Employment Contracts in a Laboratory Experiment

Sukhi Wei: Open Source: In Search of Cures for Neglected Tropical Diseases

Paul Kimon Weissenberg: The Case for a Fiscal Union in the Eurozone

15:00-15:10

Events Marquee, Front Quad

First Year Challenge Presentations/Awards

Introduced by Dr Parama Chaudhury

15:10-16:10

Events Marquee, Front Quad

Session 2 Presentations: Policies and Interventions

Session Chair: Professor Martin Cripps

Nareen Baktor Sing: Realising the Microfinance Dream: How Can Microfinance Really Help the Poor?

Daniel Sonnenstuhl: How Much Would You Pay for Fair-Trade Clothes?

Teresa Steininger: Can Early Childhood Intervention Programs be Successful on a Large Scale: Evidence from Head Start

Leonie Westhoff: Economic Costs of Mental Illness in the United Kingdom: The Case for Intervention

16:10-16:30

North Cloisters

Posters & Multimedia Presentations

Elliot Christensen, Amin Oueslati and Liese Sandeman: India and Competitive Federalism

Wenhui Gao and Georgios Kotzamanis: How Has Syriza Affected the Greek Economy?

Nathaniel Greenwold and Dmitry Pastukhov: Is the Islamic State an Economically Viable State?

Ali Merali and Ciprian Tudor: Is Your Degree Worthless? An Experiment

Delon Qiu: Is Deflation to Blame for Japan's Economic Woes?

Kristin Wende: An Evaluation of Economic Policy in Germany from 1918 to 2016

Yanhan Cui and Danyun Zhou: Stagnation in Student Performance: Can Performance-Related Pay be the Solution?

Coffee Break

16:30-17:45

Events Marquee, Front Quad

Session 3 Presentations: Dissertation Research

Session Chair: Dr Valerie Lechene

You Lim: The Effect of Low Interest Rates on Household Expectation Formation in the U.S.

Robert Palasik: How to Reform Banking Governance?

Fran Silavong: The Impact of House Prices on Fertility Decision and its Variation based on Population Density

Fangzhou Xu: How to Measure the Economic Impact of UCL’s Newham Campus on the Local Region

17:45-18:30

Events Marquee, Front Quad

Keynote Address

Sharon White, Ofcom

18:30-20:00

North Cloisters

Reception and Prize-giving

Judging Panel

Janet Henry, Global Chief Economist, HSBC

Gill Hammond, Director of the Centre for Central Banking Studies, Bank of England

Adam Lyons, Economics Advisor, Europe Group, Department for International Development (DfID, UK Government)

Stephen Smith, Deputy Head of Department of Economics, UCL

Delegate List

Anthony Abitbol
David Anzolin
Syaza Azmi
Nareen Baktor Sing
Flora Bowing
Wendy Carlin
Sofia Cattaneo
Camila Cea Moore
Amirah Chairil
Parama Chaudhury
Elliott Christensen
Joel Christoph
Martin Cripps
Viv Crockford
Yanhan Cui
Rachel Darby
Robert Denham
Noel Dobi
Caroline Dockes
Yihan Dong
Rebeca Eaton
Rohail Farishta
Natasha Feldman
Dilly Fung
Kenneth Fung
Kin Fung
Wenhui Gao
Stefan Gitman
Nathaniel Greenwold
Neerav Handel Subbiah
Patrick Hoelscher
Arne Hofmann
Ben Ikanade-Agba
Amir Jabarivasal
Cloda Jenkins
Kieron Jones
Pawel Kaminski
Ye Huan Khor
Andrey Khvostov
Georgios Kotzamanis
Sean Krishnani
You Lim
Chen Yue Lok
Lorenzo Lotti
Helen Matthews
Miriam Matthiessen
Ali Merali
Konrad Mierendorff
Slava Mikhaylov
Aishah Omar
Amin Oueslati
Karl Overdick
Robert Palasik
Dmitry Pastukhov
Sophie Peter
Ian Preston
Delon Qiu
Arturo Romero Yáñez
Jeff Rowley
Gyorgy Attila Ruzicska
Leise Sandeman
Balli Sarkaria
Simon Schröder
Toluwalase Seriki
Shuhaira Shaidan
Fran Silavong
Anthony Smith
Daniel Sonnenstuhl
Guglielmo Spalletti Trivelli
Christian Spielmann
Mateusz Stalinski
Teresa Steininger
Robert Stevens
Daniel Szabo
Jin Tan
Rachel Tan
Vincent Tong
Hong Tran
Guido Tubaldi
Ciprian Tudor
Marcos Vera-Hernández
Nirusha Vigi
Sukhi Wei
Paul-Kimon Weissenberg
Kristin Wende
Leonie Westhoff
Frank Witte
Fangzhou Xu
Anastasia Yermakova
Huijing Yu
Darya Zakharova
Danyun Zhou
Henry Zhu


HOW DOES TARIFF REDUCTION AFFECT WAGES ACROSS COUNTRIES? HOW IMPORTANT IS THE TOTAL FACTOR PRODUCTIVITY IN WAGE DETERMINATION?

Kin Kwan Fung¹
2nd year, B.Sc Economics
University College London

Yihan Dong
2nd year, B.Sc Statistics, Economics and Finance
University College London

Explore Econ Undergraduate Research Conference

March 2016

¹ The assistance of our research supervisor, Dr. Parama Chaudhury, Senior Teaching Fellow at University College London, is gratefully acknowledged.

How does tariff reduction affect wages in different countries?

Does total factor productivity play a role in wage determination?

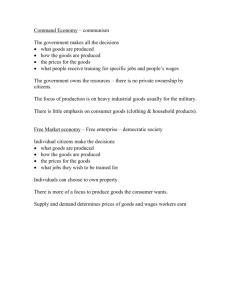

Part 1. Introduction

Is trade liberalisation beneficial to workers and to the economy as a whole? This paper sets up a model based on the analytical framework of classical labour economics in order to examine the long-standing position of the World Bank and the IMF that opening up to trade is integral to successful economic reform.

1.1 Defining trade liberalisation

The effect of trade liberalisation on the global economy is widely discussed by economists. However, once geographical and temporal differences are taken into account, its true influence appears ambiguous. Most policymakers at international economic organisations, such as the World Bank and the International Monetary Fund, would argue that opening up to trade is an integral part of any economic reform; they are unanimous in asserting a positive relationship between openness to trade and social welfare. This paper measures the impact of tariff changes on wage levels in different industries. Many studies have documented that trade reforms increase the efficiency and growth of the economy (Hay 2001; Muendler 2002). Nevertheless, according to Harrison and Hanson (1994), these papers are typically plagued by serious econometric and data problems.

Trade liberalisation is the process of reducing or eliminating barriers to trade between countries, including the reduction or abolition of tariffs, the removal or enlargement of import quotas, the abandonment of fixed exchange rates, and the reduction of import regulation and foreign exchange controls (Black, Hashimzade and Myles 2012). Our paper focuses on the effects of tariff reduction on key labour market characteristics.

1.2 Previous research

Pavcnik et al. (2004) argue that industry affiliation is an important indicator of how sensitive wages are to changes in trade policy. Studies that do not control for industry-specific variables may therefore produce unreliable results. This argument is supported by Verhoogen (2008), who analysed panel data on Mexican manufacturing plants and found that more productive plants producing higher-quality output were more sensitive to depreciation of the exchange rate and exhibited larger wage changes. This finding explains why wage inequality in Mexico was larger during the peso-crisis period (1993-1997). However, the quality-upgrading mechanism driven by other forms of trade liberalisation has not previously been examined in detail. This paper analyses the relationship between tariffs and wages, taking into account the effect of tariff changes on productivity growth, using panel data for different industries. Amiti and Davis (2008) carried out similar research using Indonesian manufacturing census data for the period 1991 to 2000. By separately examining the impacts of changes in tariffs on final and intermediate goods, they found that a reduction in input tariffs is more likely to raise wages in import-using firms than in firms that source locally. On the other hand, a reduction in output tariffs boosts wages in exporting firms but lowers wages in import-competing firms.

The three studies above focus on three specific developing countries (Brazil, Mexico and Indonesia). It is tempting to generalise these results and claim that the identified relationship between tariffs and wages holds for economies and industries not covered by the studies. However, country-specific characteristics, such as population, level of technological development, phase of the business cycle and labour market policies, have to be taken into account before any conclusion is drawn. This paper estimates this omitted effect using industry-specific data for multiple countries. In order to focus on estimating the effect of industry- and country-specific variables, we exclude the impact of changes in tariffs on intermediate goods from our model, by assuming that there is no correlation between the input sources of a firm and its industry and country affiliation. We argue that the tariff level on intermediate goods makes a negligible difference to the cost of production. Under this assumption, the model is expected to yield an unbiased and robust estimate of the effect of industry and country characteristics on the relationship between tariff reduction and wage changes.

1.3 Structure of the paper

This research paper is divided into four parts. Following the introduction in Part 1, Part 2 presents the analytical framework and the model used in our research, and lays out the possible channels through which tariffs can affect wages. Part 3 describes the data and presents the empirical results of the regression models. Part 4 discusses some directions for future research suggested by our results.

Part 2. Methodology

2.1 Theoretical framework

Adopting a classical framework of international economics, the marginal productivity of labour within a specific industry of a specific country can be described by $\lambda_{ij}$ (country $i$, industry $j$) such that

$$\lambda_{ij} = \frac{\partial F(C_i, N_j)}{\partial N_j}$$

where

$N_j$ := the total number of workers in the industry;

$C_i$ := the measure of national characteristics;

$F$ := the production function that maps the national characteristics, such as the technology level, and the number of workers within the industry to output. As is standard, $F$ is non-negative, increasing and concave in $N_j$, so that the marginal productivity $\lambda_{ij}$ is positive and decreasing in $N_j$.

Recall that labour income equals the marginal revenue product of labour:

$$W_{ij} = P_{ij}\,\lambda_{ij}$$

where $P_{ij}$ := the local price of the homogeneous output (country $i$, industry $j$).
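To make the marginal revenue product concrete, the sketch below assumes a hypothetical Cobb-Douglas form $F(C, N) = C\,N^{\alpha}$ with $\alpha = 0.6$ (an illustrative choice, not one made in this paper), approximates $\lambda = \partial F/\partial N$ by a central finite difference, and evaluates $W = P\lambda$. The function names and numbers are placeholders for illustration only.

```python
ALPHA = 0.6  # hypothetical output elasticity of labour, 0 < ALPHA < 1


def output(c: float, n: float) -> float:
    """Hypothetical Cobb-Douglas production function F(C, N) = C * N**ALPHA."""
    return c * n ** ALPHA


def marginal_product(c: float, n: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of lambda = dF/dN."""
    return (output(c, n + h) - output(c, n - h)) / (2 * h)


def wage(price: float, c: float, n: float) -> float:
    """Wage as the marginal revenue product of labour: W = P * lambda."""
    return price * marginal_product(c, n)


if __name__ == "__main__":
    c, n, price = 2.0, 100.0, 1.5  # illustrative values, not data from the paper
    exact = ALPHA * c * n ** (ALPHA - 1)  # analytic dF/dN for this functional form
    print("lambda (finite difference):", marginal_product(c, n))
    print("lambda (analytic):", exact)
    print("W = P * lambda:", wage(price, c, n))
```

Because $\alpha < 1$, raising employment lowers $\lambda$, which is the concavity property assumed for $F$.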

If labour is homogeneous and perfectly substitutable across industries (a strong assumption on which our regression models and analytical framework are built), we can derive a labour market clearing condition: no worker can obtain a higher wage by moving to another industry. In a particular country $i$:

$$W_i = P_j\,\lambda_j = P_{-j}\,\lambda_{-j}$$

Here $-j$ denotes all industries other than $j$.
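The clearing condition can be illustrated for a hypothetical two-industry economy with a fixed labour force $L$ and the same illustrative Cobb-Douglas technology in both industries, using bisection as the solution method (all assumptions made for this sketch only). It finds the allocation at which $P_1\lambda_1 = P_2\lambda_2$, so no worker gains by switching industry.

```python
def mrp(price: float, tech: float, n: float, alpha: float = 0.6) -> float:
    """Marginal revenue product P * dF/dN for a hypothetical F = tech * n**alpha."""
    return price * alpha * tech * n ** (alpha - 1)


def clearing_allocation(total_labour: float, p1: float, c1: float,
                        p2: float, c2: float, tol: float = 1e-10) -> float:
    """Employment N1 in industry 1 at which P1*lambda1(N1) = P2*lambda2(L - N1).

    The wage gap MRP1(N1) - MRP2(L - N1) is strictly decreasing in N1, very
    large near N1 = 0 and very negative near N1 = L, so bisection finds the
    unique allocation where wages are equal across the two industries.
    """
    lo, hi = 1e-12, total_labour - 1e-12
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        gap = mrp(p1, c1, mid) - mrp(p2, c2, total_labour - mid)
        if gap > 0:   # industry 1 pays more, so workers would move into it
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    n1 = clearing_allocation(100.0, p1=1.5, c1=2.0, p2=1.0, c2=2.0)
    print(f"N1 = {n1:.4f}, N2 = {100.0 - n1:.4f}")
    print("wage in 1:", mrp(1.5, 2.0, n1), "wage in 2:", mrp(1.0, 2.0, 100.0 - n1))
```

With identical technology, a 50% higher output price in industry 1 draws workers into it until wages are equalised; for this functional form the ratio $N_1/N_2$ equals $(P_1C_1/P_2C_2)^{1/(1-\alpha)}$.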

This theoretical framework provides the backbone that supports our research paper.

2.2 Regression models

Our research method is akin to the one adopted by Stone and Cepeda (2011). The differences are that we do not take the heterogeneity of labour into account and that we control for national characteristics. In Section 2.1 the following condition was obtained by means of the marginal revenue product approach (subscript $i$ corresponds to the $i$th country, $j$ to the $j$th industry, and $t$ denotes time):

$$W_{ijt} = P_{ijt}\,\lambda_{ijt}$$

Taking natural logarithms on both sides of the equation yields:

$$\ln W_{ijt} = \ln P_{ijt} + \ln\lambda_{ijt}$$

This can be treated as a column vector if more than one factor of production is included (e.g. labour, capital and land). In this case, the elements of the vector $W_{ijt}$ are the prices of the factors of production. Suppose that the vector $s_{ijt}$ denotes the share of each input in the production process. Our equilibrium condition can then be generalised and written in vector form:

$$s_{ijt}^{T}\ln W_{ijt} = \ln P_{ijt} + \ln\lambda_{ijt}$$

Taking differences between two subsequent periods:

$$\Delta\left(s_{ij}^{T}\ln W_{ij}\right) = \Delta\ln P_{ij} + \Delta\ln\lambda_{ij}$$
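With a single factor of production, as in Section 3.3, the share vector reduces to the scalar $s_{ij} = 1$, and the differenced condition can be written out term by term (a restatement under that one-factor assumption, using a backward difference between periods $t-1$ and $t$):

$$
\ln W_{ijt} - \ln W_{ij,t-1} = \left(\ln P_{ijt} - \ln P_{ij,t-1}\right) + \left(\ln\lambda_{ijt} - \ln\lambda_{ij,t-1}\right)
\quad\Longleftrightarrow\quad
\Delta\ln W_{ij} = \Delta\ln P_{ij} + \Delta\ln\lambda_{ij}
$$

If, in addition, $\Delta\ln P_{ij}$ is negligible relative to $\Delta\ln\lambda_{ij}$ (the assumption made in Section 3.3), then $\Delta\ln W_{ij} \approx \Delta\ln\lambda_{ij}$, so wage growth is driven by productivity growth.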

In order to isolate the effects of changes in trade on factor prices, the effects of general structural variables on prices and productivity have to be disentangled. This can be achieved by running the regression in two steps. First, we regress changes in prices and productivity on a set of structural variables. Then, we use the estimates from the first step to recover the changes in primary factor prices attributable to each change in a structural variable. This procedure is useful because the dependent variables for the second stage are not directly observable.

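$$\text{Stage 1:}\quad \Delta\ln P_{ij} + \Delta\ln\lambda_{ij} = \alpha + \gamma^{T}\Delta z_{ij} + \varepsilon_{ij}$$

The vector $\Delta z_{ij}$ contains structural variables such as the tariff level, the level of imports and exports, the industry's contribution to the economy, etc. In the second step, $n$ regressions are run, one for each significant parameter $\hat{\gamma}_k$:

$$\text{Stage 2:}\quad \hat{\gamma}_k\,\Delta z_{kij} = \mu_k + \delta\,\Delta\ln W_{kij} + \nu_{kij}$$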

Although this methodology can be adopted to analyse multiple factors of production, only labour is considered in our research.
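The two-step procedure described above can be sketched with NumPy as below. This is a minimal illustration under stated assumptions, not the code used for this paper: it uses classical OLS standard errors, treats a coefficient as significant when $|t| > 1.96$ (a normal approximation chosen here for simplicity), and runs on synthetic data; the names `ols` and `two_stage` are hypothetical.

```python
import numpy as np


def ols(y, x):
    """OLS with an intercept; returns coefficients and classical standard errors."""
    X = np.column_stack([np.ones(len(y)), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (X.shape[0] - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return beta, se


def two_stage(price_plus_tfp, dz, dlnw, t_crit=1.96):
    """Stage 1: regress dlnP + dlnlambda on the structural changes dz (n x K).
    Stage 2: for each significant gamma_k, regress gamma_k * dz_k on dlnW
    and keep the slope delta_k. Returns (stage-1 coefficients, {k: delta_k})."""
    gamma, se = ols(price_plus_tfp, dz)
    deltas = {}
    for k in range(dz.shape[1]):
        t_stat = gamma[k + 1] / se[k + 1]      # index 0 is the intercept alpha
        if abs(t_stat) > t_crit:               # normal approximation, assumed here
            lhs = gamma[k + 1] * dz[:, k]      # part of dlnP + dlnlambda due to z_k
            coef, _ = ols(lhs, dlnw)
            deltas[k] = coef[1]
    return gamma, deltas


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 200
    dz = rng.normal(size=(n, 2))                      # e.g. tariff and import changes
    y = 0.01 - 0.5 * dz[:, 0] + rng.normal(scale=0.05, size=n)
    dlnw = y + rng.normal(scale=0.05, size=n)         # single factor: dlnW tracks y
    gamma, deltas = two_stage(y, dz, dlnw)
    print("stage 1 coefficients:", gamma)
    print("stage 2 slopes:", deltas)
```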

Part 3. Graphic analysis and regression results

3.1 Data Description (Trends in wages and tariffs)

We first describe the trends in the two key variables of interest, wages and tariffs, using the Trade, Production and Protection 1976-2004 dataset by Nicita and Olarreaga (2006). We split the dataset into three groups by country classification: developed countries, developing countries and countries in transition (Table 5).

Countries are classified according to the Statistical Annex issued by the United Nations in 2012.

From 1976 to 2002, average wages in the developed countries covered by the data show three episodes of increase (1976-1980, 1984-1994, 1999-2000) and three declines (1980-1984, 1994-1999, 2000-2002), while average wages in the developing countries rose steadily from 1976 to 2001 (Figure 1). The average wages of developed countries clearly fluctuated more sharply than those of developing countries. We identify at least three possible sources of inaccuracy in these figures. First, the average wages, obtained by dividing the wage bill by the total number of employees in the corresponding industry of each country, are nominal rather than real, so inflation bias is inherent in the data. Second, the Figure 1 data are a simple average across industries, so the figures are not properly weighted. Third, the calculated averages depend heavily on the availability of raw data, which affects the representativeness of the overall figure.

To reduce the error arising from the simple-average method, we compute weighted average wages by dividing the sum of all wage bills in a year by the corresponding total number of employees (Figure 2). Average wages in developed countries show a consistent upward trend, while those in developing countries display a similar but slower rise. All three lines drop in 2002 because of missing data, as pointed out in the previous paragraph.
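The difference between the two averaging methods can be seen in a small example with hypothetical figures for three industries: one small, high-wage industry pulls the simple average up, while the weighted average reflects where most employees actually work. The function names are illustrative.

```python
def simple_average_wage(wage_bills, employees):
    """Unweighted mean of industry average wages (the Figure 1 method)."""
    per_industry = [bill / staff for bill, staff in zip(wage_bills, employees)]
    return sum(per_industry) / len(per_industry)


def weighted_average_wage(wage_bills, employees):
    """Total wage bill divided by total employment (the Figure 2 method)."""
    return sum(wage_bills) / sum(employees)


if __name__ == "__main__":
    # Hypothetical figures for three industries in one country-year
    bills = [1000.0, 50.0, 400.0]   # wage bills
    staff = [100, 2, 40]            # employees
    print("simple average:", simple_average_wage(bills, staff))
    print("weighted average:", weighted_average_wage(bills, staff))
```

Here the two-employee industry, with an average wage of 25, lifts the simple average to 15, while the employment-weighted average is about 10.2.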

Although this dataset gives useful information on total industry wage bills and total employment, it does not give a comprehensive picture of wage movements. In the following section we therefore use an alternative dataset, the Occupational Wages around the World (OWW) database by Freeman and Oostendorp (2012), to examine short-run trends in wages.

Using the first dataset, we also examine the trend of tariffs in developed and developing countries (Figures 3, 4 and 5). Tariffs are classified by industry affiliation, indicated by the three-digit ISIC codes, and the industry descriptions are given in Table 6. The average tariff on tobacco manufactures (ISIC 314) is far higher than in other industries across countries, ranging from 17.0% to 40.6% in developed countries and from 19.1% to 78.7% in developing countries. For the other industries in developed countries, tariffs remain below 10% and show a mild decreasing trend (except 311, 313, 314, 322 and 324), while a more widespread decline is observed in developing countries from 1976 to 2004. Figure 6, which shows the average tariffs across all industries in developing and developed countries, suggests that both groups adopted trade liberalisation policies over the past two decades. The average tariff of developed countries was 8.0% in 1991; it then fell steadily, ending at 4.4% in 2004. The average tariff of developing countries rose from 18.6% in 1991 to a peak of 26.3% in 1994, and then fell over the following ten years to 11.5% in 2004.
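The percentage-point and relative changes implied by the average tariffs just quoted can be checked with a few lines of arithmetic. The helper name `change` is hypothetical; keeping percentage points and percent separate avoids a common source of confusion when comparing tariff cuts.

```python
def change(start: float, end: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change as a fraction)."""
    return end - start, (end - start) / start


# Average tariffs (%) reported in the text for Figure 6
episodes = {
    "developed, 1991-2004": (8.0, 4.4),
    "developing, 1991-1994": (18.6, 26.3),
    "developing, 1994-2004": (26.3, 11.5),
}

for label, (start, end) in episodes.items():
    pp, rel = change(start, end)
    print(f"{label}: {pp:+.1f} pp ({rel:+.1%})")
```

The developed-country average fell by 3.6 percentage points (45%) between 1991 and 2004, and the developing-country average by 14.8 points (about 56%) from its 1994 peak.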

3.2 Preliminary Regressions – analysis for the short run

We extract adjusted hourly wage rates for different industries and occupations from the Occupational Wages around the World (OWW) database produced by Freeman and Oostendorp (2012), and merge this information with the trade and tariff data provided by Nicita and Olarreaga (2006), in order to form a more reliable indicator of global trade and the labour market. Note that all wage rates are reported in dollars, and the values of imports and exports are reported in thousand dollars.

In the first stage of the empirical analysis, we choose one developed and one developing country to explore the effect of trade liberalisation policies on imports, exports and wage rates. The United Kingdom is chosen to represent developed countries because of its long-standing status among them. The weighted average tariff of manufacturing industries in the United Kingdom dropped from 5.7% in 1988 to 2.4% in 2003. The data cover ten main manufacturing industries (311, 321, 332, 342, 351, 352, 371, 382, 383, 384) of the country. Among developing countries, Pakistan is a typical, and thus representative, example of a country that has opened up to trade over the past two decades. Another reason for choosing this pair of countries is the relatively limited trade relationship between Pakistan and the United Kingdom. The weighted average tariff of manufacturing industries in Pakistan decreased from 52.8% in 1995 to 19.7% in 2004. The data cover seven main manufacturing industries (311, 321, 323, 342, 351, 352, 384) of the country.

As the Occupational Wages around the World (OWW) database overlaps with the Trade, Production and Protection 1976-2004 dataset for only a small proportion of industries and years, at this stage we only consider data from two consecutive years, 2002 and 2003, using a first-differences specification. The models are:

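$$(1)\quad \Delta\,\text{wage\_UK}_i = \beta_0 + \beta_1\,\Delta\,\text{tariff\_UK}_i + \beta_2\,\Delta\,\text{import\_UK}_i + \Delta\mu_i$$

$$(2)\quad \Delta\,\text{wage\_Pakistan}_i = \delta_0 + \delta_1\,\Delta\,\text{tariff\_Pakistan}_i + \delta_2\,\Delta\,\text{import\_Pakistan}_i + \delta_3\,\Delta\,\text{export\_Pakistan}_i + \Delta\varepsilon_i$$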

Here $i$ denotes occupation types, and $\Delta$ is the backward difference operator. The model assumes that fixed effects (including industry affiliation) have been eliminated by taking differences between paired observations. The values of imports and exports of each industry in the United Kingdom are highly collinear in both 2002 and 2003 (Tables 1 and 2), so the value of exports is excluded from the model for the United Kingdom. For Pakistan, however, the total values of imports and exports of each industry show only a weak correlation (Tables 3 and 4), so the total value of exports is included as a covariate. The regression results are displayed below (p-values in brackets):

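UK:

$$\widehat{\Delta\,\text{wage}}_i = \hat{\beta}_0 + \hat{\beta}_1\,\Delta\,\text{tariff}_i + 5.783\times10^{-9}\,\Delta\,\text{import}_i$$
$$(1.7\times10^{-11})\qquad(0.486)\qquad(0.774)$$

Pakistan:

$$\widehat{\Delta\,\text{wage}}_i = 1.139\times10^{-2} - 1.085\times10^{-1}\,\Delta\,\text{tariff}_i - 1.979\times10^{-8}\,\Delta\,\text{import}_i - 6.657\times10^{-9}\,\Delta\,\text{export}_i$$
$$(0.137784)\qquad(0.000676)\qquad(0.352747)\qquad(0.432064)$$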

There is no significant evidence against $\beta_1 = \beta_2 = 0$ in the case of the UK. This suggests that, at least in the short run, changes in tariffs and imports are not associated with statistically significant changes in UK wage rates. In the case of Pakistan, on the other hand, the coefficient on tariff changes is negative and statistically significant (p = 0.000676): for the seven Pakistani industries covered by the data, tariff reductions are associated with faster wage growth. This suggests that wages in developing countries may be more sensitive to tariffs than those in developed countries. However, owing to the lack of sufficient data, this conjecture cannot be tested statistically.
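A minimal first-differences estimator for two years of paired observations, written with NumPy on simulated data (all numbers hypothetical), shows how differencing removes the time-invariant effect before OLS is applied. It reports classical t-statistics; converting them into p-values like those above would require the t distribution (for example from SciPy), which is left out to keep the sketch dependency-light. The function name is illustrative.

```python
import numpy as np


def first_difference_ols(y_2002, y_2003, x_2002, x_2003):
    """Estimate dy_i = b0 + b'dx_i + du_i from two years of paired observations.

    Differencing removes any time-invariant effect of unit i (e.g. industry
    affiliation), which is the identifying assumption behind models (1) and (2).
    Returns the coefficients (intercept first) and their classical t-statistics.
    """
    dy = np.asarray(y_2003, dtype=float) - np.asarray(y_2002, dtype=float)
    dx = np.asarray(x_2003, dtype=float) - np.asarray(x_2002, dtype=float)
    X = np.column_stack([np.ones(len(dy)), dx])
    beta, *_ = np.linalg.lstsq(X, dy, rcond=None)
    resid = dy - X @ beta
    sigma2 = resid @ resid / (len(dy) - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return beta, beta / se


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 50                                        # hypothetical occupations
    fixed = rng.normal(size=n)                    # unobserved, time-invariant effect
    tariff02 = rng.uniform(0, 10, size=n)
    tariff03 = tariff02 - rng.uniform(0, 2, size=n)   # tariff cuts
    wage02 = fixed + 0.50 - 0.1 * tariff02 + rng.normal(scale=0.01, size=n)
    wage03 = fixed + 0.52 - 0.1 * tariff03 + rng.normal(scale=0.01, size=n)
    beta, t_stats = first_difference_ols(wage02, wage03, tariff02, tariff03)
    print("coefficients:", beta, "t-statistics:", t_stats)
```

The unobserved effect `fixed` is large relative to the noise, yet it drops out entirely after differencing, so the tariff coefficient is recovered without ever observing it.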

It is reasonable to suggest that tariff reductions boost international trade. To test this claim, we analyse the effect of tariff reduction on the value of imports:

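$$\Delta\,\text{import}_{ij} = \alpha_0 + \alpha_1\,\Delta\,\text{tariff}_{ij} + \Delta\gamma_{ij}$$

where $i = 1$ denotes observations in the UK and $i = 2$ denotes Pakistan; $j$ signifies occupations, and $\Delta\gamma_{ij}$ is an error term. The results are shown below (p-values in brackets):

$$\widehat{\Delta\,\text{import}}_{1j} = 1037900 + 388496\,\Delta\,\text{tariff}_{1j}$$
$$(0.309)\qquad(0.828)$$

$$\widehat{\Delta\,\text{import}}_{2j} = 157219 - 681857\,\Delta\,\text{tariff}_{2j}$$
$$(0.1278)\qquad(0.0126)$$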

There is significant evidence that tariff reductions raise the total value of imports of manufacturing industries in Pakistan. By contrast, there is negligible evidence that the total import value of manufacturing industries in the UK is influenced by tariff levels. This result is consistent with the argument that, as trade liberalisation increases competition for domestic firms in developing countries, less developed countries are more likely to run trade deficits after lowering barriers to international trade. It is also argued that under trade liberalisation the sectoral balance of developing countries is more likely to shift, causing a fall in labour demand and structural unemployment, and therefore lower wages and social welfare. The first half of the regression outputs also supports this argument. These issues are studied further in Section 3.3.

Table 1. Correlation matrix, UK 2002

| | wage02 | tariff02 | import02 | export02 |
|---|---|---|---|---|
| wage02 | 1 | 0.12803238 | -0.05076964 | -0.04467823 |
| tariff02 | 0.12803238 | 1 | 0.17563327 | -0.05871051 |
| import02 | -0.05076964 | 0.17563327 | 1 | 0.94721312 |
| export02 | -0.04467823 | -0.05871051 | 0.94721312 | 1 |

Table 2. Correlation matrix, UK 2003

| | wage03 | tariff03 | import03 | export03 |
|---|---|---|---|---|
| wage03 | 1 | -0.02088016 | -0.07681046 | -0.04378443 |
| tariff03 | -0.02088016 | 1 | 0.28050322 | 0.00225885 |
| import03 | -0.07681046 | 0.28050322 | 1 | 0.93488371 |
| export03 | -0.04378443 | 0.00225885 | 0.93488371 | 1 |

Table 3. Correlation matrix, Pakistan 2002

| | wage02 | tariff02 | import02 | export02 |
|---|---|---|---|---|
| wage02 | 1 | 0.9055436 | -0.00149325 | -0.1508307 |
| tariff02 | 0.9055436 | 1 | -0.1953851 | 0.1094565 |
| import02 | -0.00149325 | -0.1953851 | 1 | -0.2001654 |
| export02 | -0.1508307 | 0.1094565 | -0.2001654 | 1 |

Table 4. Correlation matrix, Pakistan 2003

| | wage03 | tariff03 | import03 | export03 |
|---|---|---|---|---|
| wage03 | 1 | 0.89333272 | 0.21182433 | -0.08875042 |
| tariff03 | 0.89333272 | 1 | -0.02336664 | 0.19351617 |
| import03 | 0.21182433 | -0.02336664 | 1 | -0.19876383 |
| export03 | -0.08875042 | 0.19351617 | -0.19876383 | 1 |
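The screening step behind excluding exports from the UK model can be expressed as a short check on a correlation matrix. The sketch below applies it to the Table 1 coefficients; the 0.9 cut-off is an assumed rule of thumb rather than a threshold stated in this paper, the function name is hypothetical, and in practice the check should be applied to the regressors only, not to the dependent variable.

```python
import numpy as np


def collinear_pairs(corr, names, threshold=0.9):
    """Return variable pairs whose absolute correlation exceeds the threshold."""
    corr = np.asarray(corr)
    pairs = []
    for a in range(len(names)):
        for b in range(a + 1, len(names)):
            if abs(corr[a, b]) > threshold:
                pairs.append((names[a], names[b], float(corr[a, b])))
    return pairs


# Table 1 (UK 2002), as reported above
uk_2002 = [
    [1.0, 0.12803238, -0.05076964, -0.04467823],
    [0.12803238, 1.0, 0.17563327, -0.05871051],
    [-0.05076964, 0.17563327, 1.0, 0.94721312],
    [-0.04467823, -0.05871051, 0.94721312, 1.0],
]
names = ["wage02", "tariff02", "import02", "export02"]

if __name__ == "__main__":
    print(collinear_pairs(uk_2002, names))
```

For the UK 2002 table this flags only the import02/export02 pair, which is the pair the text treats as collinear.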

3.3 Regression results on structural factors and total factor productivity

Having seen how trade liberalisation affects wages differently in developing and developed countries, we now investigate how trade-related variables influence wages across a range of industries. In this section, we follow the methodology outlined in Section 2.2 to analyse total factor productivity (TFP) and wage data for the United States from 1976 to 2004. We combine US data from the Trade, Production and Protection dataset by Nicita and Olarreaga (2006) (TPP for short) with the NBER-CES Manufacturing Industry Database (1958-2009) (Becker, Gray and Marvakov 2013). Recall the Stage 1 regression model defined in Part 2 (Methodology):

$$\Delta\ln P_i + \Delta\ln\lambda_i = \alpha + \gamma^{T}\Delta z_i + \varepsilon_i$$

where $i$ denotes the $i$th industry, $P$ is the local price of the homogeneous output, and $\lambda$ is the marginal productivity of labour. $\Delta\ln\lambda$ is assumed to be well approximated by the growth of total factor productivity (TFP). Here $z$ is a vector of structural variables, $\alpha$ is the intercept, and $\gamma$ is a vector containing the coefficient on each structural variable. The following analysis is conducted using a fixed effects model. Because the local price of the homogeneous output of each industry varies much less than the marginal productivity of labour, and is hard to estimate accurately, we assume that $\Delta\ln P_i \ll \Delta\ln\lambda_i$. The magnitude of $\Delta\ln P_i$ therefore has a negligible effect on $\Delta\ln W_i$ (recall from Section 2.2 that $\Delta\ln W_i = \Delta\ln P_i + \Delta\ln\lambda_i$, as here we consider only one factor of production, labour). The estimated model is:

$$\Delta\ln\lambda_i = \alpha + \gamma_1\,\Delta\,\text{tariff}_i + \gamma_2\,\Delta\,\text{import}_i + \gamma_3\,\Delta\,\text{export}_i + \gamma_4\,\Delta\,\text{contribution\_to\_GDP}_i + \varepsilon_i$$

Here tariff is the import-weighted average tariff rate applied to goods entering the US; import and export are the values of goods entering and leaving the US, in thousand dollars; and contribution_to_GDP is the industry's value added as a proportion of the country's total GDP. In the NBER-CES dataset, industries are coded with the six-digit 1997 NAICS system. By matching these observations with the three-digit ISIC-coded observations in TPP, we obtain average TFP data for 26 manufacturing industries in the US from 1976 to 2004. NBER-CES provides the TFP growth of every industry in two different versions; we simply take the mean of each pair of TFP growth values as our estimate.

The GDP data are taken from the Conference Board Total Economy Database (2015) and reported in 1990 US dollars. In the fixed effects model, $\Delta x_j$ is calculated as

$$\Delta x_j = x_{jk} - \frac{1}{n}\sum_{l=1}^{n} x_{jl}$$

where $j$ denotes the $j$th observation, $k$ denotes the base year and $n$ denotes the total number of years. The regression results are shown below, with p-values in parentheses:

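$$\Delta\ln\lambda_i = -0.0096 - 0.0051\,\Delta\,\text{tariff}_i + 0.0000\,\Delta\,\text{import}_i + 0.0000\,\Delta\,\text{export}_i + 8.5230\,\Delta\,\text{contribution\_to\_GDP}_i$$
$$(0.166)\qquad(0.042)\qquad(0.553)\qquad(0.388)\qquad(0.225)$$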

Controlling for $\Delta\,\text{import}_i$, $\Delta\,\text{export}_i$ and $\Delta\,\text{contribution\_to\_GDP}_i$, $\Delta\,\text{tariff}_i$ has a significant effect (at the 5% level) on $\Delta\ln\lambda_i$, i.e. $\Delta\text{TFP}_i$, and therefore, according to our theoretical framework specified in Section 2.1, on $\ln\text{wage}_i$.
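The fixed-effects formula for $\Delta x_j$ given above is the standard within transformation. A minimal NumPy sketch, assuming each row carries a group identifier (industry or country) and that each value is demeaned by its group's mean over all years observed; the function name and example values are hypothetical.

```python
import numpy as np


def within_transform(values, groups):
    """Fixed-effects (within) transformation: subtract each group's mean over time.

    values[m] is the observation in row m and groups[m] its industry or country
    identifier; every row becomes x_jk - (1/n) * sum_l x_jl for its own group.
    """
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    out = np.empty_like(values)
    for g in np.unique(groups):
        mask = groups == g
        out[mask] = values[mask] - values[mask].mean()
    return out


if __name__ == "__main__":
    # Two hypothetical industries: "a" observed for three years, "b" for two
    print(within_transform([1.0, 2.0, 3.0, 10.0, 20.0], ["a", "a", "a", "b", "b"]))
```

Because each group is demeaned separately, unbalanced panels (groups observed for different numbers of years) are handled without extra bookkeeping.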

Using the result from Stage 1, we now estimate the Stage 2 equation. Recall the model from Section 2.2:

$$\hat{\gamma}_k\,\Delta z_{ki} = \mu_{ki} + \delta\,\Delta\ln\text{wage}_{ki} + \nu_{ki}$$

As $\hat{\gamma}_1$ is the only significant parameter in Model 1, we now estimate:

$$-0.005083\,\Delta\,\text{tariff}_i = \mu_i + \delta\,\Delta\ln\text{wage}_i + \nu_i$$

Regression output:

$$-0.005083\,\Delta\,\text{tariff}_i = 0.003231 + 0.097589\,\Delta\ln\text{wage}_i$$
$$(0.146)\qquad(0.110)$$

Here $\hat{\delta}$ is positive, which, following our reasoning in Part 2, can be interpreted as tariff reductions boosting wages, ceteris paribus. However, the estimate is not statistically significant at the 10% level (p = 0.110).

As we are also interested in what determines the magnitude of growth in total factor productivity (TFP), we run Model 3, which explains $\Delta\ln\lambda_i$ using some intuitively related independent variables. The estimated equation is shown below:

$$\Delta\ln\lambda_i = \beta_0 + \beta_1\,\text{ifWTO}_i + \beta_2\,\Delta\,\text{population}_i + \beta_3\,\Delta\,\text{GDP}_i + \theta_i$$

Here $i$ denotes the $i$th country, and $\Delta\ln\lambda$ represents country-level TFP growth, which is regressed on three country-specific characteristics: WTO membership, population and GDP. $\text{ifWTO}_i$ is a binary variable that takes the value 1 if the $i$th country is a member of the WTO and 0 otherwise. A fixed effects model is again applied. The data are from the Conference Board Total Economy Database (2015), in which GDP is reported in 2014 US dollars. Outcome:

$$\Delta\ln\lambda_i = -2.501 + 1.461\,\text{ifWTO}_i + 0.000\,\Delta\,\text{population}_i + 0.000\,\Delta\,\text{GDP}_i$$
$$(0.0015)\qquad(0.0676)\qquad(0.6391)\qquad(0.4058)$$

WTO membership thus has a positive effect on a country's overall TFP growth that is significant at the 10% level (p = 0.0676), ceteris paribus. To analyse this influence further, we exclude the non-WTO countries from the dataset and replace $\text{ifWTO}_i$ by $\text{yearWTO}_i$, the year in which the $i$th country joined the WTO. We obtain the following results:

$$\Delta\ln\lambda_i = 181.500 - 0.092\,\text{yearWTO}_i + 0.000\,\Delta\,\text{population}_i + 0.000\,\Delta\,\text{GDP}_i$$
$$(0.379)\qquad(0.377)\qquad(0.945)\qquad(0.638)$$

In this equation, none of the parameters is significant. Model 3 is based largely on intuition, and its parameter estimates may be heavily biased. One obvious weakness is reverse causality: TFP growth is generally considered to affect GDP in turn. We include GDP to control for potential omitted variables, such as the country's level of development, which may be correlated with both WTO membership and the rate of TFP growth. However, it is very likely that there are other omitted variables we did not control for, or endogenous regressors that would require instrumental variables. In Part 4, we put forward some directions for investigating the issue further.

Part 4. Discussion and conclusion

At the start of our discussion, we would like to offer a new definition of the overall remuneration of workers, namely wages. In reality, where workers are heterogeneous and differ in skill level, they receive a markup over the wage evaluated at the competitive equilibrium (the basic wage). This markup is more commonly referred to as the wage premium. Workers thus receive the sum of the “basic wage” and the “wage premium” as their overall wage.

This paper estimated the effect of import tariffs on wages and how it can vary with total factor productivity. Our empirical approach is a potential way to overcome some weaknesses of current methods used to estimate the effects of trade liberalisation on the wage premium, for example the omission of the influence of TFP growth. In our analysis, we evaluated the impact of trade reforms on basic wages by regressing output prices and productivity on relevant structural factors, including the tariff level, in the first stage. The relationship between the tariff level and wages was then analysed in the second stage. The results were statistically insignificant, ambiguous and thus inconclusive. As a potential direction for further research, we could assess the “market price” of the wage premium by solving the firm's profit maximisation problem with respect to the wage premium at the micro level. Adopting the same theoretical framework as Lourenco S. Paz (2014), we could strengthen his empirical estimation method by assessing the relationship between tariffs and technological progress (TFP), so that the total effect of a change in the tariff level on the wage premium can be obtained.

However, this OLS-based approach may suffer from three potential problems:

First, some variables may be endogenous. One example is the terms of trade and TFP. The terms of trade are included in the regression to control for the effect of the tariff level on TFP; however, TFP may in turn affect the terms of trade (reverse causation). A stylised example is that a TFP increase reduces firms' production costs, which then leads to better terms of trade. Possible remedies include finding an instrumental variable for each endogenous variable and estimating the model by two-stage least squares (2SLS). Candidate instrumental variables for the terms of trade include the home inflation rate, average import prices and even the home central bank interest rate.

Second, omitted variables may bias the estimators either upwards or downwards, making them inconsistent. The regressions we run clearly omit the influence of uneven government support for research and development programmes within each country over time. Another example is individuals' self-selection into or out of the labour force in response to wage changes: if trade reform drives unskilled workers out of the home economy, the estimated effect of tariffs on TFP will be affected. That said, the time-specific constant captures some general trends that apply to all countries, such as improving education levels and increasing female participation in the workforce.
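The direction of omitted-variable bias can be checked with a short simulation. In this hypothetical setup the true model is y = b1·x + b2·w + e, where w (standing in for an omitted factor such as R&D support) is correlated with x through w = delta·x + noise. Regressing y on x alone gives a slope whose probability limit is b1 + b2·delta, so the bias is upward when b2·delta > 0 and downward when b2·delta < 0. All names and parameter values are assumptions for illustration.

```python
import random


def slope(y, x):
    """OLS slope of y on x (with intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def short_regression_slope(b1, b2, delta, n=20000, seed=2):
    """Simulate w = delta*x + noise and y = b1*x + b2*w + e, then omit w."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    w = [delta * xi + rng.gauss(0, 1) for xi in x]
    y = [b1 * xi + b2 * wi + rng.gauss(0, 1) for xi, wi in zip(x, w)]
    return slope(y, x)  # w is left out of the regression


up = short_regression_slope(b1=1.0, b2=0.8, delta=0.5)     # plim 1.0 + 0.4
down = short_regression_slope(b1=1.0, b2=-0.8, delta=0.5)  # plim 1.0 - 0.4
print(f"upward-biased: {up:.3f}, downward-biased: {down:.3f}")
```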

Finally, heteroscedasticity may also be relevant to our research, although it is probably less important than the first two problems. It refers to the situation where the variance of the error term u is not constant across values of at least some of the structural factors. Heteroscedasticity does not bias the OLS coefficient estimates, but it makes them inefficient, so they are no longer BLUE (Best Linear Unbiased Estimators), and it invalidates the usual standard errors. Simple remedies include running (feasible) GLS and using heteroscedasticity-robust standard errors.
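Heteroscedasticity-robust standard errors can be computed directly from the OLS residuals. The sketch below, for a simple regression with one regressor, compares the conventional standard error of the slope with White's HC0 robust standard error on hypothetical data whose error spread grows with |x|. It illustrates the remedy under these assumptions and is not the specification used in this paper.

```python
import math
import random


def slope_standard_errors(y, x):
    """Return (slope, conventional SE, HC0 robust SE) for y = a + b*x + u."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    dx = [xi - mx for xi in x]
    sxx = sum(d * d for d in dx)
    b = sum(d * (yi - my) for d, yi in zip(dx, y)) / sxx
    a = my - b * mx
    resid = [yi - a - b * xi for xi, yi in zip(x, y)]
    # Conventional SE assumes one constant error variance sigma^2.
    sigma2 = sum(r * r for r in resid) / (n - 2)
    se_conv = math.sqrt(sigma2 / sxx)
    # White (HC0) SE lets each observation have its own error variance.
    se_hc0 = math.sqrt(sum((d * r) ** 2 for d, r in zip(dx, resid)) / sxx ** 2)
    return b, se_conv, se_hc0


rng = random.Random(3)
x = [rng.uniform(-1, 1) for _ in range(5000)]
y = [1.0 + 2.0 * xi + rng.gauss(0, 2 * abs(xi)) for xi in x]  # spread grows with |x|
b, se_conv, se_hc0 = slope_standard_errors(y, x)
print(f"slope={b:.3f}  conventional SE={se_conv:.4f}  robust SE={se_hc0:.4f}")
```

The slope estimate is the same under both approaches; only its standard error changes. Here the conventional formula understates the uncertainty because the largest errors occur at the extreme values of x, which carry the most weight in the slope.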

Therefore, the model should be refined in these three respects if further empirical analysis is carried out.

References

1. Amiti, M. and Davis, D. (2008) “Trade, Firms, and Wages: Theory and Evidence.” NBER Working Paper No. 14106.

2. Becker, R., Gray, W. and Marvakov, J. (2013) NBER-CES Manufacturing Industry Database. http://www.nber.org/nberces/ Accessed 11 March 2016.

3. Black, J., Hashimzade, N. and Myles, G. (2012) A Dictionary of Economics. 4th ed. Oxford University Press.

4. Freeman, R. and Oostendorp, R. (2012) Occupational Wages around the World (OWW). Available at www.nber.org/oww/ Accessed 11 March 2016.

5. Harrison, A. and Hanson, G. (n.d.) “Who Gains from Trade Reform? Some Remaining Puzzles.”

6. Hay, D. (n.d.) “The Post-1990 Brazilian Trade Liberalisation and the Performance of Large Manufacturing Firms: Productivity, Market Share and Profits.” The Economic Journal, pp. 620-641.

7. Nicita, A. and Olarreaga, M. (2006) “Trade, Production and Protection 1976-2004.” World Bank Economic Review, 21(1).

8. Pavcnik, N., Blom, A., Goldberg, P. and Schady, N. (2004) “Trade liberalization and industry wage structure: Evidence from Brazil.” The World Bank Economic Review, 18(3), pp. 319-344.

9. Paz, L. S. (2014) “Trade liberalization and the inter-industry wage premium: the missing role of productivity.” Applied Economics, 46(4), pp. 408-419. DOI: 10.1080/00036846.2013.848031

10. Stone, S. and Cavazos Cepeda, R. (2011) “Wage Implications of Trade Liberalisation: Evidence for Effective Policy Formation.” OECD Trade Policy Papers, No. 122, OECD Publishing.

11. The Conference Board (2015) The Conference Board Total Economy Database™, May 2015. http://www.conference-board.org/data/economydatabase/ Accessed 11 March 2016.

12. “Trade, Technology, and Productivity: A Study of Brazilian Manufacturers, 1986-1998.” (2012, March 23). Retrieved October 27, 2015.

13. Verhoogen, E. (2008) “Trade, Quality Upgrading, and Wage Inequality in the Mexican Manufacturing Sector.” The Quarterly Journal of Economics, 123(2), pp. 489-530.

Table 5. Country Coverage 1

Developed
Code  Country Name
AUS  Australia
AUT  Austria
BLX  Belgium-Luxemburg
BGR  Bulgaria
CAN  Canada
CYP  Cyprus
CZE  Czech Republic
DNK  Denmark
FIN  Finland
FRA  France
DEU  Germany (76-90 West)
GRC  Greece
HUN  Hungary
ISL  Iceland
IRL  Ireland
ITA  Italy
JPN  Japan
LVA  Latvia
LTU  Lithuania
MLT  Malta
NLD  Netherlands
NZL  New Zealand
NOR  Norway
POL  Poland
PRT  Portugal
ROM  Romania
SVK  Slovakia
SVN  Slovenia
ESP  Spain
SWE  Sweden
CHE  Switzerland
GBR  United Kingdom
USA  United States

Developing
Code  Country Name
DZA  Algeria
ARG  Argentina
BGD  Bangladesh
BEN  Benin
BOL  Bolivia
BWA  Botswana
BRA  Brazil
CMR  Cameroon
CHL  Chile
CHN  China
COL  Colombia
CRI  Costa Rica
CIV  Cote D'Ivoire
ECU  Ecuador
EGY  Egypt
SLV  El Salvador
ETH  Ethiopia
GAB  Gabon
GHA  Ghana
GTM  Guatemala
HND  Honduras
HKG  Hong Kong SAR 2
IND  India
IDN  Indonesia
IRN  Iran
ISR  Israel
JOR  Jordan
KEN  Kenya
KOR  (Republic of) Korea
KWT  Kuwait
MAC  Macau SAR
MWI  Malawi
MYS  Malaysia
MUS  Mauritius
MEX  Mexico
MNG  Mongolia
MAR  Morocco
MOZ  Mozambique
MMR  Myanmar
NPL  Nepal
NGA  Nigeria
OMN  Oman
PAK  Pakistan
PAN  Panama
PER  Peru
PHL  Philippines
QAT  Qatar
SEN  Senegal
SGP  Singapore
ZAF  South Africa
LKA  Sri Lanka
TWN  Taiwan Province of China
TZA  (United Republic of) Tanzania
THA  Thailand
TTO  Trinidad and Tobago
TUN  Tunisia
TUR  Turkey
UGA  Uganda
URY  Uruguay
VEN  Venezuela
YEM  Yemen

In Transition
Code  Country Name
ARM  Armenia
AZE  Azerbaijan
KGZ  Kyrgyzstan
MDA  (Republic of) Moldova
RUS  Russian Federation
UKR  Ukraine

1 Country coverage varies between regressions depending on data availability. Countries are classified according to the Statistical Annex issued by the United Nations in 2012. Available at: http://www.un.org/en/development/desa/policy/wesp/wesp_current/2012country_class.pdf

2 Special Administrative Region of China.

Table 6. Industry Coverage 3

ISIC Code Industry Description

311 Food products

313 Beverages

314 Tobacco

321 Textiles

322 Wearing apparel except footwear

323 Leather products

324 Footwear except rubber or plastic

331 Wood products except furniture

332 Furniture except metal

341 Paper and products

342 Printing and publishing

351 Industrial chemicals

352 Other chemicals

353 Petroleum refineries

354 Miscellaneous petroleum and coal products

355 Rubber products

356 Plastic products

361 Pottery china earthenware

362 Glass and products

369 Other non-metallic mineral products

371 Iron and steel

372 Non-ferrous metals

381 Fabricated metal products

382 Machinery except electrical

383 Machinery electric

384 Transport equipment

385 Professional and scientific equipment

390 Other manufactured products

3 This paper analyses the 28 manufacturing industries listed above. Source: Trade, Production and Protection 1976-2004 dataset by Nicita, A. and Olarreaga, M. (2006).

CONSEQUENCES OF INCOMPLETE EMPLOYMENT CONTRACTS

IN A LABORATORY EXPERIMENT

Mateusz Stalinski 1

B.Sc Economics

2nd year

University College London

Explore Econ Undergraduate Research Conference

March 2016

1 I am very grateful to Professor Antonio Cabrales for his invaluable guidance throughout the preparations as well as during the experiment. I would like to thank Dr Frank Witte, Dr Cloda Jenkins, and Dr Parama Chaudhury for giving feedback on my experimental design. I would also like to express my sincere gratitude to Noel Dobi (UCL), Ali Merali (UCL), Ciprian Tudor (UCL), and Aleksandra Goch (VILO Bydgoszcz) for helping me organise the experiment.

1 Introduction

This experiment explored the impact of incomplete employment contracts on wages. The paper relied on data obtained in a series of laboratory experiments held in two locations: University College London (36 participants) and the Upper School no. 6 in Bydgoszcz, Poland (168 participants). The study aimed to present an alternative model of the labour market with incomplete employment contracts, which provides numerical predictions for different input parameters. The behaviour of workers and firms is modelled separately using techniques of discrete dynamic programming. The experimental design allows for long-term employment contracts, with firms deciding whether to dismiss some of their workers at the end of each period. In the model, shirking is associated with a risk of being dismissed, which reflects real-world features of the labour market. Furthermore, the data collected in this experiment shed light on the determinants of firms' dismissal decisions, a topic which has not been thoroughly studied thus far.

Finally, the model can be used to display workers' best response functions for different scenarios (parameters in the model).

The inability of employers to directly control workers' effort levels has significant economic consequences. Firstly, firms are likely to pay a premium over perfectly competitive wages in order to motivate employees to work efficiently. Secondly, it is important to check whether employers' decision rules regarding dismissals vary with the degree of contract incompleteness. The research question was: to what extent are estimates computed by means of the proposed model consistent with observed empirical results?

The most well-known explanation of involuntary unemployment was proposed by Shapiro and Stiglitz (1984). According to their model, shirking can be prevented by increasing the expected cost of losing a job (by offering higher wages). Firms set wages so that workers never shirk (the non-shirking condition). The model predicts that the lower the chance of being caught shirking (q), the higher the wage required to satisfy the non-shirking condition. This hypothesis is supported by Altmann et al. (2013), who obtained estimates for two extreme values of q: 0 (treatment) and 1 (control). One of the main limitations of the Shapiro and Stiglitz framework is that workers have only two actions: work (effort = 1) or shirk (effort = 0). The experiment provides justification for the existence and optimality of the non-shirking condition even when more than two choices are available to employees.
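For reference, the non-shirking condition of the continuous-time Shapiro and Stiglitz (1984) model is usually stated as below. The notation is that paper's, restated here rather than derived in this one: e is the disutility of effort, w̄ the unemployment benefit, q the rate at which shirking is detected, b the exogenous separation rate, a the rate at which the unemployed find jobs, r the discount rate and u the unemployment rate (the second form uses the steady-state relation a + b = b/u). The required wage rises as q falls, which is the prediction discussed above.

$$\hat{w} \;=\; \bar{w} + e + \frac{e}{q}\,(r + a + b) \;=\; \bar{w} + e + \frac{e}{q}\left(r + \frac{b}{u}\right)$$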


2 Methodology

The 204 students were randomly assigned to roles within groups and received printed instructions (Figure 1). All participants were tested to ensure comprehension. Data collection (Figure 2) was facilitated by software programmed in oTree (Chen, Schonger and Wickens, 2016) and z-Tree (Fischbacher, 2007). Participants were permitted to view their results afterwards (Figure 3).

Students were entered into a lottery and winners received £50 in cash (Figure 4). The probability of winning the lottery depended on performance during the game. Choosing lottery tickets as the reward medium and linking the reward to the total profit obtained over all rounds of the experiment ensured monotonicity, salience, and dominance, the conditions required for a correct experimental design (Friedman, Cassar, and Selten, 2004). Each game consisted of 10 rounds.

Figure 1: All participants received written instructions and a piece of paper for making notes

Figure 2: z-Tree facilitates running sessions via the LAN

Figure 3: Students were very excited to see results of the group available on the experimenter’s computer

Figure 4: Deputy Headmaster of the Upper School no. 6 in Bydgoszcz congratulates the winner of the lottery

3 The experimental design

Each game required 14 participants: 10 sellers (workers) and 4 buyers (employers). Employment contracts could last for more than one period. At the end of each period, employers decided which workers to dismiss. In the treatment condition, quality choices were revealed to employers with probability q = 60%, which corresponds to incomplete employment contracts. In the control condition, all workers' decisions were shown to their employers (q = 100%). The timeline for each round is presented in the table below.

Table 1: Details of the experimental design (pictures of screens seen by participants provided)

FIRST ROUND

STEP 1: Buyers make 0, 1, or 2 public offers to sellers, specifying one integer price.

STEP 2: Each bid appears on the screen of every seller. The first participant who accepts a given offer becomes its creator's trading partner. Each seller can deliver products to only one buyer. There are always at least two sellers without a trading contract.

STEP 3: Each seller who has a trading partner chooses their effort (quality) level between 0 and 4, with precision 0.5.

STEP 4: Sellers' quality choices are revealed to buyers. For each trading partner there is a q% probability that their decision will be displayed. A buyer may terminate some of the contracts. All other sellers automatically remain the buyer's trading partners in the next period. It is not possible to terminate a contract with a seller whose effort level was not revealed.

STEP 5: Payoffs for a given period are calculated and displayed to participants.

ALL OTHER ROUNDS

STEPS 1-2: Buyers can make new offers if they have fewer than two trading partners. Buyers specify one price for all of their suppliers (old and new). New offers appear on the screen of every seller without a trading partner. The first participant who accepts a given offer becomes its creator's trading partner.

STEPS 3-5: These steps are exactly the same as in the first round.

The payoff function for sellers is defined as follows:

It is important to note that if a contract is terminated at the end of a round, the seller does not receive the price. Buyers' payoffs are calculated according to the formula below:

4 Discussion of results

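4.1 Termination of contracts

One aim of the study was to identify the factors behind decisions to terminate a contract. Clearly, effort (quality) levels play a crucial role in the process. However, more factors should be taken into account. The hypothesis is that employers, instead of basing their decisions solely on quality levels, use the effort-to-wage ratio (perratio), defined as the percentage of the price which is offered as effort:

$$\text{perratio} = 100 \times \frac{\text{quality}}{\text{price}}$$

To check this hypothesis, a probit model with a latent-variable specification was used:

$$\text{term}^* = \beta_0 + \beta_1\,\text{perratio} + \varepsilon, \qquad \varepsilon \sim N(0, 1), \qquad \text{term} = \begin{cases} 1 & \text{if } \text{term}^* > 0 \\ 0 & \text{otherwise} \end{cases}$$

so that $P(\text{term} = 1) = \Phi(\beta_0 + \beta_1\,\text{perratio})$. Two types of regression were estimated: quality is excluded from the first and added as a further regressor in the second. Treatment and control conditions were analysed separately (Table 2).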

Table 2: Probit regression excluding ‘quality’ (dependent variable: term)

Treatments: number of observations = 297, pseudo R2 = 0.4367

Variable    Coefficient   Standard Error   z       P>z
perratio    -.0989009     .0108186         -9.14   0.000
_cons       3.475724      .4231037         8.21    0.000

Controls: number of observations = 396, pseudo R2 = 0.2933

Variable    Coefficient   Standard Error   z       P>z
perratio    -.1156464     .0131668         -8.78   0.000
_cons       5.291947      .6606748         8.01    0.000

For both treatments and controls, the coefficients are statistically significant, with p-values lower than 0.0001. McFadden's pseudo R² values are high: values of 0.2-0.4 correspond to OLS R² values of 0.7-0.9 (Louviere, Hensher and Swait, 2000).
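McFadden's pseudo R² compares the log-likelihood of the fitted model with that of an intercept-only model: R² = 1 − ln L(model) / ln L(null). The helper below is a minimal sketch of that formula; the log-likelihood values in the example are hypothetical, chosen only so that the result equals the treatment figure in Table 2, and are not taken from the paper's estimation output.

```python
def mcfadden_r2(loglik_model: float, loglik_null: float) -> float:
    """McFadden's pseudo R-squared: 1 - lnL(model) / lnL(intercept only)."""
    if loglik_null >= 0:
        raise ValueError("the null log-likelihood of a binary model must be negative")
    return 1.0 - loglik_model / loglik_null


# Hypothetical log-likelihoods, not the paper's output.
print(round(mcfadden_r2(-112.66, -200.0), 4))  # 0.4367
```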

Coefficients yielded by the second type of regression are presented in Table 3.

Table 3: Probit regression including ‘quality’ (dependent variable: term)

Treatments: number of observations = 297, pseudo R2 = 0.5016

Variable    Coefficient   Standard Error   z       P>z
perratio    -.0688369     .0128159         -5.37   0.000
quality     -.7197774     .1476895         -4.87   0.000
_cons       4.322064      .5152203         8.39    0.000

Controls: number of observations = 396, pseudo R2 = 0.3653

Variable    Coefficient   Standard Error   z       P>z
perratio    -.0964069     .0138554         -6.96   0.000
quality     -.6997424     .1324244         -5.28   0.000
_cons       6.452646      .734403          8.79    0.000

In both the treatment and control groups, the coefficients for quality are significantly different from 0. This shows that part of the decision depended directly on the level of effort, regardless of the offered price. Yet, for the modelling part, it was assumed that termination probabilities depend solely on the effort-wage ratio, to keep the model tractable.

Figure 5 shows probabilities of contract termination estimated from the first set of regressions.

The graph shows predicted probabilities for different values of perratio. The firms' decision rule on contract termination varies with the degree of contract incompleteness. In the case of perfectly complete contracts, a higher quality/price ratio is necessary for a given probability of termination.


Figure 5: Probability of contract termination given quality/price ratio (first set of regressions)

The two flat parts of the curve indicate two different effects. For quality/price ratios lower than 0.33, employers receive negative profit (as their payoff is the difference between three times the quality and the price). Thus, it is not surprising that for ratios below 0.33, the probability of a contract's termination is close to 1. The linear segment of the curves ends close to a ratio of 0.5 in both cases.

Furthermore, a ratio of 0.5 occurred in 26% of all choices. This high frequency can be explained by the existence of a 50-50 social norm.
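The predicted termination probabilities plotted in Figure 5 follow from the probit form P(term = 1) = Φ(β0 + β1·perratio) and the Table 2 estimates. The sketch below assumes perratio is measured in percentage points (quality/price × 100), which is consistent with the size of the coefficients; it also computes the ratio at which the predicted probability equals 0.5, namely −β0/β1. Function names are illustrative.

```python
from statistics import NormalDist

# Coefficients from Table 2 (probit excluding 'quality'): (constant, perratio).
COEFS = {
    "treatment": (3.475724, -0.0989009),
    "control": (5.291947, -0.1156464),
}
PHI = NormalDist().cdf  # standard normal CDF


def p_terminate(perratio: float, condition: str) -> float:
    """Predicted probability that a revealed worker's contract is terminated."""
    b0, b1 = COEFS[condition]
    return PHI(b0 + b1 * perratio)


def fifty_percent_ratio(condition: str) -> float:
    """perratio at which the predicted termination probability is exactly 0.5."""
    b0, b1 = COEFS[condition]
    return -b0 / b1


for cond in COEFS:
    probs = [round(p_terminate(r, cond), 2) for r in (20, 40, 60)]
    print(cond, round(fifty_percent_ratio(cond), 1), probs)
```

Under these assumptions the 50% point is at a perratio of about 35 in treatments and about 46 in controls, in line with the statement that complete contracts require a higher quality/price ratio for the same termination probability.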

4.2 Modelling firms' and workers' behaviour

In each period, workers can be in one of two states: employed or unemployed. For a given individual, their state in period t is denoted by $s_t \in \{0, 1\}$. The value 1 corresponds to the state of employment, while the value 0 corresponds to the state of unemployment. In general, in each state they have a set of actions $A(s_t)$. Note that an unemployed worker has no effort decision to make, whereas an employed worker chooses an effort level between 0 and 4.

Elements of the set $A(s_t)$ are denoted by $x$.

Workers are assumed to maximise their lifetime expected utility. This is equivalent to choosing an optimal set of actions for each state in every period¹ (in this case, the optimal effort for $s_t = 1$).

For each period we define the value of being in state $s_t$:

$$V_t(s_t) = \max_{x \in A(s_t)} \Big\{ u_t(s_t, x) + \sum_{s' \in \{0, 1\}} P(s_{t+1} = s' \mid s_t, x)\, V_{t+1}(s') \Big\}$$

where $u_t$ is a within-state utility function. To perform the optimisation, it is necessary to find the transition probabilities (Table 4), i.e. the probabilities of moving from one state to another given the actions taken. To compute them, the estimates obtained for the probit regression (Table 2) are used. For simplicity, perratio is denoted by c.

Table 4: Transition probabilities for each possible pair of subsequent states

Transition (T) · Equation² · Description

1->1 · $P(T) = (1 - q) + q\,[1 - \Phi(\beta_0 + \beta_1 c)] = 1 - q\,\Phi(\beta_0 + \beta_1 c)$
Firstly, workers whose effort is not revealed cannot be dismissed; this occurs with probability 1 - q. Secondly, some workers whose effort level is revealed will be retained.

1->0 · $P(T) = q\,\Phi(\beta_0 + \beta_1 c)$
This follows from the first equation and the fact that the probabilities of events covering the sample space add up to 1.

0->1 · $P(T) = a$
The values of a used are the average probabilities of finding employment if unemployed in the samples: 0.5 in controls and 0.49 in treatments.

0->0 · $P(T) = 1 - a$
This follows from the third equation in the same way.

1 A formal proof of this fact can be found, for example, in Powell (2011), Section 3.10.

2 Φ stands for the standard normal cumulative distribution function.
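Table 4 can be written as a transition matrix for a single worker. This sketch follows the equations in the table: c is perratio (assumed to be in percentage points), b0 and b1 are the Table 2 probit coefficients for the relevant condition, q is the revelation probability and a the job-finding probability. The function name and dictionary layout are illustrative choices.

```python
from statistics import NormalDist

PHI = NormalDist().cdf


def transition_matrix(c: float, q: float, a: float, b0: float, b1: float):
    """Rows: current state (1 = employed, 0 = unemployed); values: P(next state)."""
    p_fire = q * PHI(b0 + b1 * c)  # effort revealed (prob. q) AND dismissed
    return {
        1: {1: 1.0 - p_fire, 0: p_fire},
        0: {1: a, 0: 1.0 - a},
    }


# Treatment condition: q = 0.6, a = 0.49, Table 2 treatment coefficients.
P = transition_matrix(c=40, q=0.6, a=0.49, b0=3.475724, b1=-0.0989009)
for state, row in P.items():
    print(state, {nxt: round(p, 3) for nxt, p in row.items()})
```

Because dismissal requires the effort to be revealed, the probability of staying employed can never fall below 1 - q, whatever the effort level.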

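Finally, by substituting the transition probabilities, the values of being in each state can be written out in full, where c depends on the chosen effort x and the wage w:

$$V_t(1) = \max_{x \in A(1)} \Big\{ u_t(1, x) + \big[1 - q\,\Phi(\beta_0 + \beta_1 c)\big]\, V_{t+1}(1) + q\,\Phi(\beta_0 + \beta_1 c)\, V_{t+1}(0) \Big\}$$

$$V_t(0) = u_t(0) + a\, V_{t+1}(1) + (1 - a)\, V_{t+1}(0)$$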

In order to find workers' optimal action(s) in period t, it is necessary to find all x that maximise $V_t(1)$. The optimal x (if between 0 and 4) has the form $x^*_t = f(\cdot)$, where f(·) depends positively on w, q and the employment rent, and negatively on a. The exact functional form is given in footnote 3. Workers exert more effort when:

• employment contracts are less incomplete (x* increases as q increases),
• the probability of finding new employment is lower, i.e. the duration of unemployment is longer (x* increases as a falls),
• the employment rent is higher (x* increases as the employment rent increases).

The original optimisation problem can be solved by backward induction. For the last round T there are no future periods, so $V_{T+1}(1) = V_{T+1}(0) = 0$; therefore, it is possible to perform the maximisation and find x*. The estimated best response function is presented in Figure 6. The optimal points for employers (who are constrained by the workers' best response curve) occur at the kinks: a wage of 6.3 for controls and 8.2 for treatments. Those values are used to calculate $V_T(1)$ and $V_T(0)$, which serve as $V_{t+1}(1)$ and $V_{t+1}(0)$ for the second-to-last period. Then, the process is repeated. Optimal wages for each period are displayed in Figure 7 (generated by the model).
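The final-round best response can be sketched numerically, but only under simplifying assumptions that are not the paper's model: (i) there is no continuation value after the last round; (ii) a seller whose contract is terminated does not receive the price, as stated in Section 3, so the expected payoff is w·(1 − q·Φ(β0 + β1·c)) minus the cost of effort; and (iii) the effort cost is a hypothetical quadratic 0.25·x², because the sellers' payoff function is not reproduced here. Effort is searched over the grid 0, 0.5, 1, ..., 4.

```python
from statistics import NormalDist

PHI = NormalDist().cdf
EFFORT_GRID = [i / 2 for i in range(9)]  # 0, 0.5, ..., 4 (precision 0.5)


def effort_cost(x: float) -> float:
    """Hypothetical convex cost of effort (an assumption, not from the paper)."""
    return 0.25 * x ** 2


def expected_payoff(x: float, w: float, q: float, b0: float, b1: float) -> float:
    c = 100 * x / w                           # perratio in percentage points
    p_fire = q * PHI(b0 + b1 * c)             # effort revealed and dismissed
    return w * (1 - p_fire) - effort_cost(x)  # price is lost if dismissed


def best_response(w: float, q: float, b0: float, b1: float) -> float:
    """Grid effort level that maximises the final-round expected payoff."""
    return max(EFFORT_GRID, key=lambda x: expected_payoff(x, w, q, b0, b1))


CONTROL = (5.291947, -0.1156464)    # Table 2, controls
TREATMENT = (3.475724, -0.0989009)  # Table 2, treatments
for w in (4, 6, 8, 10):
    print(w, best_response(w, 1.0, *CONTROL), best_response(w, 0.6, *TREATMENT))
```

With q = 0 the dismissal threat disappears and this sketch's best response collapses to zero effort, which is the logic behind the first comparative-statics result above. The exact curves in Figure 6 depend on the paper's actual payoff function.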

Figure 6: Best response curves generated by the model for the final round of the game (horizontal axis: wage; curves: Control, Treatment)

Figure 7: Optimal wages by period in treatments and controls (horizontal axis: period; curves: Control, Treatment)

According to the model, a higher degree of contract incompleteness is associated with a higher wage required to motivate workers to exert maximum effort (4.0). An informal explanation is as follows. In the treatment condition, employers face significant risk: workers might try to shirk, believing that they are likely to avoid the termination of their contract.

In fact, in four out of ten cases they would be right, since effort is revealed only with probability q = 0.6. Is there a way in which employers could make shirking too risky for employees? They can achieve this by offering sufficiently high wages. Furthermore, the model predicts a significant upward trend in wage rates for treatments and a much flatter increase for controls. This can be explained by the fact that termination of a contract is more costly to workers at the beginning of the game, so less is required to motivate them. As the game progresses, dismissals affect fewer remaining rounds, so a higher incentive is necessary. This effect is one of the most important reasons for using the dynamic programming approach to model workers' behaviour. Otherwise, we would be assuming that agents do not care about the future consequences of their decisions, which is certainly not the case in the labour market. The increase in wages is much weaker in controls, which might be explained by higher turnover, corresponding to a lower risk of long-term unemployment.

Predictions generated by means of the model can be compared to findings from the experimental data. The graph below (Figure 8) shows average wages for each round for both conditions.

Figure 8: Average wages set by employers in controls and treatments (bars have length of one standard deviation for each round; horizontal axis: round; series: Control, Treatment)

The average wage is higher in treatments than in controls in all rounds, and the difference becomes greater as the game progresses. Furthermore, in the treatment condition we observe a strong upward trend in wages (up to round 7), as predicted by the model. The subsequent fall might be explained by the fact that in rounds 6 and 7 the average wage was above the optimum, which led to lower profits for buyers, who adjusted prices downwards in the next two rounds. The upward trend then resumes in the final round.

The graph supports the research hypothesis that average wages will be higher in the treatment condition than in the control condition. Given the large number of observations (more than 350 per sample), it was justified to use a t-test with unequal variances to test whether the average wage in controls is statistically different from that in treatments. The average wage in treatments (7.42) is higher than the average wage in controls (5.90) by 1.52. The test statistic (-12.82) is large enough in absolute terms for the null hypothesis (the means are equal) to be rejected at the 1% significance level (p < 0.0001).
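The unequal-variance (Welch) t-test used here needs only each group's mean, standard deviation and sample size. The sketch computes the statistic and the Welch-Satterthwaite degrees of freedom. The standard deviations and sample sizes in the example are hypothetical, because they are not reported in the text, so the resulting statistic is not the paper's -12.82.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Means from the text (controls first, as in the reported statistic);
# the SDs (1.6) and sample sizes (400) are hypothetical.
t, df = welch_t(5.90, 1.6, 400, 7.42, 1.6, 400)
print(f"t = {t:.2f}, df = {df:.1f}")
```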

4.3 Concluding remarks

Incomplete employment contracts are beneficial for workers who are employed: they receive a significant wage premium over the market equilibrium. At the same time, firms' profits are much lower in treatments than in controls. It is also important to note that in the real labour market not all workers benefit from incomplete contracts. The number of people unemployed is higher (due to the higher wage premium) and labour mobility is lower, so long-term unemployment is more likely under incomplete employment contracts. Therefore, it can be concluded that incomplete employment contracts are a major source of income inequality. They might negatively affect long-run economic growth by depressing investment (due to lower firm profits) and increasing the number of long-term unemployed. As a result, it is justified to consider policy solutions aiming to offset the negative consequences of incomplete employment contracts.

Word count: 1998 (excluding figures, tables, and references)


5 References

Akerlof, G. (1982). Labor Contracts as Partial Gift Exchange. The Quarterly Journal of Economics, 97(4), p.543.

Altmann, S., Falk, A., Grunewald, A. and Huffman, D. (2013). Contractual Incompleteness, Unemployment, and Labour Market Segmentation. The Review of Economic Studies, 81(1).

Chen, D., Schonger, M. and Wickens, C. (2016). oTree - An open-source platform for laboratory, online, and field experiments. Journal of Behavioral and Experimental Finance, 9, pp.88-97.

Fehr, E. and Falk, A. (1999). Wage Rigidity in a Competitive Incomplete Contract Market. Journal of Political Economy, 107(1), p.106.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2), pp.171-178.

Friedman, D., Cassar, A. and Selten, R. (2004). Economics lab. London: Routledge.

Friedman, D. and Sunder, S. (1994). Experimental methods. Cambridge [England]: Cambridge University Press.

Gneezy, U. (2013). Does high wage lead to high profits? An experimental study of reciprocity using real effort. The University of Chicago GSB, Chicago.

Louviere, J., Hensher, D. and Swait, J. (2000). Stated choice methods. Cambridge: Cambridge University Press.

Powell, W. (2011). Approximate dynamic programming. Hoboken, N.J.: Wiley.

Shapiro, C. and Stiglitz, J. E. (1984). Equilibrium Unemployment as a Worker Discipline Device. American Economic Review, 74(3), pp.433-444.

Yellen, J. (1984). Efficiency Wage Models of Unemployment. American Economic Review, 74(2), pp.200-205.

OPEN SOURCE: IN SEARCH OF CURES FOR NEGLECTED TROPICAL DISEASES

Sukhi Wei 1

B.Sc Economics With A Year Abroad

2nd year

University College London

Explore Econ Undergraduate Research Conference

March 2016


1 Special thanks to Dr Parama Chaudhury and Dr Frank Witte.


Behind every new drug is an arduous journey. The estimated cost of research and development (R&D) varies, but could amount to $2 billion across 10-15 years (Adams & Bratner, 2006). While the upfront cost is high, the marginal cost of producing medicine is low. Thus, a pharmaceutical company needs patent protection, which grants monopoly power that enables a markup to recover its expenditure. Once the patent expires (typically about 10 years after release), generic versions of a drug flood the market, eroding its profitability. The price of the world's second best-selling drug, the blood thinner Plavix, fell from $162 to $10 per month when its patent expired in 2012 (Strawbridge, 2012). Another type of product faces a similar cost structure: software. Depending on its complexity, software development can be an expensive endeavour. Developing the Windows Vista operating system cost $6 billion over the 5 years before its release, but reproducing it costs virtually nothing. Hence, it is unsurprising that Microsoft sold each copy of Windows for $239 and took painstaking steps to prevent piracy (Protalinski, 2009).

While the inflated price of Windows caused outrage among users, expensive drugs pose a more alarming concern. Pharmaceutical companies direct research towards the most profitable drugs, namely those targeting first-world diseases. This leaves a void in research on illnesses prevalent in developing regions of Africa, South Asia and Latin America, where people cannot afford the treatments. The World Health Organisation identified 17 diseases as Neglected Tropical Diseases (NTDs), which, despite affecting 1 billion people, fail to attract adequate funding for research (WHO, 2016).

Given the similarities between the industries, a solution for NTDs may exist in the IT world.

In the mid-1990s, the dominant Microsoft faced an unexpected threat. The Linux operating system created a sensational buzz because it was available for free. If developed via conventional proprietary means, Fedora 9, the Linux counterpart to Windows Vista, would have cost approximately $10 billion (McPherson, Proffitt, & Hale-Evans, 2008). However, as a product of open source development, it came without a price tag.

The open source process resembles a bazaar of ideas, in which a culture of sharing prevails. A new version of Linux is released roughly every 6 months, and independent hobbyists and programmers work collaboratively to fix the bugs present. The ‘open’ element is exemplified by:

• open participation – anyone can join
• open knowledge – all code is made public
• open distribution – all code can be freely reused

In contrast, traditional software is built like a cathedral: it is carefully crafted by an isolated team of programmers and only opens its doors when all visible bugs are fixed, much like Microsoft Windows, which is released every few years.

Figure 1 compares the two approaches. While both begin with a core group of programmers defining the direction of a project, they soon diverge, as open source software is made public as a ‘kernel’ (a small segment or crude version of the code). After a peer review of the kernel, the process enters an iterative cycle of testing and bug-fixing, where anyone may contribute new code to improve upon the original. This process is repeated in the ‘parallel debugging’ stage, after new code is incorporated by the core team in a ‘development release’, but before the final ‘production release’ (Roets, Minnaar, & Wright, 2007).

Figure 1 Traditional vs Open Source development models

Known for its efficiency and cost-effectiveness, the open source model could contain the R&D costs of drug research, thus keeping new drugs cheap. It could do so by tackling all four contributors to their sky-high price: outlay, risk, duration and monopoly power.

The average annual wage of a pharmaceutical employee is over $100,000 (PhRMA, 2013). The cost of labour undoubtedly adds up to a sizeable sum given the duration of development and the large number of employees involved in developing a drug. The open source model, which relies almost entirely on volunteers' donation of time and effort, essentially reduces wages to 0. Further cost savings are achieved by using free online platforms and databases, which minimise money spent on private servers and physical facilities. It may be hard to reconcile the image of an IT enthusiast behind his computer with that of a pharmacist in a sterile laboratory.

Nevertheless, just as programmers find bugs to fix, researchers find lead molecules that interact with disease-related proteins. Both involve ‘finding and fixing tiny problems hidden in an ocean of code’ (Maurer, Rai, Sali, 2004). A number of open research initiatives are already underway, such as the Tropical Disease Initiative, Open Source Drug Discovery and Collaborative Drug Discovery. Adopting the ethos of open source, research is split into bite-size tasks delegated to many contributors. Results are shared online, enabling speedy peer review.

While open research is largely limited to the Discovery and Pre-clinical stages of drug development, the influence of open source does not end there. Several free software tools facilitating the R&D process have emerged, such as the clinical trial data management software OpenClinica. Historically, clinical trials have involved the daunting tasks of capturing, cleaning, and extracting data, which translate into large volumes of paperwork.

Despite the advent of commercial Electronic Data Capture (EDC) software that digitises this tedious process, many poorly-funded research institutions fail to shift to electronic records due to its exorbitant price. OpenClinica is open source EDC software that lowers costs by approximately 47% relative to paper-based studies (Huger, 2013). With such a significant reduction, more parties are empowered to conduct NTD trials that would otherwise be beyond their financial capacity.

As illustrated in Figure 2, a combination of the open source concept and open source software can be incorporated into drug development to reduce outlay. Additionally, open research drives down costs in the early stages, freeing up funds for the phases where they are most needed, such as product development. This would reverse the recent trend of funds shifting towards basic research, expediting clinical trials of existing, untested drugs (Moran, et al., 2011). Nevertheless, unlike software development, wet experiments inevitably require physical equipment and facilities.

Open source research projects can form partnerships with public research institutions for these stages. Aided by rapid technological progress, drug researchers are increasingly using computers as substitutes for lab functions, from virtually screening thousands of compounds for suitable drug candidates to modelling the interactions between chemicals and disease-related proteins (Carlson, 2012). For example, a protein of the SARS virus, which caused nearly 800 deaths in 2003, was identified by a virtual scan of the proteins encoded by the SARS genome (von Grotthuss M, 2003). As such, open source has become even more feasible in recent years.

Figure 2 Open source in phases of drug development (labels: Open research, Open source software)

Aside from the tangible expenditure in drug development, a pharmaceutical company takes into account the opportunity cost of capital when pricing a new drug. Thus, the duration and risks of development contribute to unaffordability, since investors are attracted only if expected returns exceed the opportunity cost. Given the dismal success rate of the clinical trial phase (only 12% in some studies), profits made from one successful drug must be large enough to cover the cost of failures as well as the reward and time value of money for investors. The average risk-adjusted opportunity cost of capital across the same 5 studies mentioned above is $534 million per drug, a significant sum that pharmaceutical companies incorporate into the price of a new drug (Morgan, Lexchin, Cunningham, Greyson, & Grootendorst, 2011). An open source research project, however, can be viewed as a huge risk-sharing platform with many stakeholders. In this case, failure mainly costs lost time, which volunteers have willingly donated to the cause. Thus, there is no obligation for the final product to compensate for the risks.
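The reasoning about failure rates and the opportunity cost of capital can be made concrete with a small calculation. The Python sketch below is a deliberate simplification, not the method used by Morgan et al. (2011): it assumes every candidate drug costs the same, that all out-of-pocket spending happens at the start of development, and that the inputs ($100 million per candidate, a 10% annual required return, 10 years) are hypothetical placeholders. Only the 12% success rate is taken from the text, and the function names are illustrative.

```python
def cost_per_approved_drug(cost_per_candidate: float, success_rate: float) -> float:
    """Out-of-pocket cost per approved drug: each success must also pay for the failures."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_candidate / success_rate


def capitalised_cost(cost: float, annual_rate: float, years: float) -> float:
    """Value of `cost` after compounding at the investors' required return for `years`.

    Simplifying assumption: the whole amount is spent at the start of development.
    """
    return cost * (1 + annual_rate) ** years


def opportunity_cost(cost_per_candidate: float, success_rate: float,
                     annual_rate: float, years: float) -> float:
    """Extra amount investors require on top of out-of-pocket spending."""
    out_of_pocket = cost_per_approved_drug(cost_per_candidate, success_rate)
    return capitalised_cost(out_of_pocket, annual_rate, years) - out_of_pocket


if __name__ == "__main__":
    # Hypothetical inputs in $ millions; only the 12% success rate comes from the text.
    per_candidate, p_success, rate, years = 100.0, 0.12, 0.10, 10
    out_of_pocket = cost_per_approved_drug(per_candidate, p_success)
    extra = opportunity_cost(per_candidate, p_success, rate, years)
    print(f"Out-of-pocket cost per approved drug: ${out_of_pocket:,.0f}m")
    print(f"Opportunity cost of capital:          ${extra:,.0f}m")
    # If no investor return is required (time is donated, rate = 0),
    # the opportunity-cost term disappears entirely.
    zero = opportunity_cost(per_candidate, p_success, 0.0, years)
    print(f"Opportunity cost at a 0% required return: ${zero:,.0f}m")
```

With these placeholder inputs the sketch reports about $833m out of pocket and about $1,328m of opportunity cost per approved drug; the point is the structure (dividing by the success rate, then compounding), not the specific figures. Setting the required return to zero mirrors the essay's argument that volunteer-driven open source projects carry no obligation to compensate investors.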

Moreover, open source may reduce the chance of failure. In the software industry, a famous saying dubbed ‘Linus’s Law’ states that “given enough eyeballs, all bugs are shallow.”

Just as software undergoes rapid prototyping and rapid failure, experimental results are released online quickly (rather than through slower journal publication), so the community can identify dead ends and change the direction of a project promptly. Moreover, ‘the averaged opinion of a mass of equally expert (or equally ignorant) observers is quite a bit more reliable a predictor than that of a single randomly-chosen one of the observers’ (Raymond, 2001). With many participants across the globe, viable solutions are more likely to emerge when a research project encounters roadblocks.
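Both ideas in this passage, Linus’s Law and the reliability of averaged opinions, can be illustrated with a toy model. The Python sketch below assumes, purely for illustration, that each reviewer independently spots a given flaw with the same probability, and that each observer’s estimate equals the true value plus independent, unbiased noise. Real research communities violate both assumptions to some degree, so the numbers are indicative only; the function names and parameter values are hypothetical.

```python
import random
import statistics


def prob_flaw_found(p_single: float, n_reviewers: int) -> float:
    """Probability that at least one of n independent reviewers spots a flaw."""
    return 1 - (1 - p_single) ** n_reviewers


def mean_squared_error(truth: float, noise_sd: float, n_observers: int,
                       trials: int, rng: random.Random) -> float:
    """Monte Carlo MSE of the average of n noisy, unbiased estimates of `truth`."""
    errors = []
    for _ in range(trials):
        estimates = [truth + rng.gauss(0, noise_sd) for _ in range(n_observers)]
        errors.append((statistics.fmean(estimates) - truth) ** 2)
    return statistics.fmean(errors)


if __name__ == "__main__":
    # Linus's Law: a flaw that each reviewer catches only 5% of the time
    for n in (1, 10, 50, 100):
        print(f"{n:>3} reviewers -> P(flaw found) = {prob_flaw_found(0.05, n):.2f}")

    # Averaged opinion of many observers vs a single randomly chosen observer
    rng = random.Random(42)  # fixed seed so the run is reproducible
    single = mean_squared_error(truth=1.0, noise_sd=0.5, n_observers=1, trials=2000, rng=rng)
    crowd = mean_squared_error(truth=1.0, noise_sd=0.5, n_observers=100, trials=2000, rng=rng)
    print(f"MSE of one observer:             {single:.4f}")
    print(f"MSE of the average of 100 people: {crowd:.4f}")
```

Under these assumptions, 100 reviewers who each catch a flaw only 5% of the time find it with a probability of about 0.99, and the error of the averaged estimate shrinks roughly in proportion to 1/n, because the variance of a mean of n independent draws is the single-draw variance divided by n.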

Although open source could increase the success rate, there is no evidence on whether it quickens the pace of research. Unlike private pharmaceutical research, the open source approach imposes an opportunity cost on volunteers’ time. Following the earlier argument about risk, however, volunteers do not expect monetary returns for their donated time; they are rewarded with immediate recognition and the satisfaction of attaining a goal. The opportunity cost of funds could also be overlooked, since funds spent on conventional research methods have not proven effective in delivering treatments for NTDs.

To sum up, R&D costs are minimised by open research in the discovery and pre-clinical phases, by open source software such as OpenClinica, and by disregarding the opportunity cost, as shown in Figure 3.

Figure 3 How open source reduces R&D cost (total: $1064; segments: Profits forgone, Open source software, Open research; shares shown: 50%, 33%, 17%)

Pharmaceutical companies often cite high R&D costs to justify the high prices of new drugs, but profit remains the driving reason. As Table 1 shows, spending on sales and marketing often exceeds spending on R&D, sometimes by a factor of two, and the top drug companies make billions in profit each year (Anderson, 2014). In an open source project, by contrast, the composition of a new drug is made public. Once the drug is approved, any generic manufacturer can freely obtain the information and produce it. The resulting competition will drive prices down close to marginal cost, eliminating profits. Without the need to please investors, reward management and pay employees, open source brings affordable drugs for NTDs within reach.

Company                    Total revenue ($bn)  R&D spend ($bn)  Sales and marketing spend ($bn)  Profit ($bn)  Profit margin (%)
Johnson & Johnson (US)     71.3                 8.2              17.5                             13.8          19
Novartis (Swiss)           58.8                 9.9              14.6                             9.2           16
Pfizer (US)                51.6                 6.6              11.4                             22.0          43
Hoffmann-La Roche (Swiss)  50.3                 9.3              9.0                              12.0          24
Sanofi (France)            44.4                 6.3              9.1                              8.5           11
Merck (US)                 44.0                 7.5              9.5                              4.4           10
GSK (UK)                   41.4                 5.3              9.9                              8.5           21
AstraZeneca (UK)           25.7                 4.3              7.3                              2.6           10
Eli Lilly (US)             23.1                 5.5              5.7                              4.7           20
AbbVie (US)                18.8                 2.9              4.3                              4.1           22

Table 1 Profile of top pharmaceutical companies in 2014, BBC News
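The claim that sales and marketing spending often exceeds R&D spending can be checked directly against Table 1. The Python sketch below copies the R&D and sales-and-marketing figures from the table (in $bn) and computes their ratio for each company; the dictionary name and helper function are illustrative and not taken from any cited source.

```python
# (R&D spend, sales-and-marketing spend) in $bn, copied from Table 1 (BBC News, 2014)
SPEND = {
    "Johnson & Johnson": (8.2, 17.5),
    "Novartis": (9.9, 14.6),
    "Pfizer": (6.6, 11.4),
    "Hoffmann-La Roche": (9.3, 9.0),
    "Sanofi": (6.3, 9.1),
    "Merck": (7.5, 9.5),
    "GSK": (5.3, 9.9),
    "AstraZeneca": (4.3, 7.3),
    "Eli Lilly": (5.5, 5.7),
    "AbbVie": (2.9, 4.3),
}


def marketing_to_rd_ratio(spend: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return sales-and-marketing spend divided by R&D spend for each company."""
    return {company: sm / rd for company, (rd, sm) in spend.items()}


if __name__ == "__main__":
    ratios = marketing_to_rd_ratio(SPEND)
    # List companies from the highest to the lowest marketing-to-R&D ratio
    for company, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
        print(f"{company:<18} {ratio:.2f}x")
    exceeding = [c for c, r in ratios.items() if r > 1]
    print(f"{len(exceeding)} of {len(ratios)} companies spend more on sales and marketing than on R&D")
```

Run on the table’s figures, this reports that nine of the ten companies spend more on sales and marketing than on R&D (Hoffmann-La Roche is the exception), with Johnson & Johnson’s ratio of about 2.1 matching the “sometimes twice as much” observation.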

As of today, open source has yet to deliver a drug to the market. Its potential has not been fully unleashed, as projects utilise either the concept of open research or free facilitating software, but not both. Merging these two elements can reduce cost more substantially, providing an impactful push along the drug pipeline. Furthermore, some companies relying on open source have made a profit with innovative business models: Red Hat Inc, the largest commercial distributor of Linux, forecast a profit of $1.80 per share on its $2 billion revenue last year (Reuters, 2015). With suitable licence schemes, open source drug development could be self-sustainable in the long run. Like a chef who shares his recipe but charges a franchise fee if it is used by restaurants, open source initiatives could license their drugs to generic manufacturers on profit-sharing terms, with a small percentage of profit donated back to the initiatives to fund further research.

Because manufacturers must obtain approval from health and safety regulators before production, a complete list of producing firms already exists, against which compliance with the licence terms can be enforced. Even if this cannot fund future research completely, it further reduces the burden of NTDs on the public sector and NGOs.

The main concerns with open source drug development are incentives, safety and availability of technology. Incentives are self-evident in the thriving open source software community, and economists have also studied this topic (Lerner & Tirole, 2002). The safety of a drug developed with help from less qualified volunteers may be questioned, but experienced project managers, rising computational abilities and rigorous peer review should mitigate the risks. Lastly, some regions may lack decent internet connectivity and the lab facilities needed to conduct open source research. Nevertheless, open source is not bounded by national borders. While this approach is more likely to thrive in technologically advanced countries such as India, dispersed institutions in other countries can collaborate online, simultaneously raising local research standards. Governments may also be more likely to fund such projects if they appear promising.

Above all other strengths, the true power of open source lies in the fact that programmers want to scratch a ‘personal itch’. Free software inevitably comes with bugs, which users are eager to fix for their own benefit. Similarly, in drug research, open source enables local institutions without large budgets to tackle a local disease themselves, instead of hopelessly waiting for help from profiteering MNCs that may never come.


(2000 words)


BIBLIOGRAPHY

Levine, S. S., & Prietula, M. J. (2014). Open Collaboration for Innovation: Principles and Performance. Collective Intelligence 2014 (pp. 1-4). Massachusetts: MIT Sloan School of Management.

Raymond, E. S. (2001). The Cathedral and the Bazaar. O’Reilly Media, Inc.

Strawbridge, H. (2012, May 21). Wallets rejoice as Plavix goes generic. Retrieved March 9, 2016, from Harvard Health Publications: http://www.health.harvard.edu/blog/wallets-rejoice-as-plavix-goes-generic-201205214727

WHO. (2016). Neglected tropical diseases. Retrieved May 9, 2016, from World Health Organisation: http://www.who.int/neglected_diseases/diseases/en/

Protalinski, E. (2009, June 25). Windows 7 pricing announced: cheaper than Vista. Retrieved March 9, 2016, from Ars Technica: http://arstechnica.com/information-technology/2009/06/windows-7-pricing-announced-cheaper-than-vista/

McPherson, A., Proffitt, B., & Hale-Evans, R. (2008, October). Estimating the Total Development Cost of a Linux Distribution. Retrieved March 9, 2016, from The Linux Foundation: http://www.linuxfoundation.org/sites/main/files/publications/estimatinglinux.html


PhRMA. (2013). 2013 Biopharmaceutical Research Industry Profile. Washington DC: Pharmaceutical Research and Manufacturers of America.

Carlson, E. (2012, August 29). 5 Ways Computers Boost Drug Discovery. Retrieved March 9, 2016, from Live Science: http://www.livescience.com/22786-computers-drug-designnigms.html

von Grotthuss, M., Wyrwicz, L. S., & Rychlewski, L. (2003). mRNA cap-1 methyltransferase in the SARS genome. Cell, 113(6), 701-702.

WHO. (2003, December 31). Summary of probable SARS cases with onset of illness from 1 November 2002 to 31 July 2003. Retrieved March 9, 2016, from World Health Organisation: http://www.who.int/csr/sars/country/table2004_04_21/en/