OceanStore: An Architecture for Global-Scale Persistent Storage John Kubiatowicz, et al ASPLOS 2000

advertisement

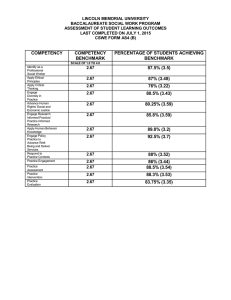

OceanStore: An Architecture for Global-Scale Persistent Storage John Kubiatowicz, et al ASPLOS 2000 OceanStore 1010 users, each with 10,000 files Global scale information storage. Mobile access to information in a uniform and highly available way. Servers are untrusted. Caches data anywhere, anytime. Monitors usage patterns. OceanStore [Rhea et al. 2003] Main Goals Untrusted infrastructure Nomadic data Example applications Groupware and PIM Email Digital libraries Scientific data repository Personal information management tools: calendars, emails, contact lists Example applications Groupware and PIM Email Digital libraries Scientific data repository Challenges: scaling, consistency, migration, network failures Storage Organization OceanStore data object ~= file Ordered sequence of read-only versions Every version of every object kept forever Can be used as backup An object contains metadata, data, and references to previous versions Storage Organization A stream of objects identified by AGUID Active globally-unique identifier Cryptographically-secure hash of an application-specific name and the owner’s public key Prevents namespace collisions Storage Organization Each version of data object stored in a Btree like data structure Each block has a BGUID Cryptographically-secure hash of the block content Each version has a VGUID Two versions may share blocks Storage Organization [Rhea et al. 2003] Access Control Restricting readers: Symmetric encryption key distributed to allowed readers. Restricting writers: ACL. Signed writes. ACL for object chosen with signed certificate. Location and Routing Attenuated Bloom Filters Find 11010 Location and Routing Plaxton-like trees Updating data All data is encrypted. A set of predicates is evaluated in order. The actions of the earliest true predicate are applied. Update is logged if it commits or aborts. Predicates: compare-version, compare-block, compare-size, search Actions replace-block, insert-block, delete-block, append Application-Specific Consistency An update is the operation of adding a new version to the head of a version stream Updates are applied atomically Represented as an array of potential actions Each guarded by a predicate Application-Specific Consistency Example actions Replacing some bytes Appending new data to an object Truncating an object Example predicates Check for the latest version number Compare bytes Application-Specific Consistency To implement ACID semantic Check for readers If none, update Append to a mailbox No checking No explicit locks or leases Application-Specific Consistency Predicate for reads Examples Can’t read something older than 30 seconds Only can read data from a specific time frame Replication and Consistency A data object is a sequence of read-only versions, consisting of read-only blocks, named by BGUIDs No issues for replication The mapping from AGUID to the latest VGUID may change Use primary-copy replication Serializing updates A small primary tier of replicas run a Byzantine agreement protocol. A secondary tier of replicas optimistically propagate the update using an epidemic protocol. Ordering from primary tier is multicasted to secondary replicas. The Full Update Path Deep Archival Storage Data is fragmented. Each fragment is an object. Erasure coding is used to increase reliability. Introspection computation optimization Uses: •Cluster recognition •Replica management •Other uses observation Software Architecture Java atop the Staged Event Driven Architecture (SEDA) Each subsystem is implemented as a stage With each own state and thread pool Stages communicate through events 50,000 semicolons by five graduate students and many undergrad interns Software Architecture Language Choice Java: speed of development Strongly typed Garbage collected Reduced debugging time Support for events Easy to port multithreaded code in Java Ported to Windows 2000 in one week Language Choice Problems with Java: Unpredictability introduced by garbage collection Every thread in the system is halted while the garbage collector runs Any on-going process stalls for ~100 milliseconds May add several seconds to requests travel cross machines Experimental Setup Two test beds Local cluster of 42 machines at Berkeley Each with 2 1.0 GHz Pentium III 1.5GB PC133 SDRAM 2 36GB hard drives, RAID 0 Gigabit Ethernet adaptor Linux 2.4.18 SMP Experimental Setup PlanetLab, ~100 nodes across ~40 sites 1.2 GHz Pentium III, 1GB RAM ~1000 virtual nodes Storage Overhead For 32 choose 16 erasure encoding 2.7x for data > 8KB For 64 choose 16 erasure encoding 4.8x for data > 8KB The Latency Benchmark A single client submits updates of various sizes to a four-node inner ring Metric: Time from before the request is signed to the signature over the result is checked Update 40 MB of data over 1000 updates, with 100ms between updates The Latency Benchmark Latency Breakdown Update Latency (ms) Key Size 512b 1024b Update 5% Size Time Median Time 95% Time Phase Time (ms) Check 0.3 Serialize 6.1 4kB 39 40 41 2MB 1037 1086 1348 Apply 1.5 4kB 98 99 100 Archive 4.5 2MB 1098 1150 1448 Sign 77.8 The Throughput Microbenchmark A number of clients submit updates of various sizes to disjoint objects, to a fournode inner ring The clients Create their objects Synchronize themselves Update the object as many time as possible for 100 seconds The Throughput Microbenchmark Archive Retrieval Performance Populate the archive by submitting updates of various sizes to a four-node inner ring Delete all copies of the data in its reconstructed form A single client submits reads Archive Retrieval Performance Throughput: 1.19 MB/s (Planetlab) 2.59 MB/s (local cluster) Latency ~30-70 milliseconds The Stream Benchmark Ran 500 virtual nodes on PlanetLab Inner Ring in SF Bay Area Replicas clustered in 7 largest P-Lab sites Streams updates to all replicas One writer - content creator – repeatedly appends to data object Others read new versions as they arrive Measure network resource consumption The Stream Benchmark The Tag Benchmark Measures the latency of token passing OceanStore 2.2 times slower than TCP/IP The Andrew Benchmark File system benchmark 4.6x than NFS in read-intensive phases 7.3x slower in write-intensive phases Bloom Filters Compact data structures for a probabilistic representation of a set Appropriate to answer membership queries [Koloniari and Pitoura] Bloom Filters (cont’d) Bit vector v Element a 1 H1(a) = P1 H2(a) = P2 1 H3(a) = P3 1 H4(a) = P4 1 m bits Query for b: check the bits at positions H1(b), H2(b), ..., H4(b). back Pair-Wise Reconciliation Site A V0 : (x0) write x V1 : (x1) Site B V0: (x0) write x V2: (x2) Site C V0 : (x0) write x V3 : (x3) V4 : (x4) V5: (x5) [Kang et al. 2003] Hash History Reconciliation H0 H0 Site A V0 V1 H1 Site B V2 back Site C H0 H0 V3 H3 H2 V4 H0 H1 V5 H0 H2 H4 H1 H4 Hi = hash (Vi) H2 H3 H5 Erasure Codes n Message Encoding Algorithm cn Encoding Transmission n Received Decoding Algorithm n Message back [Mitzenmacher]