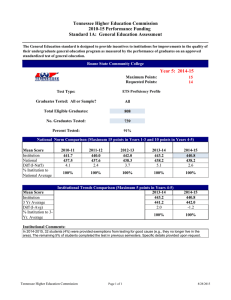

Quality Assurance Funding: 2015-20 Cycle Standards

Tennessee Higher Education Commission

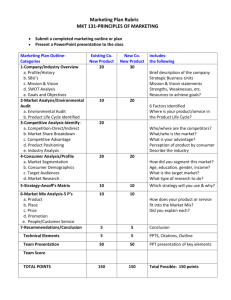
