Confirmation Discomforted

John Watling

The inconsistency of the principle of induction

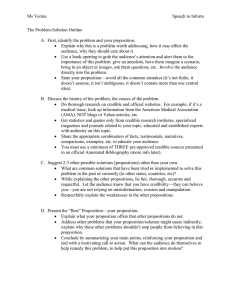

From a universal hypothesis a man deduces a body of propositions which he can put to an experimental test; he makes the experiments and discovers the propositions to be true. Do his experiments provide evidence in favour of his hypothesis? The principle of induction states that they do, but the principle is inconsistent. One demonstration of the inconsistency can be found in Nelson Goodman’s book Fact, Fiction and Forecast, another in Karl Popper’s article “The Philosophy of Science: a Personal Report” reprinted in his book Conjectures and Refutations. It can be shown quite simply. Evidence in favour of a proposition is equally evidence against its negation. Evidence against a proposition is evidence—at least as strong—against anything of which it is a logical consequence: just as evidence in favour of a proposition is evidence—at least as strong—in favour of any of its logical consequences. Therefore evidence in favour of a proposition is evidence—at least as strong—against each of its contraries, since each of its contraries has its negation as logical consequence. Now suppose the man’s experiments concern the relationship between two varying circumstances, the different values of one represented in the figure by different lengths in the direction OX, those of the other by lengths in the direction OY. Suppose he makes determinations of the value of OY for four values of OX, giving him the four points a, b, c, d. Suppose, lastly, that his hypothesis concerning the relationship is represented by the straight line L. According to the principle of induction the fact that the points lie on the line L is evidence in favour of the hypothesis represented by L. But other lines, representing hypotheses which are contraries of L, comprise these same points—which represents the fact that they too have the four experimental results as consequences.
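The geometrical point can be checked numerically. The sketch below is illustrative only: the four values of OX (0, 1, 2, 3), the line y = x standing in for L, and the rival curve y = x + sin(πx) are all assumptions made for the example and are not taken from the figure.

```python
import math

# Hypothetical stand-in for the hypothesis L: a straight line.
def line_l(x):
    return x

# Hypothetical contrary hypothesis: the same line plus a sine term
# that vanishes at every whole-number value of x.
def rival_curve(x):
    return x + math.sin(math.pi * x)

# Assumed experimental determinations: four points lying on the line.
observations = [(x, line_l(x)) for x in (0, 1, 2, 3)]

def fits(hypothesis, points, tol=1e-9):
    """True if the hypothesis has every observed point as a consequence."""
    return all(math.isclose(hypothesis(x), y, abs_tol=tol) for x, y in points)

# Both hypotheses pass through all four observed points.
both_fit = fits(line_l, observations) and fits(rival_curve, observations)

# Between the observed points the two hypotheses contradict one another.
disagree_elsewhere = not math.isclose(line_l(0.5), rival_curve(0.5), abs_tol=1e-9)

print(both_fit, disagree_elsewhere)
```

Any number of such rival curves could be written down by changing the added term, so long as it vanishes at the four observed values.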
According to the principle of induction these other hypotheses—for example M, represented by a sine curve—are equally supported by the experiments. Therefore whatever evidence the experiments provide in favour of his hypothesis, they provide evidence—at least as strong—against it. But the conclusion that the experiments are evidence for his hypothesis is inconsistent with the conclusion that they are evidence at least as strong against it: the principle of induction has led us into inconsistency. A less ambitious principle would avoid inconsistency. The hypotheses which an experiment does not refute divide into two sorts: those put in jeopardy by the experiment—that is, those which would have been refuted had the experiment turned out differently—and those for which the experiment provided no test. The less ambitious principle would be: an experiment provides favourable evidence for the disjunction of all the hypotheses which it puts in jeopardy but does not refute. But this principle is of no use; for no matter how strict and extensive the experimental test, an infinite number of hypotheses will survive it, only a few of which can even be guessed at. The disjunction for which the experiment provides favourable evidence cannot be formulated. Assume in addition that what is favourable evidence for a disjunction is favourable evidence for each of the disjuncts and the inconsistency is again present. The inconsistency of this principle raises two questions: first, how is it that a patently inconsistent principle should have stood for so long in so important a place, providing for the experimental basis of science? Second, can the principle be replaced by one that is consistent?

The inconsistency of the classical interpretation of probability

There is in the theory of probability—by which I mean the analysis or interpretation of probability propositions, not the mathematical calculus—an inconsistency almost as striking as that of the principle of induction.
The classical interpretation defines the probability of one event on another. Suppose the latter event divides into a number of alternatives, that in some of these the former event happens, in the remainder it does not; then the probability of the former event on the latter is the proportion of the alternatives for the latter which yield the former. The greatest probability on this definition is unity, when all the alternatives yield the former event; the least is zero, when none do; the probabilities between are rational fractions between zero and unity. For example, what is the probability of a dice falling three spot side uppermost on the event that it falls with one of its six sides uppermost? On the classical definition this probability is the number of alternatives for falling with one of its sides uppermost, that is six, dividing the number of these alternatives in which it falls three spot side uppermost, that is one: the probability is 1/6. Now consider the following problem: we are following a man along a road and reach a place where the road divides into three, two paths climbing the hillside, one lying in the valley. What is the probability that the man, now out of sight, took the path lying in the valley on the fact that he took one of the three paths? One of us argues: there are three roads, the path along the valley is one of them, therefore the probability is 1/3. Another argues: there are two ways he may have gone, he may have climbed the hill or he may have stayed in the valley; staying in the valley is one of these two ways, therefore the probability is 1/2. Since a probability of 1/3 is inconsistent with a probability of 1/2, these two answers are inconsistent. But on the classical interpretation each is correct, therefore the interpretation is inconsistent. This inconsistency, obvious enough when the problem is simple, can be very puzzling when it is complicated.
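The counting rule just described can be written out in a few lines. This is a minimal sketch: the function name `classical_probability` and the labels given to the paths are hypothetical, chosen only to reproduce the two ways of counting in the road example.

```python
from fractions import Fraction

def classical_probability(alternatives, favourable):
    """Proportion of the listed alternatives that yield the event."""
    hits = [a for a in alternatives if a in favourable]
    return Fraction(len(hits), len(alternatives))

# The dice: six alternatives, one of which is the three-spot face.
die_faces = [1, 2, 3, 4, 5, 6]
p_three = classical_probability(die_faces, {3})

# The fork in the road, counted by paths (labels are illustrative).
by_path = ["hill path A", "hill path B", "valley path"]
p_valley_by_path = classical_probability(by_path, {"valley path"})

# The same fork, counted by where the man ends up.
by_terrain = ["climbed the hill", "stayed in the valley"]
p_valley_by_terrain = classical_probability(by_terrain, {"stayed in the valley"})

print(p_three, p_valley_by_path, p_valley_by_terrain)  # prints: 1/6 1/3 1/2
```

The function is the same in both calls; only the list of alternatives differs, and nothing in the definition says which list is the right one.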
Faced with the inconsistency there are two courses open. The first is to amend the definition by inserting the condition that each of the alternatives must be as likely as each of the others on the fact of the occurrence of one of them. This removes the inconsistency, for it cannot be that on the fact that our man took one of the three roads he is both equally likely to have taken any of them and equally likely to have climbed the hill as stayed in the valley. To take this course is to give up the attempt to define probability in favour of the attempt to set up a body of principles for deducing one probability from another: to move from the classical interpretation to the classical probability calculus of which this amended definition is the fundamental principle. The other course is to seek a consistent definition of probability by replacing the vague concept of the alternatives for an event by something more precise. Rudolf Carnap in his book Logical Foundations of Probability attempts this latter course. If the proposition that a particular event will occur were analyzable—as the doctrine of logical atomism held—in just one way into unanalyzable basic propositions, then a precise sense could be given to the alternatives for an event. Carnap does not hold that this can be done, rather he holds that for any given language the sentence which states that a certain event will occur is analyzable in just one way into the affirmation or denial of elementary sentences—comprising individual constants and primitive predicates—which are not themselves analyzable. Each elementary sentence is logically consistent with every other and the totality of elementary sentences enables a complete description of the universe to be given. He defines the concept of a state-description for a given language as any sentence which affirms or denies every elementary sentence of the language. A state-description is an elementary and comprehensive logical possibility for a given language.
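A toy case may make the notion of a state-description concrete. The sketch below assumes a hypothetical language with two individual constants, "a" and "b", and one primitive predicate, "F"; these names and the `~` notation for denial are illustrative and are not drawn from Carnap's text.

```python
from itertools import product

# Hypothetical toy language: two individual constants, one primitive predicate.
individuals = ["a", "b"]
predicates = ["F"]

# Elementary sentences: each predicate applied to each individual.
elementary = [f"{p}{i}" for p in predicates for i in individuals]

def state_descriptions(sentences):
    """Every way of affirming or denying each elementary sentence."""
    for values in product([True, False], repeat=len(sentences)):
        yield dict(zip(sentences, values))

descriptions = list(state_descriptions(elementary))

def render(description):
    """Write a state-description as a conjunction, '~' marking denial."""
    return " & ".join(s if v else f"~{s}" for s, v in description.items())

for d in descriptions:
    print(render(d))
```

With n elementary sentences there are 2^n state-descriptions, so the list of alternatives is fixed once the language is fixed, and changes when the language does.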
These elementary possibilities enable him to give a precise sense to the alternatives for any sentence in a given language which states that a particular event will occur, and so to give a consistent definition of the probability, in a given language, of one event on another. Moreover probabilities on this definition concern logic and language alone, not experiment or scientific hypothesis. Carnap’s approach raises several difficult problems. First, can such elementary sentences be defined? Second, are probabilities relative to language? If they are not, then Carnap has not given a consistent definition of probability, for the probability of one event on another will have one value when calculated in one language, another in another. The discussion of these problems promises to be lengthy; let us suppose therefore that Carnap has succeeded: that he has provided a consistent definition of probability as a logical relation between sentences. Then the following example shows that, whatever shape it may take, it is unsatisfactory. Everyone who picks mushrooms knows that amongst mushroom-like species of fungi some are edible and some are not. What is the probability that a mushroom-like fungus which a man is wondering whether to eat belongs to an edible species? One intuitive way of applying the classical interpretation would be to argue that the different alternatives for a mushroom-like fungus are the different species to which it might belong. There are a large number of mushroom-like species of which only a few are poisonous, therefore on this interpretation the probability that a mushroom-like fungus is edible is high. But in fact the poisonous species are the most common, and a man without experience who picks mushroom-like fungi will end up with many more poisonous specimens than edible ones. Therefore, on an empirical interpretation of probability, the probability of the fungus being edible is low.
There is no doubt that between these two ways of determining the probability we shall choose the second: the way which determines the probability by an empirical question, a question concerning, in one way or another, the question of which species are the commonest. Now no matter what logical definition of probability we used, consistent or inconsistent, it would give way to these empirical facts. Here is the explanation of the inconsistency of the classical definition of probability: if the alternatives to be counted are specified in a vague enough fashion, then a man may choose the alternatives in such a way that they are equally probable on an empirical interpretation: he can adjust his a priori notions to fit the facts. So he believes, perhaps, that a dice has six ways to fall, because it falls on each of its six faces with equal frequency; that there are twelve ways of choosing two balls from an urn without replacing the first before drawing the second, because there are twelve distinct selections which turn up with equal frequency. If Carnap has found a consistent a priori definition then that must be an end of a priori definitions: a consistent a priori definition might come into blatant conflict with the facts, therefore we choose an inconsistent one which we can adjust as the facts demand.

The explanation of the inconsistency of the principle of induction

This is the clue to the explanation of the inconsistency of the principle of induction. But how can the a priori support which, according to the principle, an experimental result provides for a universal hypothesis, be in danger of falling foul of scientific facts?
One experimental fact—say the dropping of a china cup onto a wooden floor—may tend to produce another—the breaking of the cup—so that there is no doubt that the question of whether the discovery that a china cup has been dropped onto a wooden floor is favourable evidence for the conclusion that it has broken is a question for science and not for logic. But no experimental fact could tend to produce the truth of a universal hypothesis. How can the a priori determination of inductive support fall foul of scientific fact? My demonstration that this can be the case rests on the fellow of a principle I have already made use of: evidence in favour of a proposition is evidence—at least as strong—in favour of any of its logical consequences. Of course the evidence may favour a consequence more strongly. Perhaps the evidence that a dice has been thrown supports to degree 2/3 the conclusion that it fell with either one spot, or two spots, or three spots, or four spots, uppermost. But the same evidence supports a logical consequence of this—that it fell either one spot, or two, or three, or four, or five spots, uppermost—to degree 5/6. This principle must not be confused with the transitivity of evidential support. Support is not transitive: the two facts that E supports F, and that F supports G, do not have the consequence that E supports G. Nor must it be confused with a principle which Carnap rejects during his discussion of Hempel’s work on confirmation (Logical Foundations of Probability, Section 87). Carnap rejects the alleged principle—he claims, without much excuse, that Hempel alleges it—that a piece of evidence which reinforces the existing evidence for a conclusion reinforces equally the existing evidence for any consequence of that conclusion. He is right to reject this, for, as he remarks, the existing evidence for that consequence might already be conclusive.
Once free from these confusions I take the principle that evidence in favour of a proposition is evidence—at least as strong—in favour of any of its logical consequences to be incontestable. Now universal hypotheses have particular consequences—the hypothesis that it always rains on Sunday has the consequence that if today is Sunday then it will rain today—so that if there is a priori support of a universal hypothesis by an experimental fact, then there is a priori support of one experimental proposition by another. But an a priori relation of support between experimental propositions, as the discussion of the mushroom-like fungus example has shown, is always in danger of conflicting with an empirical relation. Consider an example. We are driving in a motor car. One of our companions is in possession of a consistent definition of inductive support as an a priori relation. We have driven all the afternoon across uninhabited country and begin to wonder whether the car will run long enough to allow us to reach the next village. Our companion applies his definition of inductive evidence and assures us that the fact that the car has run for 200 miles without refuelling provides favourable evidence for the conclusion that it will continue to run for ever and, hence, favourable evidence for the conclusion that it will continue to run until we reach the next village. Another companion—better acquainted with the internal combustion engine—points out that, on the contrary, the fact that the car has already run for 200 miles without refuelling is very strong evidence against the conclusion that it will run another 20. Now we cannot regard the two estimates of the support given by this piece of evidence to this conclusion as both correct: the a priori estimate is in conflict with an empirical one. In fact, being firmly convinced of the scientific fact upon which the rival estimate is based, we would discard the a priori estimate.
This is not a case where one piece of evidence is favourable, another piece unfavourable, to the same conclusion: it is a case where one person asserts that a piece of evidence is favourable, another person that it is unfavourable, to the very same conclusion. Of course, if we were not firmly convinced of the scientific fact, then we might accept the a priori estimate of the support; that would be a case where the conflict between the two estimates was not forced upon our notice. If we later became convinced of the scientific fact, then we ought, to be consistent, to return and notice that in accepting the a priori measure of support we were mistaken: mistaken in accepting the measure of support, not merely in accepting—if we did accept—the supported hypothesis. Since we use an inconsistent principle of induction this re-appraisal is never forced upon us; for we can always remember that the fact that the car was able to run 200 miles without refuelling follows from the hypothesis that it can run 210 miles without refuelling and forget that this same fact follows from the hypothesis that it can run for ever. Briefly my argument here is this. If the question whether experimental facts provide evidence for a universal hypothesis were an a priori question, then the question whether an experimental fact was evidence for an experimental conclusion would also be an a priori question. But such a question is always a question for science: whether, for example, the fact that all the cherry trees in the orchard have cropped well this year is favourable evidence for the conclusion that they will crop well next. The inconsistency of the principle of induction is explained, and with the explanation the hope of formulating a satisfactory consistent principle vanishes.

The probabilities of the classical calculus concern strength of evidence

These two inconsistencies, that of the classical interpretation and that of the principle of induction, are closely related, for they each concern an interpretation of a proposition of evidential support. The propositions with which the classical calculus of probabilities enables us to reason are often expressed in such a way as to conceal the fact that they are propositions concerning evidential support, but these forms of expression are mistaken. The probabilities of the classical calculus are sometimes expressed in the form “The probability that this die will fall three spots uppermost given that it is thrown is 1/6”, and sometimes in the form “The probability that if this die is thrown it will fall three spots uppermost is 1/6.” Neither of these formulations is correct. Take the first. The existence of a fact on which the probability that this die will fall three spots uppermost is 1/6 is consistent with the existence of another fact on which the probability that this die will fall three spots uppermost is 1/3. For example on the fact that the die is thrown the probability might be 1/6, on the fact that the die is thrown and falls an odd number of spots uppermost it might be 1/3. Now from the fact that the die has been thrown together with the fact that given that the die is thrown the probability of its falling three spots uppermost is 1/6, the conclusion follows that the probability of the die falling three spots uppermost is 1/6. Again from the fact that the die has been thrown and has fallen with an odd number of spots uppermost together with the fact that given that the die is thrown and lands an odd number of spots uppermost the probability of its falling three spots uppermost is 1/3, there follows the conclusion that the probability of the die falling three spots uppermost is 1/3.
But a probability of 1/6 is inconsistent with a probability of 1/3; from consistent premises we have deduced an inconsistent conclusion. Therefore our argument is invalid. But if the probabilities in our premises are correctly expressed as the probability that the die will fall three spots uppermost given that it is thrown, the argument would be valid. This cannot be the correct formulation of those probabilities. The same invalid argument is allowed by the other formulation. The facts that the die is thrown and that there is a probability of 1/6 that if it is thrown then it will fall three spots uppermost, together have the consequence that the probability that the die will fall three spots uppermost is 1/6. If they did not there would be little point in knowing the probability of an hypothetical. By a similar argument the probability of 1/3 that if the die is thrown and falls an odd number of spots uppermost then it will fall three spots uppermost, together with the fact that it has been thrown and fallen an odd number of spots uppermost, yields the conclusion that the probability of it falling three spots uppermost is 1/3. These probabilities are inconsistent; therefore the probabilities of the classical calculus are not probabilities of hypotheticals. The probabilities of the calculus must be interpreted in such a way that the argument: The probability of P on Q is 1/6, Q is certain, therefore the probability of P is 1/6, is invalid. What probabilities fulfil this condition?
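The two relational probabilities in this argument can be computed side by side. The sketch assumes a fair six-sided die whose faces count as equal alternatives; that model, and the function names, are assumptions of the example rather than claims of the text.

```python
from fractions import Fraction

# Assumed model: a fair die, each face counted as one equal alternative.
FACES = range(1, 7)

def probability_on(event, evidence):
    """Probability of `event` on `evidence`, as a proportion of faces."""
    admitted = [f for f in FACES if evidence(f)]
    favourable = [f for f in admitted if event(f)]
    return Fraction(len(favourable), len(admitted))

def is_three(face):
    return face == 3

def thrown(face):
    return True  # every face is compatible with "the die is thrown"

def thrown_and_odd(face):
    return face % 2 == 1

p_on_thrown = probability_on(is_three, thrown)
p_on_odd = probability_on(is_three, thrown_and_odd)

# Both pieces of evidence can be certain together, yet detaching an
# unconditional probability from each would give two different values.
print(p_on_thrown, p_on_odd, p_on_thrown == p_on_odd)  # prints: 1/6 1/3 False
```

Nothing goes wrong so long as each value stays attached to its evidence; the conflict appears only when the evidence is dropped and each value is asserted of the proposition outright.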
A man who is wondering whether to eat a certain fungus specimen may calculate a probability which relates the evidence he has—perhaps that the specimen is mushroom-like—to its edibility, but his interest in this relational question arises only because of his interest in a probability which is not relational: “Is it probable—or, How probable is it—that this fungus is edible?” A man who asks this question, finding that the evidence in his possession offers little support either for the edibility or the inedibility of the fungus, will set out to collect more evidence: clearly his question is not about the support given by the evidence in his possession to the proposition that the fungus is edible. When he has eaten the fungus and found it edible he can answer the question he asked: “It is certain that the fungus was edible.” He is answering the very question he asked, yet he relies on quite different evidence and speaks some time later: the question he asked cannot be relative to time, cannot be “Is it probable now that this fungus is edible?” If he eats the fungus and it kills him, someone else may answer his question, again the very question he asked: “It is certain that the fungus was inedible.” His question, since another person can answer it on the evidence of his death, cannot have concerned his relation to the proposition, his knowledge of it or belief in it. If it does concern the strength of belief he ought to put in the edibility of the fungus, then it concerns the belief that anyone, with any evidence, at any time, ought to put; not the belief that he ought to put, in his position, with the evidence, at that time. Probability—in questions like this—does not belong to time, or person, or evidence, just as truth does not. Just as a man does not ask “Is it true now?” but “Is it true?”, so he does not ask “Is it probable now?” but “Is it probable?”.
In fact the question asked with the words “How probable is it that this fungus is edible?” is the very same as the question asked with the words “Is this fungus edible?”: the difference lies in the manner of asking. The probability question indicates that an unqualified answer is not to be expected, and the probability answer indicates what degree of trust the speaker recommends us to put in his answer, indicates, perhaps, how we are to adjust our risk to our confidence. Probability resembles truth in another respect: to ask about the probability of a proposition is not to ask whether the proposition possesses the property of “being probable”, but is rather to ask the propositional question in a particular manner. The proposition which follows “How probable is it that . . .” may itself be an hypothetical, or a universal hypothesis, or any sort of proposition whatsoever. If the man eats the fungus and finds it edible he may lose interest in his original question—now that it has been answered—and gain an interest in a question of a different sort. “Was I,” he may ask, “acting rashly when I ate that mushroom? How probable did it seem to me that it was edible?” This is certainly a relational question, though not a question about a relational probability, just as the question “What colour did it seem to you from a distance?” is a relational question but not a question about a relational property. Or he might speculate about how probable it would have seemed to him had he been in possession of a certain piece of evidence he did not have, or had he had more experience with fungi. Such questions—since they always concern the relation between a person and a proposition—cannot be the relational probabilities of the classical calculus, for into those no person enters. Or he may ask yet another question, a question about evidential support. “How strongly did the evidence I had support the conclusion that the fungus was edible?
How great a risk did I take in eating it?” Here is a question which is about the relation between two propositions, or two sorts of proposition: the principle of induction answers a question of this sort. The argument: P strongly supports Q, P is certain, therefore Q is probable, is not valid; for the premises of this argument are consistent with the existence of further evidence R which is equally certain and which strongly supports not-Q. Probability questions divide—to summarize this discussion—into two principal sorts: nonrelational questions where the questioner’s serious interest is in the proposition he asks about, for example “How probable is it that this fungus is poisonous?”, and relational questions concerning the strength of evidential support which one proposition gives to another. This distinction is confused by the fact that questions of the first sort form the basis of a number of relational questions: “How probable is it that if I eat this fungus then I shall be sick?” “If I eat this fungus, then how probable is it that I shall be sick?” “How probable did it seem to him that the fungus was poisonous?” “How probable would it seem on the evidence that this is a mushroom-like fungus, that it is edible?” The variety of these questions and the possibility of confusing them with questions about strength of evidential support shows that the contention that probability is a relation may mean a number of different things and be maintained for a number of different reasons. The principles of the probability calculus apply to propositions of the second sort alone, propositions of the form “The evidence X provides strong support for the conclusion Y.” For these are the only probability propositions which fulfil the various conditions: which concern a relation, yet not a relation with a person, and which do not validate the argument from the certainty of X to the probability of Y.
Since propositions of evidential support are those to which the probability calculus applies, they are also the propositions which the classical interpretation interprets. I conclude that both the inconsistencies concern definitions, or at least conditions, of evidential support. The principle of induction offers a sufficient condition of “the experimental result X provides favourable evidence for the hypothesis Y.” Put succinctly the principle is: The experimental result X provides favourable evidence for the hypothesis Y if, and perhaps only if, the experimental result not-X would refute the hypothesis Y. The classical interpretation offers a definition of ‘X provides evidence for or against Y to degree m/n’; put succinctly, the definition is: X provides evidence for or against Y to degree m/n if and only if X is m of the n alternatives for Y. The classical interpretation is always intended as a definition, but the principle of induction has sometimes been intended as a non-logical principle. A number of different proposals have been made as to its status, from that of a fundamental scientific hypothesis to that of a metaphysical presupposition. Since the principle is inconsistent the issue between these proposals may seem unimportant, but if the principle is to be replaced by one that is consistent the issue arises again. My argument does not rest on any particular proposal for the status of the principle, but I shall consider the clearest and most fashionable proposal: that it is a principle of logic providing a logically sufficient condition of the experimental support for a hypothesis.

Evidential support: a logical or non-logical concept?

The two inconsistencies share one further characteristic: not only are they both logical principles providing definitions—or at least logically sufficient conditions—of evidential support, but both interpret propositions of evidential support as propositions of logic.
That the principle of induction is a principle of logic is one thing, that it is a principle defining evidential support as a logical relation is another. For it might be argued that there is a logical principle defining evidential support as a non-logical relation. Investigating the logic of evidential support does not imply that evidential support is itself a logical relation, any more than investigating the logic of the concepts of mechanics implies that mechanics is a branch of logic. But to accept the principle of induction or the classical interpretation is to accept that evidential support is a logical relation. Carnap’s term “confirmation” has come to connote this logical relation. The thesis that the question whether one proposition provides favourable, but not conclusive, evidence for another is a question for logic may take a stronger or a weaker form: it may state that the concept of favourable evidence is definable solely in terms of concepts which are recognized as concepts of logic: the concepts of logical consequence, contradiction, negation, and the like; or it may state that questions about evidence share the fundamental character of questions about logic, being questions concerning conventions, or meaning, or syntax, or analysis, or questions independent of experiment, according to the view of the nature of logic the philosopher may favour. Carnap, for example, asserts the thesis in its weaker form. In Section 8 of his Logical Foundations of Probability he says: “Then he tries to determine whether and to what degree the hypothesis is confirmed by the evidence. This last question alone is what we shall be concerned with. We call it a logical question because, once a hypothesis is formulated by h and any possible evidence by e (it need not be the evidence actually observed), the problem whether and how much h is confirmed by e is to be answered merely by a logical analysis of h and e and their relations.
This question is not a question of fact in the sense that factual knowledge is required to find the answer. The sentences h and e which are studied, do themselves refer to facts. But, once h and e are given, the question mentioned requires only that we are able to understand them, to grasp their meanings, and to establish certain relations which are based upon their meanings.” Carnap contends, in Logical Foundations of Probability and elsewhere, that the analysis of probability has been the subject of a pointless dispute. He argues that there are two concepts for analysis, the concept of confirmation and the concept of relative frequency, both having a proper and independent place in the logic of probability. But a piece of analysis has already been performed once the concept of evidential support is identified with that of confirmation. First, propositions of evidential support have been declared to be relational in form: a declaration which, I have argued, is correct. Second, such propositions have been declared to be propositions of logic. By his use, from the very beginning of his discussion, of the loaded term “confirms” in place of the neutral term “provides evidence in favour” Carnap avoids altogether the question of the correctness of this analysis. He conceals the fact that confirmation and relative frequency are both of them analyses of the concept of evidential support. Does the fact that these two concepts are both analyses of evidential support mean that they are, after all, rivals? Or are there not merely two concepts of probability, but two concepts of evidential support? Or is one of them, and not the other, the correct analysis? Now there certainly is a non-logical concept: there certainly are propositions concerning evidential support which are not propositions of logic. Consider the question whether a certain ski turn, say the stem-christiana, is dangerous.
This is the question of how strongly the fact that a skier employs the stem-christiana supports the conclusion that he will sustain an injury. This question is certainly not a question for logic. If there is also a logical concept, that of confirmation, then the question “Is X favourable evidence for Y?” must be ambiguous, expressing either of two quite different questions, a question of logic and a question of fact. Now a proposition which is a logical consequence of another cannot also be its causal consequence: the logical relation excludes the natural relation, the answer to the logical question takes away the opportunity of asking the scientific question. So, by this analogy, the logical relation of support would exclude the scientific relation. The analogy is borne out by our discussion of the inconsistency of the classical interpretation and of the principle of induction: for there we found, in two important cases, the logical relation giving way before the scientific: they were examples where, since a scientific relation was recognised, no logical one could be. If the relation of confirmation does exist, where is an example of a pair of propositions between which it holds?