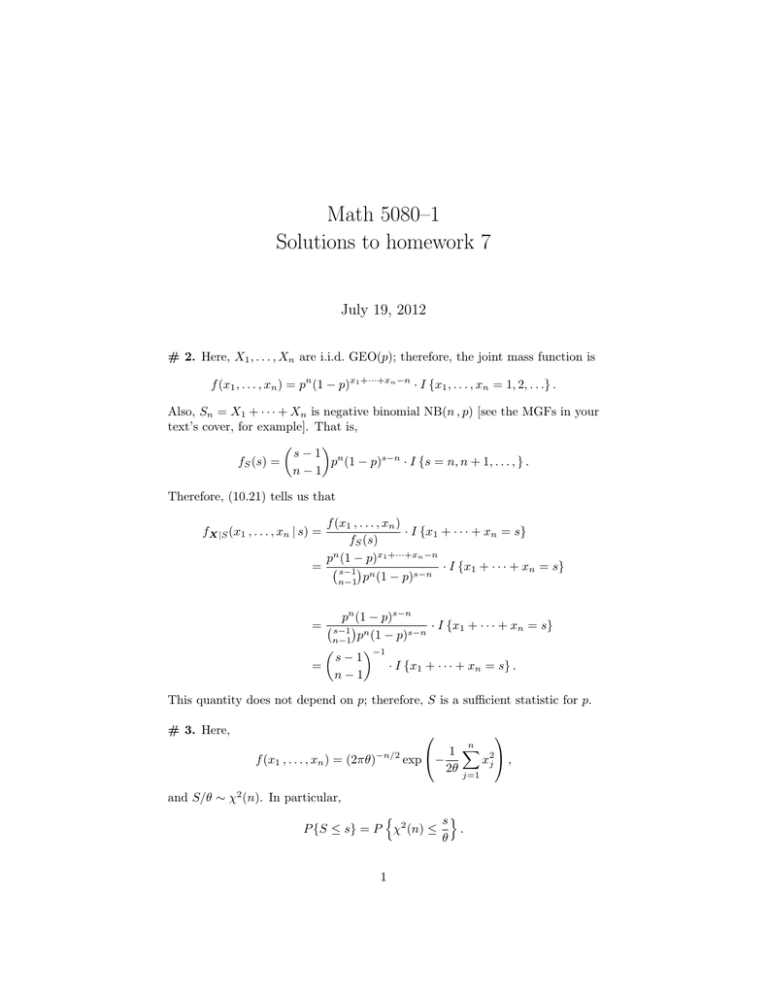

Math 5080–1 Solutions to homework 7 July 19, 2012


# 2. Here, $X_1, \dots, X_n$ are i.i.d. GEO($p$); therefore, the joint mass function is

$$f(x_1, \dots, x_n) = p^n (1-p)^{x_1 + \cdots + x_n - n} \cdot I\{x_1, \dots, x_n = 1, 2, \dots\}.$$

Also, $S := X_1 + \cdots + X_n$ is negative binomial NB($n$, $p$) [see the MGFs in your text's cover, for example]. That is,

$$f_S(s) = \binom{s-1}{n-1} p^n (1-p)^{s-n} \cdot I\{s = n, n+1, \dots\}.$$

Therefore, (10.21) tells us that

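$$\begin{aligned}
f_{X|S}(x_1, \dots, x_n \mid s) &= \frac{f(x_1, \dots, x_n)}{f_S(s)} \cdot I\{x_1 + \cdots + x_n = s\} \\
&= \frac{p^n (1-p)^{x_1 + \cdots + x_n - n}}{\binom{s-1}{n-1} p^n (1-p)^{s-n}} \cdot I\{x_1 + \cdots + x_n = s\} \\
&= \frac{p^n (1-p)^{s-n}}{\binom{s-1}{n-1} p^n (1-p)^{s-n}} \cdot I\{x_1 + \cdots + x_n = s\} \\
&= \binom{s-1}{n-1}^{-1} \cdot I\{x_1 + \cdots + x_n = s\}.
\end{aligned}$$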

This quantity does not depend on p; therefore, S is a sufficient statistic for p.
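As a quick sanity check of this computation, the following sketch enumerates every sample with a fixed sum and confirms numerically that the ratio of the joint mass function to the mass function of $S$ is the same for several values of $p$. The function names and the choices $n = 3$, $s = 7$ are illustrative assumptions, not part of the original solution.

```python
from itertools import product
from math import comb, isclose


def geometric_joint_pmf(xs, p):
    """Joint pmf of i.i.d. GEO(p) observations supported on 1, 2, ..."""
    n = len(xs)
    return p**n * (1 - p) ** (sum(xs) - n)


def negative_binomial_pmf(s, n, p):
    """pmf of S = X_1 + ... + X_n, i.e. NB(n, p) on s = n, n+1, ..."""
    return comb(s - 1, n - 1) * p**n * (1 - p) ** (s - n)


def conditional_pmf(xs, p):
    """f(x | s) with s = sum(xs), computed as joint pmf / pmf of S."""
    return geometric_joint_pmf(xs, p) / negative_binomial_pmf(sum(xs), len(xs), p)


# Illustrative (assumed) sample size and value of the sufficient statistic.
n, s = 3, 7

# All samples of positive integers (x_1, ..., x_n) with x_1 + ... + x_n = s.
samples = [xs for xs in product(range(1, s + 1), repeat=n) if sum(xs) == s]

# For each p, collect the conditional pmf over all samples with sum s.
conditional_values = {
    p: [conditional_pmf(xs, p) for xs in samples] for p in (0.2, 0.5, 0.9)
}

for p, values in conditional_values.items():
    # Every value should equal 1 / C(s-1, n-1), whatever p is.
    assert all(isclose(v, 1 / comb(s - 1, n - 1)) for v in values)
```

The conditional distribution is uniform over the $\binom{s-1}{n-1}$ samples with sum $s$, which is why no trace of $p$ remains.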

# 3. Here,

$$f(x_1, \dots, x_n) = (2\pi\theta)^{-n/2} \exp\left( -\frac{1}{2\theta} \sum_{j=1}^n x_j^2 \right),$$

and, with $S := X_1^2 + \cdots + X_n^2$, we have $S/\theta \sim \chi^2(n)$. In particular,

$$P\{S \le s\} = P\left\{ \chi^2(n) \le \frac{s}{\theta} \right\}.$$


Differentiate both sides (d/ds) to see that

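$$f_S(s) = f_{\chi^2(n)}(s/\theta) \times \frac{1}{\theta} = \frac{1}{2^{n/2}\,\Gamma(n/2)} \left( \frac{s}{\theta} \right)^{(n/2)-1} e^{-s/(2\theta)} \times \frac{1}{\theta}.$$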

It follows from (10.21) that

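$$\begin{aligned}
f_{X|S}(x_1, \dots, x_n \mid s) &= \frac{f(x_1, \dots, x_n)}{f_S(s)} \cdot I\{x_1^2 + \cdots + x_n^2 = s\} \\
&= \frac{(2\pi\theta)^{-n/2} \exp\left( -\frac{1}{2\theta} \sum_{j=1}^n x_j^2 \right)}{\frac{1}{2^{n/2}\,\Gamma(n/2)} \left( \frac{s}{\theta} \right)^{(n/2)-1} e^{-s/(2\theta)} \times \frac{1}{\theta}} \cdot I\{x_1^2 + \cdots + x_n^2 = s\} \\
&= \frac{\Gamma(n/2)}{\pi^{n/2}\, s^{(n/2)-1}} \cdot I\{x_1^2 + \cdots + x_n^2 = s\}.
\end{aligned}$$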

This does not depend on θ; therefore, S is sufficient for θ.
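The cancellation of $\theta$ can be checked numerically. The sketch below evaluates the ratio of the joint density to the density of $S$ at one fixed sample for several values of $\theta$ and compares it with the closed form $\Gamma(n/2) / (\pi^{n/2} s^{(n/2)-1})$. The sample values and function names are arbitrary assumptions chosen for illustration.

```python
from math import exp, gamma, isclose, pi


def normal_joint_pdf(xs, theta):
    """Joint density of i.i.d. N(0, theta) observations (theta = variance)."""
    n = len(xs)
    sum_sq = sum(x * x for x in xs)
    return (2 * pi * theta) ** (-n / 2) * exp(-sum_sq / (2 * theta))


def sum_of_squares_pdf(s, n, theta):
    """Density of S = sum of X_j^2, using S / theta ~ chi-square(n)."""
    chi2_pdf = (s / theta) ** (n / 2 - 1) * exp(-s / (2 * theta)) / (
        2 ** (n / 2) * gamma(n / 2)
    )
    return chi2_pdf / theta  # the factor 1/theta comes from the chain rule


def conditional_density(xs, theta):
    """f(x | s) at s = sum of squares of xs, as joint density / density of S."""
    s = sum(x * x for x in xs)
    return normal_joint_pdf(xs, theta) / sum_of_squares_pdf(s, len(xs), theta)


# Arbitrary (assumed) sample used only to illustrate the cancellation.
xs = (0.3, -1.2, 2.0, 0.7)
n = len(xs)
s = sum(x * x for x in xs)

closed_form = gamma(n / 2) / (pi ** (n / 2) * s ** (n / 2 - 1))
ratios = [conditional_density(xs, theta) for theta in (0.5, 1.0, 4.0)]

# The ratio is the same for every theta and matches the closed form.
assert all(isclose(r, closed_form) for r in ratios)
```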

# 4. First of all,

$$f(x_1, \dots, x_n) = e^{-\sum_{j=1}^n (x_j - \eta)} \cdot I\{x_{1:n} > \eta\}.$$

Now we work on the distribution of $S = X_{1:n}$. Since

$$P\{S \le s\} = 1 - \left( P\{X_1 > s\} \right)^n = 1 - (1 - F(s))^n \quad \text{for all } s,$$

we differentiate both sides (d/ds) to see that

$$f_S(s) = n\,(1 - F(s))^{n-1} f(s).$$

Since $f(x) = e^{-(x-\eta)} \cdot I\{x > \eta\}$,

$$F(s) = \int_\eta^s e^{-(a-\eta)}\, da = 1 - e^{-(s-\eta)} \quad \text{if } s > \eta.$$

If s ≤ η then F (s) = 0. Therefore,

$$f_S(s) = n e^{-n(s-\eta)} \cdot I\{s > \eta\} \quad \Rightarrow \quad S \sim \mathrm{DE}(n, \eta).$$

And by (10.21),

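$$\begin{aligned}
f_{X|S}(x_1, \dots, x_n \mid s) &= \frac{f(x_1, \dots, x_n)}{f_S(s)} \cdot I\{x_{1:n} = s\} \\
&= \frac{e^{-\sum_{j=1}^n (x_j - \eta)} \cdot I\{x_{1:n} > \eta\}}{n e^{-n(s-\eta)} \cdot I\{s > \eta\}} \cdot I\{x_{1:n} = s\} \\
&= \frac{e^{-\sum_{j=1}^n (x_j - s)}}{n} \cdot I\{x_{1:n} = s\}.
\end{aligned}$$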

This quantity does not depend on $\eta$; therefore, $S = X_{1:n}$ is sufficient for $\eta$.
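The density $f_S(s) = n e^{-n(s-\eta)} \cdot I\{s > \eta\}$ derived above has mean $\eta + 1/n$ and tail $P\{S > t\} = e^{-n(t-\eta)}$ for $t > \eta$. A small simulation can be compared against both. This is only a sketch: the seed, the values $n = 5$ and $\eta = 2$, the number of trials, and the helper name are all assumptions made for illustration.

```python
import random
from math import exp


def simulate_minimum(n, eta, trials, seed=0):
    """Draw `trials` copies of the minimum of n i.i.d. shifted exponentials.

    Each observation is eta + Exp(1), matching f(x) = exp(-(x - eta)) on x > eta.
    """
    rng = random.Random(seed)
    return [
        min(eta + rng.expovariate(1.0) for _ in range(n)) for _ in range(trials)
    ]


# Assumed illustration values.
n, eta = 5, 2.0
minima = simulate_minimum(n, eta, trials=20_000)

# Empirical mean versus the mean eta + 1/n implied by f_S.
sample_mean = sum(minima) / len(minima)
theoretical_mean = eta + 1 / n

# Empirical tail probability versus exp(-n (t - eta)).
t = eta + 0.1
empirical_tail = sum(m > t for m in minima) / len(minima)
theoretical_tail = exp(-n * (t - eta))
```

With this many trials the empirical and theoretical values should agree to roughly two decimal places.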

# 6. The joint mass function of our data is

$$\begin{aligned}
f(x_1, \dots, x_n) &= \prod_{i=1}^n \binom{m_i}{x_i} p^{x_i} q^{m_i - x_i} \cdot I\{x_i = 0, \dots, m_i \text{ for all } i = 1, \dots, n\} \\
&= C\, p^{\sum_{i=1}^n x_i}\, q^{\sum_{i=1}^n (m_i - x_i)} \cdot I\{x_i = 0, \dots, m_i \text{ for all } i = 1, \dots, n\},
\end{aligned}$$

where $C := \prod_{i=1}^n \binom{m_i}{x_i}$. That is,

$$f(x_1, \dots, x_n) = C' (p/q)^{\sum_{i=1}^n x_i} \cdot I\{x_i = 0, \dots, m_i \text{ for all } i = 1, \dots, n\},$$

where $C' := C q^{\sum_{i=1}^n m_i}$. The factorization theorem does the rest for us.
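One consequence of this factorization is that, for two samples with the same value of $\sum_i x_i$, the ratio of their joint mass functions is free of $p$: only the binomial coefficients survive. The sketch below checks this for two hypothetical samples with the same sum. The values of $m_i$, the samples, and the function name are assumptions for illustration.

```python
from math import comb, isclose, prod


def binomial_joint_pmf(xs, ms, p):
    """Joint pmf of independent BIN(m_i, p) observations x_i."""
    q = 1 - p
    return prod(comb(m, x) * p**x * q ** (m - x) for x, m in zip(xs, ms))


# Hypothetical numbers of trials and two samples with the same sum (8).
ms = (4, 6, 3)
xs = (1, 5, 2)
ys = (4, 2, 2)

# Ratio of joint pmfs at several values of p.
ratios = [
    binomial_joint_pmf(xs, ms, p) / binomial_joint_pmf(ys, ms, p)
    for p in (0.1, 0.5, 0.8)
]

# Only the binomial coefficients (the factor C) remain in the ratio.
coefficient_ratio = prod(comb(m, x) for x, m in zip(xs, ms)) / prod(
    comb(m, y) for y, m in zip(ys, ms)
)

assert all(isclose(r, coefficient_ratio) for r in ratios)
```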

# 7. Let us apply the factorization theorem. The joint mass function is

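$$\begin{aligned}
f(x_1, \dots, x_n) &= \prod_{i=1}^n \binom{x_i - 1}{r_i - 1} p^{r_i} q^{x_i - r_i} \cdot I\{x_i = r_i, r_i + 1, \dots \text{ for all } i \le n\} \\
&= C\, q^{\sum_{i=1}^n x_i} \cdot I\{x_i = r_i, r_i + 1, \dots \text{ for all } i \le n\},
\end{aligned}$$

where $C := \prod_{i=1}^n \binom{x_i - 1}{r_i - 1} (p/q)^{r_i}$. Therefore, $S := \sum_{i=1}^n X_i$ is sufficient for $p$.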
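To make the use of the factorization theorem explicit, the joint mass function can be split into a factor that depends on the data only through $s = \sum_{i=1}^n x_i$ and a factor that is free of $p$. The labels $g$ and $h$ below are introduced here for illustration and do not appear in the original solution.

```latex
f(x_1, \dots, x_n)
  = \underbrace{q^{s} \prod_{i=1}^n (p/q)^{r_i}}_{g(s;\,p)}
    \cdot
    \underbrace{\prod_{i=1}^n \binom{x_i - 1}{r_i - 1}
      \cdot I\{x_i = r_i, r_i + 1, \dots \text{ for all } i \le n\}}_{h(x_1, \dots, x_n)},
  \qquad s = \sum_{i=1}^n x_i .
```

Here $g$ collects everything involving $p$, and $h$ collects the binomial coefficients and the indicator, neither of which involves $p$.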

# 16. The likelihood function is

$$L(p) = \prod_{i=1}^n \binom{X_i - 1}{r_i - 1} p^{r_i} q^{X_i - r_i},$$

therefore, the log-likelihood function is

$$\ln L(p) = \sum_{i=1}^n \ln \binom{X_i - 1}{r_i - 1} + \ln p \sum_{i=1}^n r_i + \ln(1-p) \sum_{i=1}^n (X_i - r_i).$$

Therefore,

$$\frac{d}{dp} \ln L(p) = \frac{\sum_{i=1}^n r_i}{p} - \frac{\sum_{i=1}^n (X_i - r_i)}{1 - p}.$$

Set this equal to zero in order to see that the usual MLE of p is

$$\hat{p} = \frac{\sum_{i=1}^n r_i}{\sum_{i=1}^n (X_i - r_i) + \sum_{i=1}^n r_i} = \frac{\sum_{i=1}^n r_i}{\sum_{i=1}^n X_i}.$$

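Now let us maximize the mass function of $S := \sum_{i=1}^n X_i$. Examine the MGF's to see that $S \sim \mathrm{NB}\left( \sum_{i=1}^n r_i,\, p \right)$. Therefore,

$$f_S(s) = \binom{s - 1}{\sum_{i=1}^n r_i - 1} p^{\sum_{i=1}^n r_i}\, q^{s - \sum_{i=1}^n r_i} \cdot I\left\{ s \ge \sum_{i=1}^n r_i \right\}.$$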


The corresponding likelihood function is

$$L_S(p) = \binom{S - 1}{\sum_{i=1}^n r_i - 1} p^{\sum_{i=1}^n r_i}\, q^{S - \sum_{i=1}^n r_i},$$

whose log is

$$\ln L_S(p) = \ln \binom{S - 1}{\sum_{i=1}^n r_i - 1} + \ln p \sum_{i=1}^n r_i + \left( S - \sum_{i=1}^n r_i \right) \ln(1 - p).$$

This yields

$$\frac{d}{dp} \ln L_S(p) = \frac{\sum_{i=1}^n r_i}{p} - \frac{S - \sum_{i=1}^n r_i}{1 - p}.$$

Set the preceding to zero to see that $\hat{p} = \sum_{i=1}^n r_i / S$, as before. So the answer is "yes," the two procedures yield the same MLE.
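The agreement of the two procedures can also be checked numerically. The sketch below maximizes both log-likelihoods over a grid of $p$ values and compares the maximizers with $\sum_i r_i / \sum_i X_i$. The data, the grid, and the function names are hypothetical choices made for illustration, and a grid search stands in for the calculus argument.

```python
from math import comb, log


def log_likelihood_full(p, xs, rs):
    """ln L(p) for independent NB(r_i, p) observations x_i."""
    return sum(
        log(comb(x - 1, r - 1)) + r * log(p) + (x - r) * log(1 - p)
        for x, r in zip(xs, rs)
    )


def log_likelihood_sum(p, s, r_total):
    """ln L_S(p) based only on S ~ NB(sum of r_i, p)."""
    return (
        log(comb(s - 1, r_total - 1))
        + r_total * log(p)
        + (s - r_total) * log(1 - p)
    )


# Hypothetical data: each x_i is at least r_i.
rs = (2, 3, 1)
xs = (7, 12, 5)

# Grid of candidate values of p strictly inside (0, 1).
grid = [k / 1000 for k in range(1, 1000)]

p_hat_full = max(grid, key=lambda p: log_likelihood_full(p, xs, rs))
p_hat_sum = max(grid, key=lambda p: log_likelihood_sum(p, sum(xs), sum(rs)))
closed_form = sum(rs) / sum(xs)

# Both grid maximizers land within one grid step of the closed form.
assert abs(p_hat_full - closed_form) <= 1e-3
assert abs(p_hat_sum - closed_form) <= 1e-3
```

The two log-likelihoods differ only by a term that does not involve $p$, which is why they share the same maximizer.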