Review

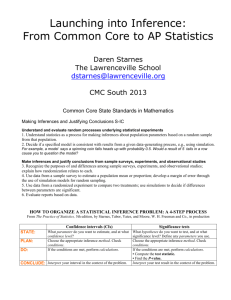

Flowchart: choosing an inference procedure (1-S = one sample, 2-S = two samples; P### = page reference as given on the slide)

• Question about µ?
    ∗ C.I.?
        ⋆ 1-S: σ known? P366 / σ unknown? P447
        ⋆ 2-S: P476
    ∗ Test?
        ⋆ 1-S: σ known? P378 / σ unknown? P450
        ⋆ 2-S: P476
• Question about p?
    ∗ C.I.?
        ⋆ 1-S: P505
        ⋆ 2-S: P526
    ∗ Test?
        ⋆ 1-S: P513
        ⋆ 2-S: P532

• Inference about µ with known σ: z-procedures (confidence interval & test of significance)
    • Confidence intervals:
        ∗ form: estimate ± margin of error / interpretation
        ∗ $\left(\bar{x} - z^* \dfrac{\sigma}{\sqrt{n}},\ \bar{x} + z^* \dfrac{\sigma}{\sqrt{n}}\right)$
        ∗ $z^*$ is determined by the confidence level $C$: it is the z-score with upper-tail area $(1 - C)/2$
    • Test of significance:
        ∗ hypotheses: $H_0$ vs. $H_a$, with $H_0: \mu = \mu_0$
        ∗ test statistic: $z = \dfrac{\bar{x} - \mu_0}{\sigma/\sqrt{n}}$
        ∗ P-value:
            ⋆ $H_a: \mu > \mu_0$: upper tail probability corresponding to $z$
            ⋆ $H_a: \mu < \mu_0$: lower tail probability corresponding to $z$
            ⋆ $H_a: \mu \neq \mu_0$: twice the upper tail probability corresponding to $|z|$
        ∗ significance level $\alpha$ and conclusion
    • Assumptions for z-procedures:
        ∗ the sample is an SRS
        ∗ the population has a normal distribution
        ∗ the population standard deviation σ is known
    • The margin of error of a confidence interval is affected by $C$, σ and $n$. To get a level $C$ C.I. with margin of error $m$, we need an SRS with sample size
        $n = \left(\dfrac{z^* \sigma}{m}\right)^2$
    • The significance of a test is also affected by the sample size

• Inference about µ with unknown σ: t-procedures (confidence interval & test of significance)
    • Standard error: $\dfrac{s}{\sqrt{n}}$
    • t-distribution; degrees of freedom $n - 1$
    • Confidence intervals:
        ∗ $\left(\bar{x} - t^* \dfrac{s}{\sqrt{n}},\ \bar{x} + t^* \dfrac{s}{\sqrt{n}}\right)$
        ∗ $t^*$ is determined by the confidence level $C$: it is the t-score with upper-tail area $(1 - C)/2$
    • Test of significance:
        ∗ hypotheses: $H_0$ vs. $H_a$, with $H_0: \mu = \mu_0$
        ∗ test statistic: $t = \dfrac{\bar{x} - \mu_0}{s/\sqrt{n}}$
        ∗ P-value:
            ⋆ $H_a: \mu > \mu_0$: upper tail probability corresponding to $t$
            ⋆ $H_a: \mu < \mu_0$: lower tail probability corresponding to $t$
            ⋆ $H_a: \mu \neq \mu_0$: twice the upper tail probability corresponding to $|t|$
        ∗ significance level $\alpha$ and conclusion

• Inference about two means: $\mu_1 - \mu_2$
    • Standard error for $\bar{x}_1 - \bar{x}_2$: $\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}$
    • Confidence interval for $\mu_1 - \mu_2$:
        ∗ $\left((\bar{x}_1 - \bar{x}_2) - t^* \sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}},\ (\bar{x}_1 - \bar{x}_2) + t^* \sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}\right)$
        ∗ $t^*$ is determined by the confidence level $C$: it is the t-score with upper-tail area $(1 - C)/2$
        ∗ degrees of freedom: smaller of $n_1 - 1$ and $n_2 - 1$
    • Test of significance:
        ∗ hypotheses: $H_0$ vs. $H_a$, with $H_0: \mu_1 = \mu_2$ (i.e. $\mu_1 - \mu_2 = 0$)
        ∗ test statistic: $t = \dfrac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$
        ∗ P-value:
            ⋆ degrees of freedom: smaller of $n_1 - 1$ and $n_2 - 1$
            ⋆ $H_a: \mu_1 > \mu_2$: upper tail probability corresponding to $t$
            ⋆ $H_a: \mu_1 < \mu_2$: lower tail probability corresponding to $t$
            ⋆ $H_a: \mu_1 \neq \mu_2$: twice the upper tail probability corresponding to $|t|$
        ∗ significance level $\alpha$ and conclusion

• Inference about population proportion p: z-procedures (confidence interval & test of significance)
    • Sampling distribution of the sample proportion $\hat{p}$ for an SRS:
        ∗ mean of $\hat{p}$ equals the population proportion $p$
        ∗ standard deviation of $\hat{p}$ equals $\sqrt{\dfrac{p(1 - p)}{n}}$
        ∗ if the sample size is large, $\hat{p}$ is approximately normal, i.e. $\hat{p} \overset{\text{approx}}{\sim} N\!\left(p, \sqrt{\dfrac{p(1 - p)}{n}}\right)$
    • Standard error of $\hat{p}$: $\sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}$
    • Large-sample confidence intervals:
        ∗ $\left(\hat{p} - z^* \sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}},\ \hat{p} + z^* \sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}\right)$
        ∗ $z^*$ is determined by the confidence level $C$: it is the z-score with upper-tail area $(1 - C)/2$
        ∗ use it only when $n\hat{p} \geq 15$ and $n(1 - \hat{p}) \geq 15$
    • Plus four confidence intervals:
        ∗ $\left(\tilde{p} - z^* \sqrt{\dfrac{\tilde{p}(1 - \tilde{p})}{n + 4}},\ \tilde{p} + z^* \sqrt{\dfrac{\tilde{p}(1 - \tilde{p})}{n + 4}}\right)$
        ∗ $\tilde{p} = \dfrac{\text{number of successes in the sample} + 2}{n + 4}$
        ∗ use it when the confidence level is at least 90% and the sample size $n$ is at least 10
    • Test of significance:
        ∗ hypotheses: $H_0$ vs. $H_a$, with $H_0: p = p_0$
        ∗ test statistic: $z = \dfrac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1 - p_0)}{n}}}$
        ∗ P-value:
            ⋆ $H_a: p > p_0$: upper tail probability corresponding to $z$
            ⋆ $H_a: p < p_0$: lower tail probability corresponding to $z$
            ⋆ $H_a: p \neq p_0$: twice the upper tail probability corresponding to $|z|$
        ∗ significance level $\alpha$ and conclusion
        ∗ use this test when $np_0 \geq 10$ and $n(1 - p_0) \geq 10$

• Inference about two proportions: $p_1 - p_2$
    • Sampling distribution of $\hat{p}_1 - \hat{p}_2$:
        ∗ mean of $\hat{p}_1 - \hat{p}_2$ is $p_1 - p_2$
        ∗ standard deviation of $\hat{p}_1 - \hat{p}_2$ is $\sqrt{\dfrac{p_1(1 - p_1)}{n_1} + \dfrac{p_2(1 - p_2)}{n_2}}$
        ∗ if the sample sizes are large, $\hat{p}_1 - \hat{p}_2$ is approximately normal
    • Standard error of $\hat{p}_1 - \hat{p}_2$: $\sqrt{\dfrac{\hat{p}_1(1 - \hat{p}_1)}{n_1} + \dfrac{\hat{p}_2(1 - \hat{p}_2)}{n_2}}$
    • Large-sample confidence intervals:
        ∗ $\left((\hat{p}_1 - \hat{p}_2) - z^* \mathrm{SE},\ (\hat{p}_1 - \hat{p}_2) + z^* \mathrm{SE}\right)$, where SE is the standard error of $\hat{p}_1 - \hat{p}_2$:
          $\mathrm{SE} = \sqrt{\dfrac{\hat{p}_1(1 - \hat{p}_1)}{n_1} + \dfrac{\hat{p}_2(1 - \hat{p}_2)}{n_2}}$
        ∗ $z^*$ is determined by the confidence level $C$: it is the z-score with upper-tail area $(1 - C)/2$
        ∗ use it only when $n_i\hat{p}_i \geq 10$ and $n_i(1 - \hat{p}_i) \geq 10$ in both samples ($i = 1, 2$)
    • Plus four confidence intervals:
        ∗ $\left((\tilde{p}_1 - \tilde{p}_2) - z^* \mathrm{SE},\ (\tilde{p}_1 - \tilde{p}_2) + z^* \mathrm{SE}\right)$, where SE is the standard error of $\tilde{p}_1 - \tilde{p}_2$:
          $\mathrm{SE} = \sqrt{\dfrac{\tilde{p}_1(1 - \tilde{p}_1)}{n_1 + 2} + \dfrac{\tilde{p}_2(1 - \tilde{p}_2)}{n_2 + 2}}$
        ∗ $\tilde{p}_i = \dfrac{\text{number of successes in the } i\text{th sample} + 1}{n_i + 2}$, $i = 1, 2$
        ∗ use it when $n_1 \geq 5$ and $n_2 \geq 5$
    • Test of significance:
        ∗ hypotheses: $H_0$ vs. $H_a$, with $H_0: p_1 = p_2$ (i.e. $p_1 - p_2 = 0$)
        ∗ pooled sample proportion $\hat{p}$:
          $\hat{p} = \dfrac{\text{number of successes in both samples combined}}{\text{number of individuals in both samples combined}}$
        ∗ test statistic: $z = \dfrac{\hat{p}_1 - \hat{p}_2}{\sqrt{\hat{p}(1 - \hat{p})\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}}$
        ∗ P-value:
            ⋆ $H_a: p_1 - p_2 > 0$: upper tail probability corresponding to $z$
            ⋆ $H_a: p_1 - p_2 < 0$: lower tail probability corresponding to $z$
            ⋆ $H_a: p_1 - p_2 \neq 0$: twice the upper tail probability corresponding to $|z|$
        ∗ significance level $\alpha$ and conclusion
        ∗ use this test when the counts of successes and failures are each 5 or more in both samples

• Variables: Categorical vs. Quantitative
• Graphs for distributional information: Pie chart, Bar graph, Histogram, Stemplot, Timeplot, Boxplot
• Overall pattern of the graph: Symmetric/Skewed, Center, Spread, Outlier, Trend

• Measure of center: Mean/Median
• Measure of variability: Quartiles ($Q_1$, $Q_2$, $Q_3$), Range, IQR, 1.5×IQR rule, Outlier, Variance, Standard deviation
• Five-number summary, Boxplot

• Density curve
• Normal distributions / Normal curves
• z-score, Standard normal distribution
• 68-95-99.7 rule, Probabilities for normal distribution

• Explanatory variable / Response variable
• Scatterplot: Direction (Positive / Negative), Form (Linear / Nonlinear), Strength, Outlier
• Correlation

• Linear regression: $\hat{y} = a + bx$; Slope $b$, Intercept $a$, Prediction
• Correlation and regression, $r^2$, Residual
• Cautions for regression: Influential observations, Extrapolation, Lurking variables

• Sample / Population
• Random sampling design: Simple random sample (SRS), Stratified random sample, Multistage sample
• Bad samples: Voluntary response sample, Convenience sample

• Observational studies & Experimental studies (experiments)
• Treatments / Factors
• Design of experiments:
    ∗ control (comparison, placebo)
    ∗ randomization (table of random digits, double-blind)
    ∗ matched pairs design / Block design

• Probability: Sample space (S) & Events
• Rules for probability model:
    1. for any event A, $0 \leq P(A) \leq 1$
    2. for sample space S, $P(S) = 1$
    3. if two events A and B are disjoint, then $P(A \text{ or } B) = P(A) + P(B)$
    4. for any event A, $P(A \text{ does not occur}) = 1 - P(A)$
• Discrete probability models / Continuous probability models
• Random variables / Distributions

• Population / Sample; Parameters / Statistics: µ / x̄, σ / s, p / p̂
• Statistics are random variables
• Sampling distribution of the sample mean $\bar{x}$ for an SRS:
    ∗ mean of $\bar{x}$ equals the population mean µ
    ∗ standard deviation of $\bar{x}$ equals $\dfrac{\sigma}{\sqrt{n}}$, where σ is the population standard deviation and $n$ is the sample size
    ∗ if the population has a normal distribution, then $\bar{x} \sim N(\mu, \sigma/\sqrt{n})$
    ∗ central limit theorem: if the sample size is large ($n \geq 30$), then $\bar{x}$ is approximately normal, i.e. $\bar{x} \overset{\text{approx}}{\sim} N(\mu, \sigma/\sqrt{n})$
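
The z-based formulas reviewed in these slides (confidence interval and test for µ with known σ, the sample-size rule, the plus four interval for p, and the pooled two-proportion test) can be checked numerically. What follows is only a minimal sketch, not part of the course material: the function names are hypothetical, and it relies on Python's standard-library `statistics.NormalDist` for normal probabilities. The t-procedures are left out because the standard library has no t-distribution.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal N(0, 1)


def z_star(conf_level):
    """Critical value z*: the z-score with upper-tail area (1 - C)/2."""
    return STD_NORMAL.inv_cdf(1 - (1 - conf_level) / 2)


def z_interval(xbar, sigma, n, conf_level=0.95):
    """Level-C confidence interval for mu when sigma is known."""
    margin = z_star(conf_level) * sigma / sqrt(n)
    return xbar - margin, xbar + margin


def z_test(xbar, mu0, sigma, n, alternative="two-sided"):
    """z statistic and P-value for H0: mu = mu0 (sigma known)."""
    z = (xbar - mu0) / (sigma / sqrt(n))
    if alternative == "greater":      # Ha: mu > mu0, upper tail
        p_value = 1 - STD_NORMAL.cdf(z)
    elif alternative == "less":       # Ha: mu < mu0, lower tail
        p_value = STD_NORMAL.cdf(z)
    else:                             # Ha: mu != mu0, twice the upper tail of |z|
        p_value = 2 * (1 - STD_NORMAL.cdf(abs(z)))
    return z, p_value


def sample_size(sigma, margin, conf_level=0.95):
    """Smallest n giving margin of error at most m: n = (z* sigma / m)^2, rounded up."""
    return ceil((z_star(conf_level) * sigma / margin) ** 2)


def plus_four_interval(successes, n, conf_level=0.95):
    """Plus four interval for p: add 2 successes and 2 failures."""
    p_tilde = (successes + 2) / (n + 4)
    margin = z_star(conf_level) * sqrt(p_tilde * (1 - p_tilde) / (n + 4))
    return p_tilde - margin, p_tilde + margin


def two_prop_z_test(x1, n1, x2, n2):
    """Two-sided z test of H0: p1 = p2 using the pooled sample proportion."""
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (x1 / n1 - x2 / n2) / se
    return z, 2 * (1 - STD_NORMAL.cdf(abs(z)))


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    print(z_interval(xbar=105, sigma=15, n=25))
    print(z_test(xbar=105, mu0=100, sigma=15, n=25))
    print(sample_size(sigma=15, margin=3))
    print(plus_four_interval(successes=8, n=20))
    print(two_prop_z_test(30, 100, 20, 100))
```

The sketch does not check the conditions listed in the slides (SRS, normal population, minimum counts of successes and failures); those still have to be verified before any of the results are interpreted.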