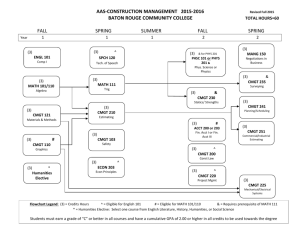

B.S. Program in Construction Management

In this Report …
