Author(s) Hatcher, Ervin B.

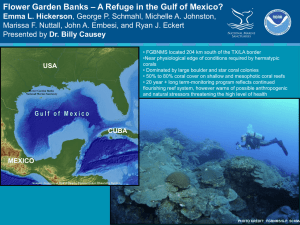
