Neural network parameterisation of the mapping of wave–spectra onto nonlinear four–wave interactions


Kathrin Wahle ∗ , Heinz Günther, Helmut Schiller

GKSS Research Center, Max–Planck–Str. 1, 21502 Geesthacht, Germany

Abstract

A new approach to parameterise the exact nonlinear interaction source (snl) term

for wind wave spectra is presented. Discrete wave spectra are directly mapped onto

the corresponding snl–terms using a neural net (NN). The NN was trained with

modeled wave spectra varying from single mode spectra to highly complex ones. The

specification of training data was based on a classification of the wave spectra by

cluster analysis. In the course of structuring the NN, the intrinsic dimensionality of

the spectra was estimated with an auto-associative neural net (AANN). The AANN

might be used for a scope check of the method.

Key words: Neural networks, Nonlinear wave–wave interaction, Wave–spectra,

Wave models, Cluster analysis

1 Introduction

Wave–models compute the dynamics of the sea–surface by solving the action balance equation describing the evolution of interacting weakly–nonlinear

waves (see e.g. Komen et al., 1994). Besides the propagation of waves this involves

growth of waves due to wind, the dissipation of wave energy due to whitecapping and the transfer of wave energy due to nonlinear wave–wave interaction. The computation of the last–mentioned process is by far the most

time consuming step in the modelling. Operational wave–models therefore use

approximations such as the well known discrete interaction approximation (DIA) of

Hasselmann et al. (1985). The DIA is computationally fast but has known

deficiencies.

∗ Corresponding author.

Email address: kathrin.wahle@gkss.de (Kathrin Wahle).

Preprint submitted to Elsevier

2 April 2009

The further development of third generation operational wave–models requires

a replacement of the approximate methods for the calculation of the nonlinear wave–wave interaction by fast and accurate methods. Attempts have

been made with extensions of the DIA but they are so far not generally applicable since tuning for different classes of wave spectra is needed. The same

problem occurs for methods based on diffusion operators. For a comprehensive

discussion see Cavaleri et al. (2007).

In this paper a neural net (NN) parametrisation of the mapping between

wave–spectra and the corresponding nonlinear wave–wave interaction will be

presented. The idea of applying neural networks in this context was first introduced by Krasnopolsky et al. (2001), Krasnopolsky et al. (2002). There the

wave spectra as well as the nonlinear interaction source terms are assumed

to be separable functions of frequency and direction which are approximated

by expansion series, respectively. The neural network is used to map the two

sets of expansion coefficients. This assumption was dropped in a subsequent

work by Tolman et al. (2005), where the authors used two–dimensional Empirical

Orthogonal Functions for the expansion of single peaked spectra. In contrast

to this approach the feasibility of the direct mapping of the discretised wave

spectra onto the nonlinear interaction source term will be demonstrated here.

The study will not be restricted to single peaked spectra but will also include

multi–modal ones.

Two design decisions are crucial for the successful construction of a NN: the

choice of the training data and the choice of the NN architecture.

The wave spectra used for the training of the neural network (NN) are simulated spectra from a hindcast with the wave model WAM cycle 4 (WAMDI

group, 1988). Although WAM uses the DIA, the simulated spectra are suited

to investigate the approach of a direct mapping. (Once the feasibility has

been shown one should make the effort of calculating all spectra with a wave

model that uses the exact nonlinear interaction.) We expect that the training

for wave spectra representing multi–modal wave systems will be more difficult

than for single peaked spectra. Therefore the more complex cases should be

well represented. To be able to enrich the number of multi–modal spectra in

the training data set we perform a classification of the wave spectra. For the

classification we use a cluster algorithm.

To fix the NN structure it is important to have an estimate of the intrinsic

dimensionality of the spectra, i.e. the minimum number of variables needed

to represent the spectra. For this we constructed auto-associative neural nets

(AANN) which map the spectra onto themselves while compressing them in

between. The difference between the original spectrum and the reproduced one gives

a quality measure of the reduced representation. This difference can be used

to decide whether the compression was successful for a given spectrum (whether

it is in the scope of the algorithm). The finding of the intrinsic dimensionality

led to the decision about the actual NN structure for the mapping of the wave

spectra onto the nonlinear interaction source terms.

The results of the cluster analysis are given in section 2 whereas the technical

details of the method are described in appendix A. Section 3 starts with a

short introduction to neural nets and the special case of auto-associative neural nets. The concrete applications follow: in 3.1 some AANN architectures

are tested in order to get an estimate of the intrinsic dimensionality of wave

spectra. The design and training of the actual neural network for the nonlinear

interaction source term is described in section 3.2. It includes the application

of the AANN for outlier identification. We then discuss the relevant steps towards an operational usage of the procedure in an outlook given in section 4.

Finally, the results are summarised in some concluding remarks in section 5.

2 The cluster analysis of wave–spectra

The wave spectra used for the training of the neural network (NN) are simulated spectra from a hindcast with the wave model WAM cycle 4 (WAMDI

group, 1988). The data span over 22,000 points in the North Atlantic for the

one–month period of January 1995. Spectra were saved every three hours at an

equidistant resolution of 15° in direction and an equidistant relative resolution in frequency ranging from approximately 0.04 Hz to 0.4 Hz. The resultant

dimensionality of the spectra is 600 (24 directions × 25 frequencies).

From this dataset of over five million spectra a representative subset containing

all classes of spectra had to be selected. With random sampling the simple single–

and bi–modal wave system cases would outweigh any other class of spectra.

As a result the neural networks would map these cases well but would perform

badly for other classes. Thus a classification method should first of all be

applied to the whole dataset.

Important characteristics for the classification of the wave spectra are the

number and shapes of wave systems in them. The classification method should

identify these wave systems. This clearly is a pattern recognition problem

which we solved by applying a cluster algorithm.

As the spectra are represented using an azimuthal angle, a

wave system crossing 0°/360° may be split artificially. To avoid this the wave

spectra are preprocessed: the origin of the direction axis is shifted, if

necessary, to the direction with the lowest energy. This is the only preprocessing

needed here. Tolman et al. (2005) showed possibilities for normalizing the

spectra further for other approaches.
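The shift of the directional origin can be sketched in a few lines of Python. The array layout (24 directions by 25 frequencies), the function name and the toy spectrum are assumptions made for illustration; "lowest energy" is interpreted here as the direction whose energy, summed over all frequencies, is smallest.

```python
import numpy as np

def shift_direction_origin(spectrum):
    """Rotate the direction axis so that the lowest-energy direction comes first.

    spectrum: array of shape (n_dir, n_freq) holding the energy per bin.
    Returns the rotated spectrum and the index of the new origin.
    """
    energy_per_direction = spectrum.sum(axis=1)
    origin = int(np.argmin(energy_per_direction))
    # np.roll is cyclic, so no energy is lost and the shift is reversible.
    return np.roll(spectrum, -origin, axis=0), origin

# Toy spectrum: one wave system straddling the 0°/360° boundary.
spec = np.zeros((24, 25))
spec[[22, 23, 0, 1], 10] = [1.0, 2.0, 2.0, 1.0]

shifted, origin = shift_direction_origin(spec)
occupied = np.flatnonzero(shifted.sum(axis=1))
print(origin, occupied)  # the wave system now occupies adjacent direction bins
```

Rolling back by `origin` bins restores the original orientation, so the same shift can be applied to the corresponding source term.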


Input to the cluster algorithm are the 600 points of a wave spectrum. Each

of these points is defined by its coordinates (frequency and direction indices)

and by the energy at this coordinate bin of the spectrum. The energies serve

as weights of the points. The algorithm assigns the points into groups (called

clusters) so that points from the same cluster are more similar (according to

a distance measure) to each other than points from different clusters.

The cluster algorithm CLUCOV (Schiller, 1980) is used, which was adapted

for the two–dimensional problem of parametrising the wave spectrum. Each

cluster is characterised by a two–dimensional Gaussian. For each cluster its

respective center and covariance matrix are estimated and the resulting Mahalanobis distance is used to assign points to the closest cluster. This allows

for varying orientations of the different clusters. In the iteration procedure it

is checked whether the clusters are unimodal. If not, the cluster is split into two. The

number of clusters can also decrease: if two clusters strongly overlap, these

clusters are combined. In appendix A the algorithm is described in more

detail. One can summarize the method as performing a point density function

approximation with Gaussians as radial basis functions (RBF).

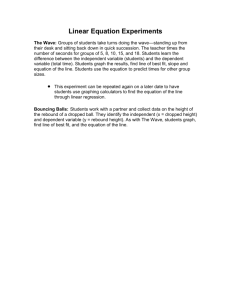

Figure A.1 shows three examples. The left panel shows the input to the algorithm (original wave spectra). The right panel shows its parametrisation by

the cluster algorithm. It can be seen that the main characteristics are well

reproduced. It should also be noted that the number of clusters is often

greater than the number of wave systems. In the first example the number of

wave systems is two, but three clusters were found, the second example shows

three wave systems and four clusters and the last spectrum has at least three

wave systems in it and six clusters were found. This is due to the structure of

the wave systems which are best approximated by two Gaussians, one around

the peak and one for the tail (see again figure A.1).

To summarize, the cluster algorithm is well suited to classify wave spectra.

The main characteristics are well reproduced. The number of clusters is often

larger than the number of wave systems in a spectrum but the two numbers

are highly correlated. A high number of clusters indicates complex structures in

the corresponding spectrum.

Table 1 lists the result of the cluster analysis, i.e. the number of clusters

found for each spectrum in the dataset. On this basis a representative subset of

791,570 spectra was chosen. The number of selected spectra increases with the

number of clusters (more complex wave spectra). Spectra with more than five clusters

were all taken and included twice in the dataset. Table 1 also

quantifies the selection. This representative dataset was randomly subdivided

into a training set (755,173 spectra) and a testing set (36,397 spectra) for the

neural networks.

| number of clusters | number of spectra | percentage selected |
|---|---|---|
| 1 | 406,332 | 9.8 |
| 2 | 1,536,057 | 5.2 |
| 3 | 1,907,143 | 6.3 |
| 4 | 1,033,269 | 15.5 |
| 5 | 354,712 | 56.4 |
| 6–12 | 95,804 | 200 |

Table 1: Spectra used for the representative dataset.
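As a plausibility check, the size of the representative subset can be recomputed from the numbers in Table 1. The short sketch below does only this arithmetic; the dictionary and function names are illustrative and not part of the original processing.

```python
# Number of spectra and percentage selected per cluster count, copied from Table 1.
table_1 = {
    "1": (406_332, 9.8),
    "2": (1_536_057, 5.2),
    "3": (1_907_143, 6.3),
    "4": (1_033_269, 15.5),
    "5": (354_712, 56.4),
    "6-12": (95_804, 200.0),  # 200 % means every spectrum enters twice
}

def selected_count(n_spectra, percentage):
    """Number of spectra drawn from one class, rounded to whole spectra."""
    return round(n_spectra * percentage / 100.0)

selected = {k: selected_count(n, pct) for k, (n, pct) in table_1.items()}
total_available = sum(n for n, _ in table_1.values())
total_selected = sum(selected.values())

print(total_available)  # size of the full dataset (over five million)
print(total_selected)   # close to the 791,570 spectra quoted in the text
```

The recomputed total differs from 791,570 by roughly a hundred spectra, which is presumably due to the percentages in Table 1 being rounded to one decimal place.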

3 Neural Networks

Wave spectra and the nonlinear interaction source term are related via a six–

dimensional Boltzmann integral. A computationally efficient method to parametrise

this complex functional relation is the usage of artificial neural networks (NN).

This is possible since a NN with at least one hidden layer (a layer between the

input and the output layer) is able to approximate any continuous function

(Universal Approximation Theorem), see Haykin (1999).

So a NN is, in this context, a computational tool for function approximation whose general features will be described briefly (see Bishop (1995)).

A NN is organized in layers: one input layer, one output layer and one or

more hidden layers in between. Each layer consists of neurons. The number of

neurons in the in– and output layer are given by the dimensions of the in–

and output vectors. (In our application the two–dimensional wave spectra and

nonlinear interaction source terms will therefore be arranged as vectors with

600 components, respectively.) The number of neurons in the hidden layer(s)

is not preset and depends upon the problem. Each neuron in a layer is linked

to each neuron in a neighboring layer with a weight.

Neural nets work sequentially: each element of the input vector serves as entry

for one of the neurons of the input layer. The output of the first hidden layer

is computed by summation of the weighted inputs, shifting it by a bias and

applying a nonlinear function (a sigmoid here). The procedure is repeated

until the output layer is reached where the outcome of each neuron gives

one element of the output vector.
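The sequential evaluation described above can be written as a short forward pass. This is a minimal sketch, not the program used in the paper: the weights are random rather than trained, the layer sizes are those quoted in section 3.2, and applying the sigmoid in the output layer as well is an assumption.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, weights, biases):
    """Propagate x layer by layer: weighted sum, shift by bias, sigmoid."""
    a = x
    for W, b in zip(weights, biases):
        a = sigmoid(W @ a + b)
    return a

rng = np.random.default_rng(0)
layer_sizes = [600, 80, 39, 80, 600]  # input, three hidden layers, output

# One weight matrix and one bias vector per pair of neighbouring layers.
weights = [rng.normal(0.0, 0.1, (n_out, n_in))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

x = rng.random(600)            # stand-in for a wave spectrum arranged as a vector
y = forward(x, weights, biases)
print(y.shape)                 # one output value per output neuron
```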

Weights and biases are the free parameters of the approximation. They are

fixed during the training phase of the NN. To do so a dataset — the training

set — is needed consisting of N pairs of input vectors (the 600 components of

the wave spectra) and corresponding desired output vectors (the corresponding

600 components of the exact nonlinear interaction source term). At the

beginning of the training the outcome of the NN will differ largely from the

desired output. Let $\vec{y}$ and $\vec{y}\,'$ be the m–dimensional output vectors as emulated

by the NN and as given in the dataset respectively. Then the difference of the

two will result in an error e defined by

$$
e = \frac{1}{N}\sum_{p=1}^{N} e_p , \qquad
e_p = \frac{1}{m}\sum_{i=1}^{m}\left(\frac{y'_{pi} - y_{pi}}{\max_{p\in[1,N]} y'_{pi} - \min_{p\in[1,N]} y'_{pi}}\right)^{2} . \tag{1}
$$

This mean squared relative error per neuron e is iteratively minimized during

the training by backpropagating it through the NN and adjusting the biases

and weights according to a gradient descent scheme. It is a measure of the

quality of the function approximation by the NN.
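The error of equation (1) is straightforward to evaluate once the NN outputs and the desired outputs are stored as arrays. The following sketch assumes arrays of shape (N, m), with one row per pair p and one column per output neuron i; the function name and the toy numbers are illustrative only.

```python
import numpy as np

def mean_squared_relative_error(y_nn, y_target):
    """Error e of equation (1) for arrays of shape (N, m)."""
    # Range of each output neuron over the whole dataset (max minus min over p).
    value_range = y_target.max(axis=0) - y_target.min(axis=0)
    # e_p: mean over the m neurons of the squared, range-normalised difference.
    e_p = np.mean(((y_target - y_nn) / value_range) ** 2, axis=1)
    # e: mean of e_p over the N pairs.
    return e_p.mean()

y_target = np.array([[0.0, 10.0],
                     [2.0, 20.0]])
y_nn = np.array([[1.0, 10.0],
                 [2.0, 15.0]])

e = mean_squared_relative_error(y_nn, y_target)
print(e, np.sqrt(e))  # e and the residual average error sqrt(e)
```

A neuron whose desired output is constant over the dataset has zero range and would cause a division by zero; such components would need separate treatment, which the sketch does not include.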

It is good practice to have an additional independent dataset, the testing

set, which is needed to check the generalization power of the NN after the

training, i.e. to test if reasonable output is produced for input not included in

the training.

The training and testing phase is time consuming, but it needs to be done

only once, whereas the usage of a NN is very fast.

The above also holds for an auto–associative NN (AANN), which is a particular kind of NN. Its distinguishing feature is that it maps the input vector onto itself. But at one

stage of the mapping, in the so called bottleneck layer, the dimensionality

is reduced. So when applying AANN’s with different degrees of dimensionality

reduction to the 600 components of the wave spectra the number of variables

needed to capture the information contained in the spectra can be determined.

Technically speaking the number of neurons in at least one hidden layer in

an AANN is less than the dimension of the in– and output vectors $\vec{x}$ and $\vec{x}'$.

The AANN part $m(\vec{x})$ which maps the input vector onto the neurons in the

bottleneck layer $\vec{p}$ is also called the mapping part and the backprojection $\vec{x}' = d(\vec{p})$

is called the demapping part, accordingly (see figure A.2).

The mapping part can be considered as performing a nonlinear generalization

of the Principal Component Analysis (Nonlinear Principal Component Analysis, NLPCA; Kramer, 1991). It retains the maximum possible amount of information from the original data, for a given degree of compression. Like PCA,

NLPCA can serve important purposes, e.g. filtering noisy data, feature

extraction, outlier identification, the compression of data, and the restoration

of missing values (Hsieh, 2001; Schiller, 2003).
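The split of an AANN into a mapping and a demapping part can be illustrated structurally as follows. The sketch uses untrained random weights and the layer sizes reported in section 3.1 (600, 80, 39, 80, 600); the helper names are hypothetical and the code only demonstrates the data flow through the bottleneck, not a trained compression.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_layers(sizes, rng):
    """Random (weight, bias) pairs for consecutive fully connected layers."""
    return [(rng.normal(0.0, 0.1, (n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def apply_layers(x, layers):
    for W, b in layers:
        x = sigmoid(W @ x + b)
    return x

rng = np.random.default_rng(1)
n_in, n_hidden, n_bottleneck = 600, 80, 39

mapping = make_layers([n_in, n_hidden, n_bottleneck], rng)    # m(x): 600 -> 39
demapping = make_layers([n_bottleneck, n_hidden, n_in], rng)  # d(p): 39 -> 600

x = rng.random(n_in)                 # stand-in for a wave spectrum
p = apply_layers(x, mapping)         # compressed representation
x_rec = apply_layers(p, demapping)   # backprojection onto the spectral space
print(p.shape, x_rec.shape)
```

After training, the difference between `x` and `x_rec` would serve as the quality measure of the reduced representation.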


3.1 Auto–associative neural network of wave–spectra

In order to achieve the goal of directly mapping the wave spectra onto the

corresponding nonlinear interaction source term it is essential to find their

intrinsic dimensionality (the number of independent variables) to be able to

fix an appropriate NN structure.

The cluster analysis in the last section was not only useful for the creation of a

representative dataset but also gives a hint about the intrinsic dimensionality

of the wave spectra, since the parametrisation of the wave

spectra by Gaussians is a first compressed representation. Since each spectrum

can be parametrised by a few clusters and each cluster is characterised by

six numbers (energy of the cluster, its center, its orientation and shape) the

intrinsic dimensionality is expected to be of the order of a few tens. This

will now be further investigated by means of auto–associative neural networks

(AANN’s).

To find an optimal AANN–parametrisation of the wave spectra AANN’s with

different numbers of neurons in the bottleneck layer had to be trained. The

optimization problem consists of balancing the loss of information with a decreasing number of bottleneck neurons against the usability of the NN. At the optimum

the compression is as loss–free as possible with additional bottleneck neurons

giving only slight improvement.

For the training the neural net program developed by Schiller (2000) was

used. The AANN’s have 600 neurons in the in– and output layer since the

wave spectrum is interpreted as a 600-dimensional vector. Each of the trained

AANN’s had three hidden layers with 80 neurons in the first and third hidden

layer and with differing number of neurons in the middle bottleneck layer.

Figure A.3 shows the mean squared relative error per neuron e (see equation

1) of the AANN’s for the testing data set as a function of the number of

bottleneck neurons.

As expected the error e decreases with increasing number of bottleneck neurons. Clearly two different regimes are visible (the straight lines were fitted

by linear regression): one for the number of bottleneck neurons below approximately 33 and one above. From this it was decided to choose the smallest

AANN belonging to the second regime with 39 bottleneck neurons in it.

Figure A.4 shows three examples of the performance of this AANN. The left

panel shows the original wave spectra. The middle panel shows the output

of the AANN — the mapping of the original wave spectra onto themselves.

The right panel shows the directionally integrated wave spectra. The examples exhibit an increasing complexity of the wave spectra. The AANN output

strongly resembles the original wave spectra throughout the spectral space.

The residual average error $\sqrt{e}$ is 1.4, 1.5 and 1.8% from top to bottom.

To summarize, the construction of different AANN’s has shown that the intrinsic dimensionality of the analysed wave spectra is about 40. An AANN with

39 bottleneck neurons gives a good parametrisation for all different classes of

wave spectra with a residual average error of $\sqrt{e}$ = 1.3%.

3.2 Nonlinear wave–wave interaction

So far we have selected a dataset which incorporates all classes of wave spectra

(see section 2) and we have found the typical number of independent variables

describing each of these wave spectra (see section 3.1).

After this preparatory work one can now start with the construction of a

neural net (NN) for the direct derivation of the nonlinear interaction source

terms from wave spectra. As a first approximation we decided to use the same

architecture already used for the AANN: In– and output layers consist of 600

neurons as the discretized wave spectra and the snl–terms are interpreted as

600–dimensional vectors. In between there are again three hidden layers with

80, 39 and 80 neurons, respectively.
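To give a feeling for the size of this net, the number of free parameters follows directly from the layer sizes. The count below assumes full connectivity between neighbouring layers and one bias per neuron outside the input layer, in line with the general description in section 3; the function name is illustrative.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected feed-forward net."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

n_params = count_parameters([600, 80, 39, 80, 600])
print(n_params)  # 103039
```

Under these assumptions the net has about 1.0 × 10^5 free parameters, most of them in the first and the last weight matrix.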

To train and test the NN the (exact) nonlinear interaction source (snl) terms

corresponding to each of the spectra of the selected dataset of 791,570 wave

spectra (see section 2) had to be calculated first. The method first suggested

by Webb (1978) and known as the WRT method with further improvements

by van Vledder (2006) was applied for this purpose.

The pairs of wave spectra and snl–terms were again subdivided into the training and testing dataset. The residual average error $\sqrt{e}$ (see equation

1) of the resulting NN was 1.1% for both the testing and the training dataset.

Figure A.5 shows results of the performance of the NN. The top row shows

the original wave spectra which served as input for the NN. The next row

shows the corresponding exact snl–terms calculated with the WRT–method

and below it the NN results (the WRT emulation by the NN) are shown. The

bottom row shows the directionally integrated snl–terms. In all cases the NN

emulation is very similar to the exact solution. The directionally integrated

plots highlight the quality of the fit. The three wave spectra vary from single

to three wave systems to demonstrate the broad applicability of the method

to different classes of wave spectra.

The choice of a representative training dataset is crucial since a NN has good

interpolation properties but produces unpredictable output when forced to

extrapolate. The approach described here offers an ’in range’ check (Schiller and

Krasnopolsky, 2001), i.e. the AANN can be used to check if a given spectrum belongs

to the class of spectra chosen for the training of the AANN. If the error e

of the AANN is large for a given wave spectrum then the AANN did not succeed in

representing the spectrum through the bottleneck layer. It is then questionable

whether the outcome of the snl–term NN will be in good agreement with the exact

snl–term.
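The logic of such an ’in range’ check is sketched below. A trained AANN is not available here, so a linear projection onto a three–dimensional subspace serves as a stand-in for it; this stand-in, the threshold of 2% and all names are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def relative_rms_error(x, x_rec, value_range):
    """Square root of the per-spectrum error e_p of equation (1)."""
    return np.sqrt(np.mean(((x - x_rec) / value_range) ** 2))

def in_range(x, reconstruct, value_range, threshold):
    """True if the reconstruction error of x stays below the threshold."""
    return bool(relative_rms_error(x, reconstruct(x), value_range) <= threshold)

rng = np.random.default_rng(0)

# Stand-in for a trained AANN: orthogonal projection onto a 3-dimensional subspace.
basis, _ = np.linalg.qr(rng.normal(size=(600, 3)))

def reconstruct(x):
    return basis @ (basis.T @ x)

value_range = np.ones(600)                   # placeholder for max - min per component
known = basis @ np.array([1.0, -2.0, 0.5])   # lies in the represented subspace
unknown = rng.normal(size=600)               # does not

print(in_range(known, reconstruct, value_range, threshold=0.02))    # True
print(in_range(unknown, reconstruct, value_range, threshold=0.02))  # False
```

In an application the threshold would have to be chosen from the error distribution of the AANN on the testing set.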

Figure A.6 exemplifies the resulting outlier identification: A wave spectrum

which gave a large error (here $\sqrt{e}$ = 3.6%) when parametrising it with

the AANN was chosen randomly (upper panel). The emulation of the corresponding snl–term with the NN is poor (lower panel). The resulting relative

error when comparing the exact with the NN solution is $\sqrt{e}$ = 10.2%.

The NN method described here differs in two main points from the NN

based method first presented in Krasnopolsky et al. (2001), with the latest

results in Tolman et al. (2005): There the wave spectra as well as the snl–terms are first decomposed and represented by a linear superposition of two

sets of orthogonal functions. Two different approaches are used for the basis

functions. The former approach is based on a mathematical basis where the

frequency and direction dependence is separated (an assumption not

satisfied by spectra having more than one wave system at one frequency). The

latter is based on principal components but is restricted to single peaked

spectra. In either case the neural net is used to map the expansion coefficients

onto each other. The training and testing sets used were generated based on

theoretical spectral descriptions.

So firstly, the approach presented here is not restricted to special cases of wave

spectra with e.g. only one wave system in it since a set of modeled spectra of

a high variety of complexity has been used for its development. And secondly,

no assumptions (such as separability or convergence of an expansion series) about

the wave spectra and nonlinear interaction terms are made.

Finally an important advantage of any NN parametrisation over other implementations of complex physical processes is its computational efficiency. It is

very time consuming to train a NN and to find an appropriate net architecture

but its usage is fast. A runtime comparison of the exact WRT–method with

the NN method presented here gives a speedup factor of roughly 500.

4 Outlook

We have presented results of a neural net (NN) that directly maps wave spectra

onto the corresponding exact nonlinear interaction source term. We now want

to describe how we envisage improving the method substantially in order to

incorporate it operationally.

To start with, a new training/testing dataset of wave spectra should be calculated with a model that uses the exact nonlinear interaction. The datasets

should again contain all classes of complexity of wave spectra.

With these datasets the training of the neural nets should be repeated and

different net architectures (e.g. different mapping/demapping part) should

be tried. In particular we consider it promising to train an AANN for both

the wave spectra and the nonlinear interaction source terms and to map the

two bottleneck layers onto each other. This approach has some advantages:

Firstly, more complex net architectures can be tried out for the NN which maps

the two bottleneck layers onto each other since the number of in– and output

variables is one order of magnitude smaller than in the present NN. Secondly,

with two AANN’s the ’in range’ check can be performed twice: one time for

the wave spectra before applying the actual NN and one time afterwards for

the emulated nonlinear interaction. Still, this is a necessary condition but not

a sufficient one. Thirdly, when changing the resolution of the wave model only

the two AANN’s have to be retrained, the NN for the actual mapping stays

the same. This can be easily done, because the number of neurons in the

bottleneck layers of the AANN’s is already known since the complexity of the

spectra and the source terms does not change with resolution.

Finally, it has to be shown that the NN emulation gives robust and accurate results when implemented in a numerical wave model. As suggested by

Krasnopolsky et al. (2005) and Krasnopolsky (2007), parallel runs of the wave

model with the original parametrization of the exact nonlinear interaction and

with its NN emulation should be performed to do so. When operationally running the wave model a quality control block as suggested by Krasnopolsky et

al. (2008) could determine whether the NN emulation will be used or not.

5 Conclusions

The feasibility of a neural network (NN) based method for direct mapping

of discrete wave spectra onto the corresponding (exact) nonlinear wave–wave

interaction source (snl) terms has been demonstrated.

In a preparatory step the intrinsic dimensionality of the wave spectra was

estimated by means of an AANN to be about 40, i.e. 40 variables suffice to

capture the variability of the wave spectra with a residual average error of

1.3%. The composition of the training data set for the NN was based on

automated classification of the wave spectra by means of a cluster analysis.


The NN for the mapping of the wave spectra onto the corresponding snl–terms

shows good performance. It is able to emulate the WRT method calculations

for single and multi–mode wave spectra with a much higher accuracy than

the approximations implemented in present–day operational wave models. The

quality of the emulation might be controlled using the corresponding AANN.

6 Acknowledgements

The authors would like to thank Gerbrant Ph. van Vledder for providing his improved

version of the WRT–method for calculating the nonlinear interaction source

terms and for offering his help on running it. Further, we thank the reviewers

for their valuable comments on an earlier version of this paper.

A The cluster algorithm CLUCOV: two–dimensional case

The CLUCOV algorithm is used to assign the 600 points of a wave spectrum

to clusters. The k–th cluster $G^k$ is characterized by the moments of order

zero, one and two of the distribution of the $N^k$ points contained in this cluster.

The points are defined by their frequency and direction coordinates $X^m = (X^m_f, X^m_\theta)$ and their energies which serve as weights $w^m$. Then the three moments are

– the total weight $I^k$ of points $X^m$ contained in the cluster $G^k$

$$
I^k = \sum_{m=1}^{N^k} w^m
$$

– the centroid $Q^k$ of the cluster $G^k$

$$
Q^k_i = \frac{1}{I^k}\sum_{m=1}^{N^k} w^m X^m_i
\qquad \text{with } X^m \in G^k,\; i \in \{f, \theta\}
$$

– the covariance matrix $C^k$ of the cluster $G^k$

$$
C^k_{ij} = \frac{1}{I^k}\sum_{m=1}^{N^k} w^m (X^m_i - Q^k_i)(X^m_j - Q^k_j)
\qquad \text{with } X^m \in G^k,\; i, j \in \{f, \theta\}
$$

The eigenvectors of the covariance matrix point into the direction of the main

axes of the ellipsoids by which the shape of the clusters is approximated. The

square roots of the eigenvalues of the covariance matrix denote the lengths of

the main axes, and the square root of the determinant of the covariance matrix

thus measures the volume of the clusters.
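The three moments and the derived shape quantities can be computed with a few NumPy operations. The sketch assumes that the points of one cluster are given as an array of (frequency, direction) coordinates together with their energies; the function name and the toy data are illustrative.

```python
import numpy as np

def cluster_moments(X, w):
    """Total weight I, centroid Q and covariance matrix C of one cluster.

    X: array of shape (N, 2) with the (frequency, direction) coordinates.
    w: array of shape (N,) with the energies serving as weights.
    """
    I = w.sum()
    Q = (w[:, None] * X).sum(axis=0) / I
    D = X - Q
    C = (w[:, None] * D).T @ D / I
    return I, Q, C

# Four equally weighted points on the corners of a square.
X = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]])
w = np.ones(4)

I, Q, C = cluster_moments(X, w)
eigenvalues, eigenvectors = np.linalg.eigh(C)  # directions of the main axes
axis_lengths = np.sqrt(eigenvalues)            # lengths of the main axes
volume = np.sqrt(np.linalg.det(C))             # measure of the cluster volume
print(I, Q, axis_lengths, volume)
```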

All these three moments enter the definition of the distance $f^k_m$ of a point m

at $X^m$ from the k–th cluster $G^k$. Thus, each cluster builds its ‘own’ metric:

The (Mahalanobis) distance $\rho^k_m$ of a given point $X^m$ from the centroid $Q^k$ of

the k–th cluster enters the exponent of a Gaussian $g^k(X^m)$

$$
g^k(X^m) = \frac{1}{2\pi\sqrt{|C^k|}} \exp\left[-\frac{1}{2}(\rho^k_m)^2\right]
\qquad \text{with} \qquad
\rho^k_m = \sqrt{(X^m - Q^k)^T (C^k)^{-1} (X^m - Q^k)} .
$$

The total weight $I^k$ of points in cluster k is included into the distance measure

$f^k_m$ as a linear weight factor, so that big clusters ‘attract’ further points

$$
f^k_m = I^k \cdot g^k(X^m) .
$$

In the direction of the main axes distances are measured in units of the square

root of the corresponding eigenvalue of the covariance matrix (which is suggested by the quadratic form in the exponent of the Gaussian). This distance

measure is also invariant under linear transformations such as translation and

rotation.

The determinant in the denominator of $f^k_m$ favours compact clusters over

voluminous clusters of the same content.
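The assignment of points to clusters with this measure can be sketched as follows. Note that $f^k_m$ is large for points close to a cluster, so a point is assigned to the cluster with the largest value. The two example clusters and all names are assumptions chosen to show how the cluster–specific metric differs from the Euclidean distance.

```python
import numpy as np

def membership(X, I, Q, C):
    """f = I * g(X) for all points X of shape (N, 2) and one cluster (I, Q, C)."""
    D = X - Q
    rho_squared = np.einsum("ni,ij,nj->n", D, np.linalg.inv(C), D)
    g = np.exp(-0.5 * rho_squared) / (2.0 * np.pi * np.sqrt(np.linalg.det(C)))
    return I * g

# Two clusters of equal weight: a round one and one elongated along the first axis.
clusters = [
    (10.0, np.array([0.0, 0.0]), np.array([[1.0, 0.0], [0.0, 1.0]])),
    (10.0, np.array([6.0, 0.0]), np.array([[4.0, 0.0], [0.0, 0.25]])),
]
points = np.array([[1.0, 0.0], [2.5, 0.0], [3.0, 2.0]])

f = np.stack([membership(points, *c) for c in clusters])  # shape (clusters, points)
assignment = f.argmax(axis=0)
print(assignment)
```

The second point is closer to the first centroid in the Euclidean sense but is assigned to the second, elongated cluster, because distances along its long axis are measured in larger units.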

In the algorithm CLUCOV it is also possible to split and merge clusters, that

is to change the number of clusters.

To achieve this, a measure t for the overlap of two clusters $G^k$ and $G^l$ is

defined:

$$
t = \frac{h_0}{\sqrt{h_k h_l}}
$$

The quantities $h_k$ and $h_l$ are superpositions of the Gaussians $f^k(X)$ and $f^l(X)$

of the clusters $G^k$ and $G^l$ at their centroids $Q^k$ and $Q^l$:

$$
h_k = f^k(Q^k) + f^l(Q^k)
\qquad \text{and} \qquad
h_l = f^k(Q^l) + f^l(Q^l)
$$

The quantity $h_0$ is the minimum of this superposition along the distance vector

$(Q^k - Q^l)$ between the clusters:

$$
h_0 = \min[f^k(X) + f^l(X)]
$$
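A numerical evaluation of the overlap measure might look as follows. The minimum is approximated here by sampling the straight segment between the two centroids, which is one possible reading of "along the distance vector"; the number of sampling steps and all names are assumptions.

```python
import numpy as np

def weighted_gaussian(X, I, Q, C):
    """f(X) = I * g(X) for points X of shape (N, 2) or a single point (2,)."""
    D = np.atleast_2d(X) - Q
    rho_squared = np.einsum("ni,ij,nj->n", D, np.linalg.inv(C), D)
    return I * np.exp(-0.5 * rho_squared) / (2.0 * np.pi * np.sqrt(np.linalg.det(C)))

def overlap(cluster_k, cluster_l, n_steps=201):
    """Overlap t = h0 / sqrt(hk * hl) of two clusters given as (I, Q, C)."""
    Qk, Ql = cluster_k[1], cluster_l[1]

    def superposition(X):
        return weighted_gaussian(X, *cluster_k) + weighted_gaussian(X, *cluster_l)

    h_k = superposition(Qk)[0]
    h_l = superposition(Ql)[0]
    # Sample the segment from Qk to Ql and take the smallest superposition value.
    s = np.linspace(0.0, 1.0, n_steps)[:, None]
    h_0 = superposition(Qk + s * (Ql - Qk)).min()
    return h_0 / np.sqrt(h_k * h_l)

unit = np.eye(2)
a = (1.0, np.array([0.0, 0.0]), unit)
near = (1.0, np.array([1.0, 0.0]), unit)
far = (1.0, np.array([10.0, 0.0]), unit)

print(overlap(a, near))  # close to 1: no dip between the centroids
print(overlap(a, far))   # close to 0: deep minimum between the centroids
```

For two equal clusters the measure approaches one when the superposition has no dip between the centroids and tends to zero for well separated clusters, which is the behaviour needed for a merging criterion.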


If for a pair of groups this measure t exceeds a limit $t_{merge}$ (parameter of the

merging procedure) this pair of groups is united into one group.

The compactness of the clusters is tested by arbitrarily subdividing the clusters

with straight lines through the cluster. If the overlap of the two parts of the

cluster is smaller than $t_{split}$ (parameter of the splitting procedure) this cluster

is split by the corresponding line.

References

G.J. Komen, L. Cavaleri, M. Donelan, K. Hasselmann, S. Hasselmann,

P.A.E.M. Janssen (1994) Dynamics and Modelling of Ocean Waves. Cambridge University Press

S. Hasselmann, K. Hasselmann, J.A. Allender, T.P. Barnett (1985) Computations and parametrizations of the non–linear energy transfer in a gravity

wave spectrum. Part II: Parametrization of the non–linear transfer for application in wave models. Journal of Physical Oceanography 15, p.1378–1391

The Wise Group, L. Cavaleri, J.-H.G.M. Alves, F. Ardhuin, A. Babanin, M.

Banner, K. Belibassakis, M. Benoit, M. Donelan, J. Groeneweg, T.H.C.

Herbers, P. Hwang, P.A.E.M. Janssen, T. Janssen, I.V. Lavrenov, R. Magne,

J. Monbaliu, M. Onorato, V. Polnikov, D. Resio, W.E. Rogers, A. Sheremet,

J. McKee Smith, H.L. Tolman, G. van Vledder, J. Wolf, I. Young (2007)

Wave modelling – The state of the art. Progress in Oceanography 75(4), p.

603–674

V.M. Krasnopolsky, D.V. Chalikov, H.L. Tolman (2001) Using neural network for parametrization of nonlinear interactions in wind wave models.

In: International Joint Conference on Neural Networks, 15–19 July, 2001,

Washington DC, p.1421–1425

V.M. Krasnopolsky, D.V. Chalikov, H.L. Tolman (2002) A Neural Network

Technique to Improve Computational Efficiency of Numerical Oceanic Models. Ocean Modelling 4, p.363–383

H.L. Tolman, V.M. Krasnopolsky, D.V. Chalikov (2005) Neural network approximation for nonlinear interactions in wave spectra: direct mapping for

wind seas in deep water. Ocean Modelling 8, p.253–278

WAMDI group (1988) The WAM model — a third generation ocean wave

prediction model. Journal of Physical Oceanography 18, p.1775–1809

H. Schiller (1980) Cluster Analysis of Multiparticle Final States for Medium–

Energy Reactions. Fizika elementarnykh chastits i atomnogo yadra 11, p.182–

235, translated in Soviet Journal of Particles and Nuclei 11(1), p.71–90

S. Haykin (1999) Neural Networks — A comprehensive Foundation. Prentice

Hall International, Inc.

C.M. Bishop (1995) Neural Networks for Pattern Recognition. Clarendon

Press, Oxford


M.A. Kramer (1991) Nonlinear principal component analysis using autoassociative neural networks. American Institute of Chemical Engineers Journal 37(2), p.233–243

W.W. Hsieh (2001) Nonlinear principal component analysis by neural networks. Tellus A 53(5), p.599–615

H. Schiller (2003) Neural Net Architectures for Scope Check and Monitoring. CIMSA 2003, Lugano, Switzerland, 29–31 July 2003, ISBN 0-7803-7784-2

H. Schiller (2000) Feedforward–backpropagation neural net program ffbp1.0. GKSS–report 2000/37, ISSN 0344–9629

D.J. Webb (1978) Non–linear transfers between sea waves. Deep Sea Research 25(3), p.279–298

G.Ph. van Vledder (2006) The WRT method for the computation of non–linear four–wave interactions in discrete spectral wave models. Coastal Engineering 53, p.223–242

H. Schiller, V.M. Krasnopolsky (2001) Domain Check for Input to NN Emulating an Inverse Model. International Joint Conference on Neural Networks, 15–19 July 2001, Washington DC, p.2150–2152, ISBN 0-7803-7044-9

V.M. Krasnopolsky, M.S. Fox-Rabinovitz, D.V. Chalikov (2005) New Approach to Calculation of Atmospheric Model Physics: Accurate and Fast Neural Network Emulation of Long Wave Radiation in a Climate Model. Monthly Weather Review 133(5), p.1370–1383

V.M. Krasnopolsky (2007) Neural Network Emulations for Complex Multidimensional Geophysical Mappings: Applications of Neural Network Techniques to Atmospheric and Oceanic Satellite Retrievals and Numerical Modeling. Reviews of Geophysics 45, RG3009

V.M. Krasnopolsky, M.S. Fox-Rabinovitz, H.L. Tolman, A.A. Belochitski (2008) Neural network approach for robust and fast calculation of physical processes in numerical environmental models: Compound parameterization with a quality control of larger errors. Neural Networks 21, p.535–543

[Figure A.1: three examples, each shown as a pair of contour plots titled "original wave spectrum" and "RBF parametrisation"; axes are frequency [Hz] (0.06 to 0.41) and direction [°] (0 to 360).]

Fig. A.1. Examples of cluster analysis: left panel shows original wave spectra, right panel shows corresponding RBF parametrisation.

Fig. A.2. Autoassociative NN with bottleneck: the input is mapped by $\vec{p} = m(\vec{x})$ onto a lower dimensional space ($\dim(\vec{p}) < \dim(\vec{x})$) and is approximately reconstructed by the demapping NN part, $\vec{x}' = d(\vec{p}) \approx \vec{x}$.
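The mapping and demapping structure of such a bottleneck net can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the layer sizes, the tanh activations, the random (untrained) weights, the dummy input data and the particular definition of the squared relative error are all hypothetical choices and are not taken from the paper or from the ffbp program.

```python
import numpy as np

def init_layer(rng, n_in, n_out):
    """Return (weights, bias) of one fully connected layer with random weights."""
    w = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
    return w, np.zeros(n_out)

def forward(layers, x):
    """Apply tanh hidden layers followed by a linear output layer."""
    for w, b in layers[:-1]:
        x = np.tanh(x @ w + b)
    w, b = layers[-1]
    return x @ w + b

rng = np.random.default_rng(0)
n_x, n_hidden, n_p = 600, 80, 20    # hypothetical sizes (assumption)

# Mapping part m: input space -> bottleneck; demapping part d: bottleneck -> input space
mapping = [init_layer(rng, n_x, n_hidden), init_layer(rng, n_hidden, n_p)]
demapping = [init_layer(rng, n_p, n_hidden), init_layer(rng, n_hidden, n_x)]

x = rng.random((5, n_x))            # five dummy input vectors, not real spectra
p = forward(mapping, x)             # p = m(x), low dimensional representation
x_rec = forward(demapping, p)       # x' = d(p), reconstruction of the input

# One plausible squared relative reconstruction error per sample (assumption)
err = np.sum((x_rec - x) ** 2, axis=1) / np.sum(x ** 2, axis=1)
print(p.shape, x_rec.shape, err.shape)
```

With untrained weights the reconstruction error is of course large; training would adjust both parts jointly so that x' approximates x, and repeating this for different values of `n_p` would give an error curve over the number of bottleneck neurons.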

[Figure A.3: plot of squared relative error (scale ×10⁻⁴, 0 to 4) against the number of neurons in the bottleneck (10 to 60).]

Fig. A.3. Squared relative error of the AANNs as a function of the number of bottleneck neurons.

[Figure A.4: three examples, each shown as contour plots titled "original wave spectrum" and "AANN parametrisation" (axes: frequency [Hz], direction [°]) and a "directionally integrated" line plot of spectral energy density against frequency [Hz] with curves "original" and "AANN".]

Fig. A.4. Examples of the performance of the AANN: the left panel shows original wave spectra, the middle panel shows the corresponding AANN parametrisation, and the right panel shows the directionally integrated spectra.

[Figure A.5: three examples, each shown as contour plots titled "original wave spectrum", "exact nonlinear interactions" and "nonlinear interactions from NN" (axes: frequency [Hz], direction [°]) and a "directionally integrated" line plot of nonlinear transfer rate against frequency [Hz] with curves "exact" and "NN".]

Fig. A.5. Examples of the performance of the NN for emulating the WRT method: the upper row shows original wave spectra, the next row the corresponding exact snl–term, and the third row its emulation by the NN. In the plots of the snl–term the black areas correspond to the most negative values and the white areas to the largest positive values. The gray areas in between cover ranges of four orders of magnitude for the snl–terms of either sign. The lower row shows the directionally integrated snl–terms.

[Figure A.6: upper panel with contour plots titled "original wave spectrum" and "AANN parametrisation" and a "directionally integrated" plot of spectral energy density (curves "original" and "AANN"); lower panel with contour plots titled "exact nonlinear interactions" and "nonlinear interactions from NN" and a "directionally integrated" plot of nonlinear transfer rate (curves "exact" and "NN"); axes are frequency [Hz] and direction [°].]

Fig. A.6. The upper panel shows the original wave spectra and the output from the AANN for a case where the error of the AANN is large. The lower panel shows the corresponding exact and NN emulated snl–term (contours as in Fig. A.5).
