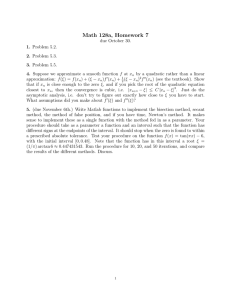

Bayesian statistics 2: More on priors plus model choice


Bayes formula

$$f(\theta \mid D) = \frac{f(D \mid \theta)\, f(\theta)}{f(D)} = \frac{f(D \mid \theta)\, f(\theta)}{\int f(D \mid \theta')\, f(\theta')\, d\theta'}$$

• Prior: $f(\theta)$. Needs to be specified!
• Posterior: $f(\theta \mid D)$. We can get this if we can calculate $f(D)$.
• Marginal data density, $f(D)$: The normalization. It is usually hard to compute, which is the reason we do MCMC sampling rather than calculate everything analytically.

Priors

• Priors are there to sum up what you know before the data.
• Sometimes you may have sharp knowledge (mass > 0), sometimes "soft". (Ex: It seems reasonable to me that the average elephant mass is between 4 and 10 tons, but I'm not 100% certain.)
• Ideally, we would like to have an informed opinion about the prior probability for any given parameter interval. (This is unrealistic.)
• In practice, you choose a form for the probability density and then choose the parameters of that density (hyper-parameters) so that it gives you reasonable expectations or quantiles.
• For instance, choose hyper-parameters that give you a reasonable 95% credibility interval (an interval in which the parameter is found with 95% probability).
• The properties of the prior can be elicited from yourself, from (other) experts in the field from which the data comes, or from general knowledge of the data.

Typical prior distributions

• Normal distribution: For a parameter that can take any real value, but where you have a notion about which interval it ought to be in. (Alt: t-distribution.)
• Lognormal distribution: For a strictly positive parameter, where you have a notion about which interval it ought to be in. (Alt: gamma and inverse-gamma.)
• Uniform distribution from a to b: When you really don't have a clue what the parameter value can be, except that it's definitely not outside a given interval.
• Beta-distribution: For a parameter strictly between 0 and 1 (a rate), where you have a notion of where the value is most likely to be found. (Generalization of U(0,1).)
• Dirichlet: Same as beta, except that instead of getting two rates (p and 1-p) you get a set of rates that sums to one. (For the multinomial case.)

The beta distribution

Since rates pop up so often, it's good to have a clear understanding of the beta-distribution (the standard prior form for rates).

1. It has non-zero density only inside the interval (0,1), but can be rescaled to cover any fixed interval.
2. It has two parameters, a and b, which allow you to choose any 95% credibility interval inside (0,1).
3. a=b=1 describes the uniform distribution, U(0,1).
4. a=1, b large describes a very low rate (almost 0).
5. b=1, a large describes a very high rate (almost 1).
6. The higher a+b is, the smaller the variance (the more precise the prior).
7. The mean value is a/(a+b). (The mode is (a-1)/(a+b-2), for a, b > 1.)

Prior-data conflict

Sometimes our choice of prior can clash with reality. Ex: I may have been given the impression that the detection rate for elephants in an area is somewhere between 30% and 40%, when it's really closer to 80%.

A conflict can be detected by:

1. Observing that the posterior mean is somewhere near the limit of our prior 95% credibility interval, or even outside it.
2. Seeing that a wider prior, or one with a different form, gives different results (robustness).
3. Bayesian conflict measures (hard).

General advice when making a prior is to think to yourself: "what if I'm wrong?" Avoid priors with hard limits when there's even the slightest possibility that reality is found outside those limits.

Non-informative priors

If there does not seem to be a well-founded way of specifying a prior for a given parameter, you can either:

i. Use a so-called non-informative prior.
ii. Find a model where all the parameters actually mean something!

Non-informative priors:

• Flat between 0 and 1 for probabilities/rates (proper).
• Flat between $-\infty$ and $+\infty$ for real-valued parameters (improper).
• Let the logarithm have a flat distribution if it's a scale parameter (improper).
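The beta-distribution properties and the prior-data conflict checks described above can be tried out numerically. The following is a minimal Python sketch using only the standard library. The function names (`beta_summary`, `beta_interval`, `update`), the Beta(70, 130) prior standing in for a "30% to 40%" belief, and the data (16 detections in 20 visits) are all hypothetical choices made for illustration. It relies on the standard conjugate result that a Beta(a, b) prior combined with binomial data gives a Beta(a + successes, b + failures) posterior.

```python
import random


def beta_summary(a, b):
    """Mean, mode and variance of a Beta(a, b) distribution."""
    mean = a / (a + b)
    # The mode formula (a-1)/(a+b-2) only holds when both parameters exceed 1.
    mode = (a - 1) / (a + b - 2) if a > 1 and b > 1 else None
    variance = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, mode, variance


def beta_interval(a, b, level=0.95, n_draws=20_000, seed=1):
    """Monte Carlo estimate of the central credibility interval of Beta(a, b)."""
    rng = random.Random(seed)
    draws = sorted(rng.betavariate(a, b) for _ in range(n_draws))
    tail = (1.0 - level) / 2.0
    lower = draws[int(tail * n_draws)]
    upper = draws[int((1.0 - tail) * n_draws) - 1]
    return lower, upper


def update(a, b, successes, failures):
    """Conjugate update: Beta prior plus binomial data gives a Beta posterior."""
    return a + successes, b + failures


# Hypothetical informative prior: detection rate believed to be around 30-40%.
prior = (70, 130)
# Hypothetical data: 16 detections in 20 visits (an observed rate of 80%).
detections, misses = 16, 4

informed_post = update(*prior, detections, misses)
flat_post = update(1, 1, detections, misses)  # flat U(0,1) prior, a = b = 1

print("prior 95% interval:      ", beta_interval(*prior))
print("posterior mean, informed:", beta_summary(*informed_post)[0])
print("posterior mean, flat:    ", beta_summary(*flat_post)[0])
```

With these made-up numbers the two priors lead to clearly different posterior means, which is the robustness symptom of a prior-data conflict mentioned in the list above. The interval is estimated by sampling rather than by an exact quantile function only to keep the sketch free of third-party dependencies.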
Pros:
• Often, no one will criticise your prior.
• You don't have to spend time thinking about what is and is not a reasonable parameter value.

Cons:
• Non-informative priors are usually not proper distributions.
• Problematic interpretation.
• A non-proper prior may lead to a non-proper posterior.
• Standard Bayesian model choice is impossible.

Hypothesis testing / model comparison: Bayesian model probabilities

Bayesian inference can be performed on models as well as model parameters, and it's again Bayes' theorem that is being used!

$$\Pr(M \mid D) = \frac{f(D \mid M)\, \Pr(M)}{f(D)} = \frac{f(D \mid M)\, \Pr(M)}{\sum_{M'} f(D \mid M')\, \Pr(M')}$$

In a sense, we just introduce a new discrete variable, M, describing the model we will use.

[Diagram with nodes M, L and D]

The data can thus be used for inference on M. We can also define the Bayes factor, the change in model probabilities due to the data:

$$B_{12} = \frac{\Pr(M_1 \mid D)}{\Pr(M_2 \mid D)} \bigg/ \frac{\Pr(M_1)}{\Pr(M_2)} = \frac{f(D \mid M_1)}{f(D \mid M_2)}$$

But

$$f(D \mid M) = \int f(D \mid \theta, M)\, f(\theta \mid M)\, d\theta$$

may not be analytically available!

Bayesian model probabilities: dealing with the marginal data density

Since $f(D \mid M)$ may not be analytically available, possible solutions are:

• Numeric integration.
• Sample-based methods (harmonic mean, importance sampling on an adapted proposal distribution).
• Reversible jumps: MCMC jumping between models. The probability of a model is estimated from the time the chain spends inside it.
  Pros: Don't waste time on improbable models.
  Cons: Difficult to make, difficult to avoid mistakes, difficult to detect mistakes, difficult to make efficient.
• Latent variable for model choice. Sharp but finitely wide priors for parameters that should be fixed according to a null hypothesis. ("Reversible jumps light".) M is equivalent to a choice of hyper-parameters, which define the priors on $\theta$. (We're making discrete inference on the hyper-parameters.)
  Pros: Much easier to make than reversible jumps. It can be more realistic to test a hypothesis $\theta \approx 10$ than to test $\theta = 10$.
  Cons: Over-pragmatic? Restricted (how to deal with non-nested models?). Beware of technical issues (is the support the same?). Can be fairly inefficient when the prior becomes very sharp.

Model comparison: pragmatic alternatives

• Parallel to the classic approach: If $\theta = 0$ is to be tested, check whether 0 is inside the posterior 95% credibility interval.
  Pros: Easy to check.
  Cons: Throwback to classical model testing. Doesn't make that much sense in a Bayesian setting. Restricted use.
• DIC: Parallel to the classic AIC.

$$\mathrm{DIC} = -2\, \overline{\log f(D \mid \theta)} + p_D$$

  Here the bar denotes the average over the posterior. We want the model with the smallest value. DIC balances model fit (average log-likelihood) and model complexity, $p_D$.
  Pros: Easy to calculate once you have MCMC samples. Implemented in WinBUGS, and has become a standard alternative to model probabilities because of this. Takes values even when the prior is non-informative.
  Cons: Difficult to understand its meaning. What does it represent? What's a big or small difference in DIC? Counts latent variables in $p_D$ if they are in the sampling but not if they are handled analytically. Same model, different results! This has consequences for the occupancy model!
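To make the DIC recipe concrete, here is a minimal Python sketch that computes it from posterior samples for a one-parameter binomial detection model. It assumes the usual definition of the complexity term, $p_D = \bar{D} - D(\bar{\theta})$ with deviance $D(\theta) = -2 \log f(D \mid \theta)$, which the slides do not spell out. The data (16 detections in 20 visits), the flat Beta(1, 1) prior and the function names `log_lik` and `dic` are hypothetical, and the posterior is sampled directly from its known beta form instead of by MCMC to keep the example short.

```python
import math
import random


def log_lik(p, k, n):
    """Binomial log-likelihood log f(D | p) for k detections in n visits."""
    return (math.log(math.comb(n, k))
            + k * math.log(p)
            + (n - k) * math.log(1.0 - p))


def dic(samples, k, n):
    """Return (DIC, pD) computed from posterior samples of the rate p."""
    # Posterior mean deviance: -2 times the average log-likelihood.
    mean_deviance = sum(-2.0 * log_lik(p, k, n) for p in samples) / len(samples)
    # Deviance evaluated at the posterior mean of the parameter.
    p_bar = sum(samples) / len(samples)
    deviance_at_mean = -2.0 * log_lik(p_bar, k, n)
    # Assumed complexity term: pD = mean deviance - deviance at the mean.
    p_d = mean_deviance - deviance_at_mean
    return mean_deviance + p_d, p_d


rng = random.Random(1)
k, n = 16, 20  # hypothetical data

# Model with a free rate and a flat Beta(1, 1) prior:
# the posterior is Beta(1 + k, 1 + n - k), so we can sample it directly.
samples = [rng.betavariate(1 + k, 1 + n - k) for _ in range(20_000)]
dic_free, p_d = dic(samples, k, n)

# Model with the rate fixed at 0.5: no free parameters, so pD = 0
# and DIC reduces to the deviance at p = 0.5.
dic_fixed = -2.0 * log_lik(0.5, k, n)

print("free rate:  DIC =", dic_free, " pD =", p_d)
print("fixed rate: DIC =", dic_fixed)
```

In this toy comparison the free-rate model has one parameter, so $p_D$ comes out close to 1, and the model with the smaller DIC would be preferred. How large a difference should count as meaningful is exactly the interpretive difficulty listed among the cons above.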