Network Level Framing in INSTANCE

Pål Halvorsen, Thomas Plagemann, and Vera Goebel

University of Oslo, UniK - Center for Technology at Kjeller

Norway

Overview:

• Application Scenario

• The INSTANCE project:

– Zero-Copy-One-Copy Memory Architecture

– Integrated Error Management

– Network Level Framing

• Conclusions

MIS 2000, Chicago, October 2000

©2000 Pål Halvorsen

Application Scenario

Media-on-Demand (MoD) server: applicable to services such as News-on-Demand or Video-on-Demand provided by city-wide cable or pay-per-view companies

[Figure: Multimedia Storage Server, Network]

Retrieval is the bottleneck. Some important factors:

• Memory management

• Error management

• Communication protocol processing

Project goals: optimize performance within a single server:

• Reduce resource requirements

• Maximize number of clients

Zero-Copy-One-Copy I

Zero-Copy

Delay-Minimized Broadcast

Zero-Copy-One-Copy II

Zero-Copy

Delay-Minimized Broadcast

Integrated Zero-Copy and Delay-Minimized Broadcasting

Traditional Error Management

Encoder

Decoder

Integrated Error Management

Encoder

Decoder

Traditional Storage

[Figure: protocol stacks with TRANSPORT, NETWORK, and LINK layers for the upload and download paths]

Upload to server

Frequency: low (1)

Download from server

Frequency: very high

Network Level Framing (NLF)

[Figure: protocol stacks with TRANSPORT, NETWORK, and LINK layers for the upload and download paths]

Upload to server

Frequency: low (1)

Download from server

Frequency: very high

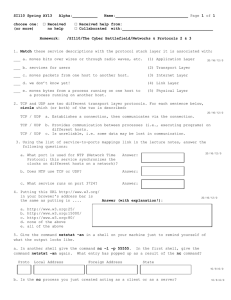

NLF: When to Store Packets

[Figure: protocol stack with the Transport Layer (UDP, TCP), Network Layer (IP), and Link Layer]

NLF: Splitting the UDP Protocol

• udp_output():

– Temporarily connect

– Prepend UDP and IP headers

– Prepare pseudo header for checksum

– Calculate checksum

– Fill in some other IP header fields

– Hand over datagram to IP

– Disconnect connected socket

• udp_PreOut():

– Prepend UDP and IP headers

– Prepare pseudo header for checksum, clear unknown fields

– Precalculate checksum

• udp_QuickOut():

– Update UDP and IP headers

– Update checksum, i.e., only add checksum of prior unknown fields

– Fill in other IP header fields

– Hand over datagram to IP

NLF: Checksum Operations I

• The UDP checksum covers three fields:

– A 12 byte pseudo header containing fields from the IP header

– The 8 byte UDP header

– The UDP data

• Checksum calculation function (in_cksum):

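    u_int16_t *w;             /* walks the data 16 bits at a time */
    u_int32_t checksum = 0;

    for each mbuf in packet {
        w = (u_int16_t *) mbuf->m_data;
        while data in mbuf {
            checksum += *w;   /* add the current 16-bit word */
            w++;
        }
    }
    /* finally: fold the carries into 16 bits and take the one's complement */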
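The loop above can be made concrete in user space. The following is a minimal sketch, not the kernel's in_cksum: it assumes a single contiguous byte buffer in place of an mbuf chain, and the function name inet_checksum is hypothetical. It sums the data as 16-bit words, folds the carries, and returns the one's complement.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Internet checksum (RFC 1071 style) over one contiguous buffer.
 * Assumption: a flat byte array stands in for the kernel's mbuf chain. */
uint16_t inet_checksum(const uint8_t *data, size_t len)
{
    uint32_t sum = 0;

    assert(data != NULL || len == 0);
    assert(len <= 65535);  /* keeps the 32-bit accumulator from overflowing */

    /* Add the data as 16-bit big-endian words. */
    while (len > 1) {
        sum += ((uint32_t)data[0] << 8) | data[1];
        data += 2;
        len -= 2;
    }

    /* An odd trailing byte is padded with a zero byte. */
    if (len == 1)
        sum += (uint32_t)data[0] << 8;

    /* Fold the carries back into the low 16 bits. */
    while (sum >> 16)
        sum = (sum & 0xFFFFu) + (sum >> 16);

    /* The checksum is the one's complement of the folded sum. */
    return (uint16_t)~sum;
}
```

For the four words 0x0001, 0xf203, 0xf4f5, 0xf6f7 (the worked example in RFC 1071), the folded sum is 0xddf2 and the function returns 0x220d.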

NLF: Checksum Operations II

• Traditional checksum operation:

NLF: Checksum Operations III

• NLF checksum operation:
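A minimal sketch of the idea behind the udp_PreOut()/udp_QuickOut() split, under stated assumptions: the partial sum over the payload is computed once when the data is stored, and at transmission time only the fields that were unknown earlier are added before folding. The names nlf_presum, nlf_finish, and full_checksum are hypothetical, flat buffers stand in for mbufs, and the late fields are assumed to have even length so that 16-bit word boundaries line up. This is not the INSTANCE kernel code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Add len bytes, as 16-bit big-endian words, to a running sum (carries unfolded).
 * An odd trailing byte is padded with zero, so only the last chunk may be odd. */
static uint32_t sum16(const uint8_t *p, size_t len, uint32_t sum)
{
    while (len > 1) {
        sum += ((uint32_t)p[0] << 8) | p[1];
        p += 2;
        len -= 2;
    }
    if (len == 1)
        sum += (uint32_t)p[0] << 8;
    return sum;
}

/* Fold carries into 16 bits and return the one's complement. */
static uint16_t fold_and_complement(uint32_t sum)
{
    while (sum >> 16)
        sum = (sum & 0xFFFFu) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Store time (hypothetical analogue of udp_PreOut): sum the payload once
 * and keep the partial sum together with the stored packet. */
uint32_t nlf_presum(const uint8_t *payload, size_t len)
{
    return sum16(payload, len, 0);
}

/* Transmit time (hypothetical analogue of udp_QuickOut): add only the
 * fields that were unknown at store time, then finish the checksum. */
uint16_t nlf_finish(uint32_t presum, const uint8_t *late, size_t late_len)
{
    assert(late_len % 2 == 0);  /* keep 16-bit word alignment */
    return fold_and_complement(sum16(late, late_len, presum));
}

/* Reference: one pass over the late fields followed by the payload. */
uint16_t full_checksum(const uint8_t *late, size_t late_len,
                       const uint8_t *payload, size_t payload_len)
{
    assert(late_len % 2 == 0);
    return fold_and_complement(sum16(payload, payload_len,
                                     sum16(late, late_len, 0)));
}
```

Because one's-complement addition is commutative and associative, nlf_finish(nlf_presum(payload, n), late, m) yields the same value as full_checksum(late, m, payload, n), while the per-transmission work touches only the m late bytes.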

Integrated Error Management and NLF

FEC

UDP - PreOut

UDP - QuickOut

Network

UDP - Input

FEC

Expected Performance Gain

• Traditionally, 60% of the UDP/IP processing time is spent on checksum calculation [Kay et al. 93]

• Performance gain using NLF (packet size dependent):

– The checksum operation is greatly reduced

• E.g., with a 4 KB packet size, the checksum is calculated over only 0.2% of the data

• Speed-up factor of about 2

– Throughput gain of about 10%
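The speed-up figure can be sanity-checked with an Amdahl-style estimate. This is a back-of-the-envelope sketch, not the authors' calculation: the symbols f, r, and S are introduced here for illustration, with f = 0.6 the checksum share of UDP/IP processing and r ≈ 0.002 the fraction of the checksum work assumed to remain for a 4 KB packet.

```latex
S = \frac{1}{(1 - f) + f\,r}
  = \frac{1}{0.4 + 0.6 \times 0.002}
  \approx 2.5,
\qquad
\lim_{r \to 0} S = \frac{1}{1 - f} = 2.5
```

Under these assumptions the estimate is an upper bound of roughly 2.5 for UDP/IP processing, which is in line with the speed-up factor of about 2 quoted above.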

Conclusion

The INSTANCE project aims at optimizing data retrieval in an MoD server:

– Zero-Copy-One-Copy memory architecture

– Integrated Error Management

– Network Level Framing

NLF saves checksum calculation time; the expected performance gain is:

– About 99% reduction of the checksum calculation time (UDP/IP processing speed-up of about 2)

– About 10% increase in throughput

– Future work
