An Overview of Goals and Goal Selection Justin L. Blount Knowledge Representation Lab

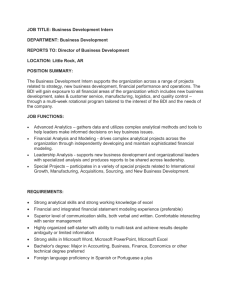


An Overview of Goals and Goal Selection
Justin L. Blount
Knowledge Representation Lab
Texas Tech University
August 24, 2007

Outline
• Goals
• Goals in ASP agents
• Goals in Situation Calculus agents
• Goals in BDI agents

Goals
What question do I want to answer?
• What do I do now? (goal/planning)
  – What do I want? (goal selection)
  – How do I get it? (planning)
• What is a goal?
• How to choose/select a goal?
• Goal n. the result or achievement toward which effort is directed; aim; end. (dictionary)

Goals in ASP agents (Baral, Gelfond, 2000)
• Assume that at each moment t the agent's memory contains the domain description and a partially ordered set G of the agent's goals.
• A goal is a finite set of fluent literals the agent wants to make true.
• The partial ordering corresponds to the comparative importance of the goals.
Agent loop
1. Observe the world
2. Select one of the most important goals g in G to be achieved
3. Find a plan a1, …, an to achieve g
4. Execute a1

Goals in ASP agents (Balduccini, 2005)
Agent loop
1. Observe the world
2. Select a goal g
3. Find a plan a1, …, an to achieve g
4. Execute a1
The selection of the current goal is performed taking into account information such as the partial ordering of goals, the history of the domain, the previous goal, and the action description (e.g., to evaluate how hard or time-consuming it will be to achieve a goal).

Goals in Situation Calculus agents (Shapiro, Lesperance, 2001)
1. Consistent set of goals -- if the agent gets a request for g and it already has the goal that -g, then it does not adopt the goal that g; otherwise its goal state would become inconsistent and it would want everything.
2. Paths to goals are finite. A maintenance goal such as "X is always true" is not finite, but a bounded version such as "X is true for the next 100 time steps" can be expressed.

Goals in Situation Calculus agents (Shapiro, Lesperance, Levesque, 2005)
Expansion: An agent's goals are expanded when it is requested to do something by another agent, unless it currently has a contradicting goal.
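The expansion rule above, together with the consistency requirement from the 2001 slide, can be illustrated with a minimal Python sketch. This is not the situation calculus formalization used in the cited papers: goals are assumed here to be plain string literals with a leading "-" marking negation, and the names `negate` and `expand_goals` are hypothetical, introduced only for this example.

```python
from __future__ import annotations


def negate(literal: str) -> str:
    """Return the complement of a literal; '-p' is treated as the negation of 'p'."""
    return literal[1:] if literal.startswith("-") else "-" + literal


def expand_goals(goals: frozenset[str], requested: str) -> frozenset[str]:
    """Adopt `requested` unless the agent already holds the contradicting goal.

    Assumption: a conflict is only a direct clash between g and -g.
    """
    if negate(requested) in goals:
        # Adopting the request would make the goal set inconsistent, so refuse it.
        return goals
    return goals | {requested}


goals = frozenset({"-door_open"})
goals = expand_goals(goals, "light_on")   # no conflict: adopted
goals = expand_goals(goals, "door_open")  # conflicts with -door_open: refused
print(sorted(goals))  # ['-door_open', 'light_on']
```

Returning a new set rather than mutating the old one keeps each goal state available for inspection, which may be convenient when tracing how a sequence of requests changed the agent's goals.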
Contraction: If an agent's owner issues a REQUEST(x) and later changes their mind, the owner uses CANCEL_REQUEST(x). It can only be used if the corresponding REQUEST was executed previously.
Persistence: A goal x persists over an action a if a is not CANCEL_REQUEST and the agent knows that, if x holds, then a does not change its value.

Goals in Situation Calculus agents (Sardina, Shapiro, 2003)
• Prioritized goals: each goal has a priority level.
• The agent attempts to achieve as many goals as possible in priority order, even if it does not know of a plan that is guaranteed to achieve all the goals.
• Priorities are strict.
• A strategy that achieves one high-priority goal is preferred to a strategy that achieves many (or all) lower-priority goals.

Goals in Situation Calculus agents (Shapiro, Brewka, 2007)
1. An agent should drop goals that it believes are impossible to achieve.
2. However, if the agent revises its beliefs, it may later come to believe that it was mistaken about the impossibility of achieving the goal. In that case, the agent should readopt the goal.
3. If an agent receives a request to adopt goal X, it will adopt it if it does not conflict with a higher-priority goal.

Goals in Situation Calculus agents (Shapiro, Brewka, 2007)
1. Goals should be compatible with beliefs.
2. The situations that the agent wants to actualize should be on a path from a situation that the agent considers possible.
3. Instead of checking whether each individual goal is consistent with beliefs, check whether the set of all goals is consistent with beliefs.
4. It could be the case that each goal is individually compatible with an agent's beliefs but the set of goals of the agent is incompatible, so some of them should be dropped.
Which ones should be dropped?
1. Each agent has a preorder over goal formulae that corresponds to a prioritization of goals.
2. The agent chooses a maximal subset respecting this ordering.

Goals in BDI agents (d'Inverno, Kinny, Luck, Wooldridge, 1998)
• An agent's goals correspond to the tasks allocated to it.
• From their agent loop:
  – generate new possible desires (tasks) by finding plans whose trigger event matches an event in the event queue.
• A plan consists of subgoals or primitive actions.
• An agent with goal "achieve PHI" has a goal of performing some (possibly empty) sequence of actions such that, after these actions are performed, PHI will be true.
• An agent with goal "query PHI" has a goal of performing some (possibly empty) sequence of actions such that, after it performs these actions, it will know whether or not PHI is true.
Thus an agent can have a goal either of achieving a state of affairs or of determining whether the state of affairs holds.

Goals in BDI agents (Thangarajah, Padgham, Harland, 2002)
• Desires may be inconsistent.
• Goals must be consistent.
• If it is not possible to immediately form an intention towards a goal, then the goal is simply dropped.
• It certainly seems more reasonable that the agent have the ability to "remember" a goal, and to form an intention regarding how to achieve it when the environment is conducive to doing so.
• How to choose between two mutually inconsistent goals?
• If a new goal X is more important than an existing goal Y with which it conflicts, then Y should be aborted and X pursued. Otherwise (X is less important than or of the same importance as Y), X is not adopted.
  – (too naive)
• If there is no preference ordering between two goals, then we should prefer a goal that is already adopted over one that is not.

Goals in BDI agents (Winikoff, Padgham, Harland, 2001)
• Problem -- BDI is difficult to explain and teach.
• Solution -- simplify.
• Explicitly represent goals (instead of desires).
  – This is vital in order to enable selection between competing goals, dealing with conflicting goals, and correctly handling goals which cannot be pursued at the time they are created and must be delayed.
• Highlight goal selection as an important issue. By contrast, BDI systems simply assume the existence of a selection function.
• Avoidance goals, or safety constraints (e.g. "never move the table while the robot is drilling").

Goals in BDI agents (Winikoff, Padgham, Harland, Thangarajah, 2002)
• Goals have 2 aspects -- declarative and procedural.
• Declarative -- to reason about important properties of goals.
• Procedural -- to ensure goals can be achieved efficiently in dynamic environments.
• Reasoning about multiple goals (simple case: 2 goals):
• Plans to achieve both may be independent.
• It is irrational to try to achieve both X and -X simultaneously.
• Necessarily consistent -- iff all possible subgoals do not conflict.
• Necessarily inconsistent -- iff some necessary subgoals conflict.
• Possibly inconsistent -- choose a consistent means of achieving both.
• Necessarily support -- both share a common necessary subgoal.
• Possibly support -- there exists a common necessary subgoal.

Goals in BDI agents (Pokahr, Braubach, Lamersdorf, 2005)
• Goal types -- perform, achieve, query, maintain.
• Active goals are currently pursued.
• Options are inactive because the agent explicitly wants them to be.
  – Example: the option conflicts with an active goal.
• Suspended goals must not be pursued because their context is invalid.
  – They remain inactive until their context is valid, at which point they become options.
Deliberation is executed when one of the following occurs:
• Creation condition -- defines when a new goal instance is created.
• Context condition -- defines when a goal's execution should be suspended.
• Drop condition -- defines when a goal instance is removed.
Inhibition arc -- defines a negative relationship between 2 goals; used in deliberation to constrain which goals are reconsidered.

Goals in BDI agents (Duff, Harland, Thangarajah, 2006)
Maintenance goals -- define states that must remain true, rather than a state that is to be achieved.
Reactive -- goals are only acted upon when the maintenance condition is no longer true.
Proactive -- anticipate failures and act to prevent them (done by performing actions that will prevent the condition from failing, or by suspending goals that would cause the maintenance condition to fail).
Future -- prioritize maintenance goals via urgency.

Goals in BDI agents (Morreale et al., 2006)
A goal g1 is inconsistent with a goal g2 if and only if, when g1 succeeds, g2 fails.
• The agent deliberates and generates g as an option.
1. The agent checks whether g is possible and not inconsistent with active goals.
2. If both checks are passed, then g becomes an intention.
3. In case of inconsistency between g and some active goals, g becomes an intention only if it is preferred to those inconsistent goals, which are then dropped.
Preference relation -- not total: since several goals can be pursued in parallel, there is no need to prefer one goal to another if they are not inconsistent with each other.

References
[1] Baral, C. and Gelfond, M. 2000. Reasoning Agents in Dynamic Domains. In Logic Based Artificial Intelligence, edited by J. Minker, Kluwer.
[2] Balduccini, M. 2005. Answer Set Based Design of Highly Autonomous, Rational Agents. PhD thesis, Texas Tech University.
[4] Shapiro, S. and Lespérance, Y. 2001. Modeling multiagent systems with the cognitive agents specification language -- a feature interaction resolution application. In C. Castelfranchi and Y. Lespérance, editors, Proc. ATAL-2000, pages 244–259. Springer-Verlag, Berlin.
[5] Sardina, S. and Shapiro, S. 2003. Rational action in agent programs with prioritized goals. AAMAS, 417-424.
[6] Shapiro, S., Lespérance, Y., and Levesque, H. 2005. Goal Change. In Proceedings of the IJCAI-05 Conference, Edinburgh, Scotland.
[7] Shapiro, S. and Brewka, G. 2007. Dynamic Interactions between Goals and Beliefs. IJCAI, 2625-2630.
[8] d'Inverno, M., Kinny, D., Luck, M., and Wooldridge, M. 1998. A Formal Specification of dMARS. In Intelligent Agents IV: Proceedings of the Fourth International Workshop on Agent Theories, Architectures and Languages, Singh, Rao and Wooldridge (eds.), Lecture Notes in Artificial Intelligence 1365, Springer-Verlag.
[9] Thangarajah, J., Padgham, L., and Harland, J. 2002. Representation and reasoning for goals in BDI agents. In Proceedings of the Twenty-Fifth Australasian Computer Science Conference (ACSC 2002), Melbourne, Australia.
[10] Winikoff, M., Padgham, L., and Harland, J. 2001. Simplifying the Development of Intelligent Agents. In AI2001: Advances in Artificial Intelligence, 14th Australian Joint Conference on Artificial Intelligence, LNAI 2256, pages 557-568, Adelaide.
[11] Winikoff, M., Padgham, L., Harland, J., and Thangarajah, J. 2002. Declarative and Procedural Goals in Intelligent Agent Systems. Proceedings of the Eighth International Conference on Principles of Knowledge Representation and Reasoning, Toulouse.
[12] Pokahr, A., Braubach, L., and Lamersdorf, W. 2005. A Goal Deliberation Strategy for BDI Agent Systems. Third German Conference on Multi-Agent System Technologies.
[13] Duff, S., Harland, J., and Thangarajah, J. 2006. On Proactivity and Maintenance Goals. Proceedings of the Fifth International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS'06), Hakodate.
[14] Morreale, V., Bonura, S., Francaviglia, G., Centineo, F., Cossentino, M., and Gaglio, S. 2006. Reasoning about Goals in BDI Agents: the PRACTIONIST Framework. Proc. of the Workshop on Objects and Agents, Catania, Italy.