Chapter 2.3 – Least Squares Regression


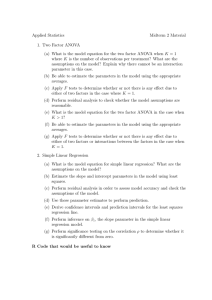

Stat 226 – Introduction to Business Statistics I
Spring 2009, Section A, Tuesdays and Thursdays 9:30-10:50 a.m.
Professor: Dr. Petrutza Caragea
Chapter 2, Section 2.3: Least Squares Regression

If we find a linear association between two quantitative variables (e.g. through a scatterplot), we can use this knowledge to help predict the value of one variable ($y$) given the value of another variable ($x$).

What are plausible values of $y$ when $x = 7$?

We can often use a straight line, the so-called regression or prediction line, to

- describe how a response variable $y$ changes as an explanatory variable $x$ changes
- predict a value of $y$ given a specific value of $x$

How shall we fit a line, i.e. what is a good line?

- We will use the so-called "least squares" principle to find the best line.
- The best line should minimize the sum of the squared errors, i.e. give the closest fit to all data points.
- This best line through the data is called the "least squares regression line" or "prediction line".

Least Squares regression line (LS regression line)

The equation of the least squares regression line is given by

$$\hat{y} = a + b \cdot x$$

where

- $\hat{y}$ corresponds to the predicted value of $y$ for a given value of $x$
- $b = r \cdot \dfrac{s_y}{s_x}$ is the slope of the LS regression line
- $a = \bar{y} - b \cdot \bar{x}$ is the intercept of the LS regression line
- $r$ is the correlation coefficient between $x$ and $y$
- $\bar{x}$, $s_x$ correspond to the mean and standard deviation of $x$
- $\bar{y}$, $s_y$ correspond to the mean and standard deviation of $y$

Example: recall previous data

| x | y |
|---|---|
| 2 | 2 |
| 5 | 4 |
| 8 | 7 |
| 8 | 6 |
| 10 | 9 |
| 12 | 10 |

We found $\bar{x} = 7.5$, $s_x = 3.5637$, $\bar{y} = 6.3$ (more precisely $38/6 \approx 6.3333$), $s_y = 3.0111$, and $r = 0.9878$, so

$$b = r \cdot \frac{s_y}{s_x} = 0.9878 \cdot \frac{3.0111}{3.5637} \approx 0.835$$

and

$$a = \bar{y} - b \cdot \bar{x} \approx 0.0735 \quad \text{(computed with unrounded values of } \bar{y} \text{ and } b\text{)}$$

⇒ the LS regression line is given by $\hat{y} = 0.0735 + 0.835 \cdot x$.

Let's discuss next how to plot the LS regression line and the meaning/interpretation of the slope $b$ and intercept $a$.

How to plot the LS regression line on the scatterplot

Find two points on the prediction line and connect both:

- choose two $x$-values, e.g. $x = 3$ and $x = 9$, which give $\hat{y} \approx 2.58$ and $\hat{y} \approx 7.59$
- plot both points $(x, \hat{y})$ on the scatterplot and connect them

What can we use the line for?

We can use the line to predict the sales amount based on the number of radio ads aired per week, e.g. what is $\hat{y}$ when $x = 6$? Here $\hat{y} = 0.0735 + 0.835 \cdot 6 \approx 5.08$.

Can we use the prediction line to predict the sales amount when the number of radio ads aired per week is 15? That is, what is $\hat{y}$ when $x = 15$? Note that $x = 15$ lies outside the range of the observed $x$-values (2 to 12), so such a prediction is an extrapolation and should be treated with caution.

interpretation of intercept a and slope b

- $a$ (intercept): corresponds to the predicted value of $y$ when $x = 0$
- $b$ (slope): corresponds to the change in the predicted value $\hat{y}$ when $x$ increases by 1 unit
  - $b > 0$: $\hat{y}$ increases as $x$ increases
  - $b < 0$: $\hat{y}$ decreases as $x$ increases

Some facts about LS-Regression

1. In regression, we must clearly know which variable is the explanatory variable and which variable is the response variable. Switching $x$ and $y$ will change the regression line, but will not affect the value of the correlation $r$.
2. The least squares regression line always goes through the point $(\bar{x}, \bar{y})$.
3. $r^2$ is called the coefficient of determination and corresponds to the amount (percent) of variation in the $y$-values that is accounted for by the "regression of y on x" (it tells us how good the predictions will be).
4. We want $r^2$ close to 1; in general ____.
5. ____

Example: weekly radio ads and the sales amount: $r = 0.9878$. So $r^2 = 0.9878^2 \approx 0.9758$. Thus about 97.6% of the variation in sales ($y$) can be explained by the least squares regression of sales on the number of advertisements.

Based on the value of $r^2$, how do you know if $r$ is negative or positive (that is, the direction of the association)? $r^2$ alone does not tell us; since $b = r \cdot s_y / s_x$, the sign of $r$ is the sign of the slope $b$.
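The slope and intercept formulas above can be checked numerically. The following is a minimal sketch in Python using only the standard library; the helper names `correlation` and `ls_line` are illustrative choices and not part of the course material. It computes $r$, $b = r \cdot s_y / s_x$ and $a = \bar{y} - b \cdot \bar{x}$ for the radio ads data and then predicts the sales amount for $x = 6$.

```python
from statistics import mean, stdev

# Radio ads per week (x) and sales amount (y) from the example.
ads = [2, 5, 8, 8, 10, 12]
sales = [2, 4, 7, 6, 9, 10]


def correlation(x, y):
    """Sample correlation coefficient r between x and y."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return s_xy / ((len(x) - 1) * stdev(x) * stdev(y))


def ls_line(x, y):
    """Return (a, b, r) for the least squares line y_hat = a + b * x."""
    r = correlation(x, y)
    b = r * stdev(y) / stdev(x)   # slope: b = r * s_y / s_x
    a = mean(y) - b * mean(x)     # intercept: a = y_bar - b * x_bar
    return a, b, r


a, b, r = ls_line(ads, sales)
prediction_at_6 = a + b * 6

print(f"r = {r:.4f}, r^2 = {r * r:.4f}")
print(f"LS regression line: y_hat = {a:.4f} + {b:.4f} * x")
print(f"predicted sales for 6 ads: {prediction_at_6:.2f}")
```

Working with unrounded intermediate values is what reproduces the intercept 0.0735; plugging in the rounded $\bar{y} = 6.3$ gives a noticeably different intercept.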
residuals and residual plots

A residual is defined as the difference between an observed value $y$ and its predicted value $\hat{y}$ based on the prediction line $\hat{y} = a + bx$:

$$\text{residual} = \text{observed } y - \text{predicted } y = y - \hat{y}$$

- A residual can be thought of as an error that we commit when using the prediction line.
- Unless a $y$-value falls right on the prediction line, a residual will be either
  - positive, when the observed $y$-value falls above the prediction line, or
  - negative, when the observed $y$-value falls below the prediction line.
- So a residual is zero only if $\hat{y} = y$.

[Figure: scatterplot with the prediction line $\hat{y} = a + bx$; for a given $x$, the residual $y - \hat{y}$ is the vertical distance between the observed $y$ and the predicted $\hat{y}$.]

example: radio ads vs. sales

The LS regression line is $\hat{y} = 0.0735 + 0.835 \cdot x$.

| radio ads x | sales y | $\hat{y}$ | residual $y - \hat{y}$ |
|---|---|---|---|
| 2 | 2 | | |
| 5 | 4 | | |
| 8 | 7 | | |
| 8 | 6 | | |
| 10 | 9 | | |
| 12 | 10 | | |
| Total | | | |
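The blank columns of the table above can be filled in mechanically. This is a small Python sketch, assuming the rounded line $\hat{y} = 0.0735 + 0.835 \cdot x$ from the slides; the names `INTERCEPT`, `SLOPE`, and `predict` are illustrative. For the exact least squares line the residuals sum to zero, so with rounded coefficients the total is only close to zero.

```python
# Radio ads per week (x) and sales amount (y) from the example.
ads = [2, 5, 8, 8, 10, 12]
sales = [2, 4, 7, 6, 9, 10]

# Rounded coefficients of the LS regression line as given on the slide.
INTERCEPT = 0.0735
SLOPE = 0.835


def predict(x):
    """Predicted value y_hat on the prediction line for a given x."""
    return INTERCEPT + SLOPE * x


predicted = [predict(x) for x in ads]
# residual = observed y - predicted y
residuals = [y - y_hat for y, y_hat in zip(sales, predicted)]
total_residual = sum(residuals)

print(f"{'x':>4} {'y':>4} {'y_hat':>8} {'residual':>9}")
for x, y, y_hat, res in zip(ads, sales, predicted, residuals):
    print(f"{x:>4} {y:>4} {y_hat:>8.4f} {res:>9.4f}")
print(f"sum of residuals: {total_residual:.4f}")
```

Positive entries in the last column correspond to observations above the prediction line, negative entries to observations below it.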
In order to plot residuals, we need to plot the residuals on the vertical axis and the corresponding x-values on the horizontal axis.

what to look for in a residual plot

Residual plots can tell us whether the fitted linear model is adequate. In general:

- residuals appear to be randomly scattered around 0 → good fit
- any pattern in a residual plot indicates a bad fit

some examples:

- good fit: residuals are scattered around 0, and we have about as many residuals below zero as above
- curved pattern: data are not linear (a straight line fits poorly)
- increasing/decreasing spread of residuals: ____

influential points and outliers

outlier: an observation that is separated from the main bulk of the data

An observation that has a considerable effect on the fitted regression model (e.g. on the correlation $r$, intercept $a$, and/or slope $b$) is considered influential.

Observations which are outliers with respect to their x-values tend to be more influential than observations which are outliers with respect to their y-values. Such observations (outlying w.r.t. all x values) are said to have high leverage; they can alter the fitted least squares regression line substantially.

Consider the next example: data on treadmill time (until exhaustion) versus ski time for biathletes.

[Figures: software output for three linear fits of ski time versus treadmill time. Each panel shows a scatterplot of ski time against treadmill time with the fitted line, and below it a plot of the residuals against treadmill time.]

| Linear Fit | RSquare | RSquare Adj | Root Mean Square Error | Mean of Response | Observations (or Sum Wgts) |
|---|---|---|---|---|---|
| ski time = 93.870923 - 2.7939803 treadmill time | 0.878315 | 0.866146 | 1.136304 | 66.76 | 12 |
| ski time = 74.109138 - 0.7075958 treadmill time | 0.125303 | 0.045785 | 3.034485 | 67.00923 | 13 |
| ski time = 91.119389 - 2.5464928 treadmill time | 0.710433 | 0.684109 | 1.725788 | 67.00923 | 13 |

| Linear Fit | Term | Estimate | Std Error | t Ratio | Prob>\|t\| |
|---|---|---|---|---|---|
| 12 observations | Intercept | 93.870923 | 3.207895 | 29.26 | <.0001 |
| 12 observations | treadmill time | -2.79398 | 0.328864 | -8.50 | <.0001 |
| 13 observations (first fit) | Intercept | 74.109138 | 5.718203 | 12.96 | <.0001 |
| 13 observations (first fit) | treadmill time | -0.707596 | 0.563685 | -1.26 | 0.2354 |
| 13 observations (second fit) | Intercept | 91.119389 | 4.754056 | 17.17 | <.0001 |
| 13 observations (second fit) | treadmill time | -2.5464928 | 0.4901 | -5.19 | 0.0003 |

Compared with the fit to 12 observations, the slope changes from about -2.79 to about -0.71 in the first fit with 13 observations, but only to about -2.55 in the second.
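The effect of a high-leverage observation can be illustrated with a short Python sketch. The biathlete data are not listed in these notes, so the sketch reuses the radio ads data and adds one extra observation at a time; both added points, (20, 5) and (8, 12), are hypothetical values chosen purely for illustration. The slope is computed as $S_{xy} / S_{xx}$, which is algebraically the same as $b = r \cdot s_y / s_x$.

```python
from statistics import mean

# Radio ads per week (x) and sales amount (y) from the earlier example.
ads = [2, 5, 8, 8, 10, 12]
sales = [2, 4, 7, 6, 9, 10]


def ls_slope(x, y):
    """Slope of the least squares line, b = S_xy / S_xx (equal to r * s_y / s_x)."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    return s_xy / s_xx


slope_original = ls_slope(ads, sales)

# Hypothetical extra point that is outlying in x (far to the right of the data).
slope_x_outlier = ls_slope(ads + [20], sales + [5])

# Hypothetical extra point that is outlying in y but has x near the middle of the data.
slope_y_outlier = ls_slope(ads + [8], sales + [12])

print(f"slope, original data:           {slope_original:.3f}")
print(f"slope, with x-outlier (20, 5):  {slope_x_outlier:.3f}")
print(f"slope, with y-outlier (8, 12):  {slope_y_outlier:.3f}")
```

In this sketch the point that is outlying in $x$ pulls the slope much further from its original value than the point that is outlying in $y$, which mirrors the pattern in the treadmill output above.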