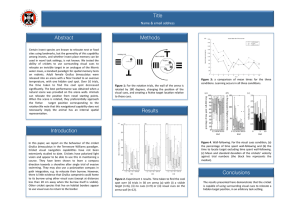

Speaker: Title: Abstract: Sophisticated algorithms have been developed for representing and recognizing objects

advertisement

Speaker: Xiang Li Title:Autonomous Learning of Object Models on Mobile Robots using Visual Cues Abstract: Sophisticated algorithms have been developed for representing and recognizing objects based on a variety of visual cues. However, for deployment in real-world domains characterized by partial observability and unforeseen dynamic changes, mobile robots require the ability to autonomously, reliably and efficiently learn and revise object models. This paper describes an approach that enables a mobile robot to autonomously learn probabilistic models of environmental objects using the complementary properties of local, global and temporal visual cues. Learning is triggered by motion cues and interesting image regions are identified by tracking and clustering salient (local) image gradient features across a sequence of images. These interesting regions are considered to correspond to candidate objects, which are modeled as: (a) relative spatial arrangement of gradient features; (b) graphical models of neighborhoods of gradient features; (c) parts-based representation of image segments; (d) color distribution statistics; and (e) probabilistic models of local context. A generative model is designed for information fusion and an energy minimization algorithm is used to efficiently recognize learned objects in novel scenes. All algorithms are evaluated on wheeled robots in indoor and outdoor domains.