6. Coloring, Images, and File Formats


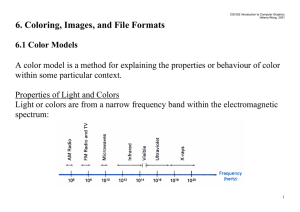

CS3162 Introduction to Computer Graphics, Helena Wong, 2001

6.1 Color Models

A color model is a method for explaining the properties or behaviour of color within some particular context.

Properties of Light and Colors

Light, or color, comes from a narrow frequency band within the electromagnetic spectrum. The following terms describe its properties:

- Hue (Color): The dominant frequency reflected by the object.
- Brightness: The perceived intensity of the light. This is related to the luminance of the source.
- Purity (Saturation): How pure the color of the light appears.
- Chromaticity: Refers collectively to purity and hue.
- Complementary colors: Pairs that combine to form white light, e.g. red and cyan, green and magenta, blue and yellow.
- Primary colors: e.g. r, g, b. Starting from these colors, a wide range of other colors can be formed.
- Shades of a color: created by adding black pigment to the color.
- Tints of a color: created by adding white pigment to the color.
- Tones of a color: created by adding both black and white pigments to the color.

RGB Model

Used by RGB monitors, which use separate signals for the red, green, and blue components of an image. It is based on the vision system of our eyes: we have 3 visual pigments in the cones of the retina, for red, green, and blue.

YIQ Model

Used by NTSC television monitors:

- Y: Contains the luminance (brightness) information
- I: Contains the orange-cyan hue information
- Q: Contains the green-magenta hue information

CMY Model

Useful for describing color output to hard-copy devices. These devices produce a color picture by coating paper with color pigments. We see the colors by reflected light, which is a subtractive process. CMYK means using cyan, magenta, yellow, and black ink.

Consider that:

- Magenta ink subtracts the green component from incident light, so the remaining red and blue components are seen by us as the resultant color magenta.
- Cyan ink subtracts the red component from incident light, so the remaining green and blue components are seen by us as the resultant color cyan.
- If we mix magenta and cyan ink, the mixture subtracts both the green and red components from the incident light, and the remaining blue component is seen by us as the resultant color blue.

HSV Model

In this model, users select a spectral color and specify the amounts of white or black to be added to obtain different shades, tints, and tones. The HSV model is derived from the RGB cube.

- H: Hue
- S: Saturation
- V: Value

Saturation and value are measured along the horizontal and vertical axes, and an angle in degrees (0 to 360) describes the hue. Complementary colors are 180 degrees apart.

Interesting facts about human eyes:

- can distinguish about 128 different hues
- can distinguish about 130 different tints (saturation levels)
- can distinguish about 23 shades for yellow and about 16 different shades for blue colors
- hence, can distinguish about 128 x 130 x 23 = 382,720 different colors.

HLS Model

Used by Tektronix.

- H: Hue
- L: Lightness
- S: Saturation

6.2 Light Intensities

Values of intensity calculated by an illumination model must be converted to one of the allowable intensity levels for the particular graphics system in use. We have 2 issues to consider:

1. Humans perceive relative light intensities on a logarithmic scale. E.g. we perceive the difference between intensities 0.20 and 0.22 to be the same as the difference between 0.80 and 0.88, because both pairs have the ratio 1.1. Therefore, to display successive intensity levels with equal perceived differences of brightness, the intensity levels on the monitor should be spaced so that the ratio of successive intensities is constant: $I_1/I_0 = I_2/I_1 = I_3/I_2 = \dots = \text{a constant}$.

2. The intensities produced by display devices are not linear with the electron-gun voltage.
This is solved by applying gamma correction as a video lookup correction. The voltage for intensity $I_k$ is computed as:

$$V_k = \left( \frac{I_k}{a} \right)^{1/\gamma}$$

where $a$ is a constant and $\gamma$ is an adjustment factor controlled by the user. For example, the NTSC signal standard is $\gamma = 2.2$.

6.3 Halftone Patterns and Dithering

Halftoning is used when an output device has a limited intensity range, but we want to create an apparent increase in the number of available intensities.

[Figure: an original picture and its display on output devices of limited intensity ranges (16 colors, 8 colors, 4 colors)]

If we view a very small area from a sufficiently large viewing distance, our eyes average fine details within the small area and record only the overall intensity of the area.

In halftoning, each small resolution unit is imprinted with a circle of black ink whose area is proportional to the blackness of the area in the original photograph.

Graphics output devices can approximate the variable-area circles of halftone reproduction by incorporating multiple pixel positions into the display of each intensity value.

[Figure: a 2 x 2 pixel grid used to display 5 intensity levels (I) on a bilevel system]

[Figure: a 3 x 3 pixel grid used to display 10 intensities on a bilevel system]

[Figure: a 2 x 2 pixel grid used to display 13 intensities on a 4-level system]

[Figure: an RGB 2 x 2 pixel-grid pattern]

Each r, g, b color has 4 phosphor dots in the pattern, which allows 5 possible settings per color. This gives a total of 5 x 5 x 5 = 125 different color combinations.

Dithering

The above approach, however, needs a higher-resolution output device to display a picture in the same physical dimensions. So, in practice, we have to refine this approach so that it does not require a higher resolution. Dithering generally means approximating halftones without this requirement.
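As a rough illustration of this idea, the sketch below applies ordered dithering to a grayscale image for a bilevel device. Ordered dithering is one common dithering technique; these notes do not name a specific method, so treat the choice as an assumption. It borrows the 2 x 2 grid idea from the halftone patterns above, but instead of expanding each pixel into a 2 x 2 block it compares each pixel against a threshold selected by the pixel's position, so the output keeps the input resolution. The matrix `BAYER_2X2` and the function name `ordered_dither` are hypothetical names chosen for this example.

```python
# 2 x 2 threshold matrix (an assumption of this sketch, not from the notes).
# Entries 0..3 give the order in which positions switch on as intensity rises.
BAYER_2X2 = [[0, 2],
             [3, 1]]


def ordered_dither(image):
    """Map intensities in [0.0, 1.0] to 0 or 1 without changing resolution."""
    result = []
    for y, row in enumerate(image):
        out_row = []
        for x, intensity in enumerate(row):
            # Threshold for this position: 0.125, 0.375, 0.625 or 0.875.
            threshold = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4.0
            out_row.append(1 if intensity > threshold else 0)
        result.append(out_row)
    return result


# A flat 50% gray area, 4 x 4 pixels.
flat_gray = [[0.5] * 4 for _ in range(4)]
for dithered_row in ordered_dither(flat_gray):
    print(dithered_row)
```

For the flat 50% gray input, half of the output pixels are switched on in a checkerboard arrangement, which the eye averages to a mid gray at a sufficient viewing distance.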
Interested students may find further discussion on dithering in many textbooks.

[Figure: two examples of dithering results, using 4 and 2 colors respectively]

6.4 Anti-Aliasing

Because pixel positions are integers, jagged or stairstep appearances occur very often. This distortion of information due to undersampling is called aliasing. A number of antialiasing methods have been developed to compensate for this problem.

One way is to display objects at a higher resolution. However, there is a limit to how big we can make the frame buffer and still maintain an acceptable refresh rate.

Other methods modify pixel intensities by varying them along the boundaries of primitives, which smooths the edges. These include supersampling, area sampling, and pixel phasing.

Supersampling

In supersampling, intensity information is obtained from multiple points that contribute to the overall intensity of a pixel.

- For straight lines with zero width: 3 intensity values are used to paint the line
- For straight lines with finite width: 9 intensity values are used to paint the line

Pixel-Weighting Masks: Supersampling can be implemented by giving more weight to subpixels near the center of a pixel area.

    1 2 1
    2 4 2
    1 2 1

Filtering Technique: Similar to pixel-weighting. Instead of using a grid of weighting values, we imagine a continuous weighting surface covering the pixel.

Area Sampling

In area sampling, we set each pixel intensity proportional to the area of overlap of the pixel with the object being displayed.

Pixel Phasing

Move the electron beam to more nearly approximate positions (micropositioning).

Compensation for Line Intensity Differences

Diagonal lines normally appear less bright than horizontal lines. This effect can be compensated for by adjusting the intensity of each line according to its slope.
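The pixel-weighting mask from the supersampling discussion above can be sketched as follows. This is a minimal illustration which assumes that each pixel is divided into 3 x 3 subpixels and that the coverage of each subpixel (1 if the primitive covers it, 0 otherwise) has already been determined; the function name `weighted_pixel_intensity` is hypothetical.

```python
# 3 x 3 pixel-weighting mask from the notes: the center subpixel counts most.
WEIGHT_MASK = [[1, 2, 1],
               [2, 4, 2],
               [1, 2, 1]]


def weighted_pixel_intensity(subpixels):
    """Return a pixel intensity in [0.0, 1.0] from 3 x 3 subpixel samples.

    `subpixels` holds 1 where a subpixel is covered by the primitive and
    0 where it is not (an assumption of this sketch).
    """
    total_weight = sum(sum(row) for row in WEIGHT_MASK)  # 16 for this mask
    covered_weight = sum(
        WEIGHT_MASK[r][c] * subpixels[r][c]
        for r in range(3)
        for c in range(3)
    )
    return covered_weight / total_weight


# A line crossing the pixel along its main diagonal.
diagonal = [[1, 0, 0],
            [0, 1, 0],
            [0, 0, 1]]
print(weighted_pixel_intensity(diagonal))  # 0.375
```

With equal weights the same pixel would get 3/9 of full intensity; the mask raises this to 6/16 because the line passes through the heavily weighted center subpixel.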
Antialiasing Area Boundaries

To smooth area outlines, we may adjust each pixel intensity at the boundary positions according to the percentage of the pixel area that is inside the boundary.

Temporal Aliasing

Aliasing problems also appear in temporal cases:

- The wheel is in fact rotating clockwise, but it appears to be rotating counter-clockwise.

6.6 Graphic Files

Bitmap Images and Vector Images

Graphic file formats differ in the way they represent graphic information, either as vector drawings or as bitmap images. Some formats contain only vector drawings or bitmap images, but many include both in the same file.

1. Bitmap images

Most image-editing applications generate bitmap images, also called raster images. A bitmap image is resolution-dependent; that is, it contains a fixed number of pixels to represent its image data. As a result, a bitmap image can lose detail and appear jagged if viewed at a high magnification on-screen or printed at too low a resolution.

Bitmap images are the best choice for representing gradations of shades and color, for example in photographs or painted images.

Common imaging applications provide a collection of tools to improve or transform bitmap images, e.g. sharpening filters (such as unsharp mask) and blurring filters (normal blurring, Gaussian blur, motion blur).

Bitmap images are usually captured through input devices that support certain image input standards, e.g. TWAIN and ISIS (the Image and Scanner Interface Specification).

2. Vector graphics

Some drawing applications create vector graphics, made of lines and curves defined by mathematical objects called vectors. Vectors describe graphics according to their geometric characteristics. A vector graphic is resolution-independent; that is, it can be scaled to any size and printed on any output device at any resolution without losing its detail or clarity.
As a result, vector graphics are the best choice for graphics that must retain crisp lines when scaled to various sizes, for example logos.

Graphic File Sizes

Generally, graphic file size is proportional to the pixel dimensions of the image. Images with more pixels may produce more detail at a given printed size, but they require more disk space to store and may be slower to edit and print. E.g. a 1-inch-by-1-inch 200-dpi (dots per inch) image contains 200 x 200 = 40,000 pixels, which is four times as many as the 100 x 100 = 10,000 pixels of a 1-inch-by-1-inch 100-dpi image, and so it has four times the file size.

To determine the image resolution to use, one should consider the medium of final distribution for the image. For on-screen display, the image resolution only needs to match the typical monitor resolution (72 or 96 dpi). For printing on a laser printer, a resolution of at least 150 dpi is preferred.

Note that using too low a resolution for a printed image results in pixelation, that is, output with large, coarse-looking pixels. Using too high a resolution (pixels smaller than what the output device can produce) increases the file size and slows the printing of the image, and some printers may not have enough internal memory to cope with the image.

Color Modes

To cope with the different requirements and characteristics of image files, most applications can handle multiple color modes.

1. Bitmap mode: Uses one of two color values (black or white) to represent the pixels in an image. Images in Bitmap mode are called bitmapped, or 1-bit, images because they have a bit depth of 1.

2. Grayscale mode: Uses up to 256 shades of gray. Every pixel of a grayscale image has a brightness value ranging from 0 (black) to 255 (white).

3. Indexed color mode: Uses at most 256 colors. When converting a true-color image to indexed color, a color lookup table is built to store and index the colors in the image.
If a color in the original image does not appear in the table, the software chooses the closest one or simulates the color using available colors. By limiting the palette of colors, indexed color can reduce file size while maintaining visual quality.

4. Multichannel mode: Uses 256 levels in each of the r, g, b channels.

File Compression

Many image file formats use compression techniques to reduce the storage space required by bitmap image data. Compression techniques are distinguished by whether they remove detail and color from the image. Lossless techniques compress image data without removing detail; lossy techniques compress images by removing detail.

- Run Length Encoding (RLE) is lossless. This method scans the bitmap row by row. For each row, it divides the line of colors into runs according to the changes of color, then records for each run its color and how many pixels are to be painted.
- Lempel-Ziv-Welch (LZW) is lossless. Similarly, it achieves compression by identifying repetitions of data. But this is a more complex approach, which builds a table of tokens as it encodes the data of the whole image, so that if it identifies one chunk of data and then encounters it again, it can store the same token into the file. This technique is most useful in compressing images that contain large areas of a single color, such as screenshots or simple paint images.
- Joint Photographic Experts Group (JPEG) is lossy. It achieves data compression through sampling techniques in the context of digital signal processing. It is best for continuous-tone images, such as photographs. You can adjust the compression parameters to choose between greater compression and greater accuracy.

Common File Formats

1. BMP: BMP is the standard Windows image format on DOS and Windows-compatible computers. The BMP format supports RGB, indexed-color, grayscale, and Bitmap color modes.

2. GIF: The Graphics Interchange Format (GIF) is the file format commonly used to display indexed-color graphics and images. GIF uses an LZW-compressed format. A transparent color is supported.

3. JPEG: The Joint Photographic Experts Group (JPEG) format is commonly used to display photographs and other continuous-tone images. The JPEG format supports CMYK, RGB, and grayscale color modes.

4. PCX: The PCX format supports RGB, indexed-color, grayscale, and Bitmap color modes. PCX supports the RLE compression method. Images can have a bit depth of 1, 4, 8, or 24.

5. PDF: Portable Document Format (PDF) is used by Adobe Acrobat, Adobe's electronic publishing software. PDF files can represent both vector and bitmap graphics, and can contain electronic document search and navigation features such as electronic links.

6. Raw: The Raw format is a flexible file format for transferring files between applications and computer platforms. The Raw format consists of a stream of bytes describing the color information in the file. Each pixel is described in binary format, with 0 equaling black and 255 equaling white (for images with 16-bit channels, the white value is 65535).

7. TIFF: The Tagged-Image File Format (TIFF) is used to exchange files between applications and computer platforms. TIFF is a flexible bitmap image format supported by virtually all painting, image-editing, and page-layout applications. Also, virtually all desktop scanners can produce TIFF images. The TIFF format supports CMYK, RGB, and grayscale files with alpha channels, and Lab, indexed-color, and Bitmap files without alpha channels. TIFF also supports LZW compression.

8. DXF: The DXF (Drawing Exchange Format) format is used by PC-based CAD applications. It was originally created for the AutoCAD system, and is a kind of script language (text file).

9. MPEG (Moving Picture Experts Group): MPEG is for digital video (and sound) compression. It achieves a high compression rate by storing only the changes from one frame to another.
MPEG compression is lossy. The MPEG standards include:

- MPEG-1, the standard for storage and retrieval of moving pictures and audio on storage media
- MPEG-2, the standard for digital television
- MPEG-4, the standard for multimedia applications
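To make the run-length encoding idea from the File Compression discussion more concrete, here is a minimal sketch for a single bitmap row. It assumes the row is given as a list of color values and stores each run as a (color, count) pair. Real file formats such as PCX define their own byte-level layouts, which are not reproduced here, and the function names `rle_encode_row` and `rle_decode_row` are hypothetical.

```python
def rle_encode_row(row):
    """Split one row of pixels into (color, run_length) pairs."""
    runs = []
    for color in row:
        if runs and runs[-1][0] == color:
            # Same color as the current run: extend it by one pixel.
            runs[-1] = (color, runs[-1][1] + 1)
        else:
            # Color changed (or first pixel): start a new run.
            runs.append((color, 1))
    return runs


def rle_decode_row(runs):
    """Rebuild the original row from (color, run_length) pairs."""
    row = []
    for color, count in runs:
        row.extend([color] * count)
    return row


row = ["W", "W", "W", "B", "B", "W"]
encoded = rle_encode_row(row)
print(encoded)  # [('W', 3), ('B', 2), ('W', 1)]

# Lossless: decoding returns exactly the original pixels.
assert rle_decode_row(encoded) == row
```

Rows with long runs of a single color shrink considerably under this scheme, while rows in which nearly every pixel differs from its neighbor can grow, since each pixel then needs its own (color, count) pair.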