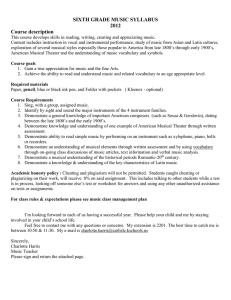

Representing Musical Knowledge
