USE b y (1985) CASE

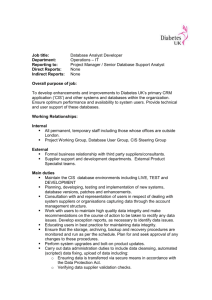

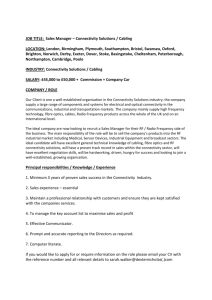
