Control of Underactuated Fluid-Body Systems M.S. ARCHIVES

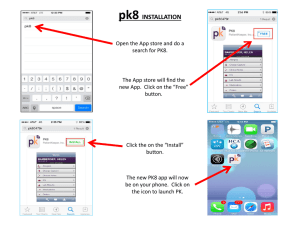
