
This text is my rendering of a part of chapter 5.6 in Jurafsky & Martin (2000), plus an attempt at applying the Minimum Edit Distance algorithm described by J&M to path finding. The text was intended for the curriculum for a course in large text processing (the course was never realized).

Asbjørn Brændeland 2008

Dynamic Programming

"Dynamic Programming is the name for a class of algorithms, first introduced by Bellman (1957), that apply a table driven method to solve problems by combining solutions to subproblems. This class of algorithms includes the most commonly-used algorithms in speech and language processing, among them the minimum edit distance algorithm for spelling error correction, the Viterbi algorithm and the forward algorithm which are used both in speech recognition and in machine translation, and the CYK and Earley algorithm used in parsing." (Jurafsky & Martin, 2000)

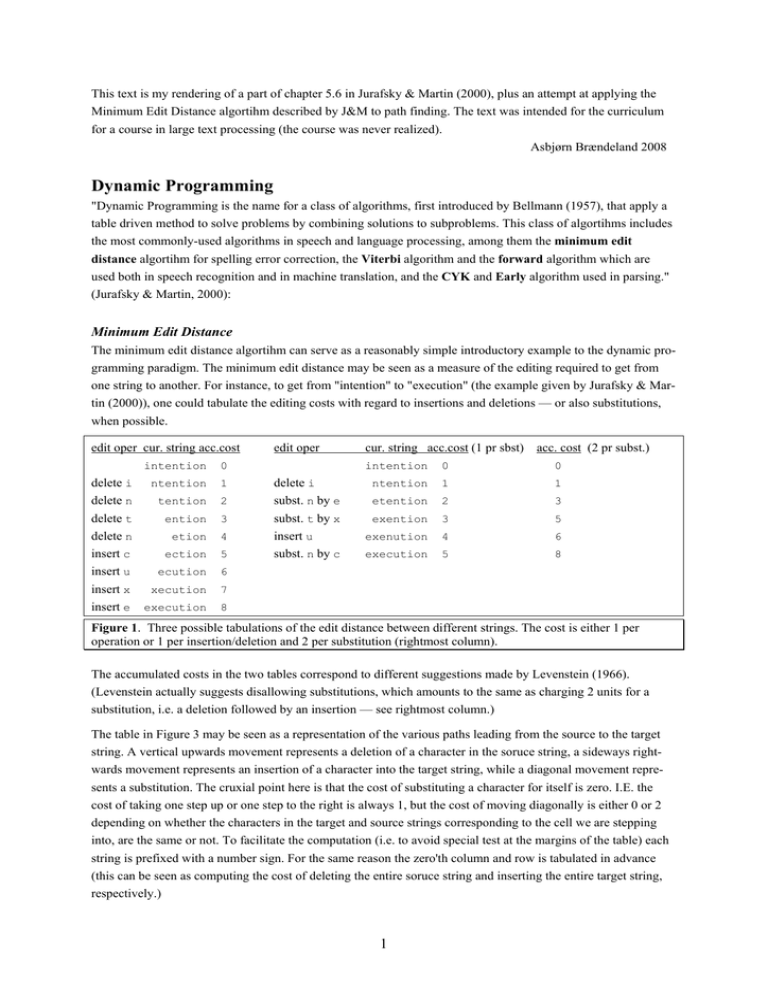

Minimum Edit Distance

The minimum edit distance algorithm can serve as a reasonably simple introductory example to the dynamic programming paradigm. The minimum edit distance may be seen as a measure of the editing required to get from one string to another. For instance, to get from "intention" to "execution" (the example given by Jurafsky & Martin (2000)), one could tabulate the editing costs with regard to insertions and deletions, or also substitutions when possible.

edit oper      │ cur. string │ acc. cost
               │ intention   │ 0
delete i       │ ntention    │ 1
delete n       │ tention     │ 2
delete t       │ ention      │ 3
delete n       │ etion       │ 4
insert c       │ ection      │ 5
insert u       │ ecution     │ 6
insert x       │ xecution    │ 7
insert e       │ execution   │ 8

edit oper      │ cur. string │ acc. cost (1 per subst.) │ acc. cost (2 per subst.)
               │ intention   │ 0                        │ 0
delete i       │ ntention    │ 1                        │ 1
subst. n by e  │ etention    │ 2                        │ 3
subst. t by x  │ exention    │ 3                        │ 5
insert u       │ exenution   │ 4                        │ 6
subst. n by c  │ execution   │ 5                        │ 8

Figure 1. Two possible edit sequences from "intention" to "execution", with three tabulations of the accumulated cost. The cost is either 1 per operation, or 1 per insertion/deletion and 2 per substitution (rightmost column).

The accumulated costs in the two tables correspond to different suggestions made by Levenshtein (1966). (Levenshtein actually suggests disallowing substitutions, which amounts to the same as charging 2 units for a substitution, i.e. a deletion followed by an insertion; see the rightmost column.)

The table in Figure 3 may be seen as a representation of the various paths leading from the source to the target string. A vertical upwards movement represents a deletion of a character in the source string, a sideways rightwards movement represents an insertion of a character into the target string, while a diagonal movement represents a substitution. The crucial point here is that the cost of substituting a character for itself is zero. That is, the cost of taking one step up or one step to the right is always 1, but the cost of moving diagonally is either 0 or 2, depending on whether the characters in the target and source strings corresponding to the cell we are stepping into are the same or not. To facilitate the computation (i.e. to avoid special tests at the margins of the table) each string is prefixed with a number sign. For the same reason the zeroth column and row are tabulated in advance (this can be seen as computing the cost of deleting the entire source string and inserting the entire target string, respectively).


Generally the value in a cell is computed to be the minimum of these three values

- the value in the cell to the left + 1,

- the value in the cell below + 1,

- the value in the cell diagonally below and to the left + the current substitution cost (0 or 2).

Figure 2 shows a Java implementation of the algorithm.

    private int minEditDist(String target, String source)
    {
        // Both strings are assumed to be prefixed with '#', so index 0 is the margin.
        int n = target.length();
        int m = source.length();
        int[][] distance = new int[n][m];

        for (int i = 0; i < n; i++) distance[i][0] = i;   // inserting i target characters
        for (int j = 0; j < m; j++) distance[0][j] = j;   // deleting j source characters

        for (int i = 1; i < n; i++)
            for (int j = 1; j < m; j++)
                distance[i][j] =
                    Math.min(distance[i - 1][j] + 1,
                        Math.min(distance[i - 1][j - 1] +
                                     (target.charAt(i) == source.charAt(j) ? 0 : 2),
                                 distance[i][j - 1] + 1));

        return distance[n - 1][m - 1];
    }

Figure 2. The minimum distance algorithm.
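As a usage sketch, the method can be wrapped in a small self-contained class that adds the number sign prefix itself. The class name EditDistanceDemo, the static signature and the local variable names are assumptions made for illustration; the recurrence is the one listed above, with cost 1 per insertion or deletion and 2 per substitution.

```java
class EditDistanceDemo {

    // Minimum edit distance with cost 1 per insertion/deletion and 2 per substitution.
    static int minEditDist(String target, String source) {
        // Prefix both strings with '#' so that row 0 and column 0 form the margins.
        String t = "#" + target;
        String s = "#" + source;
        int n = t.length();
        int m = s.length();
        int[][] distance = new int[n][m];

        for (int i = 0; i < n; i++) distance[i][0] = i; // insert i target characters
        for (int j = 0; j < m; j++) distance[0][j] = j; // delete j source characters

        for (int i = 1; i < n; i++) {
            for (int j = 1; j < m; j++) {
                int subst = distance[i - 1][j - 1] + (t.charAt(i) == s.charAt(j) ? 0 : 2);
                int insert = distance[i - 1][j] + 1;
                int delete = distance[i][j - 1] + 1;
                distance[i][j] = Math.min(subst, Math.min(insert, delete));
            }
        }
        return distance[n - 1][m - 1];
    }

    public static void main(String[] args) {
        System.out.println(minEditDist("execution", "intention")); // prints 8
        System.out.println(minEditDist("AFCG", "ABCD"));           // prints 4
    }
}
```

Doing the prefixing inside the method keeps the caller from having to remember the convention, at the price of two extra string allocations per call.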

The table in Figure 3 shows the values computed during the process of finding the minimum edit distance between "intention" and "execution".¹

 n ║   9 │   8 │   9 │  10 │  11 │  12 │  11 │  10 │   9 │   8
 o ║   8 │   7 │   8 │   9 │  10 │  11 │  10 │   9 │ [8] │   9
 i ║   7 │   6 │   7 │   8 │   9 │  10 │   9 │ [8] │   9 │  10
 t ║   6 │   5 │   6 │   7 │   8 │   9 │ [8] │   9 │  10 │  11
 n ║   5 │   4 │   5 │   6 │ [7] │ [8] │   9 │  10 │  11 │  10
 e ║   4 │   3 │   4 │ [5] │   6 │   7 │   8 │   9 │  10 │   9
 t ║   3 │   4 │ [5] │   6 │   7 │   8 │   7 │   8 │   9 │   8
 n ║   2 │   3 │ [4] │   5 │   6 │   7 │   8 │   7 │   8 │   7
 i ║   1 │ [2] │   3 │   4 │   5 │   6 │   7 │   6 │   7 │   8
 # ║ [0] │   1 │   2 │   3 │   4 │   5 │   6 │   7 │   8 │   9
   ║   # │   e │   x │   e │   c │   u │   t │   i │   o │   n

Figure 3. Computation of the minimum edit distance. To get from the source to the target string with a minimum of editing one has to move through the white area in the table. In particular one has to pass through the points of cost free substitutions. The bracketed numbers mark the middle path, characterised by a preference for substitutions whenever possible.

(To make sense of the numbers in the gray areas one would have to imagine that one could actually get the cost of the deletion of a character reimbursed by reinserting that character two or more steps later, and vice versa.)

¹ A comparison of the above programming code and table with the corresponding figures 5.5 and 5.6 in Jurafsky & Martin (2000) will reveal several minor discrepancies, of which I won't try to give any account.


Deletion-first path

edit oper     │ current string │ cost │ cell
subst n by n  │ #execution     │ 8    │ (9, 9)
subst o by o  │ #execution     │ 8    │ (8, 8)
subst i by i  │ #execution     │ 8    │ (7, 7)
subst t by t  │ #execution     │ 8    │ (6, 6)
insert u      │ #execution     │ 8    │ (5, 5)
insert c      │ #exection      │ 7    │ (4, 5)
delete n      │ #exetion       │ 6    │ (3, 5)
subst e by e  │ #exention      │ 5    │ (3, 4)
insert x      │ #exention      │ 5    │ (2, 3)
insert e      │ #eention       │ 4    │ (1, 3)
delete t      │ #ention        │ 3    │ (0, 3)
delete n      │ #tention       │ 2    │ (0, 2)
delete i      │ #ntention      │ 1    │ (0, 1)
              │ #intention     │ 0    │ (0, 0)

Insertion-first path

edit oper     │ current string │ cost │ cell
subst n by n  │ #execution     │ 8    │ (9, 9)
subst o by o  │ #execution     │ 8    │ (8, 8)
subst i by i  │ #execution     │ 8    │ (7, 7)
subst t by t  │ #execution     │ 8    │ (6, 6)
delete n      │ #execution     │ 8    │ (5, 5)
insert u      │ #execuntion    │ 7    │ (5, 4)
insert c      │ #execntion     │ 6    │ (4, 4)
subst e by e  │ #exention      │ 5    │ (3, 4)
delete t      │ #exention      │ 5    │ (2, 3)
delete n      │ #extention     │ 4    │ (2, 2)
delete i      │ #exntention    │ 3    │ (2, 1)
insert x      │ #exintention   │ 2    │ (2, 0)
insert e      │ #eintention    │ 1    │ (1, 0)
              │ #intention     │ 0    │ (0, 0)

Middle path

edit oper     │ current string │ cost │ cell
subst n by n  │ #execution     │ 8    │ (9, 9)
subst o by o  │ #execution     │ 8    │ (8, 8)
subst i by i  │ #execution     │ 8    │ (7, 7)
subst t by t  │ #execution     │ 8    │ (6, 6)
insert u      │ #execution     │ 8    │ (5, 5)
subst n by c  │ #exection      │ 7    │ (4, 5)
subst e by e  │ #exention      │ 5    │ (3, 4)
delete t      │ #exention      │ 5    │ (2, 3)
subst n by x  │ #extention     │ 4    │ (2, 2)
subst i by e  │ #entention     │ 2    │ (1, 1)
              │ #intention     │ 0    │ (0, 0)

Figure 4. The table shows two marginal paths characterized by a preference for source character deletion and for target character insertion, respectively, and the middle path characterized by a preference for substitutions (indicated by bracketed numbers in Figure 3). Each row gives the operation that produced it from the row below, the resulting string, the accumulated cost, and the cell (target index, source index) reached in Figure 3. Note that the editing here progresses upwards.
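A path like the ones tabulated here can be recovered from the finished distance table by walking back from the final cell to the origin. The following is a minimal sketch under assumptions of my own: the class name EditPathDemo, the method names, and the tie-breaking rule (substitution first, then insertion, then deletion) are hypothetical and not taken from the source. Because several minimal paths exist, the sequence it prints need not coincide with any of the three paths in the figure, but its total cost is the minimum edit distance.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class EditPathDemo {

    static int substCost(char a, char b) { return a == b ? 0 : 2; }

    // Fills the distance table; both strings must already carry the '#' prefix.
    static int[][] table(String t, String s) {
        int[][] d = new int[t.length()][s.length()];
        for (int i = 0; i < t.length(); i++) d[i][0] = i;
        for (int j = 0; j < s.length(); j++) d[0][j] = j;
        for (int i = 1; i < t.length(); i++)
            for (int j = 1; j < s.length(); j++)
                d[i][j] = Math.min(d[i - 1][j - 1] + substCost(t.charAt(i), s.charAt(j)),
                          Math.min(d[i - 1][j] + 1, d[i][j - 1] + 1));
        return d;
    }

    // Walks back from the final cell to (0, 0), preferring substitutions on ties.
    static List<String> editPath(String target, String source) {
        String t = "#" + target, s = "#" + source;
        int[][] d = table(t, s);
        List<String> ops = new ArrayList<>();
        int i = t.length() - 1, j = s.length() - 1;
        while (i > 0 || j > 0) {
            if (i > 0 && j > 0
                    && d[i][j] == d[i - 1][j - 1] + substCost(t.charAt(i), s.charAt(j))) {
                ops.add("subst " + s.charAt(j) + " by " + t.charAt(i));
                i--; j--;
            } else if (i > 0 && d[i][j] == d[i - 1][j] + 1) {
                ops.add("insert " + t.charAt(i));
                i--;
            } else {
                ops.add("delete " + s.charAt(j));
                j--;
            }
        }
        Collections.reverse(ops); // list the operations starting from cell (0, 0)
        return ops;
    }

    // Sums the cost of the operations produced by editPath.
    static int pathCost(List<String> ops) {
        int cost = 0;
        for (String op : ops) {
            if (op.startsWith("subst")) {
                String[] parts = op.split(" "); // "subst", x, "by", y
                cost += parts[1].equals(parts[3]) ? 0 : 2;
            } else {
                cost += 1;
            }
        }
        return cost;
    }

    public static void main(String[] args) {
        List<String> ops = editPath("execution", "intention");
        ops.forEach(System.out::println);
        System.out.println("total cost: " + pathCost(ops));
    }
}
```

Changing the order of the three tests in the loop changes which of the equally cheap paths is reported, which is one way to see why the figure can show several paths with the same final cost.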


Pathfinding

Finding the most likely path

We now want to apply the dynamic programming paradigm to the task of planning the most effortless path from one town to another through a network of towns and interconnecting roads. We represent the network by a directed graph where we, rather than indicating the strenuousness of each road, indicate the likelihood of each road being chosen, relative to other possible choices, as a function of its length, quality, etc. The shorter and easier a road is, the more likely it is to be chosen over the other roads departing from the same town. The preference likelihoods of all the roads departing from one town sum to 1.

Figure 5. A graph representation of some towns and some of the roads connecting them. Assuming we want to get from A to H, only the roads leading in the desired direction are included in the graph. The numbers on the arcs are the preference likelihoods of the corresponding roads.

For the computation we need a table where we can represent

- for each town
  - the roads leading from it,
  - the accumulated highest likelihood of the town being visited, and
  - the last town visited on the way there, and
- for each departing road
  - its destination and
  - its likelihood of being chosen.

town │ last stop on most  │ accum. likelihood │ departing roads
     │ likely path here   │ of being visited  │ destination : likelihood
A    │ -                  │ 1.0               │ B : 0.3 │ C : 0.4 │ D : 0.3
B    │ -                  │ 0.0               │ E : 0.6 │ F : 0.3 │ G : 0.1
C    │ -                  │ 0.0               │ E : 0.3 │ F : 0.5 │ G : 0.2
D    │ -                  │ 0.0               │ E : 0.1 │ F : 0.7 │ G : 0.2
E    │ -                  │ 0.0               │ H : 1
F    │ -                  │ 0.0               │ H : 1
G    │ -                  │ 0.0               │ H : 1
H    │ -                  │ 0.0               │

Figure 6. The initial values in the table for computing the path most likely to be chosen traveling from town A

to town H.


The most likely path can be computed dynamically by running through the towns and the roads departing from

them, as shown in Figure 7.

- For each town t_i:
  - For each departing road r_j and its destination town t_k:
    - If the likelihood of getting to t_i times the likelihood of r_j being chosen is greater than the previously computed likelihood of getting to t_k, then
      - store the higher number in row k of the likelihood column and
      - store i in row k of the backtracking column.

Figure 7. Dynamic programming algorithm for finding most likely path.
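The steps above can be sketched in Java as follows. This is an illustration under my own assumptions rather than the author's implementation: the class name MostLikelyPathDemo, the adjacency matrix ROADS and the method names are hypothetical, and the road likelihoods are copied from the initial table in Figure 6. The sketch relies on the towns being listed so that every road leads from an earlier town to a later one, as they are in that table.

```java
import java.util.Arrays;

class MostLikelyPathDemo {

    static final String TOWNS = "ABCDEFGH";

    // ROADS[i][k] is the preference likelihood of the road from town i to town k;
    // 0 means there is no such road. The values are those of Figure 6.
    static final double[][] ROADS = new double[8][8];
    static {
        ROADS[0][1] = 0.3; ROADS[0][2] = 0.4; ROADS[0][3] = 0.3; // from A
        ROADS[1][4] = 0.6; ROADS[1][5] = 0.3; ROADS[1][6] = 0.1; // from B
        ROADS[2][4] = 0.3; ROADS[2][5] = 0.5; ROADS[2][6] = 0.2; // from C
        ROADS[3][4] = 0.1; ROADS[3][5] = 0.7; ROADS[3][6] = 0.2; // from D
        ROADS[4][7] = 1.0; ROADS[5][7] = 1.0; ROADS[6][7] = 1.0; // from E, F, G
    }

    static double[] likelihood = new double[8]; // accumulated highest likelihood per town
    static int[] backtrack = new int[8];        // last town on the most likely path, -1 = none

    static void compute() {
        Arrays.fill(likelihood, 0.0);
        Arrays.fill(backtrack, -1);
        likelihood[0] = 1.0; // the journey starts in A
        for (int i = 0; i < 8; i++) {
            for (int k = 0; k < 8; k++) {
                double candidate = likelihood[i] * ROADS[i][k];
                if (candidate > likelihood[k]) { // a better way to reach town k
                    likelihood[k] = candidate;
                    backtrack[k] = i;
                }
            }
        }
    }

    // Follows the backtracking column from town k back to the start.
    static String pathTo(int k) {
        StringBuilder path = new StringBuilder();
        for (int v = k; v != -1; v = backtrack[v]) path.append(TOWNS.charAt(v));
        return path.reverse().toString();
    }

    public static void main(String[] args) {
        compute();
        System.out.println(pathTo(7) + " : " + likelihood[7]);
    }
}
```

A full matrix is used here only to keep the sketch short; a list of departing roads per town, as in the table, would avoid the 64 inner iterations for a graph with 15 roads.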

i │ v_i │ dest. k of road j : accum. max. l.hood of visit : coming from │ most likely path : accum. l.hood
0 │ A   │ B: 0.3 : A  │ C: 0.4 : A  │ D: 0.3 : A                      │ AC : 0.4
1 │ B   │ E: 0.18 : B │ F: 0.09 : B │ G: 0.03 : B                     │ ABE : 0.18
2 │ C   │ E: 0.12 : B │ F: 0.20 : C │ G: 0.08 : C                     │ ACF : 0.20
3 │ D   │ E: 0.03 : B │ F: 0.21 : D │ G: 0.06 : C                     │ ADF : 0.21
4 │ E   │ H: 0.18 : E                                                 │ ABEH : 0.18
5 │ F   │ H: 0.21 : F                                                 │ ACFH : 0.20
6 │ G   │ H: 0.08 : G                                                 │ ADFH : 0.21
7 │ H   │                                                             │

Figure 8. The successive updates for each town of the accumulated maximum likelihood of being visited and the immediate prior visitee giving that likelihood.

We see from Figure 8 how towns fall in and out of the most likely path according to the accumulated likelihoods for each new round. Figure 9 shows the final results.

  │ town │ backtrack to │ accum. l.hood │ destination : likelihood
0 │ A    │ -            │ 1.0           │ B : 0.3 │ C : 0.4 │ D : 0.3
1 │ B    │ 0            │ 0.3           │ E : 0.6 │ F : 0.3 │ G : 0.1
2 │ C    │ 0            │ 0.4           │ E : 0.3 │ F : 0.5 │ G : 0.2
3 │ D    │ 0            │ 0.3           │ E : 0.1 │ F : 0.7 │ G : 0.2
4 │ E    │ 1            │ 0.18          │ H : 1
5 │ F    │ 3            │ 0.21          │ H : 1
6 │ G    │ 2            │ 0.08          │ H : 1
7 │ H    │ 5            │ 0.21          │

Figure 9. The final results of computing the most likely path through the graph.

Finding the shortest path

We can easily adapt the above algorithm to the task of finding the shortest path through a directed graph

(a graph where every edge points in only one direction—where there are only one-way edges).

- First we adjust the graph by replacing the relative preference likelihoods by road lengths.

- Next we initialize the cells of the accumulation column to some unreachable maximum.

- Finally we adjust the computation formula so that instead of accumulating a product of road preference

likelihoods we accumulate a sum of road lengths.


A Java implementation of the shortest path finding algorithm is given in Figure 11, and the corresponding table in Figure 12. The table has been simplified to an integer matrix m where

- m[i][i] (the top-left to bottom-right diagonal) is the length of the shortest path from v_0 to v_i,
- m[i][j] (the part of the row to the right of the diagonal) is the distance from v_i to v_j, and
- m[i][0] (the zeroth column) is the last town on the shortest path to v_i.

Figure 10. A distance version of the graph in Figure 5. The numbers on the arcs, representing road lengths, are "inversions" of the preference likelihoods shown in Figure 5. That is, if L_i is the length and P_i is the preference likelihood of road i, then L_i = (1 – P_i) × 10.

    public void calcBestPath()
    {
        for (int i = 0; i < indmax; i++)
            for (int j = i + 1; j <= indmax; j++)
                if (m[i][j] > 0 &&                 // There is a path from i to j.
                    m[i][i] + m[i][j] < m[j][j])   // The path to j via i is better
                {                                  // than any path seen so far.
                    m[j][j] = m[i][i] + m[i][j];   // Update total distance to j.
                    m[j][0] = i;                   // Store last node on
                }                                  // the best way to j.
    }

Figure 11. Dynamic programming algorithm for finding shortest path.

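   ║  A │  B │  C │  D │  E │  F │  G │  H
 A ║  0 │  7 │  6 │  7 │  0 │  0 │  0 │  0
 B ║  A │  7 │  0 │  0 │  4 │  7 │  9 │  0
 C ║  A │    │  6 │  0 │  7 │  5 │  8 │  0
 D ║  A │    │    │  7 │  9 │  3 │  8 │  0
 E ║  B │    │    │    │ 11 │  0 │  0 │ 20
 F ║  D │    │    │    │    │ 10 │  0 │ 20
 G ║  C │    │    │    │    │    │ 14 │ 20
 H ║  F │    │    │    │    │    │    │ 30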

Figure 12. The successive updates for each town of the accumulated shortest path and the immediate prior

visitee along that path. For readability the town indices are substituted by town names.
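To try the method from Figure 11 outside its class, the matrix can be filled with the road lengths that appear to the right of the diagonal in Figure 12. The sketch below makes several assumptions of its own: the class name ShortestPathDemo, the helper initialMatrix, the constant UNREACHED standing in for the "unreachable maximum", and passing the matrix as a parameter instead of using the fields m and indmax.

```java
class ShortestPathDemo {

    // Stand-in for the "unreachable maximum"; halved so that adding a road length cannot overflow.
    static final int UNREACHED = Integer.MAX_VALUE / 2;

    // Builds the matrix before the computation: road lengths above the diagonal,
    // UNREACHED on the diagonal for every town except the start town A (index 0).
    static int[][] initialMatrix() {
        int[][] m = new int[8][8];
        int[][] roads = {              // {from, to, length}, lengths as read from Figure 12
            {0, 1, 7}, {0, 2, 6}, {0, 3, 7},
            {1, 4, 4}, {1, 5, 7}, {1, 6, 9},
            {2, 4, 7}, {2, 5, 5}, {2, 6, 8},
            {3, 4, 9}, {3, 5, 3}, {3, 6, 8},
            {4, 7, 20}, {5, 7, 20}, {6, 7, 20}
        };
        for (int[] r : roads) m[r[0]][r[1]] = r[2];
        for (int j = 1; j < 8; j++) m[j][j] = UNREACHED;
        return m;
    }

    // Same loop as in Figure 11, with the matrix passed in explicitly.
    static void calcBestPath(int[][] m) {
        int indmax = m.length - 1;
        for (int i = 0; i < indmax; i++)
            for (int j = i + 1; j <= indmax; j++)
                if (m[i][j] > 0 && m[i][i] + m[i][j] < m[j][j]) {
                    m[j][j] = m[i][i] + m[i][j]; // total distance to j via i
                    m[j][0] = i;                 // last town on the best way to j
                }
    }

    public static void main(String[] args) {
        int[][] m = initialMatrix();
        calcBestPath(m);
        System.out.println("distance to H: " + m[7][7] + ", reached from town index " + m[7][0]);
    }
}
```

Since a length of 0 is used to mean "no road", a genuinely zero-length road cannot be represented in this matrix; that may be why the roads into H carry a positive length in the table.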


Relating edit minimizing and path finding

Let us see how we may relate the minimum edit distance and the pathfinding algorithms. For that purpose we

will consider a simpler edit distance example than the one above, i.e. that of finding the distance between the

strings "ABCD" and "AFCG".

 D ║  4 │  3 │  4 │  3 │  4
 C ║  3 │  2 │  3 │  2 │  3
 B ║  2 │  1 │  2 │  3 │  4
 A ║  1 │  0 │  1 │  2 │  3
 # ║  0 │  1 │  2 │  3 │  4
   ║  # │  A │  F │  C │  G

Figure 13. Computation of the minimum edit distance between "ABCD" and "AFCG".

We can represent the possible movements through the table in Figure 13 in a graph, with the cost of each move

indicated on the arcs — as shown in Figure 14.

Figure 14. Graph representation of the edit options moving from "ABCD" to "AFCG".

Since there are not more than 3 arcs leaving any node, we can represent the above graph in a 25 × 3 table and compute the shortest path the same way we did above (or in a similar way). Instead of getting a 5 × 5 nested loop where we for each iteration look at 3 numbers, we now get a 25 × 3 nested loop, so the amount of work remains the same, as does the final outcome. Rather than using the isomorphic square table we used for computing the shortest path, we use the polymorphic table from the most likely path computation, minus the backtracking column. The algorithm will then be an adaptation of the one given in Figure 7.


- For each node n_i:
  - For each directed arc a_j and its destination node n_k:
    - If the minimum edit distance to n_i plus the length of a_j is less than the previously computed minimum edit distance to n_k, then
      - store the lesser number in cell k of the minimum edit column.

Figure 16. Dynamic programming algorithm for finding minimum edit path.
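A minimal Java sketch of this adaptation follows. The class name EditGraphDemo, the node numbering (node (i, j) gets index i × columns + j) and the arrays dest and length are assumptions made for illustration. The arcs are generated from the two strings instead of being typed in, and the nodes are visited in row-major order, which guarantees that every node is final before its outgoing arcs are examined.

```java
import java.util.Arrays;

class EditGraphDemo {

    static int minEditPath(String target, String source) {
        String t = "#" + target, s = "#" + source;
        int rows = t.length(), cols = s.length();
        int nodes = rows * cols;

        // Each node has at most three outgoing arcs; -1 marks a missing arc.
        int[][] dest = new int[nodes][3];
        int[][] length = new int[nodes][3];
        for (int[] row : dest) Arrays.fill(row, -1);

        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                int n = i * cols + j;
                if (j + 1 < cols) {                   // delete the next source character
                    dest[n][0] = n + 1;
                    length[n][0] = 1;
                }
                if (i + 1 < rows && j + 1 < cols) {   // substitute (free if the characters match)
                    dest[n][1] = n + cols + 1;
                    length[n][1] = t.charAt(i + 1) == s.charAt(j + 1) ? 0 : 2;
                }
                if (i + 1 < rows) {                   // insert the next target character
                    dest[n][2] = n + cols;
                    length[n][2] = 1;
                }
            }
        }

        int[] dist = new int[nodes];
        Arrays.fill(dist, Integer.MAX_VALUE / 2);     // "unreachable maximum", safe to add to
        dist[0] = 0;
        for (int n = 0; n < nodes; n++) {
            for (int a = 0; a < 3; a++) {
                int k = dest[n][a];
                if (k >= 0 && dist[n] + length[n][a] < dist[k]) {
                    dist[k] = dist[n] + length[n][a]; // a shorter edit path to node k
                }
            }
        }
        return dist[nodes - 1];
    }

    public static void main(String[] args) {
        System.out.println(minEditPath("AFCG", "ABCD")); // prints 4
    }
}
```

For "ABCD" and "AFCG" this visits 25 nodes with up to 3 arcs each, the same amount of work as the 5 × 5 table computation.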

node │ acc. min. ed. dist. │ neighbor : distance
#,#  │ 0                   │ #,A : 1 │ A,A : 0 │ A,# : 1
#,A  │ 1                   │ #,B : 1 │ A,B : 2 │ A,A : 1
#,B  │ 2                   │ #,C : 1 │ A,C : 2 │ A,B : 1
#,C  │ 3                   │ #,D : 1 │ A,D : 2 │ A,C : 1
#,D  │ 4                   │ A,D : 1
A,#  │ 1                   │ A,A : 1 │ F,A : 2 │ F,# : 1
A,A  │ 0                   │ A,B : 1 │ F,B : 2 │ F,A : 1
A,B  │ 1                   │ A,C : 1 │ F,C : 2 │ F,B : 1
A,C  │ 2                   │ A,D : 1 │ F,D : 2 │ F,C : 1
A,D  │ 3                   │ F,D : 1
F,#  │ 2                   │ F,A : 1 │ C,A : 2 │ C,# : 1
F,A  │ 1                   │ F,B : 1 │ C,B : 2 │ C,A : 1
F,B  │ 2                   │ F,C : 1 │ C,C : 0 │ C,B : 1
F,C  │ 3                   │ F,D : 1 │ C,D : 2 │ C,C : 1
F,D  │ 4                   │ C,D : 1
C,#  │ 3                   │ C,A : 1 │ G,A : 2 │ G,# : 1
C,A  │ 2                   │ C,B : 1 │ G,B : 2 │ G,A : 1
C,B  │ 3                   │ C,C : 1 │ G,C : 2 │ G,B : 1
C,C  │ 2                   │ C,D : 1 │ G,D : 2 │ G,C : 1
C,D  │ 3                   │ G,D : 1
G,#  │ 4                   │ G,A : 1
G,A  │ 3                   │ G,B : 1
G,B  │ 4                   │ G,C : 1
G,C  │ 3                   │ G,D : 1
G,D  │ 4                   │

Figure 15. The final results of computing the minimum edit distance through a graph. Each node is named by its target character followed by its source character.

—————————————————————————————————————————————

Ref:

Jurafsky & Martin, Speech and Language Processing, Prentice Hall, 2000
